Open R1: Hugging Face Replicates the Training Process of DeepSeek-R1

General Introduction

Hugging Face's Open R1 is a fully open-source reproduction of DeepSeek-R1. It aims to build the missing pieces of the R1 pipeline so that everyone can reproduce it and build on top of it. The project is simple by design and consists mainly of scripts for training and evaluating models and for generating synthetic data. Its goal is to demonstrate the complete R1 pipeline through multi-stage training, going from a base model to a reinforcement-learning (RL) tuned model. The project includes detailed installation and usage instructions and welcomes community contributions and collaboration.

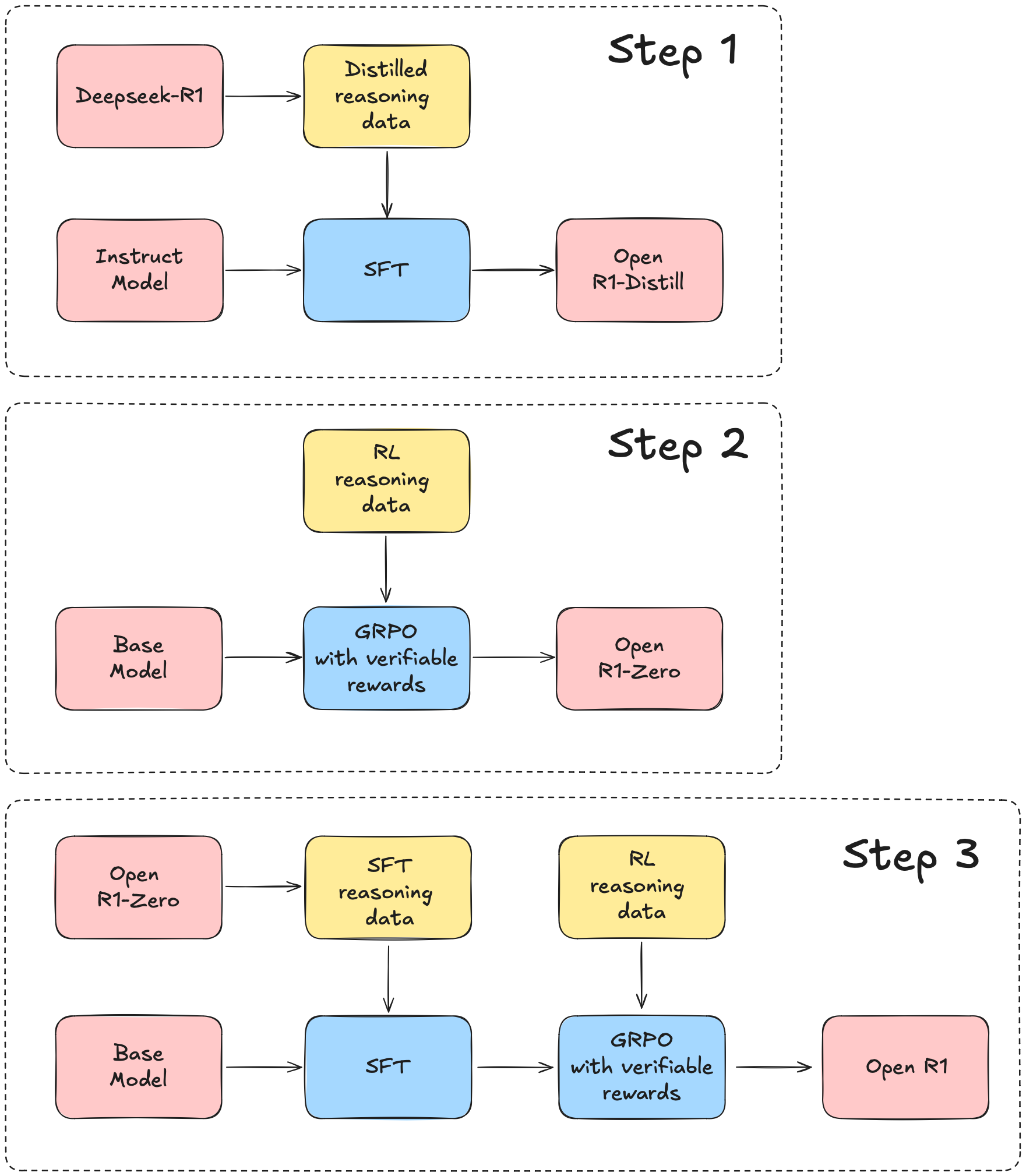

We will use the DeepSeek-R1 technical report as a guide. The work can be broadly broken down into three main steps:

Step 1: Replicate the R1-Distill models by distilling a high-quality corpus from DeepSeek-R1.

Step 2: Replicate the pure reinforcement learning (RL) pipeline that DeepSeek used to create R1-Zero. This will likely require curating new large-scale datasets for math, reasoning, and code.

Step 3: Demonstrate that we can transition from a base model to an RL-tuned model through multi-stage training.
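Step 2 depends on rewards that can be computed by simple rules instead of a learned reward model; the DeepSeek-R1 report describes an accuracy reward and a format reward for R1-Zero. The following is a minimal sketch, assuming completions follow an R1-Zero-style `<think>...</think><answer>...</answer>` template and that answers are compared by exact string match after whitespace normalization. The function names are hypothetical and are not Open R1's actual reward functions.

```python
import re

# Assumed R1-Zero-style template: reasoning in <think>, final answer in <answer>.
FORMAT_PATTERN = re.compile(r"^<think>.*?</think>\s*<answer>.*?</answer>$", re.DOTALL)
ANSWER_PATTERN = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


def format_reward(completion: str) -> float:
    """1.0 if the completion follows the assumed think/answer template, else 0.0."""
    return 1.0 if FORMAT_PATTERN.match(completion.strip()) else 0.0


def accuracy_reward(completion: str, reference: str) -> float:
    """1.0 if the extracted answer equals the reference after collapsing whitespace."""
    match = ANSWER_PATTERN.search(completion)
    if match is None:
        return 0.0
    predicted = " ".join(match.group(1).split())
    expected = " ".join(reference.split())
    return 1.0 if predicted == expected else 0.0


def total_reward(completion: str, reference: str) -> float:
    """Hypothetical combination: unweighted sum of the two rule-based rewards."""
    return accuracy_reward(completion, reference) + format_reward(completion)


if __name__ == "__main__":
    good = "<think>2 + 2 is 4.</think><answer>4</answer>"
    bad = "The answer is 4."
    print(total_reward(good, "4"))  # 2.0
    print(total_reward(bad, "4"))   # 0.0
```

Exact matching is a deliberate simplification; math benchmarks usually need a more tolerant answer checker, for example one that parses LaTeX expressions.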

Function List

- Model training: Provides scripts for training models with GRPO and with SFT.

- Model evaluation: Provides scripts for evaluating model performance on the R1 benchmarks.

- Data generation: Provides scripts for generating synthetic data with Distilabel.

- Multi-stage training: Demonstrates a multi-stage training process that goes from a base model to an RL-tuned model.

- Community contributions: Community members are encouraged to contribute datasets and model improvements.
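GRPO (Group Relative Policy Optimization), introduced in the DeepSeekMath paper and used for DeepSeek-R1, drops the separate value model of PPO and instead scores each sampled completion against the other completions for the same prompt. The sketch below shows only that group-relative advantage step, assuming population standard deviation and a small hypothetical `eps` to avoid division by zero; the policy loss, clipping, and KL penalty are omitted, and this is not the code in `grpo.py`.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards: list[float], eps: float = 1e-4) -> list[float]:
    """Normalize rewards within one group of completions sampled for the same prompt.

    Each advantage is (reward - group mean) / (group std + eps). Population std
    and the eps value are assumptions made for this sketch.
    """
    if not rewards:
        return []
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def batch_advantages(grouped_rewards: list[list[float]]) -> list[list[float]]:
    """Apply the normalization independently to each prompt's group."""
    return [group_relative_advantages(group) for group in grouped_rewards]


if __name__ == "__main__":
    # Four completions for one prompt: two correct (reward 1.0), two wrong (0.0).
    print(group_relative_advantages([1.0, 1.0, 0.0, 0.0]))
    # All completions equally good: every advantage is zero.
    print(group_relative_advantages([1.0, 1.0, 1.0]))
```

When every completion in a group receives the same reward, all advantages are zero, so that prompt contributes no learning signal in this step.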

How to Use

Installation process

- Creating a Python virtual environment:

conda create -n openr1 python=3.11

conda activate openr1

- Installing vLLM:

pip install vllm==0.6.6.post1

This also installs PyTorch v2.5.1. Keep this exact version, because the vLLM binaries are compiled for it.

- Installing project dependencies:

pip install -e ".[dev]"

- Logging in to your Hugging Face and Weights & Biases accounts:

huggingface-cli login

wandb login

- Installing Git LFS:

sudo apt-get install git-lfs

Guidelines for use

- Training models:

- Train a model with GRPO:

python src/open_r1/grpo.py --dataset <dataset_path>

- Train a model with SFT:

python src/open_r1/sft.py --dataset <dataset_path>

- Evaluating models:

python src/open_r1/evaluate.py --model <model_path> --benchmark <benchmark_name>

- Generating synthetic data:

python src/open_r1/generate.py --model <model_path> --output <output_path>

- Multi-stage training:

- Step 1: Replicate the R1-Distill model:

python src/open_r1/distill.py --corpus <corpus_path>

- Step 2: Replicate the pure RL pipeline:

python src/open_r1/rl_pipeline.py --dataset <dataset_path>

- Step 3: Go from a base model to an RL-tuned model:

python src/open_r1/multi_stage_training.py --model <model_path>

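Benchmark scores for reasoning models are often computed from several sampled answers per problem. The DeepSeek-R1 report uses pass@1 averaged over k samples per question; the sketch below computes that, together with the unbiased pass@k estimator from the Codex paper (Chen et al., 2021). It assumes each sampled answer has already been judged correct or incorrect, and it is not the implementation behind `evaluate.py`.

```python
from math import comb


def pass_at_1(samples: list[list[bool]]) -> float:
    """Mean over problems of the fraction of correct sampled answers.

    samples[i] holds the correctness of each of the k answers sampled for problem i.
    """
    per_problem = [sum(s) / len(s) for s in samples if s]
    return sum(per_problem) / len(per_problem) if per_problem else 0.0


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased estimate of pass@k from n samples, of which c are correct."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # 1 minus the probability that a random size-k subset has no correct sample.
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    # Two problems, four samples each: 3/4 and 1/4 correct -> pass@1 = 0.5.
    results = [[True, True, True, False], [False, True, False, False]]
    print(pass_at_1(results))
    print(pass_at_k(n=4, c=1, k=2))
```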

Contribution Guidelines

- Forking the project: fork the project to your own GitHub account.

- Cloning the project:

git clone https://github.com/<your_username>/open-r1.git

- Creating a new branch:

git checkout -b new-feature

- Submitting changes:

git add .

git commit -m "Add new feature"

git push origin new-feature

- Creating a Pull Request: Submit a Pull Request on GitHub describing the changes made.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
