Open-o3 Video - A Video Reasoning Model Open-Sourced by Peking University United Bytes

What is Open-o3 Video

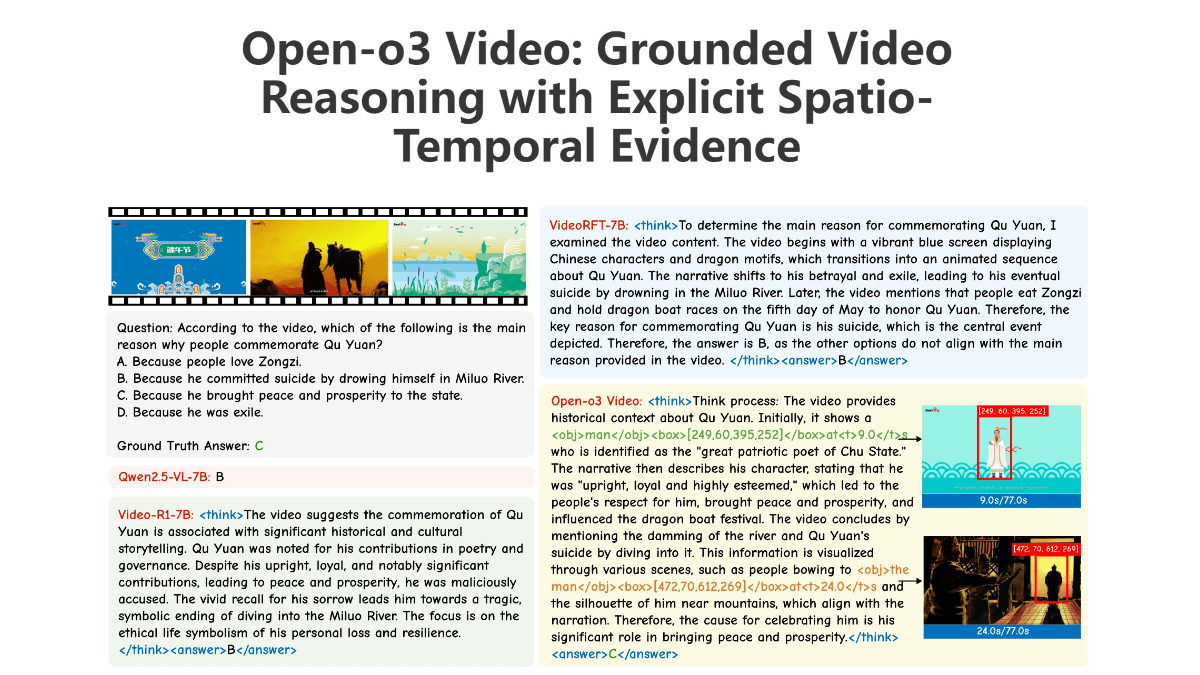

Open-o3 Video is an open source video inference model jointly developed by Peking University and ByteDance, focusing on enhancing video inference through temporal and spatial evidence. By explicitly labeling key evidence with timestamps and bounding boxes, it helps the model better understand and interpret video content. The model is trained using a two-phase training strategy, starting with a cold-start via supervised fine-tuning (SFT), and then incorporating reinforcement learning (RL) optimization to ensure answer accuracy and spatio-temporal alignment. The team also created high-quality datasets STGR-CoT-30k and STGR-RL-36k, which provide rich spatio-temporal supervised signals for model training.

Features of Open-o3 Video

- Temporal evidence enhances reasoning: Open-o3 Video incorporates temporal and spatial evidence into the reasoning process by explicitly labeling key timestamps and bounding boxes to enhance the accuracy and interpretability of video understanding.

- High quality dataset support: The team constructed two high-quality datasets, STGR-CoT-30k and STGR-RL-36k, to provide rich spatio-temporal supervised signals for model training and ensure the improvement of inference capability.

- Two-stage training strategy: A combination of supervised fine-tuning (SFT) and reinforcement learning (RL) training is used to optimize the model's inference accuracy, temporal alignment, and spatial precision through multiple reward mechanisms.

- Excellent performance: In the V-STAR benchmark test, Open-o3 Video significantly outperforms other models, with mAM and mLGM metrics reaching 35.5% and 49.0%, respectively, demonstrating strong video inference capabilities.

- Open Source and Ease of Use: The code and model have been open-sourced on GitHub and Hugging Face to facilitate the use and further development by researchers and developers, and to promote the wide application of video understanding technology.

Core Benefits of Open-o3 Video

- Spatio-temporal evidence integration: The model explicitly labels key timestamps and bounding boxes during the inference process, tightly combining temporal and spatial information with inference paths, significantly improving the accuracy and interpretability of video inference.

- Driven by high quality datasets: The development team constructed two high-quality datasets (STGR-CoT-30k and STGR-RL-36k) to provide uniform spatio-temporal supervised signals, which provide a solid data base for model training and ensure the performance of the model in complex scenarios.

- Two-stage optimized training: A training strategy combining supervised fine-tuning (SFT) and reinforcement learning (RL) is used to optimize the model's inference accuracy, temporal alignment, and spatial accuracy through a variety of rewarding mechanisms to comprehensively improve the model's performance.

- Excellent performance: In the V-STAR benchmark, Open-o3 Video significantly outperforms other similar models in key metrics (e.g., mAM and mLGM), demonstrating its strong competitiveness in video inference.

- Multi-modal fusion capability: Based on powerful multimodal base models (e.g., Qwen3-VL-8B), Open-o3 Video is able to efficiently process text, image, and temporal information in videos for more accurate reasoning and interpretation.

What is Open-o3 Video's official website?

- Project website:: https://marinero4972.github.io/projects/Open-o3-Video/

- Github repository:: https://github.com/marinero4972/Open-o3-Video

- HuggingFace Model Library:: https://huggingface.co/marinero4972/Open-o3-Video/tree/main

- arXiv Technical Paper:: https://arxiv.org/pdf/2510.20579

Who Open-o3 Video is for

- Artificial intelligence researchers: Researchers focusing on video understanding, multimodal learning, and natural language processing can use the model for cutting-edge research and algorithm optimization.

- Computer Vision Engineer: Engineers working in video analytics, target detection, and video content generation can leverage models to improve project performance and development efficiency.

- data scientist: Data scientists who need to process and analyze large-scale video data can use the model to obtain more accurate video inference results.

- teachers and students of higher education: Faculty and students in computer science and artificial intelligence-related programs can use it as a teaching and research tool to explore the latest technologies in the field of video understanding.

- Corporate Technical Team: Enterprise technology teams in the fields of video content creation, intelligent security, and automated driving can apply the model to actual business scenarios to enhance product competitiveness.

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related posts

No comments...