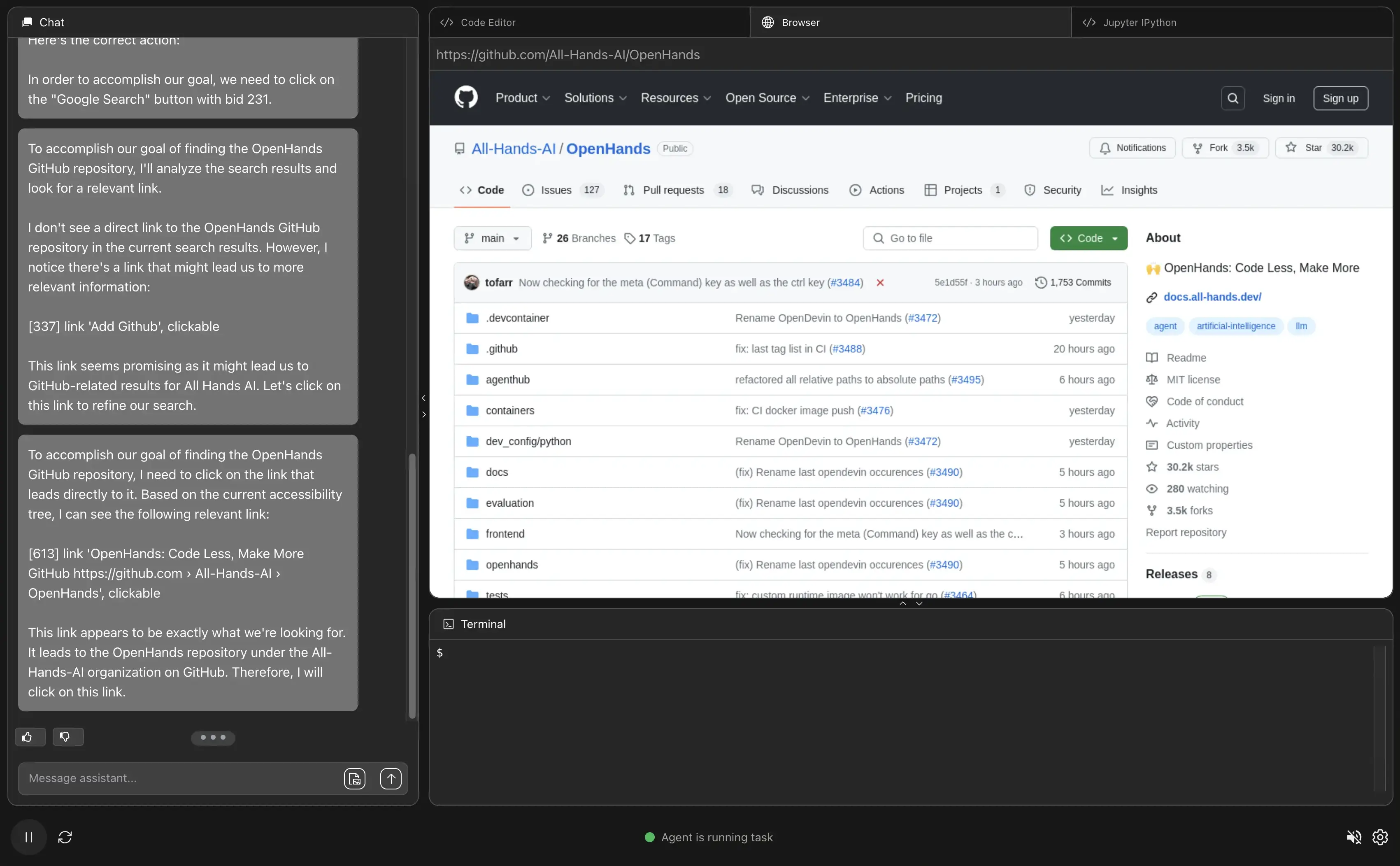

Open-LLM-VTuber: Live2D animated AI virtual companion for real-time voice interaction

General Introduction

Open-LLM-VTuber is an open-source project that lets users talk to Large Language Models (LLMs) by voice or text, with Live2D technology rendering an animated virtual character. It supports Windows, macOS and Linux, can run completely offline, and offers both a web client and a desktop client. Users can set it up as a virtual girlfriend, a pet, or a desktop assistant, building a personalized AI companion by customizing its appearance, personality, and voice. The project began as an attempt to recreate the closed-source AI VTuber "Neuro-sama" and has grown into a feature-rich platform that supports multiple language models, speech recognition, text-to-speech, and visual perception. The codebase was refactored for v1.0.0 and is under active development, with more features planned.

Feature List

- Voice interaction: Supports hands-free voice conversation, and users can interrupt the AI at any time for a smooth exchange.

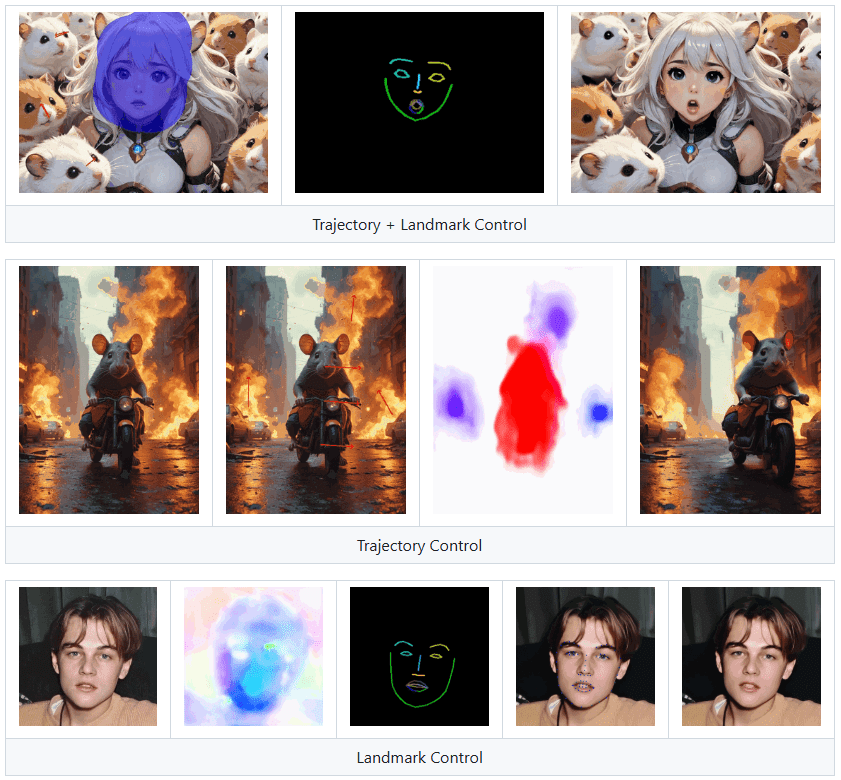

- Live2D animation: Built-in animated avatars that show expressions and motions based on the content of the conversation.

- Cross-platform support: Works on Windows, macOS and Linux, and runs on NVIDIA GPUs, non-NVIDIA GPUs, or the CPU alone.

- Offline operation: All features can run without a network connection, keeping your data private and secure.

- Desktop pet mode: Supports a transparent background, always-on-top display and mouse click-through, and the character can be dragged to any position on the screen.

- Visual perception: Interact with the AI by video through the camera, or let it recognize what is on your screen.

- Multi-model support: Compatible with a wide range of LLMs, such as Ollama, OpenAI, Claude and Mistral, and with speech modules such as sherpa-onnx and Whisper.

- Character customization: Import your own Live2D models and adjust the character's personality and voice.

- Touch feedback: Click or drag the character to trigger an interactive response.

- Chat history: Switch between past conversations while keeping their content.

Usage Guide

Installation process

Open-LLM-VTuber is deployed locally. The detailed steps are as follows:

1. Prerequisites

- Hardware: A Windows, macOS or Linux computer. An NVIDIA GPU is recommended, but the project can run without a GPU.

- Software: Git, Python 3.10+ and uv (the recommended package manager).

- Network: The initial deployment needs Internet access to download dependencies; users in China are advised to use a proxy to speed up downloads.
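The software prerequisites above can be checked before installing anything. The following is a minimal sketch, not part of Open-LLM-VTuber: `check_prerequisites` is a hypothetical helper that only checks the running Python version and whether `git` and `uv` are on the PATH. It does not inspect GPUs or drivers.

```python
import shutil
import sys


def check_prerequisites(min_python=(3, 10), tools=("git", "uv")):
    """Return a list of human-readable problems; an empty list means all checks passed.

    Hypothetical helper: it only checks the Python version and whether the
    listed command-line tools can be found on PATH.
    """
    problems = []
    if sys.version_info[:2] < min_python:
        found = ".".join(map(str, sys.version_info[:3]))
        needed = ".".join(map(str, min_python))
        problems.append(f"Python {needed}+ required, found {found}")
    for tool in tools:
        # shutil.which returns None when the executable is not on PATH
        if shutil.which(tool) is None:
            problems.append(f"'{tool}' not found on PATH")
    return problems


if __name__ == "__main__":
    issues = check_prerequisites()
    for issue in issues:
        print("missing:", issue)
    print("OK" if not issues else f"{len(issues)} problem(s) found")
```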

2. Download the code

- Clone the project through the terminal:

```bash
git clone https://github.com/Open-LLM-VTuber/Open-LLM-VTuber --recursive
cd Open-LLM-VTuber
```

- Or download the latest ZIP file from the GitHub Releases page and unzip it.

- Note: If you clone without `--recursive`, run `git submodule update --init` to fetch the front-end submodule.
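To confirm whether the front-end submodule was fetched, a small script can check the submodule directory and, if it is empty, run the command from the note above. This sketch assumes the submodule lives in a `frontend` directory at the repository root (the directory that holds `frontend/live2d_models` later in this guide); `frontend_submodule_missing` and `init_submodules` are hypothetical helpers.

```python
import subprocess
from pathlib import Path


def frontend_submodule_missing(repo_root, frontend_dir="frontend"):
    """Return True if the front-end submodule directory is absent or empty.

    Assumption: the submodule lives in a directory named "frontend" at the
    repository root; adjust frontend_dir if your checkout differs.
    """
    path = Path(repo_root) / frontend_dir
    return not path.is_dir() or not any(path.iterdir())


def init_submodules(repo_root):
    """Run the command from the note above: git submodule update --init."""
    subprocess.run(["git", "submodule", "update", "--init"], cwd=repo_root, check=True)


if __name__ == "__main__":
    root = Path("Open-LLM-VTuber")
    # Only act if the clone exists and its front-end directory is empty
    if root.is_dir() and frontend_submodule_missing(root):
        init_submodules(root)
```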

3. Install dependencies

- Install uv:

- Windows (PowerShell):

```powershell
irm https://astral.sh/uv/install.ps1 | iex
```

- macOS/Linux:

```bash
curl -LsSf https://astral.sh/uv/install.sh | sh
```


- Run in the project directory:

```bash
uv sync
```

This automatically installs FastAPI, onnxruntime and other dependencies.
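After `uv sync`, one way to confirm that key packages are installed is to try to locate them from the project's environment, for example by running a script with `uv run python check_deps.py`. The file name `check_deps.py` and the helper `missing_modules` are hypothetical, and only `fastapi` and `onnxruntime` are checked because those are the two dependencies named above.

```python
import importlib.util


def missing_modules(names=("fastapi", "onnxruntime")):
    """Return the names of modules that cannot be found in this environment.

    importlib.util.find_spec returns None for a top-level module that is not installed.
    """
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    print("all dependencies found" if not missing else f"missing: {', '.join(missing)}")
```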

4. Configure the environment

- The first run generates the configuration file:

```bash
uv run run_server.py
```

- Edit the generated `conf.yaml` and configure the following:

- LLM: Select the model (e.g. Ollama with llama3, or the OpenAI API, which requires an API key).

- ASR: The speech recognition module (e.g. sherpa-onnx).

- TTS: The text-to-speech module (e.g. Edge TTS).

- Example (a simplified illustration; the key names in the generated `conf.yaml` may differ):

```yaml
llm:
  provider: ollama
  model: llama3
asr:
  provider: sherpa-onnx
tts:
  provider: edge-tts
```
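Before starting the server, a quick sanity check of the configuration can catch missing fields. This sketch works on the simplified layout from the example, already parsed into a Python dict (for instance with PyYAML's `yaml.safe_load`). `validate_config` and the `api_key` field name are assumptions for illustration, not the project's actual schema.

```python
def validate_config(cfg):
    """Check the simplified config layout and return a list of problems.

    Assumptions (not the project's actual schema): each of the llm, asr and tts
    sections has a "provider" key, and an OpenAI LLM needs an "api_key" entry.
    """
    problems = []
    for section in ("llm", "asr", "tts"):
        block = cfg.get(section)
        if not isinstance(block, dict) or not block.get("provider"):
            problems.append(f"{section}: 'provider' is not set")
    llm = cfg.get("llm") or {}
    # The OpenAI API needs a key, as noted in the LLM bullet above
    if isinstance(llm, dict) and llm.get("provider") == "openai" and not llm.get("api_key"):
        problems.append("llm: the OpenAI API requires an api_key")
    return problems


if __name__ == "__main__":
    # With PyYAML installed, cfg could come from yaml.safe_load(open("conf.yaml")).
    example = {
        "llm": {"provider": "ollama", "model": "llama3"},
        "asr": {"provider": "sherpa-onnx"},
        "tts": {"provider": "edge-tts"},
    }
    print(validate_config(example) or "config looks complete")
```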

5. Start the service

- Run:

```bash
uv run run_server.py
```

- Visit `http://localhost:8000` to use the web version, or download the desktop client and run it.
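If the page at `http://localhost:8000` does not load, it helps to check whether anything is listening on that port. The sketch below uses only the standard library. `server_is_up` is a hypothetical helper, and the same check applies when the desktop client needs the back-end service running.

```python
import socket


def server_is_up(host="localhost", port=8000, timeout=1.0):
    """Return True if something accepts TCP connections on host:port.

    This only proves that the port is open; it does not confirm that the
    service listening there is Open-LLM-VTuber.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers connection refused and timeouts
        return False


if __name__ == "__main__":
    print("backend reachable" if server_is_up() else "backend not running on localhost:8000")
```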

6. Desktop client (optional)

- Download `open-llm-vtuber-electron` from GitHub Releases (.exe for Windows, .dmg for macOS).

- Launch the client, making sure the back-end service is running, to use desktop pet mode.

7. Update and uninstall

- Update: From v1.0.0 onward, run `uv run update.py` to update. Earlier versions need to be redeployed following the latest documentation.

- Uninstall: Delete the project folder, check the directories pointed to by `MODELSCOPE_CACHE` or `HF_HOME` for downloaded model files, and uninstall tools such as uv.
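Before deleting model files, the cache locations named above can be read from the environment. This sketch only reports `MODELSCOPE_CACHE` and `HF_HOME` when they are set. `model_cache_dirs` is a hypothetical helper, and it deliberately does not guess each library's default path when a variable is unset.

```python
import os
from pathlib import Path


def model_cache_dirs(env=None):
    """Return {variable: path} for MODELSCOPE_CACHE and HF_HOME when they are set.

    When a variable is unset, the corresponding library falls back to its own
    default location, which this sketch does not try to guess.
    """
    env = os.environ if env is None else env
    return {
        name: Path(env[name]).expanduser()
        for name in ("MODELSCOPE_CACHE", "HF_HOME")
        if env.get(name)
    }


if __name__ == "__main__":
    dirs = model_cache_dirs()
    if not dirs:
        print("Neither MODELSCOPE_CACHE nor HF_HOME is set; check each library's default cache location.")
    for name, path in dirs.items():
        status = "exists" if path.exists() else "not found"
        print(f"{name} -> {path} ({status})")
```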

How to Use the Features

Voice interaction

- Enable Voice: Click on the "Microphone" icon on the web page or in the client.

- Conversation: Just speak and the AI responds in real time; press the "Interrupt" button to cut the AI off.

- Tuning: Adjust the ASR and TTS modules in `conf.yaml` to improve recognition and pronunciation.
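Interrupting the AI mid-reply generally means stopping speech playback as soon as the user speaks or presses "Interrupt". The sketch below illustrates that idea with asyncio task cancellation. It is a generic illustration, not Open-LLM-VTuber's actual implementation, and `speak` and `converse_with_interrupt` are hypothetical stand-ins for real TTS playback and event handling.

```python
import asyncio


async def speak(chunks, played, delay=0.01):
    """Hypothetical stand-in for TTS playback: 'plays' one chunk per tick."""
    for chunk in chunks:
        played.append(chunk)
        await asyncio.sleep(delay)


async def converse_with_interrupt(chunks, interrupt_after):
    """Start playback, then cancel it after interrupt_after seconds.

    The sleep stands in for the moment the user starts talking or presses
    "Interrupt"; cancelling the task stops any chunks not yet played.
    """
    played = []
    playback = asyncio.create_task(speak(chunks, played))
    await asyncio.sleep(interrupt_after)
    playback.cancel()
    try:
        await playback
    except asyncio.CancelledError:
        pass  # expected: playback was interrupted
    return played


if __name__ == "__main__":
    result = asyncio.run(converse_with_interrupt(list(range(100)), interrupt_after=0.05))
    print(f"played {len(result)} of 100 chunks before the interruption")
```

Cancelling a task is a convenient fit here because the cancellation lands at the next `await`, so playback stops between chunks without extra flags.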

Character Customization

- Import a model: Place the .moc3 model files in the `frontend/live2d_models` directory.

- Adjust the personality: Edit the `prompt` in `conf.yaml`, for example to describe a "gentle big sister".

- Customize the voice: Record voice samples and use tools such as GPT-SoVITS to generate a unique voice.
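To check that an imported model is where the step above expects it, the `.moc3` files under `frontend/live2d_models` can be listed. `find_live2d_models` is a hypothetical helper. It only looks for `.moc3` files and does not validate the other files that make up a Live2D model.

```python
from pathlib import Path


def find_live2d_models(models_dir="frontend/live2d_models"):
    """Return the .moc3 files under models_dir, relative to it and sorted.

    Uses the directory named in the step above; a missing directory yields [].
    """
    root = Path(models_dir)
    if not root.is_dir():
        return []
    return sorted(p.relative_to(root).as_posix() for p in root.rglob("*.moc3"))


if __name__ == "__main__":
    models = find_live2d_models()
    print("\n".join(models) if models else "no .moc3 files found")
```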

Desktop Pet Mode

- Enable the mode: In the client, select "Desktop Pet" and check "Transparent Background" and "Always on Top".

- Move the character: Drag it to any position on the screen.

- Interaction: Click the character to trigger touch feedback, such as an inner monologue or a change in expression.

Visual perception

- Enable the camera: Click "Video Chat" and grant camera access.

- Screen recognition: Select "Screen Sense" to have the AI analyze the screen content.

- Example: Ask "What's on the screen?" and the AI will describe the image.
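Asking a vision-capable LLM about the screen generally means sending the question together with an image. The sketch below packages a question and an image as one chat message in the OpenAI-style format, with the image embedded as a base64 data URL. Whether Open-LLM-VTuber uses this exact format internally is an assumption, and `build_vision_message` is a hypothetical helper that makes no network call.

```python
import base64


def build_vision_message(question, image_bytes, mime="image/png"):
    """Package a question and an image as one OpenAI-style chat message.

    The image is embedded as a base64 data URL, a format accepted by
    OpenAI-compatible chat APIs that support image input.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{encoded}"}},
        ],
    }


if __name__ == "__main__":
    fake_png = b"\x89PNG\r\n\x1a\n"  # only the PNG signature, not a real screenshot
    msg = build_vision_message("What's on the screen?", fake_png)
    print(msg["content"][1]["image_url"]["url"][:40])
```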

Notes

- Browser: Chrome is recommended; other browsers may not display Live2D correctly.

- Performance: GPU acceleration requires correctly configured drivers; running on the CPU alone may be slower.

- License: The bundled Live2D sample models are covered by a separate license, and commercial use requires contacting Live2D Inc.

© Copyright notice

The copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
