OneCAT - Open source multimodal modeling by Meituan and Shanghai Jiaotong University

What is OneCAT

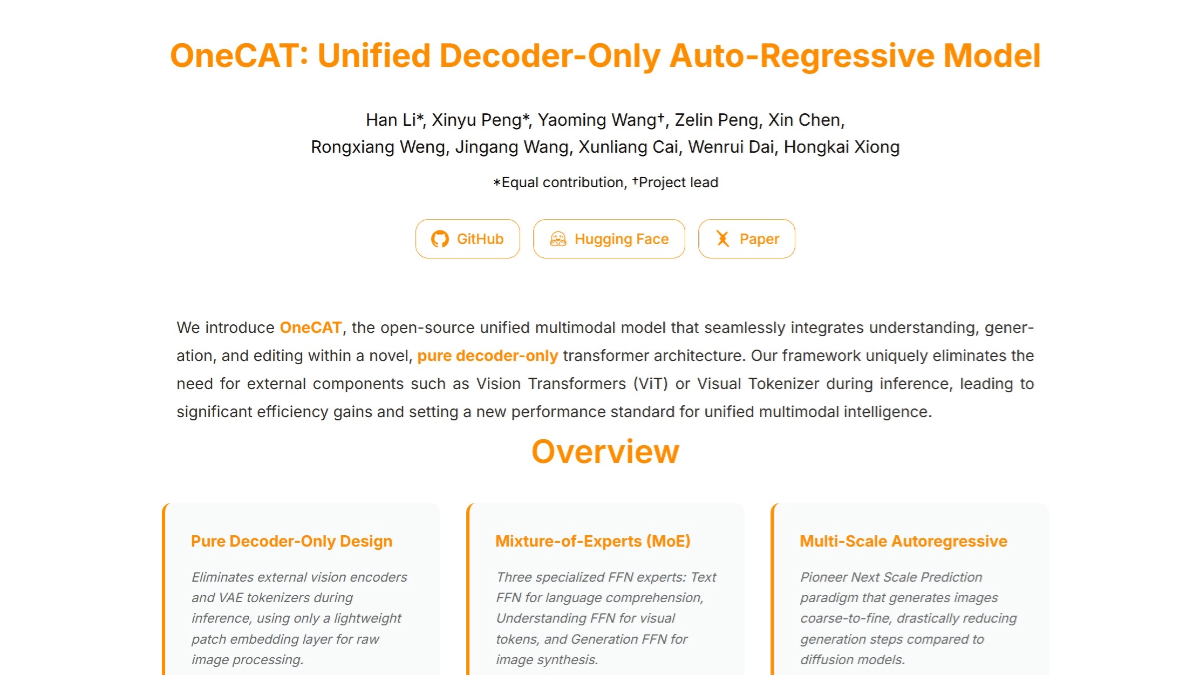

OneCAT is a new unified multimodal model launched by Meituan in collaboration with Shanghai Jiaotong University, which adopts a pure decoder architecture and can seamlessly integrate multimodal comprehension, text-to-image generation and image editing functions. The model abandons the design of traditional multimodal models that rely on external visual coders and disambiguators, and realizes efficient multimodal processing through a modality-specific Mixed of Experts (MoE) architecture and a multiscale autoregressive mechanism.OneCAT's core strengths lie in its concise architecture and significantly improved inference efficiency, especially when dealing with high-resolution image inputs and outputs. It further enhances visual generation capabilities and cross-modal alignment through innovative scale-aware adapters and multimodal multifunctional attention mechanisms. OneCAT has demonstrated excellent performance in multiple benchmark tests for multimodal understanding, text-to-image generation and image editing, setting a new standard for the development of unified multimodal intelligence.

Features of OneCAT

- Efficient multimodal processingThe pure decoder architecture, which eliminates the need for external visual coders or word splitters, significantly simplifies the model structure and reduces the computational overhead, especially when dealing with high-resolution inputs.

- Powerful generative capabilitiesThe autoregressive multi-scale mechanism can generate high-quality images step by step in a coarse-to-fine manner, which is suitable for text-to-image generation and image editing tasks, and produces excellent generation results.

- Flexible image editing: Supports command-based image editing with precise local and global adjustments to images based on user commands, enabling powerful conditional generation capabilities without additional architectural modifications.

- Cross-modal alignment capability: Enhanced alignment capabilities between different modalities and improved model performance in multimodal tasks through modality-specific Mixing of Experts (MoE) structures and shared QKV and attention layers.

- Dynamic Resolution Support: Native support for dynamic resolution, able to adapt to different sizes of inputs, improves the flexibility and applicability of the model.

Core Benefits of OneCAT

- Simple and efficient architecture: Adopting a pure decoder architecture without the need for an external visual encoder or disambiguator significantly simplifies the model structure and reduces the computational overhead, especially when dealing with high-resolution inputs, with a significant increase in inference efficiency.

- Strong multimodal fusion capability: Through the modality-specific Mixing of Experts (MoE) structure, it can seamlessly process text, images and other multimodal data to realize efficient multimodal comprehension, generation and editing functions, which enhances the depth and efficiency of cross-modal information fusion.

- Excellent generation performance: The innovative introduction of a multi-scale visual autoregressive mechanism to generate images incrementally in a coarse-to-fine manner drastically reduces the decoding steps while maintaining high-quality visual outputs, and demonstrates robust performance in text-to-image generation and image editing tasks.

- Strong command adherence: Demonstrates excellent command adherence in multimodal generation and editing tasks, accurately understands and executes user commands, and generates compliant image content that enhances the user experience.

- Dynamic Resolution Support: Native support for dynamic resolution, able to adapt to different sizes of inputs, improves the flexibility and applicability of the model to a wide range of application scenarios.

What is OneCAT's official website?

- Project website:: https://onecat-ai.github.io/

- Github repository:: https://github.com/onecat-ai/onecat

- HuggingFace Model Library:: https://huggingface.co/onecat-ai/OneCAT-3B

- arXiv Technical Paper:: https://arxiv.org/pdf/2509.03498

Who OneCAT is for

- Artificial intelligence researchers: OneCAT, as a novel multimodal model, provides researchers with new research directions and experimental platforms that can be used to explore cutting-edge technologies for multimodal understanding, generation and editing.

- Data scientists and engineers: In projects that need to deal with multimodal data, OneCAT can help them quickly realize the functions of text-to-image generation, image editing and other functions to improve development efficiency.

- Creative designers and artists: OneCAT generates high-quality images based on text descriptions, providing inspiration and material for creative design and artwork, helping them to quickly realize creative ideas.

- educator: In the field of education, OneCAT can generate images related to the teaching content to help students better understand and memorize the knowledge and enrich the teaching resources.

- Content creators and media practitioners: OneCAT can be used to generate and edit image content to assist in the creation of advertisements, videos, social media content and more, improving the efficiency and quality of content creation.

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related articles

No comments...