One Hub: managing and distributing OpenAI interfaces, supporting multiple models and statistical functions

General Introduction

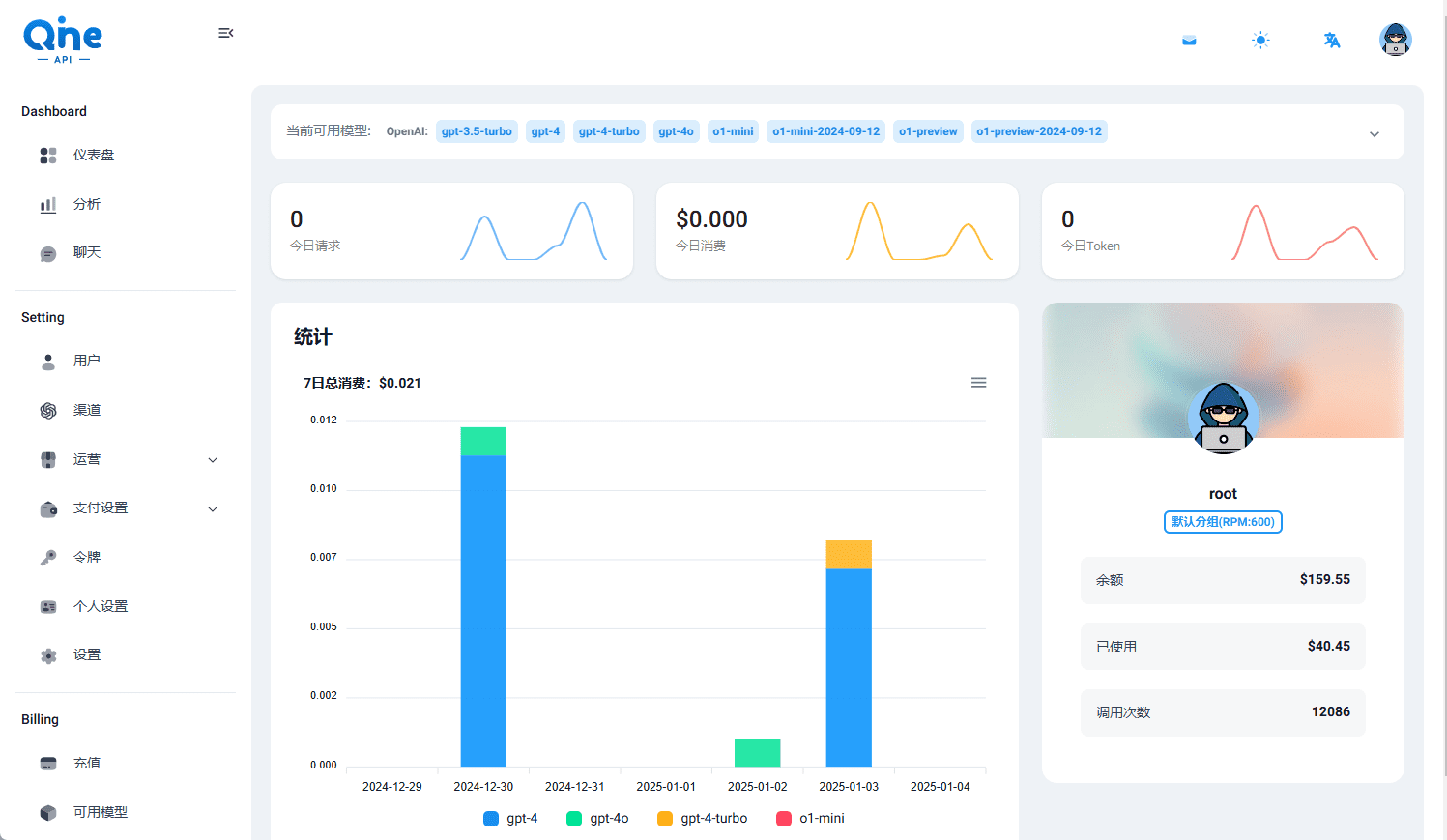

One Hub is an OpenAI interface management and distribution system built as a secondary development of One API. Developed by MartialBE for broader model support and richer statistics, it adds a redesigned user interface with a new user dashboard and administrator statistics interface, along with a refactored transit provider module. It supports function calling for a wide range of models, including non-OpenAI models, and offers extensive configuration options and monitoring capabilities. One Hub is aimed at developers and organizations that need to manage and distribute OpenAI interfaces, helping them use and monitor many AI models more efficiently.

Feature List

- Supports function calling for a wide range of OpenAI and non-OpenAI models

- New user dashboard and administrator statistics interface

- Refactored transit provider module that dynamically returns the list of models available to each user

- Support for emulating the TTS (text-to-speech) feature with Azure Speech

- Support for configuring a separate http/socks5 proxy

- Support for custom speed-test models, with request elapsed time recorded in the logs

- Telegram bot support and per-use model billing

- Support for model wildcards and for starting the program from a configuration file

- Supports Prometheus monitoring

- Support for payments and per-user-group RPM (requests per minute) configuration

How to Use

Installation process

- Clone the project code:

git clone https://github.com/MartialBE/one-hub.git

- Go to the project directory:

cd one-hub

- Configure environment variables and dependencies:

cp config.example.yaml config.yaml

Edit the configuration items in config.yaml as needed.

- Start with Docker:

docker-compose up -d

Or start it directly in a local Go environment:

go run main.go

Using the Features

User Dashboard

The user dashboard provides an intuitive interface where users can view and manage their API calls. The dashboard allows you to monitor API usage in real-time and view detailed call logs and statistics.

Administrator statistics interface

The administrator statistics interface gives administrators a comprehensive data analysis tool. Administrators can view API calls for all users, generate detailed statistical reports, and make optimizations and adjustments based on the data.
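To illustrate the kind of aggregation behind a per-user statistics view, here is a small Go sketch that totals calls and tokens per user. The `LogEntry` record and the sample data are hypothetical; One Hub's actual log schema and reporting logic are not described in this article.

```go
package main

import (
	"fmt"
	"sort"
)

// LogEntry is a hypothetical, simplified call-log record.
type LogEntry struct {
	User   string
	Model  string
	Tokens int
}

// UserStats holds per-user totals.
type UserStats struct {
	User   string
	Calls  int
	Tokens int
}

// aggregateByUser sums calls and tokens per user and sorts the result by
// token usage (descending), breaking ties by user name.
func aggregateByUser(logs []LogEntry) []UserStats {
	totals := map[string]*UserStats{}
	for _, e := range logs {
		s, ok := totals[e.User]
		if !ok {
			s = &UserStats{User: e.User}
			totals[e.User] = s
		}
		s.Calls++
		s.Tokens += e.Tokens
	}
	out := make([]UserStats, 0, len(totals))
	for _, s := range totals {
		out = append(out, *s)
	}
	sort.Slice(out, func(i, j int) bool {
		if out[i].Tokens != out[j].Tokens {
			return out[i].Tokens > out[j].Tokens
		}
		return out[i].User < out[j].User
	})
	return out
}

func main() {
	// Hypothetical sample logs.
	logs := []LogEntry{
		{"alice", "gpt-4o", 1200},
		{"bob", "gemini-pro", 300},
		{"alice", "gpt-4o-mini", 500},
	}
	for _, s := range aggregateByUser(logs) {
		fmt.Printf("%s: %d calls, %d tokens\n", s.User, s.Calls, s.Tokens)
	}
}
```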

Transit provider module

The transit provider module supports dynamically returning a list of user models and allows configuration of individual http/socks5 proxies. Administrators can add or remove providers as needed and set up different proxy configurations to optimize performance and stability of API calls.

Azure Speech TTS Emulation

One Hub can use Azure Speech to emulate the TTS (text-to-speech) feature: once the Azure Speech service is configured, users can generate high-quality speech output. Configure it as follows:

- In the config.yaml file, add the Azure Speech configuration:

azure_speech:
  api_key: "your_api_key"
  region: "your_region"

- Restart the One Hub service for the configuration to take effect.

Custom speed-test model and request elapsed-time logging

Users can set a custom model for speed tests in One Hub and view the elapsed time of each API call in the request logs. Together, these features help users monitor model performance and keep API calls efficient.

Telegram bot and per-use model billing

One Hub supports notifications and management through a Telegram bot, so users can receive real-time notifications about API calls in Telegram. One Hub also supports per-use billing for models, so usage can be billed and managed flexibly.
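Per-use billing charges a flat amount per call instead of per token. The arithmetic is sketched below in Go with integer credit units to avoid floating-point rounding; the `Pricing` type and all prices are hypothetical, and One Hub's actual pricing configuration may differ.

```go
package main

import "fmt"

// Pricing describes how one model is billed (hypothetical structure).
// Prices are in an arbitrary integer credit unit.
type Pricing struct {
	PerUse           bool  // true: flat charge per call; false: charge by tokens
	PricePerCall     int64 // used when PerUse is true
	PricePer1KTokens int64 // used when PerUse is false
}

// cost returns the charge for `calls` requests that consumed `tokens` tokens
// in total. Token-based charges are rounded down to a whole credit.
func cost(p Pricing, calls, tokens int64) int64 {
	if p.PerUse {
		return calls * p.PricePerCall
	}
	return tokens * p.PricePer1KTokens / 1000
}

func main() {
	image := Pricing{PerUse: true, PricePerCall: 20} // hypothetical: 20 credits per call
	chat := Pricing{PricePer1KTokens: 15}            // hypothetical: 15 credits per 1K tokens
	fmt.Println("5 image calls:", cost(image, 5, 0))
	fmt.Println("3 chat calls, 2000 tokens:", cost(chat, 3, 2000))
}
```

Under per-use billing the token count is ignored entirely, which suits models (such as image generation) whose cost does not scale with tokens.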

Detailed Operation Procedure

- After logging in to One Hub, go to the user dashboard to view the API calls.

- In the administrator interface, add or remove providers and configure proxy settings.

- Configure the Azure Speech service to generate speech output.

- Set a custom speed-test model and check the elapsed time of API calls in the request logs.

- Receive notifications through the Telegram bot and manage per-use model billing.

With the detailed help above, users can quickly get started with One Hub and take full advantage of its rich features for managing and distributing OpenAI interfaces.

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
