OmniGen: Unified Image Generation Model with Multimodal Inputs to Generate Character-Consistent Images

General Introduction

OmniGen is a "universal" image generation model developed by VectorSpaceLab that creates diverse, contextually rich images from simple text prompts or multimodal inputs. It is particularly well suited to scenes that require recognizable, consistently rendered characters. Users can upload up to three images and describe the desired result in a detailed prompt. OmniGen also supports editing previously generated images, and its seed setting makes refinement and experimentation flexible.

OmniGen recognizes features in the input image and generates the desired image automatically, without additional plug-ins or operations. Existing image generation models usually need to load several extra network modules (e.g., ControlNet, IP-Adapter, Reference-Net) and perform extra preprocessing steps (e.g., face detection, pose estimation, cropping) to generate satisfactory images. However, we believe that future image generation paradigms should be simpler and more flexible, i.e., generating various images directly from arbitrary multimodal instructions without additional plug-ins and operations, similar to how GPT works in language generation.

Function List

- Image Generation: Generate diverse images with text prompts or multimodal inputs.

- Personalized Image Creation: Upload up to three images to generate a personalized image.

- Character Rendering: Keeps characters consistent and recognizable, which suits scenes where characters need to be identified.

- Image Editing: Edits previously generated images, with a seed setting for flexible refinement.

- Image-Conditioned Generation: Generates a new image based on specific conditions taken from the input image.

- High-Quality Output: More detailed prompts produce clearer, higher-quality images.

How to Use

- Upload images: Upload up to three images in the OmniGen interface. They can be characters, objects, or condition maps.

- Describe the image: In the prompt box, describe in detail the image you want to generate. To refer to an uploaded image in the prompt, use the placeholder `<img><|image_i|></img>`, where i is the number of the image.

- Adjust parameters: Adjust OmniGen generation parameters such as image scale in Settings. It is recommended to leave the other settings at their defaults.

- Generate the image: Click the Generate button to join the queue and wait for the image to be generated.

- Edit the image: Edit and refine the resulting image, using OmniGen's seed setting.
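The placeholder format described above can be assembled and checked programmatically. The following is a minimal sketch, not part of OmniGen itself: the helper names `image_tag` and `check_prompt` are hypothetical, and the only details taken from the article are the `<img><|image_i|></img>` format and the three-image limit.

```python
import re
from typing import List

MAX_INPUT_IMAGES = 3  # upload limit stated in the article

# Matches placeholders of the form <img><|image_i|></img> and captures i.
_TAG = re.compile(r"<img><\|image_(\d+)\|></img>")


def image_tag(index: int) -> str:
    """Return the prompt placeholder for the index-th uploaded image (1-based)."""
    if not 1 <= index <= MAX_INPUT_IMAGES:
        raise ValueError(f"image index must be between 1 and {MAX_INPUT_IMAGES}")
    return f"<img><|image_{index}|></img>"


def check_prompt(prompt: str, num_images: int) -> List[int]:
    """Return the image indices referenced in the prompt, in order of appearance.

    Raises ValueError if the prompt refers to an image that was not uploaded.
    """
    if not 0 <= num_images <= MAX_INPUT_IMAGES:
        raise ValueError(f"at most {MAX_INPUT_IMAGES} images can be uploaded")
    indices = [int(i) for i in _TAG.findall(prompt)]
    for i in indices:
        if not 1 <= i <= num_images:
            raise ValueError(f"prompt refers to image {i}, but {num_images} uploaded")
    return indices


# Hypothetical two-image prompt, for illustration only.
prompt = f"The woman in {image_tag(1)} waves at the man in {image_tag(2)} in a park."
```

Checking the prompt before submitting it catches a placeholder that points at an image that was never uploaded, which would otherwise only show up after waiting in the queue.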

Tips:

- For image editing tasks and ControlNet tasks, it is recommended to set the height and width of the output image to match the input image. For example, to edit a 512x512 image, set the output height and width to 512x512. You can also set `use_input_image_size_as_output` to automatically align the height and width of the output image with the input image.

- If you run short of memory or generation takes too long, you can set `offload_model=True` or refer to ./docs/inference.md#requiremented-resources to select appropriate settings.

- When inputting multiple images, if inference takes too long, try reducing `max_input_image_size`. For details, see ./docs/inference.md#requiremented-resources.

- Oversaturation: If the image looks oversaturated, lower `guidance_scale`.

- Low quality: More detailed prompts produce better results.

- Anime style: If the generated image comes out in an anime style, try adding `photo` to the prompt.

- Editing generated images: If you generate an image with OmniGen and later want to edit it, you cannot do so with the same seed. For example, if an image was generated with seed=0, it should be edited with seed=1.

- For image editing tasks, it is recommended to place the image before the edit instruction. For example, use `<img><|image_1|></img> remove suit` instead of `remove suit <img><|image_1|></img>`.
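The editing tips above can be collected into one set of settings. This is a hedged sketch rather than OmniGen's own code: `edit_settings` is a hypothetical helper, and the keys `prompt` and `input_images` are assumed names for the prompt text and image list. The parameter names `use_input_image_size_as_output`, `offload_model`, and `seed` come from the tips themselves.

```python
from typing import Any, Dict


def edit_settings(
    instruction: str,
    image_path: str,
    original_seed: int,
    low_memory: bool = False,
) -> Dict[str, Any]:
    """Build settings for editing a previously generated image, following the tips."""
    settings: Dict[str, Any] = {
        # Tip: place the image placeholder before the edit instruction.
        "prompt": f"<img><|image_1|></img> {instruction}",
        # Assumed key name for the list of input image paths.
        "input_images": [image_path],
        # Tip: align the output height and width with the input image.
        "use_input_image_size_as_output": True,
        # Tip: do not reuse the seed the image was generated with.
        "seed": original_seed + 1,
    }
    if low_memory:
        # Tip: offload the model when memory is tight.
        settings["offload_model"] = True
    return settings


settings = edit_settings("remove suit", "generated.png", original_seed=0)
```

Any seed other than the original would satisfy the tip; adding one simply mirrors the seed=0 to seed=1 example given above.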

OmniGen Online Access and One-Click Installation Package

Official website for online use: aiomnigen.com

ComfyUI node: github.com/AIFSH/OmniGen-ComfyUI

OmniGen one-click installation package: pan.quark.cn/s/a1fd7d5298f9

More OmniGen Application Scenarios

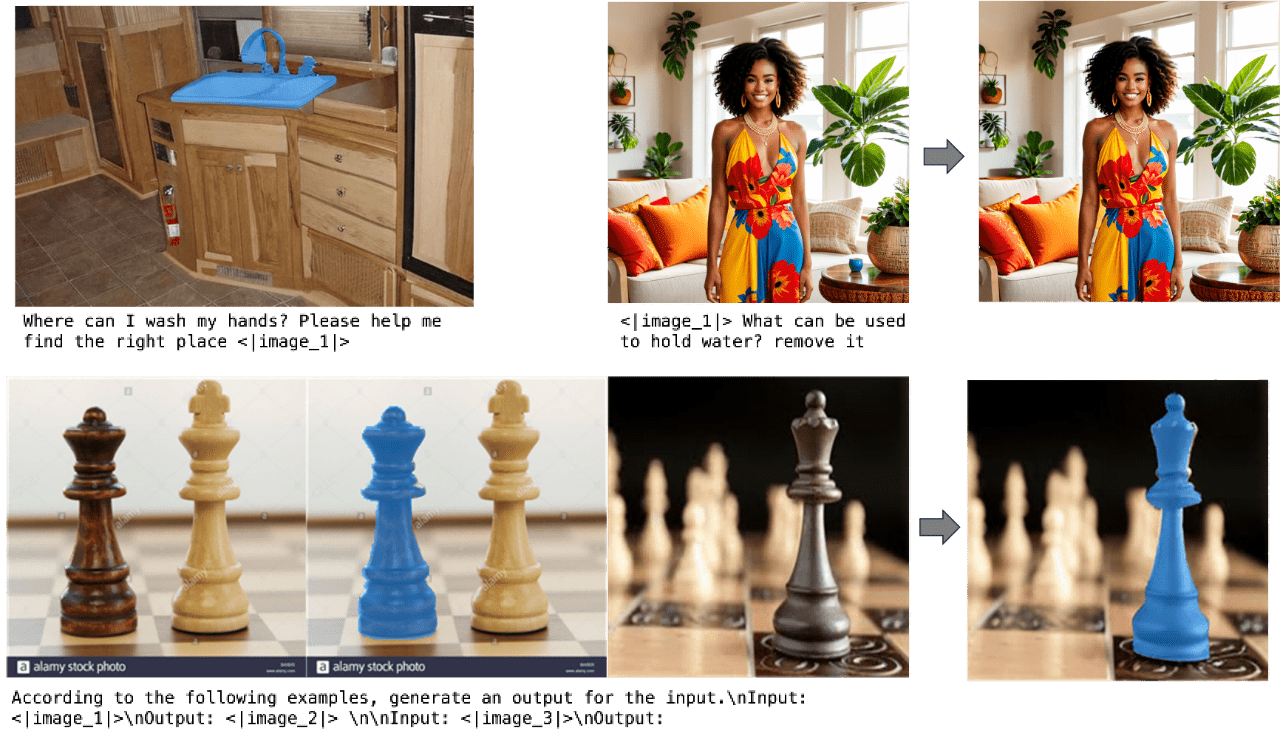

Image Editing

OmniGen has good image editing capabilities and can also do text-to-image generation.

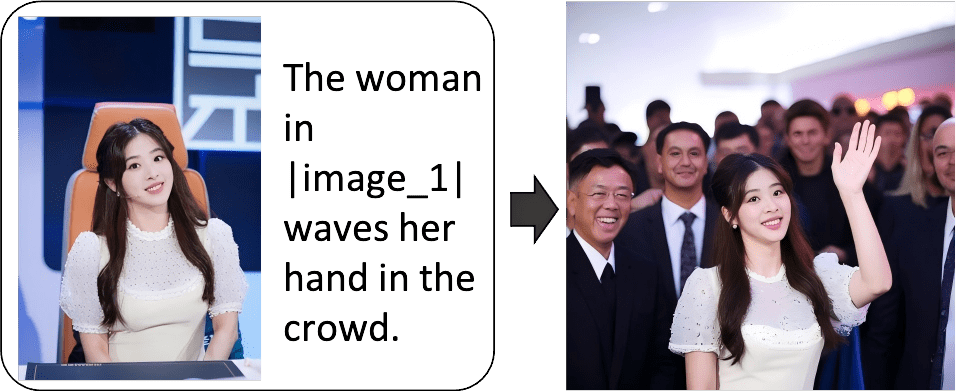

Specified Character Generation

OmniGen resembles models such as InstantID and PuLID in its ability to generate character-consistent images: given an input image containing a single subject, it understands and follows the instructions and outputs a new image based on that subject.

Unlike InstantID and PuLID, OmniGen can also generate from multiple specified characters.

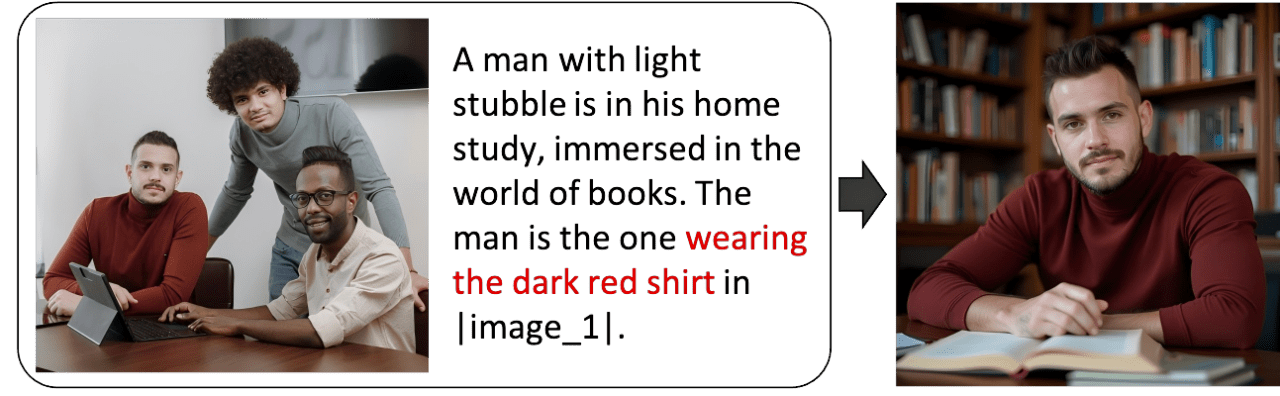

Referring Expression Generation

This is OmniGen's most distinctive feature: given an image containing multiple objects, it identifies the object that the instruction refers to and generates a new image from it.

Based on the prompt, OmniGen locates the target object across multiple images (up to three can be selected) and generates a new image that follows the instruction, without any additional modules or operations.

General Image-Conditioned Generation

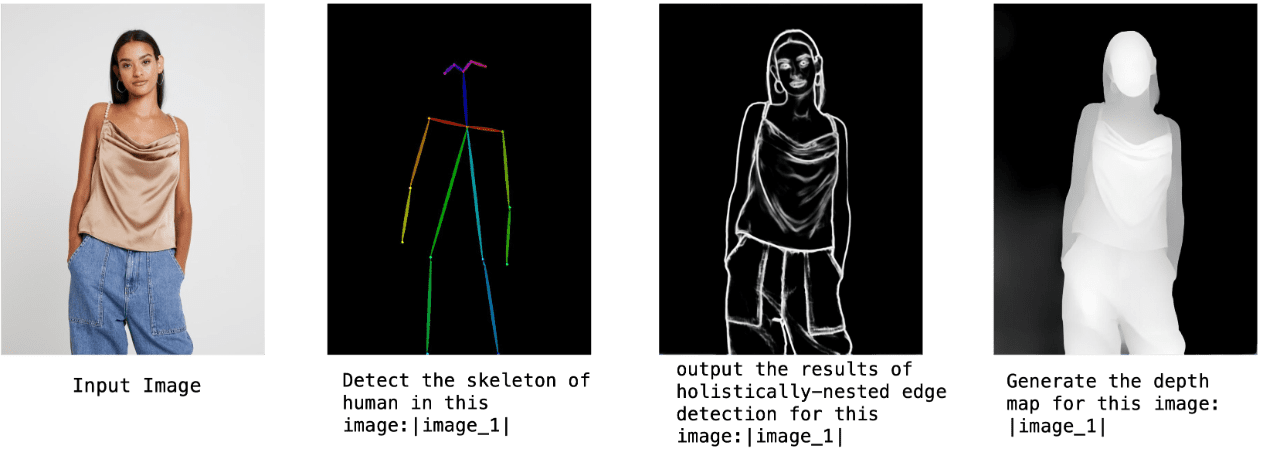

OmniGen supports ControlNet-like generation of images from specific conditions. Currently this mainly means generating from a reference character's skeleton (OpenPose) and generating from a reference character's depth map.

Unlike mainstream text-to-image models, which require ControlNet for condition control, OmniGen handles the entire ControlNet workflow with a single model: it extracts visual conditions directly from the original image and generates an image from those conditions, with no additional preprocessor. Moreover, OmniGen generates the image from the reference image and prompt in a single step, whereas ControlNet first needs a skeleton or depth map to be generated.

Other Control Component Functions

Beyond the features of OmniGen 1.0 described above, the developers say that OmniGen has more capabilities, such as additional ControlNet-style functions, including line-art and soft-edge generation.

Classical Computer Vision Tasks

Image denoising, edge detection, pose estimation, etc.

Like an LLM, OmniGen even shows some in-context learning ability, carrying out operations based on what it understands from the context.

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.