OmAgent: An Agent Framework for Building Multimodal Smart Devices

General Introduction

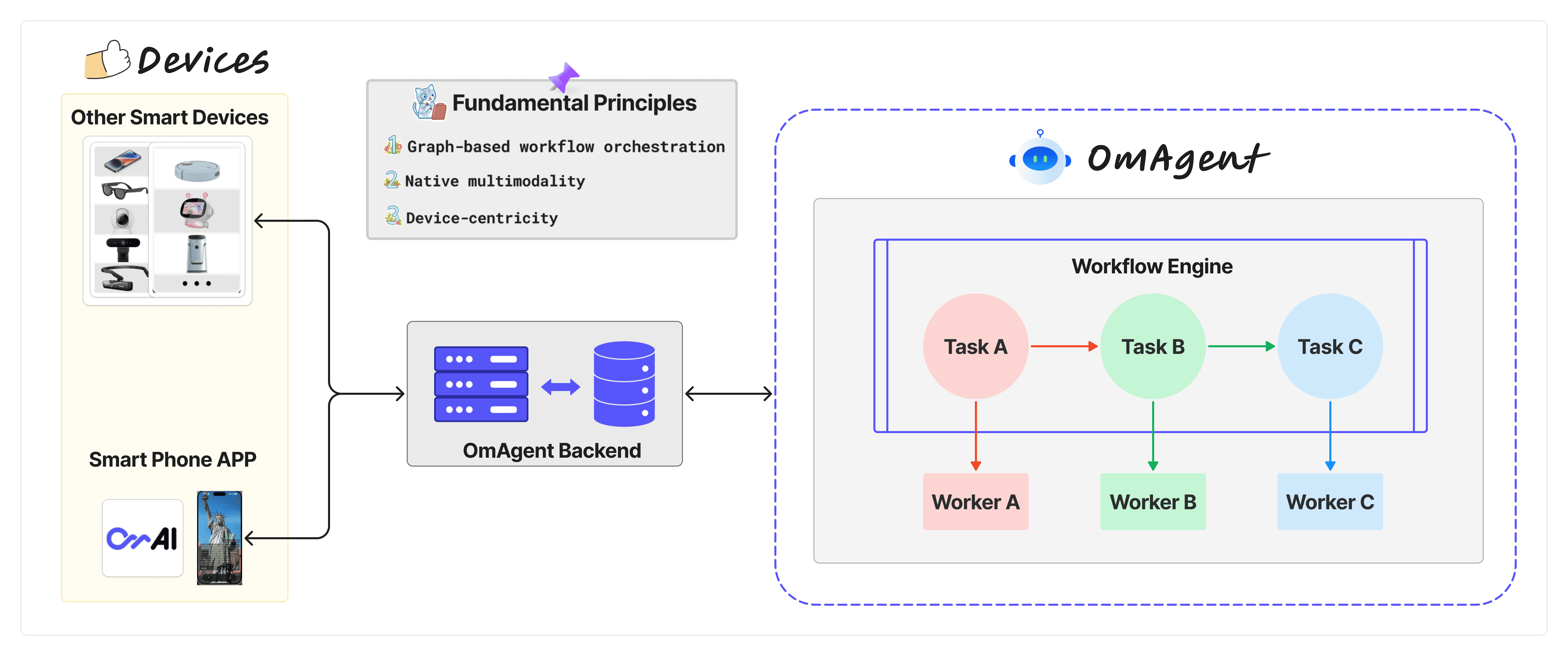

OmAgent is a multimodal agent framework developed by Om AI Lab that aims to bring powerful AI capabilities to smart devices. By integrating state-of-the-art multimodal foundation models with agent algorithms, it lets developers create efficient, real-time interactive experiences on a wide range of smart devices. Beyond text and image processing, OmAgent supports complex video understanding, covering scenarios that range from smartphones to future robots. At its core, it optimizes end-to-end computation so that interaction between users and devices feels natural and smooth.

Feature List

- Multimodal model support: integrates both commercial and open-source multimodal foundation models.

- Simplified device connectivity: streamlines connecting to physical devices such as phones and smart glasses, and helps developers build apps that run on those devices.

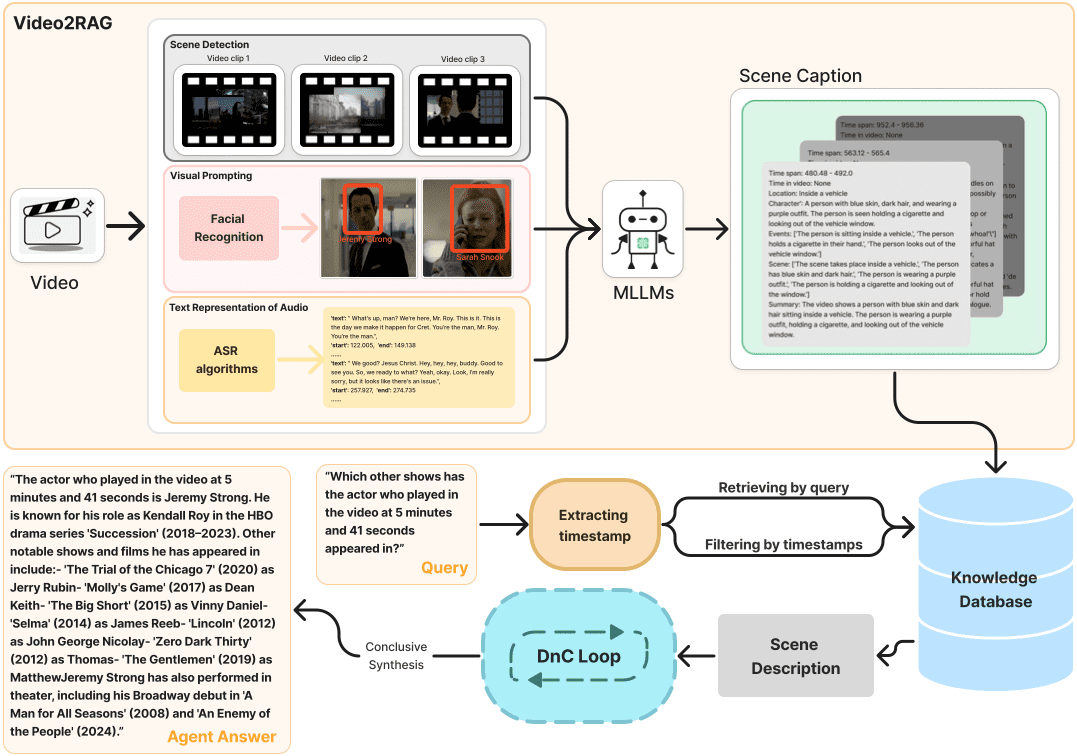

- Complex video understanding: uses a divide-and-conquer algorithm to parse and understand video content in depth.

- Workflow orchestration: uses the Conductor workflow engine, which supports complex orchestration logic such as loops and branches.

- Task and worker management: Tasks define the logical orchestration of a workflow, while Workers execute the individual nodes.

- Efficient audio and video processing: optimized audio and video handling for a real-time interactive experience.

How to Use

Installation

OmAgent is an open-source project hosted on GitHub. Install it as follows:

Clone the repository

- Open a terminal and run the following command to clone the OmAgent GitHub repository:

    git clone https://github.com/om-ai-lab/OmAgent.git

- Change into the cloned directory:

    cd OmAgent


Configure the environment

- Create and activate a Python environment (conda is recommended):

    conda create -n omagent python=3.10
    conda activate omagent

- Install the required dependencies:

    pip install -r requirements.txt

- If a specific service is needed (e.g. the Bing search API), edit the configs/tools/websearch.yml file and add your bing_api_key.

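Before running anything, it can help to confirm the two configuration points above. The following is a minimal, hypothetical pre-flight check (it is not part of OmAgent): it compares the interpreter against the Python 3.10 used for the conda environment and looks for a non-empty `bing_api_key` line in configs/tools/websearch.yml. It assumes the key sits on a flat `key: value` line; a robust check would use a YAML parser instead of a regular expression.

```python
import re
import sys
from pathlib import Path

# Hypothetical pre-flight check; the helper names are illustrative only.
REQUIRED_PYTHON = (3, 10)  # the version used in `conda create` above
WEBSEARCH_CONFIG = Path("configs/tools/websearch.yml")


def python_ok(required=REQUIRED_PYTHON) -> bool:
    """True if the running interpreter is at least the required version."""
    return sys.version_info[:2] >= required


def bing_key_configured(config_path=WEBSEARCH_CONFIG) -> bool:
    """True if the file contains a non-empty `bing_api_key:` entry.

    Assumes a flat `key: value` line; trailing `# comments` are ignored.
    """
    path = Path(config_path)
    if not path.is_file():
        return False
    for line in path.read_text(encoding="utf-8").splitlines():
        match = re.match(r"\s*bing_api_key\s*:(.*)$", line)
        if match:
            value = match.group(1).split("#", 1)[0].strip().strip("'\"")
            return bool(value)
    return False


print("Python version OK:", python_ok())
print("bing_api_key set:", bing_key_configured())
```

Run from the repository root so that the relative config path resolves.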

Tutorials

Developing Agents

Creating an agent

- Find the sample project step1_simpleVQA in the examples directory to learn how to build a simple multimodal visual question-answering (VQA) agent.
- Follow the steps in the example to write your own agent logic.

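The internals of step1_simpleVQA are not described here. As a hedged sketch, assuming the agent talks to an OpenAI-compatible chat API (an assumption, not something the example confirms), the core of a VQA request pairs the user's question with the image in a single message, with the image passed as a base64 data URL. `build_vqa_messages` is a hypothetical helper name.

```python
import base64
import json
from typing import Any, Dict, List


def build_vqa_messages(question: str, image_bytes: bytes,
                       mime_type: str = "image/jpeg") -> List[Dict[str, Any]]:
    """Pack a question and an image into one chat message (hypothetical helper).

    Assumes an OpenAI-compatible chat API that accepts images as
    base64 data URLs inside an `image_url` content part.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url",
                 "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
            ],
        }
    ]


# Placeholder bytes (the first bytes of a JPEG header); in practice,
# read the bytes from an image file.
messages = build_vqa_messages("What is in this picture?", b"\xff\xd8\xff")
print(json.dumps(messages, indent=2))
```

The resulting list would be sent as the `messages` of a chat-completion request, and the model's text reply is the answer; keeping payload construction in its own function makes it easy to swap in a different multimodal backend.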

Connecting devices

- OmAgent's app backend service lets you deploy agents to devices. Refer to the device-connectivity section of the app usage documentation to ensure seamless communication between devices and agents.


Video understanding

- Use the video_understanding sample project to see how OmAgent processes and understands video content. Pay particular attention to its divide-and-conquer strategy (Divide-and-Conquer Loop) for intelligent video querying and analysis.

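The general divide-and-conquer idea can be sketched as a recursive search over time spans: split a span into equal parts, recurse only into the parts judged relevant to the query, and stop once a span is short enough to analyse directly. This is a generic illustration, not OmAgent's actual Divide-and-Conquer Loop; `Segment`, `locate`, and the stub judge `event_at_75` are hypothetical, and a real system would ask a multimodal model about frames or captions instead of checking a hard-coded timestamp.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Segment:
    """A time span of a video, in seconds."""
    start: float
    end: float

    @property
    def duration(self) -> float:
        return self.end - self.start


def locate(segment: Segment, is_relevant: Callable[[Segment], bool],
           max_len: float, branches: int = 2) -> List[Segment]:
    """Recursively narrow a video down to short segments relevant to a query.

    Divide: split the segment into `branches` equal parts.
    Conquer: recurse only into parts the judge marks relevant.
    Combine: concatenate the relevant leaf segments in time order.
    """
    if not is_relevant(segment):
        return []                      # prune: nothing to find here
    if segment.duration <= max_len:
        return [segment]               # short enough to analyse directly
    step = segment.duration / branches
    found: List[Segment] = []
    for i in range(branches):
        child = Segment(segment.start + i * step, segment.start + (i + 1) * step)
        found.extend(locate(child, is_relevant, max_len, branches))
    return found


# Stub judge: pretend the queried event happens at t = 75 s.
def event_at_75(seg: Segment) -> bool:
    return seg.start <= 75 < seg.end


print(locate(Segment(0, 120), event_at_75, max_len=30))
# → [Segment(start=60.0, end=90.0)]
```

Pruning irrelevant branches is what keeps the number of expensive model calls low on long videos; the trade-off is that a wrong "not relevant" judgment high in the tree hides everything beneath it.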

Workflow management

- Configure your workflow by creating and editing the container.yaml file. A workflow can contain multiple nodes, each of which can be a standalone task or a complex logical branch.
- Conductor serves as the workflow engine and supports complex operations such as switch-case, fork-join, and do-while.

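To make the three control-flow operators concrete, here is a small in-process sketch of their semantics. Conductor itself describes these as task types in a declarative workflow definition; the plain-Python combinators below (`sequence`, `switch`, `fork_join`, `do_while`) only mimic that behaviour and are hypothetical names, not Conductor or OmAgent APIs. Each step takes a context dictionary and returns an updated one.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]
Step = Callable[[Context], Context]


def sequence(*steps: Step) -> Step:
    """Run steps one after another, threading the context through."""
    def run(ctx: Context) -> Context:
        for step in steps:
            ctx = step(ctx)
        return ctx
    return run


def switch(selector: Callable[[Context], str], cases: Dict[str, Step],
           default: Step) -> Step:
    """switch-case: pick one branch based on a value in the context."""
    def run(ctx: Context) -> Context:
        return cases.get(selector(ctx), default)(ctx)
    return run


def fork_join(branches: List[Step]) -> Step:
    """fork-join: run branches in parallel on copies of the context, then merge."""
    def run(ctx: Context) -> Context:
        with ThreadPoolExecutor() as pool:
            results = list(pool.map(lambda branch: branch(dict(ctx)), branches))
        merged = dict(ctx)
        for result in results:
            merged.update(result)    # later branches win on key conflicts
        return merged
    return run


def do_while(body: Step, condition: Callable[[Context], bool]) -> Step:
    """do-while: run the body once, then repeat while the condition holds."""
    def run(ctx: Context) -> Context:
        ctx = body(ctx)
        while condition(ctx):
            ctx = body(ctx)
        return ctx
    return run


workflow = sequence(
    switch(lambda c: c["input_type"],
           {"video": lambda c: {**c, "route": "video_pipeline"}},
           default=lambda c: {**c, "route": "image_pipeline"}),
    fork_join([lambda c: {"caption": "stub caption"},
               lambda c: {"tags": ["stub-tag"]}]),
    do_while(lambda c: {**c, "rounds": c.get("rounds", 0) + 1},
             lambda c: c["rounds"] < 3),
)
print(workflow({"input_type": "video"}))
```

Expressing each operator as a function that returns a step keeps them composable, which loosely mirrors how a workflow engine nests branches and loops inside one definition.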

Tasks and workers

- During development, define Tasks to manage the workflow logic, while Workers carry out the concrete operations. Each SimpleTask corresponds to one Worker, which makes it easy to build and extend agent functionality.

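The Task/Worker split can be illustrated with a minimal registry: Workers are classes that do one job, and each SimpleTask only names a Worker and says which workflow values feed its inputs. This is a hypothetical sketch of the pattern; `Worker`, `SimpleTask`, `register_worker`, `execute`, and the example workers are illustrative names, and OmAgent's real classes and signatures may differ.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Type

# Hypothetical registry mapping worker names to classes (illustrative only).
WORKER_REGISTRY: Dict[str, Type["Worker"]] = {}


def register_worker(cls: Type["Worker"]) -> Type["Worker"]:
    """Class decorator: make a Worker available to tasks by its class name."""
    WORKER_REGISTRY[cls.__name__] = cls
    return cls


class Worker:
    """Performs one concrete operation; subclasses implement run()."""

    def run(self, **inputs: Any) -> Dict[str, Any]:
        raise NotImplementedError


@dataclass
class SimpleTask:
    """Workflow node: names the Worker to call and wires its inputs."""
    worker_name: str
    # Maps the worker's parameter names to keys in the shared workflow state.
    inputs: Dict[str, str] = field(default_factory=dict)


def execute(tasks: List[SimpleTask], state: Dict[str, Any]) -> Dict[str, Any]:
    """Run tasks in order; each worker's outputs are merged into the state."""
    for task in tasks:
        worker = WORKER_REGISTRY[task.worker_name]()
        kwargs = {param: state[key] for param, key in task.inputs.items()}
        state.update(worker.run(**kwargs))
    return state


@register_worker
class Tokenize(Worker):
    def run(self, text: str) -> Dict[str, Any]:
        return {"tokens": text.split()}


@register_worker
class CountTokens(Worker):
    def run(self, tokens: List[str]) -> Dict[str, Any]:
        return {"count": len(tokens)}


state = execute(
    [SimpleTask("Tokenize", {"text": "question"}),
     SimpleTask("CountTokens", {"tokens": "tokens"})],
    {"question": "what is in the image"},
)
print(state["count"])  # → 5
```

Because tasks refer to workers only by name, the workflow definition can change (reordering, reusing a worker in several tasks) without touching the worker code.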

Running Agents

Running the example

- In the cloned project directory, run the sample script:

    python run_demo.py

- Results are saved in the ./outputs folder.

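The article does not say which files run_demo.py writes, so this hypothetical helper makes no assumption about their format: it simply lists the most recently modified files under ./outputs so you can find the result of the latest run.

```python
from pathlib import Path
from typing import List


def newest_outputs(output_dir: str = "./outputs", limit: int = 5) -> List[Path]:
    """Return up to `limit` files under output_dir, most recently modified first."""
    root = Path(output_dir)
    if not root.is_dir():
        return []                              # the demo has not run yet
    files = [p for p in root.rglob("*") if p.is_file()]
    files.sort(key=lambda p: p.stat().st_mtime, reverse=True)
    return files[:limit]


for path in newest_outputs():
    print(path)
```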

Debugging and testing

- Use GitHub Actions for automated testing and deployment to keep your agents stable across different environments.
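Automated tests in CI run best when they do not call a real model API. One common approach, sketched here under the assumption that your agent step accepts the model as a parameter, is to inject a fake model in unit tests. `answer_question` and `FakeModel` are hypothetical names used only for illustration.

```python
import unittest
from typing import Any, Callable, Dict, List


def answer_question(question: str, image_ref: str,
                    model: Callable[..., str]) -> str:
    """Hypothetical VQA step; the model is injected so tests can replace it."""
    if not question.strip():
        raise ValueError("question must not be empty")
    reply = model(question=question, image=image_ref)
    return reply.strip()


class FakeModel:
    """Test double that returns a canned reply and records its calls."""

    def __init__(self, reply: str) -> None:
        self.reply = reply
        self.calls: List[Dict[str, Any]] = []

    def __call__(self, **kwargs: Any) -> str:
        self.calls.append(kwargs)
        return self.reply


class AnswerQuestionTest(unittest.TestCase):
    def test_returns_stripped_reply(self) -> None:
        model = FakeModel("  a red car \n")
        self.assertEqual(answer_question("What is shown?", "img.jpg", model),
                         "a red car")
        self.assertEqual(model.calls,
                         [{"question": "What is shown?", "image": "img.jpg"}])

    def test_rejects_empty_question(self) -> None:
        with self.assertRaises(ValueError):
            answer_question("   ", "img.jpg", FakeModel("unused"))
```

Saved as, for example, tests/test_answer_question.py, these tests run with `python -m unittest discover tests`, and a GitHub Actions job can run the same command without needing any API keys.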

Further Learning

- Documentation: OmAgent's detailed API documentation and tutorials help you understand and use the framework in greater depth.

- Community support: join the Om AI Lab community to take part in discussions, get support, and share your work.

By following these steps, developers can draw on OmAgent's capabilities to build sophisticated AI agents that run on many kinds of smart devices and handle tasks ranging from simple Q&A to complex video analysis.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
