Building a Local AI Copilot Programming Assistant with Ollama

Summary

This document describes how to build a local, Copilot-like programming assistant that helps you write cleaner, more efficient code.

In this course you will learn how to integrate Ollama with local programming assistants, including:

- Continue

- Aider

Note:

- We mainly cover installation and basic usage in VS Code. JetBrains IDEs are used in much the same way, so just follow the VS Code instructions.

- The features and configuration options available in JetBrains are not as rich as those in VS Code, so VS Code is recommended.

- Running a Copilot locally places high demands on your machine, especially for code autocompletion, so unless you have special requirements, purchasing API access is recommended.

I. Introduction to AI Programming

1.1 Why do we program with AI?

Large language models have been around for a while, and programming is arguably the area where they perform best, for the following reasons:

- A clear standard of right and wrong. In the programming world, code either works or it doesn't. This black-and-white nature makes it easier for AI to judge the quality of its output.

- Precise language. Programming languages have little ambiguity. Every symbol and every keyword has a clear meaning. This kind of precision is what AI models excel at.

- Clear goals. When writing code, we usually have a well-defined target, for example, "Write a function that computes the Fibonacci sequence." Such clear instructions make it easier for the AI to focus on the problem.

- Transferable knowledge. Once you learn the basic concepts of one programming language, much of that knowledge carries over to other languages. This transferability makes AI models particularly efficient at learning in the programming domain.

1.2 How do we program with AI?

- Using an LLM chat application (not covered further here)

- Using an integrated development environment (IDE)

  - Continue

- Using command-line tools

  - Aider

II. Integrating Continue into the IDE

2.1 Installation of required models

- Install Ollama

- Install the following models

  - Install the general-purpose question-answering model

        ollama pull llama3:8b

  - Install the code autocompletion model

        ollama pull starcoder2:3b

  - Install the embedding model

        ollama pull nomic-embed-text

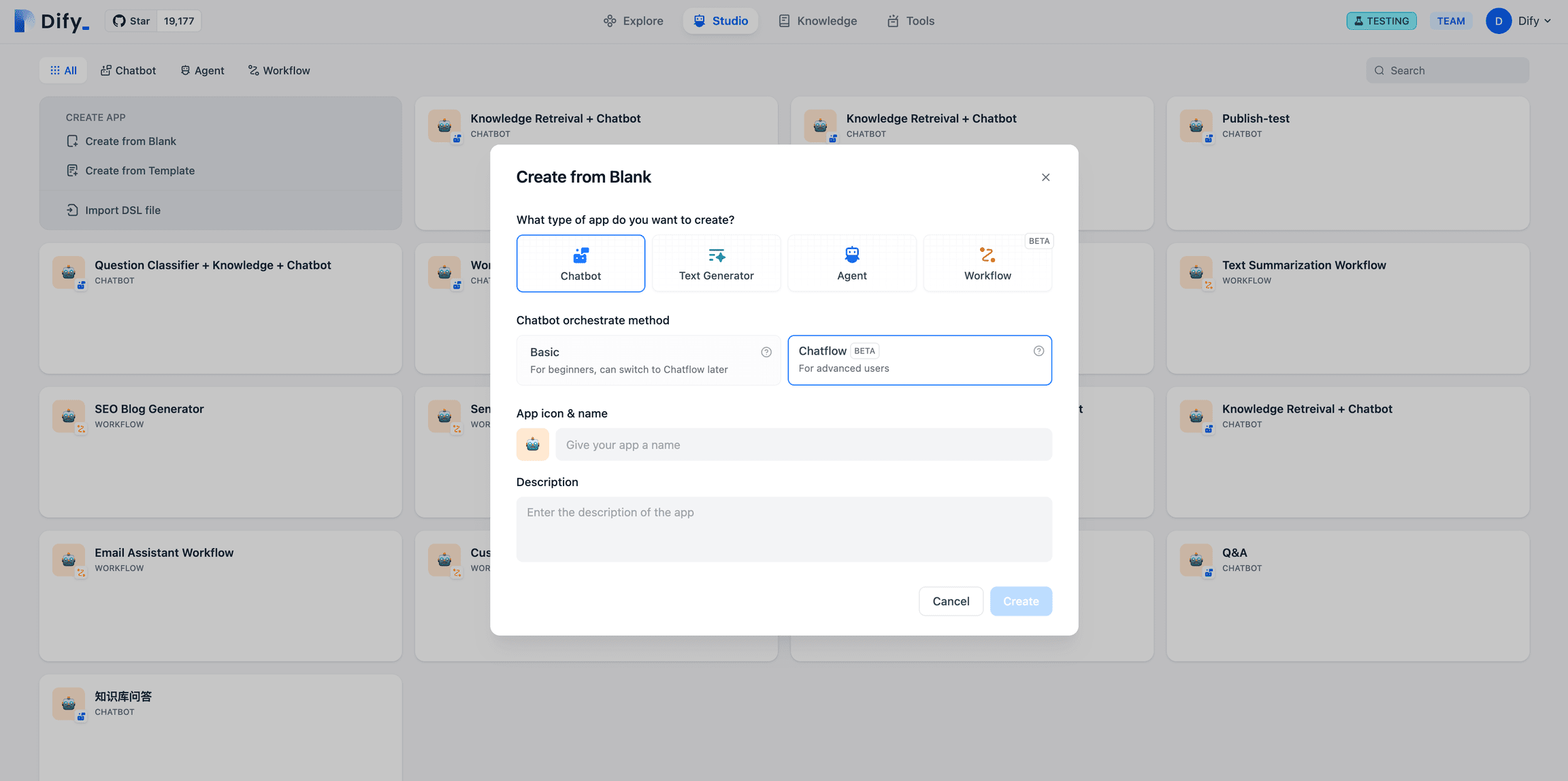
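After pulling the models, it can be handy to confirm that all three are actually present. The following is a minimal sketch, assuming Ollama is serving on its default local address and that its `/api/tags` endpoint lists installed models; the helper names `fetch_installed_models` and `missing_models` are made up for this illustration.

```python
import json
import urllib.request

# Models pulled in section 2.1.
REQUIRED_MODELS = ("llama3:8b", "starcoder2:3b", "nomic-embed-text")


def fetch_installed_models(base_url="http://127.0.0.1:11434"):
    """Return the model names reported by a running Ollama server.

    Assumes the default local address; adjust base_url if yours differs.
    """
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        data = json.load(resp)
    return [m["name"] for m in data.get("models", [])]


def missing_models(installed, required=REQUIRED_MODELS):
    """Return the required models that are not in the installed list.

    A requirement without a tag (e.g. "nomic-embed-text") matches any
    installed tag of that model, such as "nomic-embed-text:latest".
    """
    missing = []
    for name in required:
        if ":" in name:
            found = name in installed
        else:
            found = any(i.split(":")[0] == name for i in installed)
        if not found:
            missing.append(name)
    return missing
```

With the server running, `missing_models(fetch_installed_models())` should return an empty list once every pull has finished.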

2.2 Installing Continue in VS Code and Configuring Local Ollama

- Install Continue from the VS Code extension marketplace

Search for Continue, find the extension with the Continue icon, and click Install.

- (Recommended) It is strongly recommended to move Continue to the right sidebar of VS Code. This keeps the file explorer open while you use Continue and lets you toggle the sidebar with a simple keyboard shortcut.

- Configure Ollama

Method 1: Click Select model, choose Add model, click Ollama, then select Autodetect.

This automatically selects the models we just downloaded with Ollama for code reasoning.

Method 2: Click the settings button shown in the figure to open the configuration page, and paste the following configuration:

    {
      "models": [
        {
          "title": "Ollama",
          "provider": "ollama",
          "model": "AUTODETECT"
        }
      ],
      "tabAutocompleteModel": {
        "title": "Starcoder 2 3b",
        "provider": "ollama",
        "model": "starcoder2:3b"
      },
      "embeddingsProvider": {
        "provider": "ollama",
        "model": "nomic-embed-text"
      }
    }
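If you switch models often, generating this configuration from a script avoids hand-editing mistakes. The sketch below only rebuilds the JSON structure shown in this section; `build_continue_config` is a hypothetical helper, not part of Continue, and the output still has to be pasted into the configuration page yourself.

```python
import json


def build_continue_config(chat_model="AUTODETECT",
                          autocomplete_model="starcoder2:3b",
                          embeddings_model="nomic-embed-text"):
    """Build the Continue configuration from section 2.2 as a dict.

    All three roles (chat, tab autocomplete, embeddings) use the local
    Ollama provider, exactly as in the pasted configuration.
    """
    return {
        "models": [
            {"title": "Ollama", "provider": "ollama", "model": chat_model}
        ],
        "tabAutocompleteModel": {
            "title": autocomplete_model,
            "provider": "ollama",
            "model": autocomplete_model,
        },
        "embeddingsProvider": {
            "provider": "ollama",
            "model": embeddings_model,
        },
    }


def to_json(config):
    """Serialize the configuration with readable indentation."""
    return json.dumps(config, indent=2)
```

Calling `print(to_json(build_continue_config()))` prints a configuration equivalent to the one above, apart from the display title of the autocomplete model.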

2.3 Basic usage guidelines

- Understand code easily

  - VS Code: cmd+L (macOS) / ctrl+L (Windows)

  - JetBrains: cmd+J (macOS) / ctrl+J (Windows)

- Use the Tab key to autocomplete code

  - VS Code: tab (macOS) / tab (Windows)

  - JetBrains: tab (macOS) / tab (Windows)

- Let AI quickly modify your code

  - VS Code: cmd+I (macOS) / ctrl+I (Windows)

  - JetBrains: cmd+I (macOS) / ctrl+I (Windows)

- Ask questions about the current code directory

  - VS Code: @codebase (macOS) / @codebase (Windows)

  - JetBrains: support planned

- Ask questions based on the official documentation

  - VS Code: @docs (macOS) / @docs (Windows)

  - JetBrains: @docs (macOS) / @docs (Windows)
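Tab autocompletion with a code model such as starcoder2:3b is usually driven by fill-in-the-middle (FIM) prompting: the text before and after the cursor is sent to the model, which generates what goes in between. The sketch below assumes StarCoder-style sentinel tokens (`<fim_prefix>`, `<fim_suffix>`, `<fim_middle>`); how Continue actually assembles its prompts is not described in this document and may differ.

```python
def split_at_cursor(source, cursor):
    """Split source text into (prefix, suffix) at a cursor offset."""
    cursor = max(0, min(cursor, len(source)))
    return source[:cursor], source[cursor:]


def build_fim_prompt(prefix, suffix):
    """Wrap prefix and suffix in StarCoder-style FIM sentinel tokens.

    The model is expected to continue after <fim_middle> with the code
    that belongs between prefix and suffix.
    """
    return f"<fim_prefix>{prefix}<fim_suffix>{suffix}<fim_middle>"
```

For example, with the cursor placed right after `return ` inside a function body, the prefix is everything up to the cursor and the suffix is the rest of the file.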

2.4 References

Official website: https://docs.continue.dev/intro

III. Integrating Aider on the Command Line

Aider is a command-line programming assistant that has deeper system access and more features than an IDE plugin, but this also makes it harder to get started with.

The following tutorial shows how to install Aider and connect it to the Ollama service.

Before you begin, make sure you:

- Have git installed and have some experience with git.

- Have some experience with Python.

3.1 Installing Aider

- Aider depends on many Python packages, so it is recommended to create a new environment with conda or a Python virtual environment. The example below uses conda.

- Create a new conda virtual environment (the same idea applies to a Python virtual environment)

      conda create -n aider_env python=3.9

- Activate this virtual environment

      conda activate aider_env

- Install Aider with the following command

      python -m pip install aider-chat

3.2 Setting up Ollama and starting it

- Open a command line and set the environment variable as follows

  macOS/Linux:

      export OLLAMA_API_BASE=http://127.0.0.1:11434

  Windows (restart the shell after setting it):

      setx OLLAMA_API_BASE http://127.0.0.1:11434

- cd into your local repository. If it is not a git repository yet, Aider will automatically initialize one in the current directory.

      # example
      cd D:\Myfile\handy-ollama\handy-ollama\handy-ollama

- Run Aider with the following command

      aider --model ollama/<your-model-name>
      # example
      # aider --model ollama/llama3:8b

  You can also use a .env file to configure Aider. For example:

      ## Specify the OpenAI key (if you have one)
      OPENAI_API_KEY=xxx
      ## Specify the model to use
      AIDER_MODEL=ollama/llama3:8b

  More configuration options: https://aider.chat/docs/config/dotenv.html

- If Aider's chat interface appears, the setup was successful

- You can now chat with it or send it commands, and it can modify your code directly
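The setup steps in this section can also be scripted. The sketch below is one possible wrapper, assuming the same `OLLAMA_API_BASE` address and `ollama/<model>` naming used above; `parse_dotenv`, `aider_environment`, and `build_aider_command` are hypothetical helpers written for this example, and the parser only handles plain `KEY=VALUE` lines.

```python
import os


def parse_dotenv(text):
    """Parse simple KEY=VALUE lines, skipping blank lines and '#' comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def aider_environment(base_env=None, api_base="http://127.0.0.1:11434"):
    """Return a copy of the environment with OLLAMA_API_BASE set.

    An existing OLLAMA_API_BASE value is kept rather than overwritten.
    """
    env = dict(os.environ if base_env is None else base_env)
    env.setdefault("OLLAMA_API_BASE", api_base)
    return env


def build_aider_command(model_name):
    """Build the argument list for launching Aider with an Ollama model."""
    return ["aider", "--model", f"ollama/{model_name}"]
```

From inside your repository, `subprocess.run(build_aider_command("llama3:8b"), env=aider_environment())` would then start Aider the same way as the manual commands.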

3.3 Basic use

- You can ask it for basic information about the repository

- Add files

      /add <file1> <file2> ...

  Then you can ask questions based on these files.

- You can also use Aider without adding any files, and it will try to work out which files need to be edited based on your request (but the results are often poor).

- Add only the relevant files to make changes or answer questions about specific code or files. Do not add a lot of extra files: with too many files the output quality drops and you consume more tokens, which costs more.

Example:

- Use Aider to change code files or other files directly, for example by adding a summary to the last line of the README.

Check the actual file to see the change.

- If you do not want Aider to actually modify files, prefix your message with the /ask command

- Remove all added files with /drop
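To get a feel for how quickly added files eat into the context, you can estimate their size before running /add. This is a rough sketch that assumes about four characters per token, which is only a rule of thumb and not the tokenizer of any particular model; `estimate_tokens` and `context_cost` are illustrative names.

```python
def estimate_tokens(text, chars_per_token=4):
    """Roughly estimate the token count of a text (rounded up)."""
    return (len(text) + chars_per_token - 1) // chars_per_token


def context_cost(files, chars_per_token=4):
    """Return per-file token estimates and their total.

    files maps a file name to its text content.
    """
    per_file = {name: estimate_tokens(text, chars_per_token)
                for name, text in files.items()}
    return per_file, sum(per_file.values())
```

For exact numbers inside a session, Aider's own /tokens command reports the tokens currently in use in the chat context.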

3.4 References

A list of commonly used slash commands:

| Command | Description |
|---|---|
| /add | Add files to the chat so the model can edit or review them in detail |
| /ask | Ask questions about the code base without editing any files |
| /chat-mode | Switch to a new chat mode |
| /clear | Clear the chat history |
| /clipboard | Add images/text from the clipboard to the chat (optionally provide names for images) |
| /code | Ask for changes to your code |
| /commit | Commit edits made to the repository outside the chat (commit message optional) |
| /diff | Display the diff of changes since the last message |
| /drop | Remove files from the chat session to free up context space |
| /exit | Exit the application |
| /git | Run a git command |
| /help | Ask a question about aider |
| /lint | Lint and fix the provided files, or the files in the chat if none are provided |
| /ls | List all known files and indicate which are included in the chat session |
| /map | Print the current repository map |
| /map-refresh | Force a refresh of the repository map and print it |
| /model | Switch to a new language model |
| /models | Search the list of available models |
| /quit | Exit the application |
| /read-only | Add files to the chat for reference only; they cannot be edited |
| /run | Run a shell command and optionally add the output to the chat (alias: !) |
| /test | Run a shell command and add the output to the chat on a non-zero exit code |
| /tokens | Report the number of tokens currently in use in the chat context |
| /undo | Undo the last git commit if it was made by aider |
| /voice | Record and transcribe voice input |
| /web | Scrape a web page, convert it to markdown, and add it to the chat |

For more usage, please refer to https://aider.chat/docs/usage.html

IV. Integrating RooCline into the IDE (new)

4.1 Cline and RooCline

Cline is one of the most popular AI programming plugins for VS Code. It applies AI assistance differently from most tools on the market. Rather than focusing solely on code generation or completion, it operates as a system-level tool that can interact with the entire development environment. This is especially valuable for complex debugging, large-scale refactoring, or integration testing, as described in the Cline article listed in the references. Here we introduce Roo Cline.

RooCline is an enhanced version of Cline focused on improving development efficiency and flexibility. It supports multiple languages and multiple models (e.g. Gemini, Meta, etc.) and provides features such as smart notifications, optimized file handling, and missing-code detection. In addition, RooCline can run in parallel with Cline, for developers who need to work on multiple tasks at the same time.

Although Roo Cline is still smaller than Cline, it is growing fast. It adds some extra experimental features to Cline and can also write parts of its own code with only a small amount of human intervention.

In addition, Cline consumes a large number of tokens, which can noticeably affect day-to-day development costs.

4.2 Installing RooCline in VS Code and Configuring Local Ollama

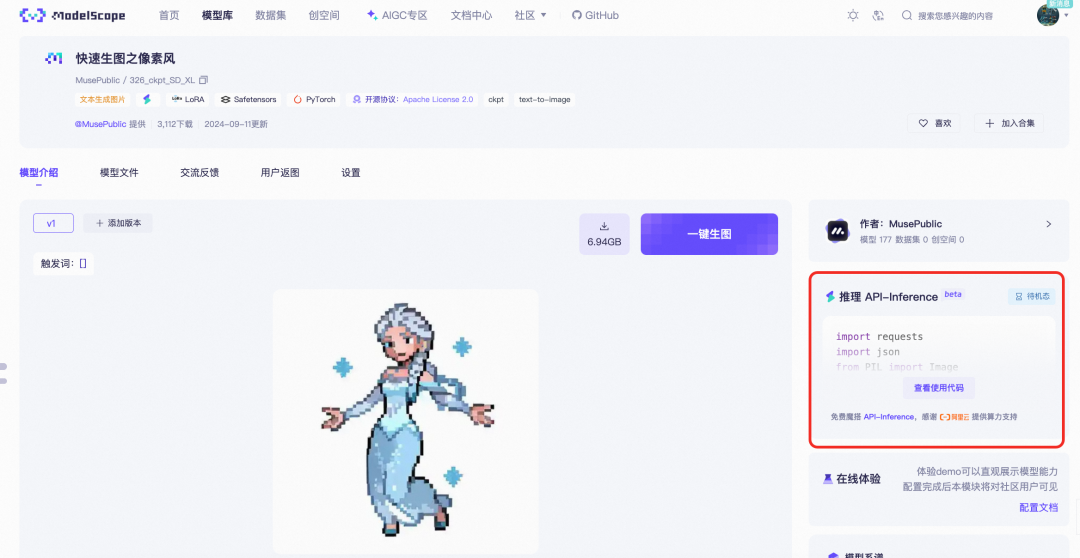

- Install a model with Ollama (using deepseek-r1:1.5b as an example)

      ollama run deepseek-r1:1.5b

- Install Roo Cline from the VS Code extension marketplace

  Search for Roo Cline, find the extension with the Roo Cline icon, and click Install.

- Configure Ollama

  - Click the Roo Cline icon on the right

  - Click the Settings icon

  - Enter the model name

  - Click Done

- Now you can use VS Code like any other AI-integrated editor.
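Before relying on the plugin, you may want to check that the model answers through Ollama at all. The sketch below assumes Ollama's local `/api/chat` endpoint on the default address and assumes the model wraps its reasoning in `<think>` tags, as DeepSeek-R1 style models commonly do; the function names are illustrative only.

```python
import json
import re
import urllib.request


def build_chat_request(prompt, model="deepseek-r1:1.5b"):
    """Build a non-streaming chat request body for Ollama."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }


def strip_think(text):
    """Remove <think>...</think> reasoning blocks from a reply."""
    return re.sub(r"<think>.*?</think>", "", text, flags=re.DOTALL).strip()


def ask_ollama(prompt, base_url="http://127.0.0.1:11434"):
    """Send one prompt to a running Ollama server and return the answer."""
    body = json.dumps(build_chat_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/api/chat",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=120) as resp:
        reply = json.load(resp)
    return strip_think(reply["message"]["content"])
```

If `ask_ollama("Say hello")` returns text, the model name you enter in the Roo Cline settings should work as well.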

Next, we'll cover some features that are unique to Roo Cline.

4.3 Roo Cline Features

Note that these features depend on the capabilities of the underlying large model, especially those that control the system. The deepseek-r1:1.5b model in our example cannot perform these operations and will report that the task is too complex. Using the DeepSeek API instead is recommended (it is inexpensive).

The first two features below can be accessed by clicking the Prompts icon in the toolbar.

- Support for arbitrary APIs/models

  - OpenRouter/Anthropic/Glama/OpenAI/Google Gemini/AWS Bedrock/Azure/GCP Vertex

  - Local models (LM Studio/Ollama) and any OpenAI-compatible interface

  - Different models can be used for different modes (e.g., a high-end model for architecture design and an economical model for day-to-day coding)

  - Session-level usage tracking (token consumption and cost statistics)

- Custom modes: customize Roo Code's role, instructions, and permissions per mode:

  - Built-in modes

    - Code mode: the default multi-purpose coding assistant

    - Architect mode: system-level design and architecture analysis

    - Ask mode: in-depth research and Q&A

  - User-created modes

    - Type "Create a new mode for" to create a custom role.

    - Each mode can have its own instructions and skill set (managed in the Prompts tab)

  - Advanced features

    - File type restrictions (e.g., ask/architect modes can only edit markdown)

    - Custom file rules (e.g., operate only on .test.ts test files)

    - Switching between modes (e.g., switching automatically to code mode for specific tasks)

    - Autonomous creation of new modes (with role definitions and file restrictions)
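The file restrictions mentioned above boil down to matching a file path against a per-mode pattern. The sketch below illustrates that idea with regular expressions; the `MODE_FILE_RULES` table and the `test-writer` mode are hypothetical and do not reproduce Roo Code's actual configuration format.

```python
import re

# Hypothetical per-mode edit rules: None means "may edit any file".
MODE_FILE_RULES = {
    "code": None,
    "architect": r"\.md$",           # markdown only
    "test-writer": r"\.test\.ts$",   # made-up custom mode: test files only
}


def can_edit(mode, path, rules=MODE_FILE_RULES):
    """Return True if the given mode is allowed to edit the given path."""
    pattern = rules[mode]
    if pattern is None:
        return True
    return re.search(pattern, path) is not None
```

A mode restricted to `\.test\.ts$` could then edit `src/app.test.ts` but not `src/app.ts`.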

- Fine-grained control of Roo Cline permissions

Open the Settings screen: you can control Roo Cline's permissions under Auto-Approve Settings.

Note that this controls which actions can be performed automatically without your consent. For all other actions, you will be asked to click to confirm before they proceed.

  - File and editor operations

    - Create/edit project files (with a diff view)

    - Automatically respond to code errors (missing imports, syntax issues, etc.)

    - Track changes via the editor timeline (for easy review/rollback)

  - Command-line integration

    - Run package management/build/test commands

    - Monitor output and automatically adapt to errors

    - Keep development servers running in the background

Approval policies are supported: confirm operations one by one, or automatically approve routine operations.
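The auto-approve idea can be pictured as a small gate in front of every action: approved categories run immediately, everything else waits for a confirmation. This is only a conceptual sketch; the action names (`read_file`, `run_command`, and so on) and the `AutoApprovePolicy` class are invented here and are not Roo Cline's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class AutoApprovePolicy:
    """Set of action categories that may run without asking the user."""
    auto_approved: set = field(default_factory=set)

    def needs_confirmation(self, action):
        """Return True if the user must confirm this action first."""
        return action not in self.auto_approved


def execute(policy, action, confirm):
    """Run an action, asking confirm(action) when the policy requires it.

    confirm is a callback returning True (approve) or False (reject).
    """
    if policy.needs_confirmation(action) and not confirm(action):
        return "rejected"
    return "executed"
```

With only `read_file` auto-approved, reads go through silently while a `run_command` request still depends on the user's answer.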

- Browser automation

In the third step above, select the following options to give Roo Cline web automation capabilities:

  - Launch local/remote web applications

  - Click/type/scroll/screenshot operations

  - Collect console logs to debug runtime issues

This is useful for end-to-end testing and visual verification.

- MCP Tool Extension

Model Context Protocol (MCP) is a capability-extension protocol that lets Roo communicate with local servers that provide additional tools and resources. It is like giving the programming assistant an "external brain".

Local/cloud dual mode: you can use tools and services on your own computer, and you can also connect to ready-made modules shared by the community.

Dynamic tool creation: just tell Roo to "make a tool that looks up the latest npm documentation" and it will automatically generate the integration code.

Examples:

- Using community resources: reuse what others have already built

  For example, connect the existing "Weather API Query Tool".

  You can directly use tools that the open source community has validated.

- Building private tools: customize to your business needs

  Say, "Make a tool that grabs internal company logs."

  Roo automatically generates:

      # Example of an automatically generated tool skeleton
      class LogTool(MCPPlugin):
          def fetch_logs(self, service_name):
              # Connects automatically to the company logging system
              # Returns key error logs from the last hour
              ...

  Once you have reviewed it, the tool appears in Roo's skill list.

- Security mechanism: all new tools must be manually reviewed and authorized before activation, to prevent arbitrary calls to sensitive interfaces
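To make the log tool example more concrete, here is one way the body of such a tool could filter logs. It is a sketch under stated assumptions: log entries are plain in-memory dictionaries with `service`, `time`, `level`, and `message` keys, and the registration of the function with an MCP server is left out entirely, since the document does not specify that interface.

```python
from datetime import datetime, timedelta


def fetch_recent_errors(service_name, entries, now, window=timedelta(hours=1)):
    """Return ERROR messages for one service within the recent time window.

    entries is a list of dicts with the keys "service", "time" (datetime),
    "level", and "message"; this record layout is an assumption made for
    the example, not a real logging system's format.
    """
    cutoff = now - window
    return [
        entry["message"]
        for entry in entries
        if entry["service"] == service_name
        and entry["level"] == "ERROR"
        and entry["time"] >= cutoff
    ]
```

A real tool would replace the in-memory list with a query against the company's logging system and would still need the manual review described above before activation.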

- Context @: ways to provide additional context:

  - @file: embeds a file's contents

  - @folder: includes the full directory structure

  - @problems: brings in workspace errors/warnings

  - @url: fetches documentation from a URL (converted to markdown)

  - @git: provides Git commit logs/diff analysis

These help focus on key information and optimize token usage.

References (recommended reading)

Roo Cline GitHub address: https://github.com/RooVetGit/Roo-Code (now renamed Roo Code). More new features are waiting for you to discover!

Cline introduction: Cline (Claude Dev): VSCode Plugin for Automated Programming

AI programming tools comparison: Comparison of Trae, Cursor, Windsurf AI Programming Tools

Building an AI service website from scratch with AI programming tools, without writing a single line of code: https://cloud.tencent.com/developer/article/2479975

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
