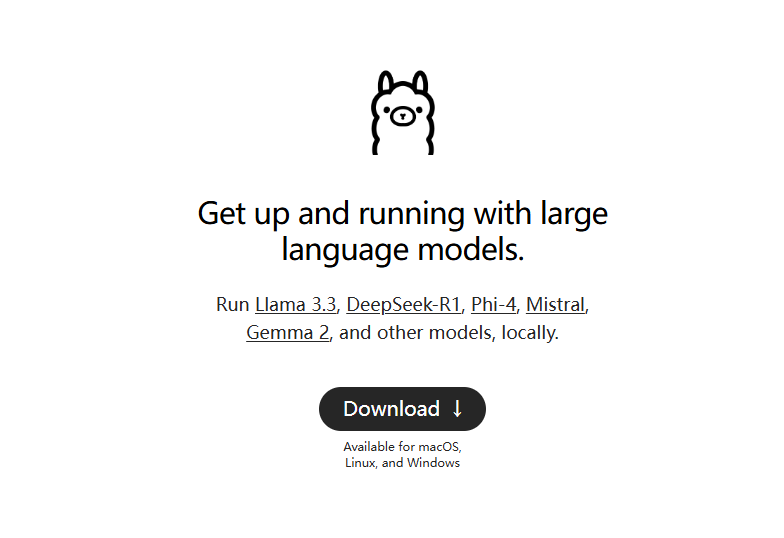

Ollama: One-Click Local Deployment of Open-Source Large Language Models

Ollama General Introduction

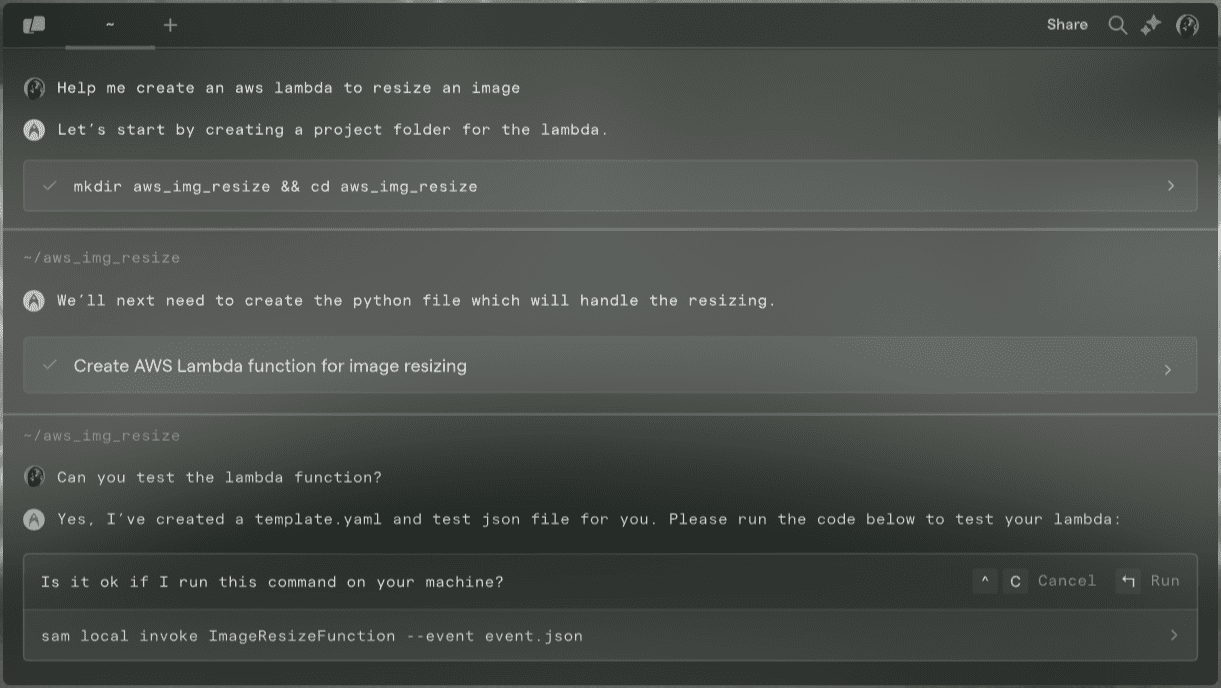

Ollama is a lightweight framework for running large language models locally, letting users easily build and run them on their own machines. It offers several quick-start and installation options, supports Docker, and ships with a rich library of models to choose from. It is easy to use, provides a REST API, and works with a variety of community plugins and extensions.

Ollama itself is a command-line tool. For everyday use on a personal computer, it is recommended to pair it with a local chat interface such as Open WebUI, Lobe Chat, or NextChat.

To change the default installation directory, see: https://github.com/ollama/ollama/issues/2859

Ollama Feature List

Gets large language models up and running quickly

Supports macOS, Windows, and Linux

Provides libraries such as ollama-python and ollama-js

Includes prebuilt models such as Llama 2, Mistral, and Gemma

Supports both local and Docker installation

Supports creating customized models

Supports importing and converting models from GGUF and PyTorch formats

Provides a CLI reference guide

Provides a REST API
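Custom models and GGUF imports are both described by a Modelfile, a plain-text recipe that `ollama create` builds into a new local model. The Python sketch below only renders such a file as text; the function name `render_modelfile`, the base model, the system prompt, and the parameter values are illustrative assumptions. `FROM`, `PARAMETER`, and `SYSTEM` are Modelfile directives, and `FROM` accepts either an existing model name or a path to a local `.gguf` file.

```python
def render_modelfile(base: str, system_prompt: str, params: dict) -> str:
    """Render Modelfile text (hypothetical helper, for illustration only).

    `base` is either an existing model name (e.g. "llama3.1") or a path to a
    local GGUF file (e.g. "./model.gguf"); both go on the FROM line.
    """
    lines = [f"FROM {base}"]
    # One PARAMETER line per runtime/sampling option.
    for name, value in params.items():
        lines.append(f"PARAMETER {name} {value}")
    # Triple quotes allow a multi-line system prompt.
    lines.append(f'SYSTEM """{system_prompt}"""')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(
        render_modelfile(
            base="llama3.1",  # or "./model.gguf" to import a local GGUF file
            system_prompt="You are a concise technical assistant.",
            params={"temperature": 0.7, "num_ctx": 4096},
        )
    )
```

Saved as `Modelfile`, the output could be built with `ollama create my-assistant -f Modelfile` and then started with `ollama run my-assistant` (`my-assistant` is a hypothetical name).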

Commonly Used Ollama Commands

Pull model: ollama pull llama3.1

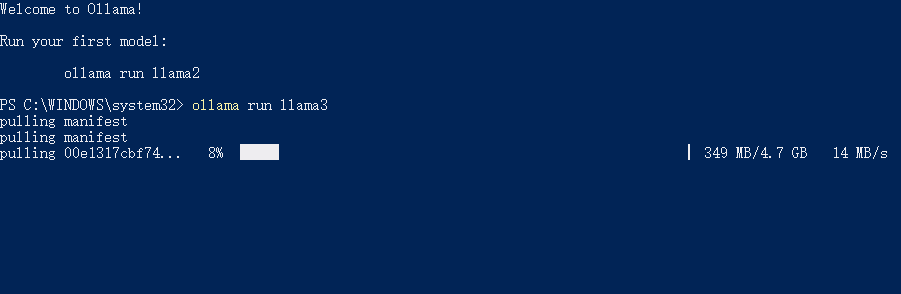

Run model: ollama run llama3.1

Delete model: ollama rm llama3.1

List locally downloaded models: ollama list

Start the API server: ollama serve (listens on http://localhost:11434/ by default)
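`ollama serve` exposes a REST API on that address. As a minimal sketch using only the Python standard library, the code below builds a request for the `/api/generate` endpoint and joins a streamed reply. It assumes the default address and a model that has already been pulled; the helper names `build_generate_request`, `collect_stream`, and `generate` are hypothetical. When `stream` is true, the server answers with one JSON object per line, each holding a text fragment in its `response` field, and the last object carries `"done": true`.

```python
import json
import urllib.request
from typing import Iterable, Union

OLLAMA_URL = "http://localhost:11434"  # default address of `ollama serve` (assumed)


def build_generate_request(model: str, prompt: str, stream: bool = True) -> urllib.request.Request:
    """Build, but do not send, a POST request for /api/generate."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": stream}).encode("utf-8")
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def collect_stream(lines: Iterable[Union[bytes, str]]) -> str:
    """Concatenate the `response` fragments of a streamed, line-delimited JSON reply."""
    parts = []
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue  # skip blank lines
        chunk = json.loads(raw)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the final object carries "done": true
    return "".join(parts)


def generate(model: str, prompt: str) -> str:
    """Send a streaming request and return the full text (needs a running server)."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        return collect_stream(resp)  # iterating the response yields raw lines
```

With the server running and the model pulled, `print(generate("llama3.1", "Why is the sky blue?"))` should print the complete answer. Passing `stream=False` to `build_generate_request` instead asks the server for a single JSON object containing the whole text.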

Ollama Help

Installation scripts and guides on the Ollama website and GitHub page

Installation using the official Docker image

Creating, pulling, removing, and copying models via the CLI

Building from source and running local builds

Running a model and interacting with it
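Interacting with a model over several turns means resending the conversation so far, since each request to the chat endpoint carries its own message list. Below is a minimal sketch against `/api/chat`, assuming the default local address. `ChatSession` and `post_json` are hypothetical names, and the `send` parameter is injectable so the history logic can be exercised without a server.

```python
import json
import urllib.request
from typing import Callable

CHAT_URL = "http://localhost:11434/api/chat"  # default local address (assumed)


def post_json(url: str, payload: dict) -> dict:
    """POST a JSON payload and decode the JSON reply; needs a running server."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())


class ChatSession:
    """Hypothetical helper that keeps the message history between turns."""

    def __init__(self, model: str, send: Callable[[str, dict], dict] = post_json):
        self.model = model
        self.send = send  # swap in a stub for offline testing
        self.messages: list = []

    def ask(self, text: str) -> str:
        self.messages.append({"role": "user", "content": text})
        payload = {
            "model": self.model,
            "messages": list(self.messages),  # full history, copied
            "stream": False,  # request one JSON object instead of a stream
        }
        reply = self.send(CHAT_URL, payload)["message"]
        # Record the assistant turn so the next request includes it as context.
        self.messages.append({"role": reply["role"], "content": reply["content"]})
        return reply["content"]
```

Against a running server, `chat = ChatSession("llama3.1")` followed by repeated `chat.ask(...)` calls lets later questions refer back to earlier answers.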

Some of the models supported by Ollama

| Model | Parameters | Size | Download |
|---|---|---|---|
| Llama 2 | 7B | 3.8GB | ollama run llama2 |
| Mistral | 7B | 4.1GB | ollama run mistral |
| Dolphin Phi | 2.7B | 1.6GB | ollama run dolphin-phi |
| Phi-2 | 2.7B | 1.7GB | ollama run phi |
| Neural Chat | 7B | 4.1GB | ollama run neural-chat |
| Starling | 7B | 4.1GB | ollama run starling-lm |
| Code Llama | 7B | 3.8GB | ollama run codellama |
| Llama 2 Uncensored | 7B | 3.8GB | ollama run llama2-uncensored |
| Llama 2 13B | 13B | 7.3GB | ollama run llama2:13b |
| Llama 2 70B | 70B | 39GB | ollama run llama2:70b |
| Orca Mini | 3B | 1.9GB | ollama run orca-mini |
| Vicuna | 7B | 3.8GB | ollama run vicuna |
| LLaVA | 7B | 4.5GB | ollama run llava |
| Gemma | 2B | 1.4GB | ollama run gemma:2b |
| Gemma | 7B | 4.8GB | ollama run gemma:7b |
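The Size column tracks parameter count times bits stored per weight. Assuming the default tags use 4-bit Q4_0 quantization, which in GGUF stores each block of 32 weights as 16 bytes of 4-bit values plus a 2-byte scale (4.5 bits per weight), a rough estimate is sketched below. The function name and default are assumptions for illustration; real files also hold metadata and may store some tensors at other precisions.

```python
def estimate_size_gb(params: float, bits_per_weight: float = 4.5) -> float:
    """Approximate size in GB (10**9 bytes) of a quantized model.

    4.5 bits/weight corresponds to GGUF Q4_0: 32 four-bit weights (16 bytes)
    plus one 16-bit scale per block = 18 bytes per 32 weights.
    """
    return params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for name, params in [("7B", 7e9), ("13B", 13e9), ("70B", 70e9)]:
        print(f"{name}: ~{estimate_size_gb(params):.1f} GB")
```

This gives about 7.3 GB for 13B and 39.4 GB for 70B, close to the table. The nominal 7B figure overshoots slightly (about 3.9 GB versus 3.8GB) because Llama 2 7B actually has roughly 6.7 billion parameters.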


© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
