Oliva: a voice-controlled multi-agent product search assistant

General Introduction

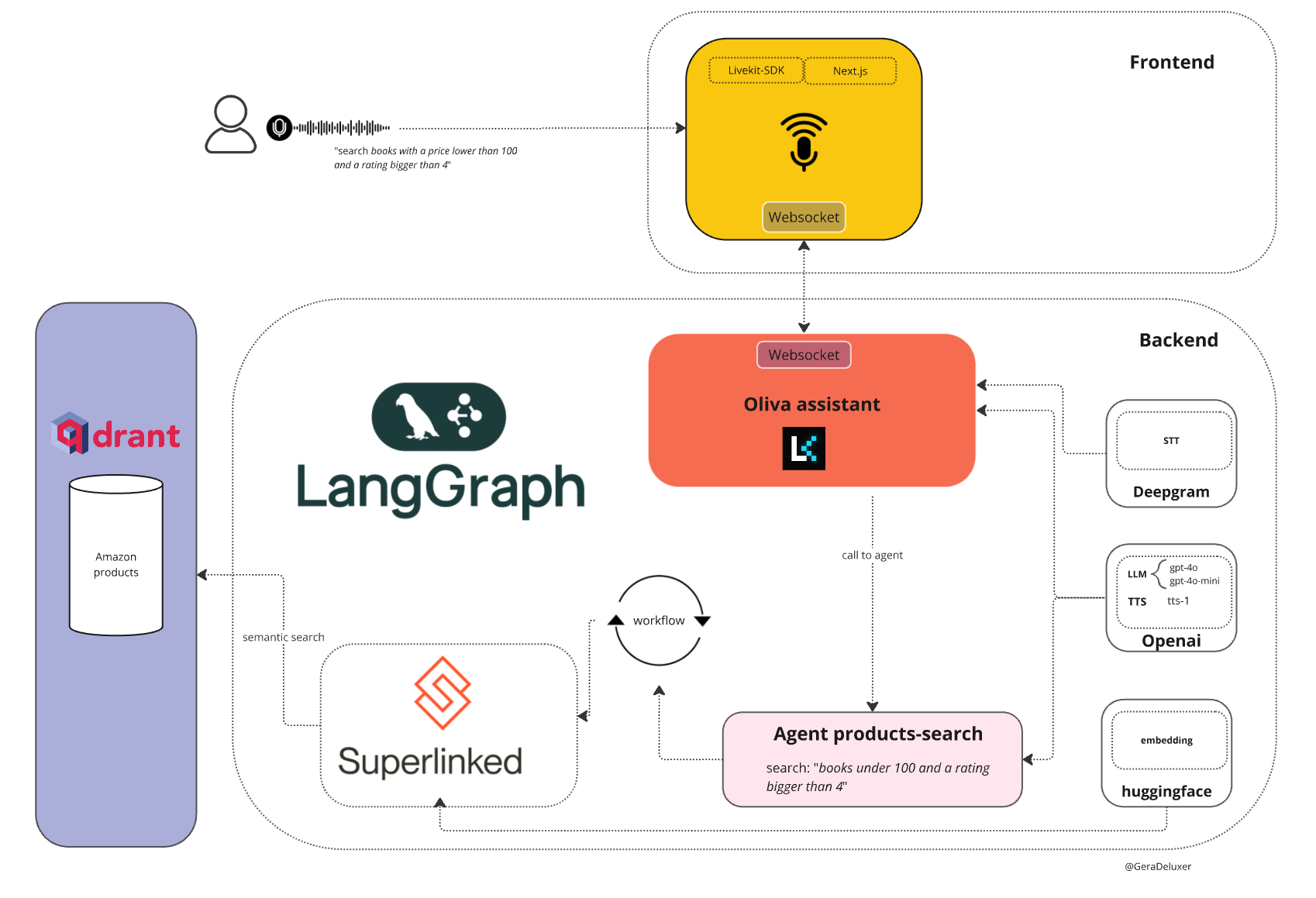

Oliva is an open source multi-agent assistant developed by Deluxer and hosted on GitHub. It helps users search for product information in a Qdrant database through the collaboration of multiple AI agents. Its main feature is voice operation, combined with LangChain and Superlinked for semantic search. Oliva is aimed at developers and researchers: the code is fully open, so users can download, modify, and deploy it. It is both a practical tool and a platform for learning about multi-agent architecture. The project relies on Livekit and Deepgram for real-time voice interaction.

Feature List

- Voice-controlled search: the user gives commands by voice and an agent completes the search task.

- Multi-agent collaboration: multiple AI agents divide up the processing tasks, coordinated by a supervisor agent.

- Semantic search support: built on LangChain and Superlinked, it retrieves content by meaning rather than by exact keyword match.

- Open source code: complete code is provided and users are free to modify and extend the functionality.

- Local or cloud deployment: support for running locally or on a server with high flexibility.

Usage Guide

Oliva is a GitHub-based open source project that requires configuration of the environment and dependencies before use. The following is a detailed installation and usage guide to help you get started quickly.

Installation process

- Preparing the Python Environment

Oliva requires Python 3.12 or later. Check the version:

python --version

If you don't have the right version, go to the Python website and download and install it.
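The same version check can also be done from inside Python, which is handy when several interpreters are installed. The following is a minimal sketch; the helper name `meets_requirement` is made up for illustration, and only the 3.12 minimum comes from the step above.

```python
import sys

# Minimum version stated in the step above.
REQUIRED = (3, 12)


def meets_requirement(version_info=sys.version_info, required=REQUIRED) -> bool:
    """Return True if the (major, minor) version is at least `required`."""
    return tuple(version_info[:2]) >= required


if __name__ == "__main__":
    current = ".".join(str(part) for part in sys.version_info[:3])
    if meets_requirement():
        print(f"Python {current} is new enough for Oliva.")
    else:
        print(f"Python {current} is too old; 3.12 or later is required.")
```

Run it with the interpreter you intend to use for the virtual environment, since `python` and `python3` may point to different versions.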

- Cloning the project

Open https://github.com/Deluxer/oliva, click the "Code" button, copy the HTTPS link, and then run:

git clone https://github.com/Deluxer/oliva.git

You need to install Git first; download it from the Git official website.

- Go to the project directory

After cloning, go into the project folder:

cd oliva

- Creating a Virtual Environment

To avoid dependency conflicts, it is recommended to create a virtual environment:

python -m venv .venv

Activate the environment:

- Windows:

.venv\Scripts\activate

- Mac/Linux:

source .venv/bin/activate

- Installing dependencies

Use the uv tool to sync dependencies:

uv sync

This creates the virtual environment if it does not already exist and installs all dependencies, such as LangChain and Superlinked. If you don't have uv, install it first:

pip install uv

- Configuring the Qdrant Database

Run Qdrant with Docker:

docker run -p 6333:6333 -p 6334:6334 -e QDRANT__SERVICE__API_KEY=your_api_key -v "$(pwd)/qdrant_storage:/qdrant/storage:z" qdrant/qdrant

You need to install Docker first; download it from the Docker official website. Replace your_api_key with a custom key.
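Once the container is up, it helps to confirm that Qdrant answers and accepts the key. The sketch below uses only the Python standard library and calls Qdrant's REST endpoint for listing collections, passing the key in the `api-key` header. It assumes Qdrant is reachable at `http://localhost:6333` (the first port mapped in the command above); the function names are illustrative and not part of Oliva.

```python
import json
import urllib.request

# Assumption: Qdrant's REST API is reachable on the first mapped port.
QDRANT_URL = "http://localhost:6333"


def build_collections_request(base_url: str, api_key: str) -> urllib.request.Request:
    """Build a GET request for Qdrant's collection listing endpoint."""
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/collections",
        headers={"api-key": api_key},
        method="GET",
    )


def list_collections(base_url: str, api_key: str, timeout: float = 5.0) -> list[str]:
    """Return the names of the collections the Qdrant instance reports."""
    request = build_collections_request(base_url, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.load(response)
    return [item["name"] for item in payload["result"]["collections"]]


if __name__ == "__main__":
    try:
        # Use the same key you passed as QDRANT__SERVICE__API_KEY.
        print("Collections:", list_collections(QDRANT_URL, "your_api_key"))
    except OSError as exc:  # URLError and HTTPError are subclasses of OSError
        print("Qdrant is not reachable or rejected the key:", exc)
```

An empty list on a fresh instance is expected; it only means no data has been loaded yet.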

- Setting up a Livekit account

Register at Livekit Cloud to obtain the following information:

LIVEKIT_URL=wss://your-project.livekit.cloud

LIVEKIT_API_KEY=your_key

LIVEKIT_API_SECRET=your_secret

Save these values as environment variables.

- Configuring Environment Variables

Copy the example file and edit it:

cp .env.example .env

In the .env file, fill in the Livekit and Deepgram keys (a Deepgram key requires registering on the Deepgram official website).
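A missing or blank key is a common reason for the assistant failing to start, so a quick check of the .env file can save time. The sketch below parses simple KEY=VALUE lines and reports which required names are empty. The three Livekit names come from the step above; `DEEPGRAM_API_KEY` is an assumed name, so check .env.example for the variable the project actually uses. The helper names are illustrative.

```python
from pathlib import Path

# The Livekit names come from the article. DEEPGRAM_API_KEY is an assumption;
# confirm the real variable name in .env.example.
REQUIRED_VARS = (
    "LIVEKIT_URL",
    "LIVEKIT_API_KEY",
    "LIVEKIT_API_SECRET",
    "DEEPGRAM_API_KEY",  # assumed name, not confirmed by the article
)


def parse_env_text(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines; blank lines and # comments are skipped."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_vars(env, required=REQUIRED_VARS) -> list[str]:
    """Return the required names that are absent or blank in `env`."""
    return [name for name in required if not env.get(name, "").strip()]


if __name__ == "__main__":
    env_file = Path(".env")
    values = parse_env_text(env_file.read_text()) if env_file.exists() else {}
    missing = missing_vars(values)
    print("Missing:", ", ".join(missing) if missing else "none")
```

This parser handles only plain KEY=VALUE lines, which is enough for a sanity check but not a full implementation of the .env format.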

- Populating the database

Follow the tabular-semantic-search-tutorial instructions to set up the data, or unzip the assets/snapshot.zip snapshot and load it into Qdrant.

Main Functions

- Launching the voice assistant

Run it in the project directory:

make oliva-start

This will start the voice assistant service. Make sure Qdrant and Livekit are running.

- Connecting to the front-end interface

Visit Agent Playground and enter your Livekit project credentials to connect to the assistant. Or run it locally:

- Clone the Agent Playground repository:

git clone https://github.com/livekit/agents-playground.git

- Install the dependencies and start, from inside the cloned agents-playground folder:

npm install

npm run start

Enter a voice command in the interface, such as "Search for a product".

- Semantic search

There are two ways to search:

- Use Superlinked:

make agent-search-by-superlinked

- Use JSON files:

make agent-search-by-json

Search results are returned by the agents from the Qdrant database.

Featured Functions

- Voice interaction

Oliva supports entering commands by voice. After startup, click the microphone icon in Agent Playground and say what you want (e.g., "Find a cell phone"). Deepgram converts the speech to text, and the agents process it and return the results. The supervisor agent decides which agent performs the task.

- Multi-agent collaboration

The project uses a graph-based workflow (LangGraph) containing multiple nodes (e.g., search, generate) and conditional edges. The configuration files are in app/agents/langchain/config/, where agent behavior can be adjusted. The core logic is in the app/agents/ directory.

- Custom extensions

Want to add features? Edit the agent code in app/agents/implementations/. For example, a new tool needs to be defined in app/agents/langchain/tools/.
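To make the idea of nodes, conditional edges, and a supervisor more concrete, here is a dependency-free sketch in plain Python. It does not use LangGraph and is not Oliva's code: the node names `search` and `generate` echo the examples above, while the state keys and routing rules are hypothetical and chosen only for illustration.

```python
from typing import Callable

# Hypothetical shared state passed between nodes; keys are illustrative.
State = dict


def search_node(state: State) -> State:
    """Pretend to look up products; a real node would query Qdrant."""
    return {**state, "results": [f"match for '{state['query']}'"]}


def generate_node(state: State) -> State:
    """Turn whatever is in the state into a reply for the user."""
    results = state.get("results")
    reply = "; ".join(results) if results else "How can I help?"
    return {**state, "reply": reply}


def supervisor(state: State) -> str:
    """Conditional edge: choose the next node name from the current state."""
    if "reply" in state:
        return "end"
    if "results" not in state and state.get("query"):
        return "search"
    return "generate"


NODES: dict[str, Callable[[State], State]] = {
    "search": search_node,
    "generate": generate_node,
}


def run(state: State, max_steps: int = 10) -> State:
    """Alternate supervisor and node calls until the supervisor says 'end'."""
    for _ in range(max_steps):
        next_node = supervisor(state)
        if next_node == "end":
            return state
        state = NODES[next_node](state)
    raise RuntimeError("workflow did not finish within max_steps")


if __name__ == "__main__":
    print(run({"query": "cell phone"})["reply"])
```

In a graph framework the supervisor function would be registered as a conditional edge and the other functions as nodes; the loop in `run` stands in for the framework's scheduler.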

Notes

- Make sure the network connection works; Livekit and Deepgram require internet access.

- The project has many dependencies, and initial configuration may require multiple debugging sessions.

- Check GitHub commits to make sure you're using the latest code.

With these steps, you can run Oliva in its entirety and experience the voice-controlled search function.

Application Scenarios

- Product information inquiry

Users ask for product details by voice and Oliva returns results from the Qdrant database, which suits e-commerce scenarios.

- Technical learning

Developers study multi-agent architectures and modify the code to test different workflows.

- Live demo

Demonstrate the voice assistant in meetings to highlight AI collaboration capabilities.

QA

- What should I do if the voice assistant doesn't respond?

Check that the Livekit and Deepgram keys are configured correctly and make sure that the network connection is working.

- What should I do if Qdrant fails to start?

Verify that Docker is running and that ports 6333 and 6334 are not occupied.

- Can it be used offline?

The voice function requires an internet connection; the search function can run offline if the data is stored locally.
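For the Qdrant question above, whether ports 6333 and 6334 are already taken can be checked with a few lines of standard-library Python. This is a minimal sketch that assumes the ports are checked on the local machine (127.0.0.1); the helper name `port_is_free` is illustrative.

```python
import socket


def port_is_free(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if nothing accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(1.0)
        # connect_ex returns 0 when a listener accepted the connection.
        return sock.connect_ex((host, port)) != 0


if __name__ == "__main__":
    for port in (6333, 6334):
        status = "free" if port_is_free(port) else "in use"
        print(f"Port {port} is {status}.")
```

Run it before starting the container: if a port shows as in use, stop the other process or map Qdrant to different host ports in the docker command.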

© Copyright notice

This article is copyrighted by AI Sharing Circle; please do not reproduce it without permission.
