Effective Use of Language Models in Obsidian Notes

Today I read an article on Hacker News about a new Obsidian plugin that integrates with ChatGPT. There are quite a few tools like this on the market, and I'm happy to see the different ways people are applying them to Obsidian. They can help you make connections between notes and add depth to your note content. Some commenters feel that these tools replace work you should be doing yourself, but I think they give you new and amazing capabilities.

Talking to notes

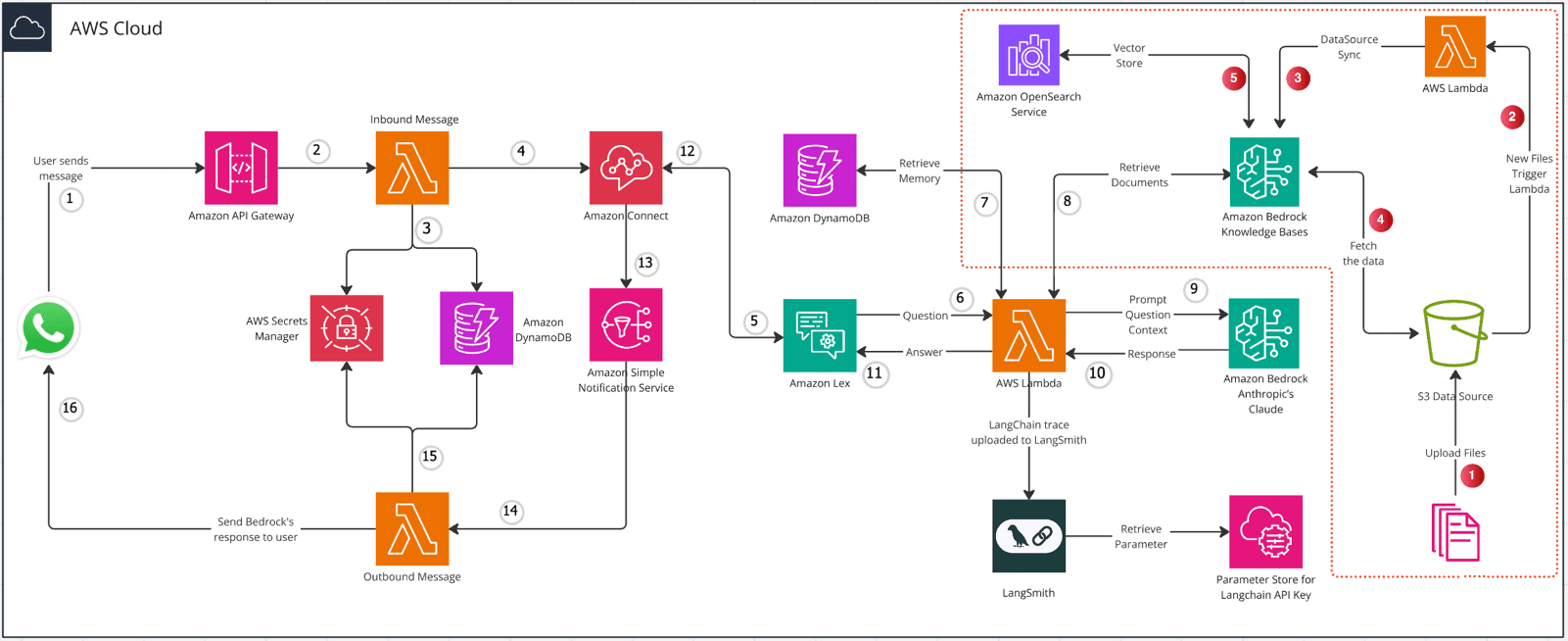

Perhaps the most direct and most important thing you want to do is have a conversation with the contents of your notes and ask questions to gain insight. It would be very convenient to simply point the model at all of your notes and be done. But most models have a limited context window and can't take in every note at once.

When you have a question, not all notes are relevant. You need to find the relevant parts and submit only those to the model. Obsidian has a search function, but it only matches exact words and phrases. What we need to search for are concepts. This is where an embedding index comes in. Each piece of text is converted into a vector that represents its meaning, so passages about the same idea end up close together even when they use different words. Creating such an index is actually quite simple.
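To make the idea concrete, here is a minimal sketch of how an embedding index can rank notes by meaning rather than by exact words. The three-dimensional vectors are made up for illustration. A real embedding model returns vectors with hundreds or thousands of dimensions. Cosine similarity is a common way to compare them and is assumed here. The names `EmbeddedChunk`, `cosineSimilarity`, and `rankBySimilarity` are hypothetical.

```typescript
// A note chunk paired with its (made-up) embedding vector.
interface EmbeddedChunk {
  text: string;
  vector: number[];
}

// Cosine similarity: 1 means the vectors point the same way, 0 means unrelated.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error("vectors must have the same dimension");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Return the chunks sorted from most to least similar to the query vector.
function rankBySimilarity(query: number[], chunks: EmbeddedChunk[]): EmbeddedChunk[] {
  return [...chunks].sort(
    (x, y) => cosineSimilarity(query, y.vector) - cosineSimilarity(query, x.vector),
  );
}

// Hypothetical vectors: the first two notes share a concept but no words.
const embeddedNotes: EmbeddedChunk[] = [
  { text: "Bought a new road bike for commuting", vector: [0.9, 0.1, 0.0] },
  { text: "Cycling to the office saves time", vector: [0.8, 0.2, 0.1] },
  { text: "Recipe for sourdough bread", vector: [0.0, 0.1, 0.9] },
];

// Pretend embedding of the question "How do I get to work?"
const queryVector = [0.85, 0.15, 0.05];
const ranked = rankBySimilarity(queryVector, embeddedNotes);
console.log(ranked.map((c) => c.text));
```

Neither of the top two results contains the word "work". They rank highest only because their vectors point in nearly the same direction as the question's.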

Building an indexer

When you create an Obsidian plugin, you can have it perform certain actions at startup and others when you run commands, open notes, or do other things in Obsidian. So we want the plugin to index your notes right from the start. It should also save its progress so that it doesn't need to regenerate the index every time. Let's look at a code sample that indexes a note. Here I'm using [Llama Index]; [LangChain] is also an excellent choice.

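```typescript
import { VectorStoreIndex, serviceContextFromDefaults, storageContextFromDefaults, MarkdownReader } from "llamaindex";

// Split documents into chunks of 256 tokens before embedding them
const service_context = serviceContextFromDefaults({ chunkSize: 256 });

// Use Llama Index's default storage, persisted to the ./storage directory
const storage_context = await storageContextFromDefaults({ persistDir: "./storage" });

// Path of the Markdown note to index, passed on the command line
const mdpath = process.argv[2];

// Read the note with the Markdown-aware reader
const mdreader = new MarkdownReader();
const thedoc = await mdreader.loadData(mdpath);
```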

First, we configure the service context so that documents are split into chunks of 256 tokens. Next, we set up a storage context. It uses the simple in-memory vector store that comes with Llama Index, though Chroma DB is also a popular choice. Setting `persistDir` means everything we index is also saved to disk. Then we get the file path from the command line, initialize a Markdown reader, and read the file. Llama Index understands Markdown, so it can read and index the content correctly. It also supports PDFs, text files, Notion documents, and more. When the content is indexed, the index stores more than the words. It also stores an embedding of each chunk, which captures the chunk's meaning and how it relates to other text.
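The `chunkSize: 256` setting means each note is cut into pieces of roughly 256 tokens before it is embedded. The library's splitter works on model tokens and tries to keep sentences together. The sketch below is a simplified stand-in for illustration, not Llama Index's actual algorithm. It assumes whitespace-separated words count as tokens, and it adds an optional overlap between consecutive chunks. The `chunkText` helper and its `overlap` parameter are hypothetical.

```typescript
// Hypothetical, simplified chunker: treats whitespace-separated words as tokens.
// Real splitters count model tokens and try to break at sentence boundaries.
function chunkText(text: string, chunkSize = 256, overlap = 0): string[] {
  if (chunkSize <= 0) throw new Error("chunkSize must be positive");
  if (overlap < 0 || overlap >= chunkSize) {
    throw new Error("overlap must be in [0, chunkSize)");
  }
  const words = text.split(/\s+/).filter((w) => w.length > 0);
  const chunks: string[] = [];
  const step = chunkSize - overlap; // how far each new chunk starts after the previous one
  for (let start = 0; start < words.length; start += step) {
    chunks.push(words.slice(start, start + chunkSize).join(" "));
    // Stop once the current chunk reaches the end of the text.
    if (start + chunkSize >= words.length) break;
  }
  return chunks;
}

// A fake 600-word note splits into three chunks of at most 256 words.
const sampleNote = Array.from({ length: 600 }, (_, i) => `word${i}`).join(" ");
const noteChunks = chunkText(sampleNote, 256);
console.log(noteChunks.map((c) => c.split(" ").length)); // [ 256, 256, 88 ]
```

Small chunks keep each retrieved snippet focused on one idea. The trade-off is that a single chunk may lose context that sits just outside it, and an overlap is one common way to soften that.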

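```typescript
// Split the note into chunks, embed each chunk, and store the results via the persisted storage context
await VectorStoreIndex.fromDocuments(thedoc, { storageContext: storage_context, serviceContext: service_context });
```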

By default, this step uses one of OpenAI's services to create the embeddings. That service is separate from ChatGPT: it uses a different model (an embedding model) and is a different product. LangChain also offers ways to do this locally, albeit a little more slowly, and Ollama can generate embeddings too. You could also run these services on a fast self-hosted instance in the cloud and shut the instance down once indexing is complete.
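If you would rather keep the embedding step local, Ollama exposes an HTTP endpoint for it. The sketch below makes four assumptions. It expects an Ollama server at its default address, `http://localhost:11434`. It uses the `/api/embeddings` endpoint, which takes `model` and `prompt` and returns an `embedding` array. It assumes an embedding model such as `nomic-embed-text` has already been pulled. It passes in the fetch function, which lets the code be exercised without a running server. The sketch only shows the request and does not show how to plug it into Llama Index. `embedWithOllama` and `FetchLike` are hypothetical names.

```typescript
// Minimal shape of the fetch function we need; Node 18+'s global fetch fits it.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Assumption: Ollama's /api/embeddings endpoint accepts { model, prompt }
// and responds with { embedding: number[] }.
async function embedWithOllama(
  text: string,
  fetchFn: FetchLike,
  model = "nomic-embed-text",
  baseUrl = "http://localhost:11434",
): Promise<number[]> {
  const res = await fetchFn(`${baseUrl}/api/embeddings`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ model, prompt: text }),
  });
  if (!res.ok) throw new Error(`Ollama returned HTTP ${res.status}`);
  const data = (await res.json()) as { embedding?: unknown };
  if (!Array.isArray(data.embedding)) {
    throw new Error("response did not contain an embedding array");
  }
  return data.embedding as number[];
}

// Usage against a real server (Node 18+):
//   const vector = await embedWithOllama("Cycling to the office", fetch);
```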

Search Notes

We have now indexed one file. Obsidian can list every file in the vault, so we can repeat this process for each one. Because the index is persisted, each file only needs to be processed once. Now, how do we ask a question? We need code that searches our notes for the relevant parts, submits them to the model, and uses that information to produce an answer.
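Persisting the index is what lets each file be processed only once. Before moving on to asking questions, here is one simple way to decide which files still need work. Keep a small manifest of each file's last-modified time, and re-index only files that are new or have changed since the last run. This is hypothetical bookkeeping, not part of Llama Index or the Obsidian API. In a plugin, the file list and modification times could come from the vault (for example `TFile.stat.mtime`), and the manifest could be saved next to the index.

```typescript
// Hypothetical record of a note: its vault path and last-modified time (ms since epoch).
interface NoteInfo {
  path: string;
  mtime: number;
}

// Manifest saved next to the index: path -> mtime at the time the note was indexed.
type Manifest = Record<string, number>;

// Decide which notes need (re)indexing and which indexed paths no longer exist.
function planIndexing(notes: NoteInfo[], manifest: Manifest) {
  const toIndex = notes.filter(
    (n) => manifest[n.path] === undefined || manifest[n.path] < n.mtime,
  );
  const current = new Set(notes.map((n) => n.path));
  const removed = Object.keys(manifest).filter((p) => !current.has(p));
  return { toIndex, removed };
}

// After indexing succeeds, record the new modification times and drop deleted notes.
function updateManifest(manifest: Manifest, indexed: NoteInfo[], removed: string[]): Manifest {
  const next: Manifest = { ...manifest };
  for (const n of indexed) next[n.path] = n.mtime;
  for (const p of removed) delete next[p];
  return next;
}

const vaultNotes: NoteInfo[] = [
  { path: "daily/2024-01-01.md", mtime: 1000 },
  { path: "projects/plugin.md", mtime: 5000 },
  { path: "ideas.md", mtime: 3000 },
];
const savedManifest: Manifest = {
  "daily/2024-01-01.md": 1000, // unchanged since last run
  "projects/plugin.md": 4000, // edited since last run
  "old-note.md": 2000, // deleted from the vault
};

const plan = planIndexing(vaultNotes, savedManifest);
console.log(plan.toIndex.map((n) => n.path)); // [ 'projects/plugin.md', 'ideas.md' ]
console.log(plan.removed); // [ 'old-note.md' ]
```

Comparing timestamps is cheap, but it re-indexes a note whose timestamp changed even if its content did not. Hashing the file content would avoid that at the cost of reading every file.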

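```typescript
import { VectorStoreIndex, storageContextFromDefaults } from "llamaindex";

// Reopen the index that was persisted to ./storage during indexing
const storage_context = await storageContextFromDefaults({ persistDir: "./storage" });
const index = await VectorStoreIndex.init({ storageContext: storage_context });

// Retrieve the 5 chunks most similar to the question
const ret = index.asRetriever();
ret.similarityTopK = 5;

// The question, passed on the command line
const prompt = process.argv[2];
const response = await ret.retrieve(prompt);

// Put the retrieved text into a system prompt for the model
const systemPrompt = `Use the following text to help come up with an answer to the prompt: ${response.map(r => r.node.toJSON().text).join(" - ")}`;
```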

In this code, we load the persisted index from the storage directory. The call to `ret.retrieve(prompt)` uses the prompt to find the most relevant snippets of our notes and returns their text. Setting `similarityTopK` to 5 limits this to the five best matches, so we get five text snippets back. From this raw material, we build a system prompt that tells the model to use the snippets when it answers our question.
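Stripped of the library calls, the retrieval step does two things. It keeps the K highest-scoring chunks, then joins their text into the system prompt. The sketch below assumes the chunks already carry similarity scores, which the Llama Index retriever computes for you. It reuses the article's prompt wording and `" - "` separator. The `ScoredChunk` type and both function names are hypothetical.

```typescript
// Hypothetical: a chunk of note text with its similarity score for the question.
interface ScoredChunk {
  text: string;
  score: number;
}

// Keep the k chunks with the highest similarity scores, best first.
function topK(chunks: ScoredChunk[], k: number): ScoredChunk[] {
  return [...chunks].sort((a, b) => b.score - a.score).slice(0, k);
}

// Build the system prompt like the retrieval code does: snippets joined with " - ".
function buildSystemPrompt(chunks: ScoredChunk[]): string {
  const context = chunks.map((c) => c.text).join(" - ");
  return `Use the following text to help come up with an answer to the prompt: ${context}`;
}

const scored: ScoredChunk[] = [
  { text: "Obsidian stores notes as Markdown files.", score: 0.82 },
  { text: "My sourdough starter needs feeding.", score: 0.11 },
  { text: "Plugins are written in TypeScript.", score: 0.77 },
];

const best = topK(scored, 2);
console.log(buildSystemPrompt(best));
// Use the following text to help come up with an answer to the prompt: Obsidian stores notes as Markdown files. - Plugins are written in TypeScript.
```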

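```typescript
// Assumption: the npm library mentioned below, presumed here to be the ollama-node package
import { Ollama } from "ollama-node";

const ollama = new Ollama();

// Use the llama2 model, downloaded beforehand with `ollama pull llama2`
await ollama.setModel("llama2");

// Give the model the retrieved note snippets as its system prompt
ollama.setSystemPrompt(systemPrompt);

// Ask the question and wait for the full answer
const genout = await ollama.generate(prompt);
```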

Next, we start working with the model. I'm using a library I published on [npm] a few days ago. I set the model to `llama2`, which I had already downloaded to my machine with the command `ollama pull llama2`. You can experiment with different models to find the one whose answers work best for you.
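If you prefer not to depend on a wrapper library, you can make the same call directly against Ollama's HTTP API. This sketch assumes a local server at `http://localhost:11434` and its `/api/generate` endpoint. That endpoint accepts `model`, `prompt`, an optional `system` prompt, and `stream: false`, which returns a single JSON reply whose `response` field holds the answer. As before, the fetch function is passed in so the code can be tested without a server. `askOllama` and `GenerateFetch` are hypothetical names.

```typescript
// Minimal shape of the fetch function we need; Node 18+'s global fetch fits it.
type GenerateFetch = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Assumption: Ollama's /api/generate endpoint; with stream: false it returns
// one JSON object whose `response` field is the full answer.
async function askOllama(
  question: string,
  systemPrompt: string,
  fetchFn: GenerateFetch,
  model = "llama2",
  baseUrl = "http://localhost:11434",
): Promise<string> {
  const res = await fetchFn(`${baseUrl}/api/generate`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ model, prompt: question, system: systemPrompt, stream: false }),
  });
  if (!res.ok) throw new Error(`Ollama returned HTTP ${res.status}`);
  const data = (await res.json()) as { response?: unknown };
  if (typeof data.response !== "string") throw new Error("no response text in reply");
  return data.response;
}

// Usage against a real server (Node 18+):
//   const answer = await askOllama("What plugins did I write?", systemPrompt, fetch);
```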

To get quick answers, you should choose a small model. At the same time, the model's context window must be large enough to hold all the text snippets. I retrieve up to 5 snippets of 256 tokens each, and the system prompt includes all of them. With that in place, you can simply ask a question and get an answer within seconds.
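A quick back-of-the-envelope check shows why these settings fit. Five snippets of at most 256 tokens come to at most 1,280 tokens of note text. The instructions, the question, and the answer have to fit into the rest of the context window. Llama 2 models were trained with a 4,096-token context, but the window Ollama actually allocates depends on its `num_ctx` setting. The helper below makes the check explicit. The overhead and answer sizes are illustrative assumptions, and `remainingTokens` is a hypothetical name.

```typescript
// Hypothetical budget check: do the snippets, instructions, question,
// and room for the answer all fit in the model's context window?
interface ContextBudget {
  contextWindow: number; // tokens the model can attend to
  snippetCount: number; // similarityTopK
  snippetTokens: number; // chunkSize
  overheadTokens: number; // instructions + question (estimate)
  answerTokens: number; // space reserved for the reply (estimate)
}

// Tokens left over; a negative result means the prompt will not fit.
function remainingTokens(b: ContextBudget): number {
  const used = b.snippetCount * b.snippetTokens + b.overheadTokens + b.answerTokens;
  return b.contextWindow - used;
}

const budget: ContextBudget = {
  contextWindow: 4096,
  snippetCount: 5,
  snippetTokens: 256,
  overheadTokens: 100,
  answerTokens: 500,
};
console.log(remainingTokens(budget)); // 2216
```

Raising `similarityTopK` or `chunkSize` eats into that margin quickly. For example, ten 256-token snippets would already use 2,560 tokens before the question or answer is counted.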

Great: now our Obsidian plugin can display the answers.

What else can we do?

You could also use a model to simplify the text of a note. Or it could find the best keywords for a note and add them to its metadata, which helps you link your notes together. I've also tried generating 10 useful questions and answers from a note and sending them to [Anki]. You may want to try different models and prompts for these different purposes. It is easy to change the prompts, and even the model weights, to suit each task.
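For the Anki idea, one approach is to ask the model to answer in a fixed `Q:`/`A:` layout. You can then turn its reply into a tab-separated file. Anki's text importer accepts such a file, with the front of each card in the first column and the back in the second. The `Q:`/`A:` reply format and the `toAnkiTsv` helper are assumptions for illustration. Models do not always follow a requested format exactly, so the parser skips any line it does not recognize.

```typescript
// Replace tabs and line breaks, which would break the TSV layout, with spaces.
function cleanField(s: string): string {
  return s.replace(/[\t\r\n]+/g, " ");
}

// Hypothetical parser: turns a model reply written as
//   Q: question
//   A: answer
// into tab-separated lines (front<TAB>back) for Anki's text importer.
function toAnkiTsv(reply: string): string {
  const rows: string[] = [];
  let pendingQuestion: string | null = null;
  for (const rawLine of reply.split("\n")) {
    const line = rawLine.trim();
    if (line.startsWith("Q:")) {
      pendingQuestion = line.slice(2).trim();
    } else if (line.startsWith("A:") && pendingQuestion !== null) {
      const answer = line.slice(2).trim();
      rows.push(`${cleanField(pendingQuestion)}\t${cleanField(answer)}`);
      pendingQuestion = null;
    }
    // Any other line (preamble, blank lines) is ignored.
  }
  return rows.join("\n");
}

const modelReply = [
  "Here are your flashcards:",
  "Q: What does an embedding capture?",
  "A: The meaning of a piece of text.",
  "",
  "Q: What does similarityTopK control?",
  "A: How many snippets the retriever returns.",
].join("\n");

console.log(toAnkiTsv(modelReply));
```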

I hope this post has given you some insight into how to build your next great plugin for Obsidian or any other note-taking tool. As you can see at [ollama.com], using the latest local AI tools is a breeze, and I'd love for you to show me your creativity.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle; please do not reproduce without permission.
