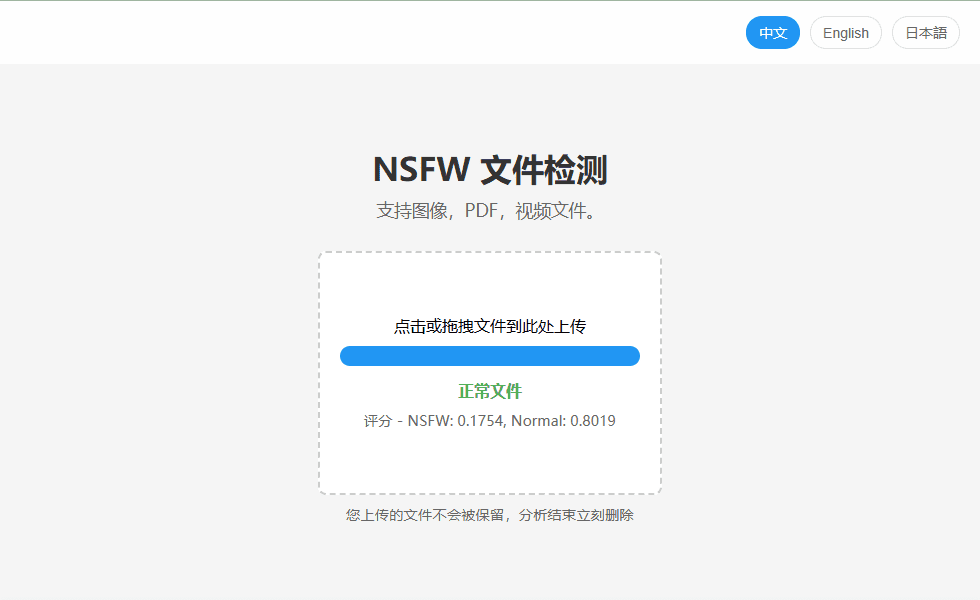

NSFW Detector: Detects if a file contains NSFW content to protect data security.

General Introduction

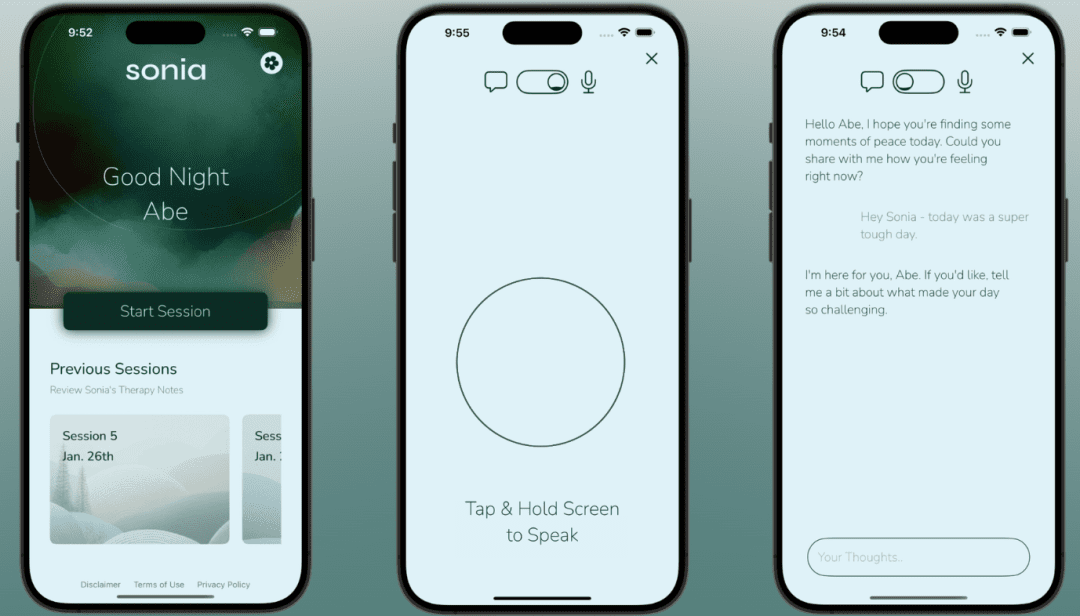

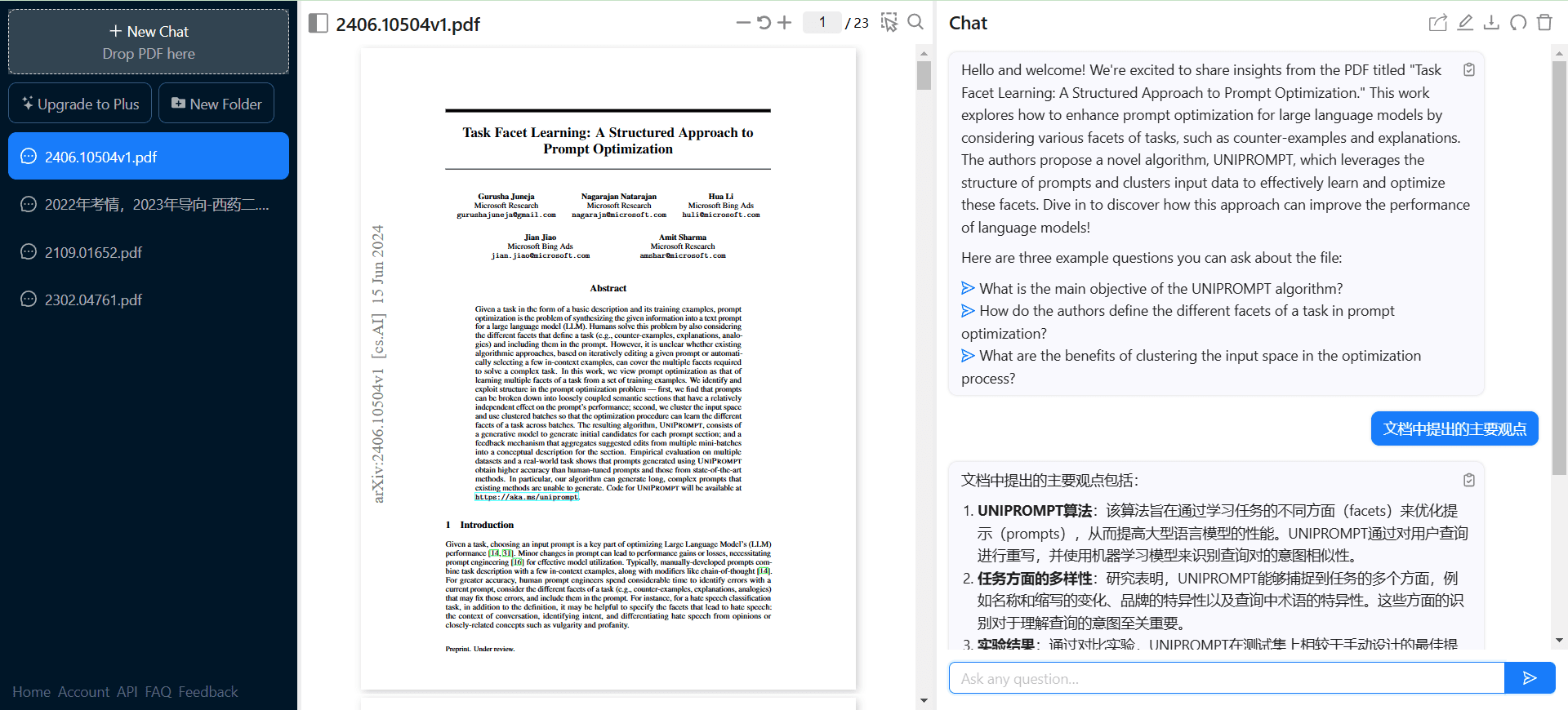

NSFW Detector is an AI-based tool for detecting NSFW (not safe for work) content in images, videos, PDF files, and other files. It uses the Falconsai/nsfw_image_detection model, which is based on Google's vit-base-patch16-224-in21k model. NSFW Detector runs on CPU without a GPU, so it is suitable for most servers. It exposes its detection service through an API for easy integration with other applications, and it supports Docker deployment, including distributed deployment. The tool offers high accuracy, and because it can run locally, files do not have to leave your own server, which protects user data.

Feature List

- AI detection: Provides highly accurate detection of NSFW content based on AI models.

- Multi-file type support: Supports detection of images, videos, PDF files, and files inside compressed archives.

- CPU operation: No GPU is required, so it works on most servers.

- API service: Detection is provided through an API for easy integration with other applications.

- Docker deployment: Supports Docker deployment, including distributed deployments.

- Local operation: The detection process runs locally, which protects user data.

Usage Guide

Installation and Deployment

- Docker Deployment:

- Start the API server:

bash

docker run -d -p 3333:3333 --name nsfw-detector vxlink/nsfw_detector:latest

- If you need to detect files by their local path on the server, mount that path into the container:

bash

docker run -d -p 3333:3333 -v /path/to/files:/path/to/files --name nsfw-detector vxlink/nsfw_detector:latest
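After the container starts, the service may not answer immediately. The following is a minimal sketch of a readiness check, assuming the built-in web interface at http://localhost:3333 returns a success status once the service is up. The names `wait_for_service` and `PROBE_CMD` are hypothetical helpers introduced for illustration and are not part of NSFW Detector.

```bash
# Hypothetical helper: poll a URL until it answers or the attempts run out.
# PROBE_CMD can be overridden (for example in tests); by default it uses curl.
wait_for_service() {
  local url="$1" attempts="${2:-30}" delay="${3:-2}"
  local probe="${PROBE_CMD:-curl -fsS -o /dev/null --max-time 5}"
  local i=1
  while [ "$i" -le "$attempts" ]; do
    # $probe is intentionally unquoted so that its flags are split into words.
    if $probe "$url" 2>/dev/null; then
      echo "ready after $i attempt(s)"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "not ready after $attempts attempt(s)" >&2
  return 1
}
```

For example, `wait_for_service http://localhost:3333 30 2` would try up to 30 times, two seconds apart, before giving up.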


Using APIs for Content Inspection

- Detecting image files:

curl -X POST -F "file=@/path/to/image.jpg" http://localhost:3333/check

- Detecting local file paths:

curl -X POST -F "path=/path/to/image.jpg" http://localhost:3333/check
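The two requests above differ only in the form field: `file=@...` makes curl upload the file contents, while `path=...` sends only a path string that the server itself must be able to read (for example through the mounted volume). A small sketch that wraps both variants might look like the following. `nsfw_form_field`, `nsfw_check`, and the `NSFW_API` variable are hypothetical names, and because the response format is not described here, the wrapper simply prints whatever the server returns.

```bash
# Hypothetical helper: build the form field for the /check endpoint.
#   upload -> curl reads the local file and sends its contents
#   path   -> only the path string is sent; the server must be able to read it
nsfw_form_field() {
  case "$1" in
    upload) printf 'file=@%s\n' "$2" ;;
    path)   printf 'path=%s\n' "$2" ;;
    *)      echo "unknown mode: $1 (expected upload or path)" >&2; return 2 ;;
  esac
}

# Hypothetical wrapper around the curl commands shown above.
# NSFW_API defaults to the local server from the Docker examples.
nsfw_check() {
  local field
  field="$(nsfw_form_field "$1" "$2")" || return $?
  curl -sS -X POST -F "$field" "${NSFW_API:-http://localhost:3333}/check"
}
```

With these definitions, `nsfw_check upload ./image.jpg` uploads a file from the client, and `nsfw_check path /path/to/files/image.jpg` asks the server to read a file it already has access to.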

Testing using the built-in web interface

- Visit the following address:

http://localhost:3333

Configuration File

- Edit the configuration file:

- Create a file named config in the /tmp directory and configure the behavior of the detector as needed.

- Configuration example:

bash

nsfw_threshold=0.5

ffmpeg_max_frames=100

ffmpeg_max_timeout=30
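As a sketch, the configuration file could be generated by a small script that checks the values before writing them. The validation rules are assumptions inferred only from the key names (a threshold between 0 and 1, positive integers for the two ffmpeg settings); the article does not specify the valid ranges. `write_nsfw_config` is a hypothetical helper, and it takes the target directory as an argument so it can be tried outside /tmp.

```bash
# Hypothetical helper: write a detector config file after basic sanity checks.
# Assumption (inferred from the key names, not stated in the article):
# nsfw_threshold is a score between 0 and 1; the ffmpeg_* values are positive integers.
write_nsfw_config() {
  local dir="$1" threshold="$2" max_frames="$3" timeout="$4" n

  # awk performs the floating-point range check that sh arithmetic cannot.
  if ! awk -v t="$threshold" \
      'BEGIN { exit !(t ~ /^[0-9]*\.?[0-9]+$/ && t >= 0 && t <= 1) }'; then
    echo "nsfw_threshold must be between 0 and 1, got: '$threshold'" >&2
    return 1
  fi

  for n in "$max_frames" "$timeout"; do
    case "$n" in
      ''|0|*[!0-9]*)
        echo "expected a positive integer, got: '$n'" >&2
        return 1 ;;
    esac
  done

  cat > "$dir/config" <<EOF
nsfw_threshold=$threshold
ffmpeg_max_frames=$max_frames
ffmpeg_max_timeout=$timeout
EOF
}
```

Calling `write_nsfw_config /tmp 0.5 100 30` reproduces the configuration example above.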


Performance Requirements

- Up to 2GB of RAM is required to run the model.

- When processing a large number of requests at the same time, more memory may be required.

- Supported architectures: x86_64, ARM64.

Supported file types

- Images: supported

- Videos: supported

- PDF files: supported

- Files inside compressed archives: supported

Public API

If you don't want to deploy it yourself, you can use the public API service provided by vx.link:

curl -X POST -F "file=@/path/to/image.jpg" https://vx.link/public/nsfw

Note that the public API has a request rate limit of 30 requests per minute.
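A limit of 30 requests per minute works out to at most one request every 60 / 30 = 2 seconds. The sketch below paces a batch of uploads accordingly. `min_interval_seconds` and `check_all_public` are hypothetical helpers, and since the article does not describe how the service responds when the limit is exceeded, the loop only spaces requests out and does not handle rejected requests.

```bash
# Smallest whole number of seconds between requests that stays within
# a per-minute limit (rounded up so the limit is never exceeded).
min_interval_seconds() {
  local per_minute="$1"
  echo $(( (60 + per_minute - 1) / per_minute ))
}

# Hypothetical batch loop: send each file to the public API,
# pausing between requests to respect the 30 requests/minute limit.
check_all_public() {
  local interval f
  interval="$(min_interval_seconds 30)"
  for f in "$@"; do
    curl -sS -X POST -F "file=@$f" https://vx.link/public/nsfw
    echo
    sleep "$interval"
  done
}
```

For example, `check_all_public ./images/*.jpg` would submit each matching file with a two-second pause after every request.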

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
