Key RAG technical terms you should know, explained

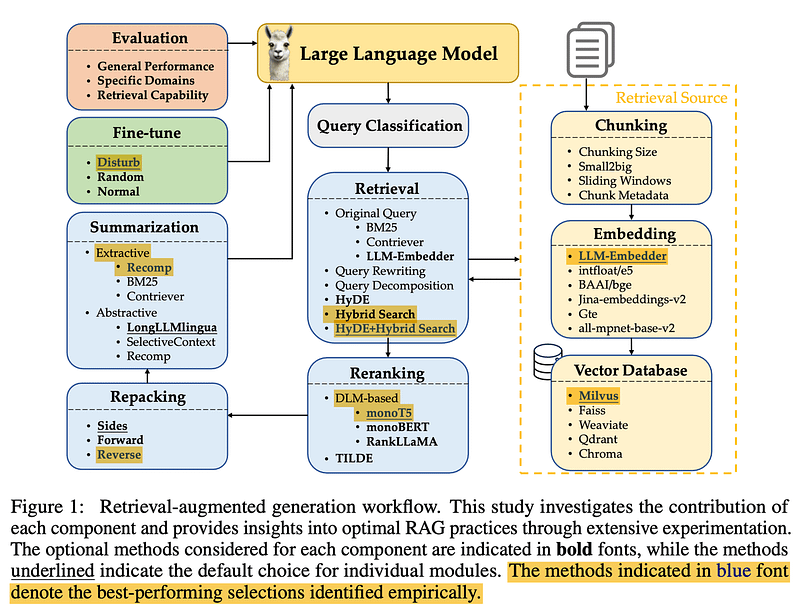

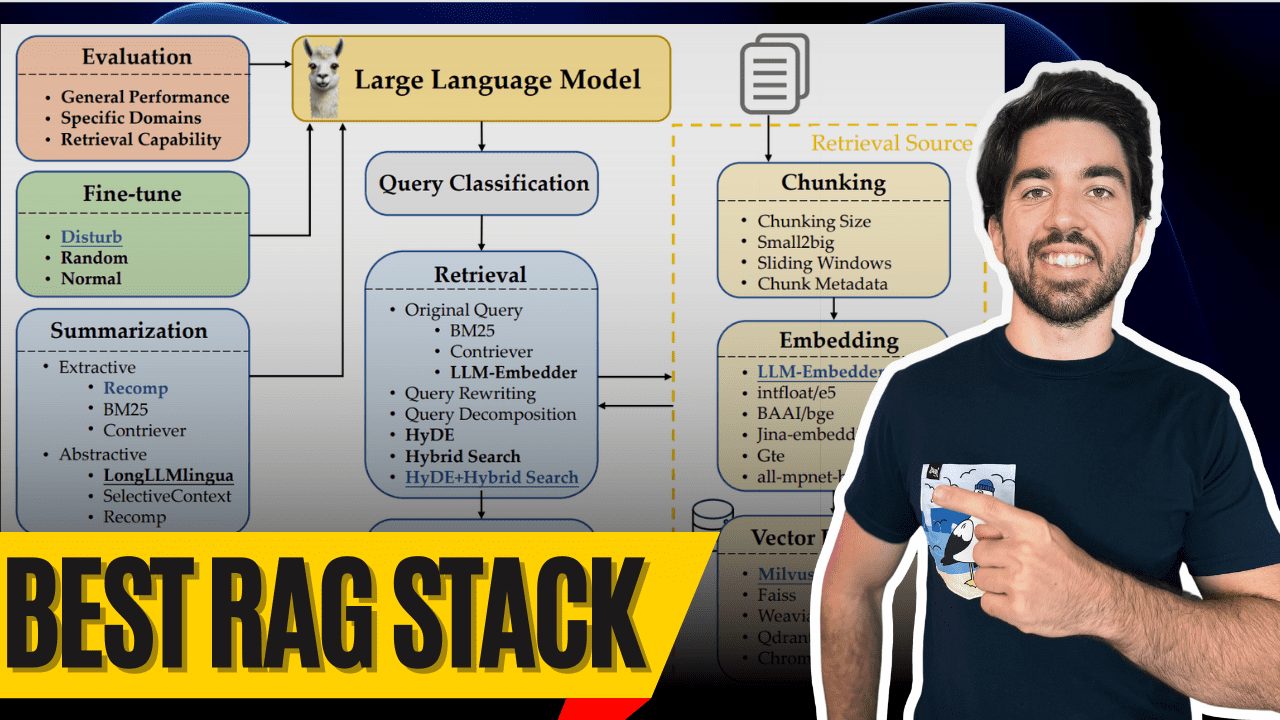

Good morning, everyone! I'm Louis-Francois, co-founder and CTO of Towards AI, and today we're going to dive into what is probably the best Retrieval-Augmented Generation (RAG) stack available today, thanks to the great research of Wang et al. (2024). Their work offers valuable insights into building an optimal RAG system, and I'm here to break it down for you.

So what makes a truly top-notch RAG system? Its components, of course! Let's look at the best components and how they work, so you too can build a top-notch RAG system, with a multimodal Easter egg at the end.

Query Classification

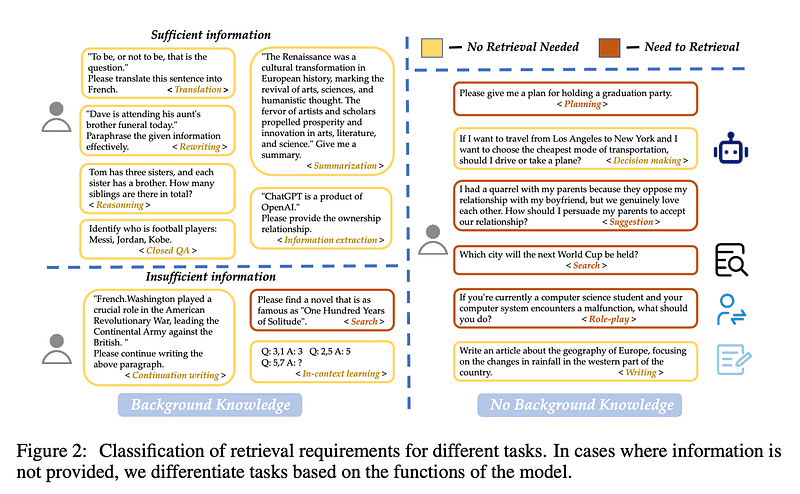

Let's start with query classification. Not every query needs retrieval, because the large language model (LLM) sometimes already knows the answer. For example, if you ask "Who is Lionel Messi?", the LLM can answer directly, with no retrieval needed!

Wang et al. grouped tasks into 15 categories, based on whether a query provides enough information on its own or needs retrieval. They then trained a binary classifier to separate the two: tasks labeled "sufficient" don't need retrieval, while tasks labeled "insufficient" do. In the image above, yellow means no retrieval is needed, and red means documents need to be retrieved!
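To make the routing idea concrete, here is a minimal sketch of a query classifier that gates retrieval. It is not the classifier from the paper. It uses a tiny hand-written naive Bayes model and a handful of hypothetical training queries so the example runs without any model downloads. The `retrieve` and `generate` callables are hypothetical placeholders for your own retriever and LLM call.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase whitespace tokenizer that drops surrounding punctuation."""
    words = (w.strip("?.,!").lower() for w in text.split())
    return [w for w in words if w]


class NaiveBayesQueryClassifier:
    """Toy binary classifier: 'sufficient' (answer directly) vs 'insufficient' (retrieve)."""

    def __init__(self):
        self.class_counts = Counter()  # number of training queries per label
        self.word_counts = {}          # label -> Counter of word frequencies
        self.vocab = set()

    def fit(self, queries, labels):
        for query, label in zip(queries, labels):
            self.class_counts[label] += 1
            counts = self.word_counts.setdefault(label, Counter())
            for word in tokenize(query):
                counts[word] += 1
                self.vocab.add(word)
        return self

    def predict(self, query):
        total = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n in self.class_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(n / total)  # log prior
            for word in tokenize(query):
                # Laplace (add-one) smoothing so unseen words don't zero out the score
                score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


def answer(query, classifier, retrieve, generate):
    """Only call the retriever when the classifier says the query needs it."""
    if classifier.predict(query) == "insufficient":
        return generate(query, retrieve(query))
    return generate(query, [])


# Hypothetical toy training data; a real system would use many labeled queries.
train_queries = [
    "Translate this sentence into French",
    "Rewrite this paragraph more formally",
    "Summarize the text below",
    "What did our company report in its latest earnings call",
    "What is the refund policy in our internal handbook",
    "Which documents mention the new security policy",
]
train_labels = ["sufficient"] * 3 + ["insufficient"] * 3
clf = NaiveBayesQueryClassifier().fit(train_queries, train_labels)
```

The key design point is that the classifier runs before the retriever. Queries classified as "sufficient" skip retrieval entirely, which saves latency and cost.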

Chunking

Next: chunking. The challenge here is finding the optimal chunk size for your data. Too long? You'll add unnecessary noise and cost. Too short? You'll miss out on contextual information.

Wang et al. found that chunk sizes between 256 and 512 tokens work best. Keep in mind, though, that this depends on your type of data, so be sure to run your own evaluation! Pro tip: use small2big (search over small chunks, then pass the larger chunks that contain them to the generator), or try a sliding window that overlaps tokens between chunks.
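Both tips can be sketched in a few lines. This is a minimal sketch under two assumptions. First, the text has already been tokenized into a list, so whitespace tokens stand in for real model tokens. Second, the default sizes (256 with 32 overlap, and 512 or 128 for small2big) are illustrative and not values prescribed by the paper. The lexical matching in `small2big_retrieve` is a toy stand-in for embedding similarity.

```python
def sliding_window_chunks(tokens, chunk_size=256, overlap=32):
    """Split a token list into fixed-size chunks that share `overlap` tokens."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    if not tokens:
        return []
    step = chunk_size - overlap
    # Stop once a window would contain only tokens already covered by the previous one.
    return [tokens[start:start + chunk_size]
            for start in range(0, max(len(tokens) - overlap, 1), step)]


def small2big_pairs(tokens, big_size=512, small_size=128):
    """Pair each small 'child' chunk with the larger 'parent' chunk that contains it."""
    pairs = []
    for b_start in range(0, len(tokens), big_size):
        parent = tokens[b_start:b_start + big_size]
        for s_start in range(0, len(parent), small_size):
            pairs.append((parent[s_start:s_start + small_size], parent))
    return pairs


def small2big_retrieve(query, pairs):
    """Match the query against small chunks, but return the big parent for generation."""
    query_terms = set(query.lower().split())
    # Toy lexical overlap stands in for embedding similarity here.
    _, parent = max(pairs, key=lambda pair: len(query_terms & set(pair[0])))
    return parent
```

Small chunks give precise matches, and the parent chunk restores the surrounding context before the text reaches the generator.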

Metadata & Hybrid Search

Make use of your metadata! Add titles, keywords, and even hypothetical questions. Then combine it with hybrid search, which pairs vector search (for semantic matching) with classic BM25 keyword search, and your system will perform brilliantly.

HyDE (which generates pseudo-documents to enhance retrieval) is cool and gives better results, but it's extremely inefficient. Stick with hybrid search for now: it strikes a better balance between performance and efficiency, and it's especially good for prototyping.
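Here is a minimal, dependency-free sketch of hybrid search. It computes Okapi BM25 keyword scores, computes cosine similarity against precomputed embedding vectors, rescales both to [0, 1], and blends them with a weight `alpha`. The `alpha=0.5` default, the min-max normalization, and the `with_metadata` helper are hypothetical choices for illustration. The document vectors are assumed to come from whatever embedding model you use.

```python
import math
from collections import Counter


def with_metadata(text, title="", keywords=()):
    """Prepend metadata (title, keywords) so both BM25 and embeddings can match on it."""
    return " ".join([title, *keywords, text]).strip()


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Classic Okapi BM25 keyword scores for each document."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(toks) for toks in tokenized) / n_docs
    df = Counter(word for toks in tokenized for word in set(toks))  # document frequency
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for word in query.lower().split():
            if word not in tf:
                continue
            idf = math.log((n_docs - df[word] + 0.5) / (df[word] + 0.5) + 1)
            freq = tf[word]
            score += idf * freq * (k1 + 1) / (freq + k1 * (1 - b + b * len(toks) / avgdl))
        scores.append(score)
    return scores


def cosine(u, v):
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0


def min_max(values):
    """Rescale scores to [0, 1] so sparse and dense scores are comparable."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def hybrid_search(query, query_vec, docs, doc_vecs, alpha=0.5, top_k=3):
    """Blend BM25 (keyword) and cosine (semantic) scores; alpha weights the keyword side."""
    sparse = min_max(bm25_scores(query, docs))
    dense = min_max([cosine(query_vec, vec) for vec in doc_vecs])
    combined = [alpha * s + (1 - alpha) * d for s, d in zip(sparse, dense)]
    ranked = sorted(range(len(docs)), key=lambda i: combined[i], reverse=True)
    return ranked[:top_k]
```

`hybrid_search` returns document indices, best first. Tuning `alpha` on your own evaluation set is the main knob.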

Embedding Model

Choosing the right embedding model is like finding the right pair of shoes: you wouldn't wear soccer cleats to play tennis. In this study, FlagEmbedding's LLM-Embedder was the best fit, with a good balance between performance and size. Not too big and not too small: just right.

Note that they only tested open-source models, so Cohere's and OpenAI's models weren't considered. If you're open to proprietary models, Cohere is probably your best bet.

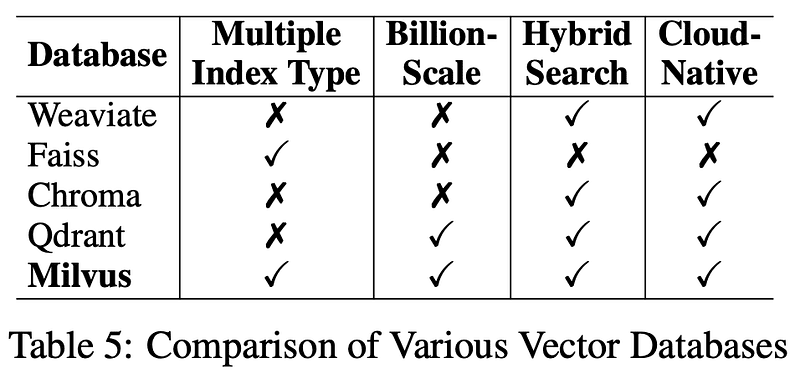

Vector Database

Now, on to the database. For long-term use, Milvus is their vector database of choice. It's open source, reliable, and an excellent choice for keeping your retrieval system running smoothly. I've also included a link in the references below.

Query Transformation

Before retrieving, you should first transform the user's query! You can use query rewriting to improve clarity, query decomposition to break a complex question into simpler sub-questions and retrieve for each one, or even generate pseudo-documents (as HyDE does) and use them for retrieval. This step is critical for improving accuracy. Keep in mind that more transformations increase latency, especially with HyDE.
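The three transformations can be sketched as thin wrappers around an LLM call. In this sketch, `llm` is a hypothetical callable that maps a prompt string to a completion string, and `search` is a hypothetical retriever. The prompt wording is illustrative only. Each wrapper costs one extra LLM round trip, which is where the added latency comes from.

```python
from typing import Callable, Iterable, List

LLM = Callable[[str], str]  # hypothetical: any function mapping a prompt to a completion


def rewrite_query(query: str, llm: LLM) -> str:
    """Query rewriting: ask the LLM for a clearer, more specific search query."""
    return llm(f"Rewrite this search query to be clear and specific:\n{query}").strip()


def decompose_query(query: str, llm: LLM) -> List[str]:
    """Query decomposition: split a complex question into simpler sub-questions."""
    raw = llm(f"Break this question into simpler sub-questions, one per line:\n{query}")
    # Strip list markers such as "- " or "* " from each non-empty line.
    return [line.strip().lstrip("-* ").strip() for line in raw.splitlines() if line.strip()]


def hyde_query(query: str, llm: LLM) -> str:
    """HyDE: search with a hypothetical answer passage instead of the raw question."""
    return llm(f"Write a short passage that answers this question:\n{query}")


def retrieve_all(queries: Iterable[str], search: Callable[[str], List[str]]) -> List[str]:
    """Retrieve for every transformed query and merge results, dropping duplicates."""
    seen, merged = set(), []
    for q in queries:
        for doc in search(q):
            if doc not in seen:
                seen.add(doc)
                merged.append(doc)
    return merged
```

For decomposition, you would call `retrieve_all(decompose_query(q, llm), search)`. For HyDE, you would embed the output of `hyde_query` and use that vector for the search.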

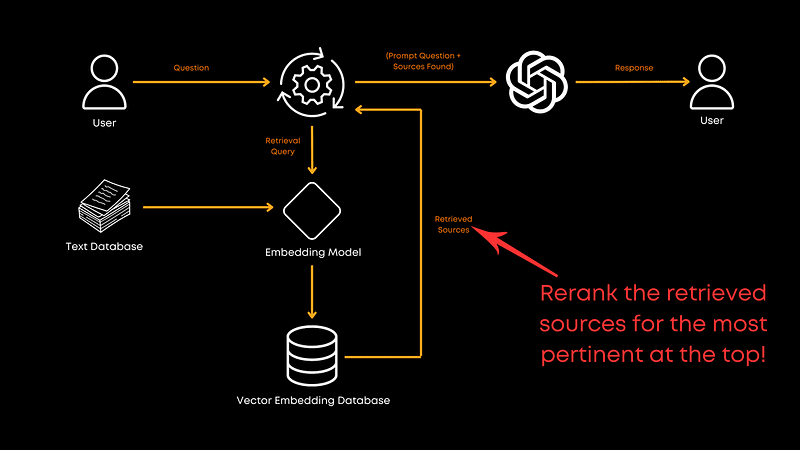

Reranking

Now let's talk about reranking. Once you've retrieved the documents, you need to make sure the most relevant ones are at the top, and that's where reranking comes in.

In this study, monoT5 offered the best trade-off between performance and efficiency. It fine-tunes a T5 model to rerank documents by their relevance to the query, so the best matches come first. RankLLaMA had the best overall performance, while TILDEv2 was the fastest. If you're interested, the paper has more details.
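Structurally, reranking is a sort by a query-document relevance score. In this minimal sketch, the scorer is pluggable. In a real pipeline you would plug in a cross-encoder such as monoT5, which, as far as we know, scores a pair by how likely the model is to output a "true" relevance token. The `overlap_score` function is a hypothetical toy stand-in so the example runs without a model.

```python
def rerank(query, docs, score_fn, top_k=None):
    """Sort candidates by a query-document relevance score, highest first."""
    ranked = sorted(docs, key=lambda doc: score_fn(query, doc), reverse=True)
    return ranked if top_k is None else ranked[:top_k]


def overlap_score(query, doc):
    """Hypothetical toy scorer: fraction of query terms found in the document.
    A real pipeline would call a cross-encoder such as monoT5 here instead."""
    query_terms = set(query.lower().split())
    doc_terms = set(doc.lower().split())
    return len(query_terms & doc_terms) / len(query_terms) if query_terms else 0.0
```

Because the reranker sees the query and document together, it is slower than the first-stage retriever. This is why it is usually applied only to a short candidate list.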

Document Repacking

After reranking, you'll need to do some document repacking. Wang et al. recommend the "reverse" method, i.e., arranging documents in ascending order of relevance, so the most relevant one comes last. Liu et al. (2024) found that placing relevant information at the beginning or end of the input improves performance. Repacking optimizes how the reranked information is presented to the LLM, so the ordering reflects how the model actually uses its context rather than relying on relevance order alone.
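Repacking is just a reordering of the reranker's output before the prompt is built. This sketch assumes the input list is sorted most relevant first. It shows three layouts: "forward" (descending), "reverse" (ascending, the one recommended above), and a "sides" layout that puts the strongest documents at both ends, following Liu et al.'s head-or-tail observation. The prompt template in `build_prompt` is a hypothetical example that places the question after the documents.

```python
def repack(docs_by_relevance, method="reverse"):
    """Reorder reranked documents (most relevant first) before building the prompt."""
    docs = list(docs_by_relevance)
    if method == "forward":   # descending relevance: best document first
        return docs
    if method == "reverse":   # ascending relevance: best document last
        return docs[::-1]
    if method == "sides":     # best documents at both ends, weakest in the middle
        front, back = docs[0::2], docs[1::2]
        return front + back[::-1]
    raise ValueError(f"unknown repacking method: {method!r}")


def build_prompt(question, docs):
    """Hypothetical template: context first, question last."""
    context = "\n\n".join(docs)
    return f"{context}\n\nQuestion: {question}"
```

With this template, "reverse" places the most relevant document directly before the question.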

Summarization

Then, before calling the LLM, you should run a summarization step to remove redundant information. Long prompts are costly and often unnecessary, so removing redundant or irrelevant content helps reduce costs.

You can use a tool like Recomp, which performs extractive compression to select useful sentences or generative (abstractive) compression to synthesize information from multiple documents. However, if speed is your priority, you might consider skipping this step.
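Here is a minimal sketch of extractive compression. It splits the retrieved documents into sentences, drops verbatim duplicates, and keeps the few sentences most related to the query, in their original order. This is not Recomp's method. Recomp trains its compressors, while the query-term-overlap score here is a hypothetical toy stand-in, and `max_sentences=3` is an illustrative default.

```python
import re


def split_sentences(text):
    """Naive sentence splitter on ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def extractive_compress(query, docs, max_sentences=3):
    """Keep the sentences that share the most terms with the query, in original order."""
    query_terms = set(re.findall(r"\w+", query.lower()))
    sentences, seen = [], set()
    for doc in docs:
        for sentence in split_sentences(doc):
            if sentence not in seen:  # drop verbatim duplicates across documents
                seen.add(sentence)
                sentences.append(sentence)
    # Toy relevance score: number of distinct query terms the sentence contains.
    scores = [len(query_terms & set(re.findall(r"\w+", s.lower()))) for s in sentences]
    best = sorted(range(len(sentences)), key=lambda i: (-scores[i], i))[:max_sentences]
    keep = sorted(i for i in best if scores[i] > 0)
    return " ".join(sentences[i] for i in keep)
```

Keeping the selected sentences in their original order preserves the local flow of each document, which tends to read better for the generator than a score-sorted list.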

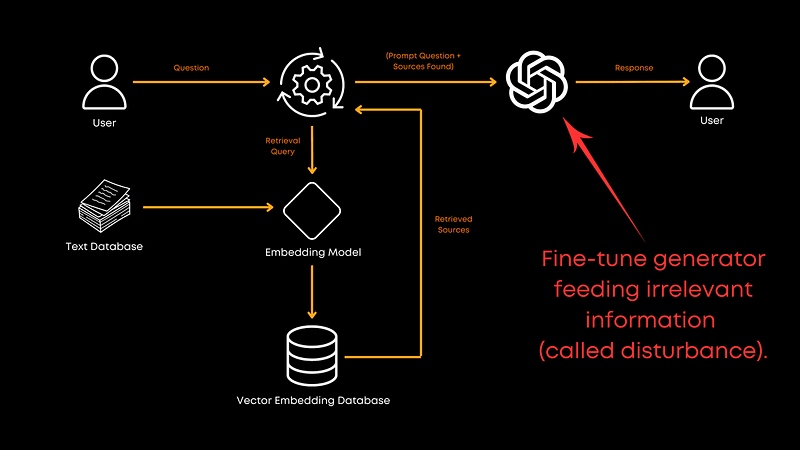

Fine-tuning the Generator

Finally, should you fine-tune the LLM you use as the generator? Absolutely! Fine-tuning with a mix of relevant and random documents improves the generator's ability to handle irrelevant information. This makes the model more robust and helps it give better overall responses. Although the paper doesn't provide an exact ratio, the results are pretty clear: fine-tuning is worth it! Of course, it also depends on your specific domain.
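This is a minimal sketch of how fine-tuning examples that mix relevant and random documents could be assembled. The record format (`input`, `output`, `context`), the prompt template, and `n_random=2` are hypothetical choices, since the paper doesn't give an exact ratio. The context is shuffled so that the model can't learn that the useful document always sits in the same slot.

```python
import random


def build_training_example(query, answer, relevant_doc, corpus, n_random=2, rng=None):
    """One fine-tuning example whose context mixes the relevant doc with random distractors."""
    rng = rng or random.Random()
    candidates = [doc for doc in corpus if doc != relevant_doc]
    distractors = rng.sample(candidates, min(n_random, len(candidates)))
    context = distractors + [relevant_doc]
    rng.shuffle(context)  # avoid teaching the model a fixed position for the answer
    prompt = "\n\n".join(f"Document {i + 1}: {doc}" for i, doc in enumerate(context))
    return {"input": f"{prompt}\n\nQuestion: {query}", "output": answer, "context": context}
```

Varying `n_random` across examples is one way to expose the generator to different levels of noise.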

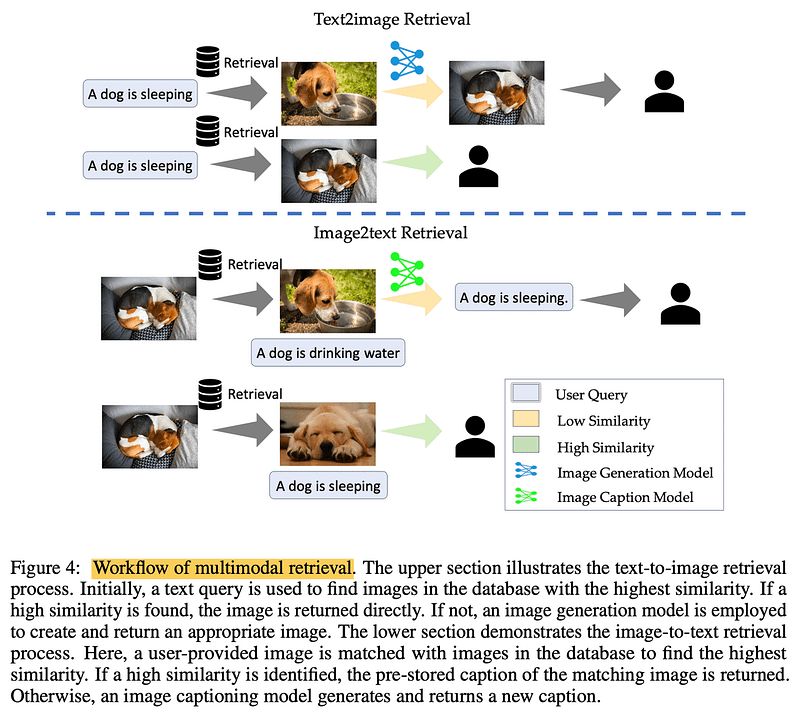

Multimodality

Working with images? Implement multimodal retrieval. For text-to-image tasks, querying the database for similar existing images can speed up the process. For image-to-text tasks, matching similar images lets you retrieve accurate, pre-existing descriptions. The key idea is grounding outputs in real, verified information.
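The image-to-text case can be sketched as "retrieve first, generate only as a fallback." This sketch assumes images have already been embedded by some image encoder, which the source doesn't specify, and that the index stores `(embedding, verified_caption)` pairs. The `threshold=0.9` value and the `generate_caption` callable are hypothetical.

```python
import math


def cosine(u, v):
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0


def caption_via_retrieval(image_vec, index, generate_caption, threshold=0.9):
    """Image-to-text: reuse a verified caption of a near-duplicate image when one exists.

    index: list of (image_embedding, verified_caption) pairs.
    """
    if index:
        sim, caption = max(((cosine(image_vec, vec), cap) for vec, cap in index),
                           key=lambda pair: pair[0])
        if sim >= threshold:
            return caption
    return generate_caption(image_vec)  # fall back to the generative model
```

The threshold controls the trade-off. A higher value reuses captions only for near-duplicates, while a lower value saves more generation calls but risks mismatched descriptions.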

Conclusion

In short, this paper by Wang et al. provides a solid blueprint for building efficient RAG systems. Keep in mind, however, that this is only one paper and it doesn't cover every aspect of the RAG pipeline. For example, it doesn't explore joint training of retrievers and generators, which may unlock more potential. It also leaves more advanced chunking techniques unexplored because of cost, but this is a direction worth exploring.

I highly recommend checking out the full paper for more information.

As always, thanks for reading! If you found this breakdown helpful or have any comments, let me know in the comments section. See you next time!

References

Building LLMs for Production (book): https://amzn.to/4bqYU9b

Wang et al., 2024 (paper): https://arxiv.org/abs/2407.01219

LLM-Embedder (embedding model): https://github.com/FlagOpen/FlagEmbedding/tree/master/FlagEmbedding/llm_embedder

Milvus (vector database): https://milvus.io/

Liu et al., 2024 (document repacking): https://arxiv.org/abs/2307.03172

Recomp (summarization tool): https://github.com/carriex/recomp

© Copyright notice

Article copyright: AI Sharing Circle, all rights reserved. Please do not reproduce without permission.
