MOSS-TTSD: Tsinghua Lab's open-source speech generation model for bilingual dialogue

What is MOSS-TTSD

MOSS-TTSD is an open-source spoken dialogue generation model developed by the Speech and Language Laboratory of Tsinghua University. It converts text dialogue scripts into natural, fluent and expressive conversational speech in both Chinese and English. The model pairs a semantic-acoustic neural audio codec with a large pre-trained language model, trained on more than 1 million hours of single-speaker speech and 400,000 hours of conversational speech. MOSS-TTSD supports zero-shot voice cloning: it switches speakers accurately according to the dialogue script and can reproduce speakers' voices without additional training. It is well suited to AI podcasts, film and TV dubbing, long-form interviews, news reports and e-commerce live streams. MOSS-TTSD is fully open source and free for commercial use.
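To make the idea of a "dialogue script" concrete, here is a minimal Python sketch that joins (speaker, utterance) turns into one tagged string and parses it back, counting speaker switches along the way. It assumes speaker tags of the form `[S1]`/`[S2]`; that tag format is an assumption, so check the GitHub repository for the exact convention your release expects. `format_dialogue`, `parse_dialogue` and `count_speaker_switches` are hypothetical helper names, not part of MOSS-TTSD.

```python
import re

# Hypothetical helpers: the [S1]/[S2] tag format is an assumption, not a confirmed spec.
TAG_PATTERN = re.compile(r"\[(S\d+)\]")


def format_dialogue(turns):
    """Join (speaker, utterance) pairs into one tagged script string."""
    parts = []
    for speaker, utterance in turns:
        if not re.fullmatch(r"S\d+", speaker):
            raise ValueError(f"unexpected speaker id: {speaker!r}")
        parts.append(f"[{speaker}]{utterance.strip()}")
    return "".join(parts)


def parse_dialogue(script):
    """Split a tagged script back into (speaker, utterance) pairs."""
    # re.split with a capturing group keeps the speaker ids:
    # ['', 'S1', 'text 1', 'S2', 'text 2', ...]
    pieces = TAG_PATTERN.split(script)
    if pieces[0].strip():
        raise ValueError("script must start with a speaker tag")
    return [(pieces[i], pieces[i + 1].strip()) for i in range(1, len(pieces), 2)]


def count_speaker_switches(turns):
    """Count how often the active speaker changes between consecutive turns."""
    return sum(1 for a, b in zip(turns, turns[1:]) if a[0] != b[0])


if __name__ == "__main__":
    turns = [
        ("S1", "Welcome back to the show."),
        ("S2", "Thanks, glad to be here."),
        ("S1", "Let's talk about speech synthesis."),
    ]
    script = format_dialogue(turns)
    print(script)
    assert parse_dialogue(script) == turns
    print(count_speaker_switches(turns))  # 2
```

Keeping the script as plain tagged text means the same file can be edited by hand, validated programmatically, and passed to whichever inference entry point the repository provides.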

Key Features of MOSS-TTSD

- Natural and smooth conversational speech generation: Turns text dialogue into natural, expressive speech that accurately captures the prosody and intonation of conversation.

- Zero-shot multi-speaker voice cloning: Generates a distinct voice for each speaker in the dialogue script without additional training, enabling smooth speaker switching.

- Bilingual support: Supports high-quality speech generation in both Chinese and English to meet the needs of multilingual scenarios.

- Long-form speech generation: Based on a low-bit-rate codec, it can generate up to 960 seconds of speech in a single pass, avoiding the unnatural transitions of spliced speech.

- Open source and commercial use: The model weights, inference code and APIs are fully open source and free for commercial use, so developers and enterprises can deploy applications quickly.
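The long-form claim is easier to see with a little arithmetic: a codec that emits fewer frames per second leaves the language model far fewer positions to generate for the same stretch of audio. The sketch below counts frames and total discrete tokens for 960 seconds of speech. The frame rates (12.5 Hz versus 50 Hz) and the 8 codebooks per frame are illustrative assumptions, not figures stated in this article.

```python
import math


def codec_token_budget(duration_s, frame_rate_hz, num_codebooks=1):
    """Return (frames, tokens) needed to represent `duration_s` seconds of audio.

    frames: number of codec frames (sequence positions).
    tokens: total discrete codes, one per codebook per frame.
    """
    frames = math.ceil(duration_s * frame_rate_hz)
    return frames, frames * num_codebooks


if __name__ == "__main__":
    # Illustrative assumptions, not official MOSS-TTSD parameters.
    for name, rate in [("low-rate codec", 12.5), ("higher-rate codec", 50.0)]:
        frames, tokens = codec_token_budget(960, rate, num_codebooks=8)
        print(f"{name}: {frames} frames, {tokens} tokens for 960 s")
```

Under these assumptions the low-rate codec needs 12,000 frames for 960 seconds versus 48,000 at 50 Hz, a fourfold shorter sequence, which is the kind of saving that makes single-pass long-form generation practical.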

Official MOSS-TTSD links

- Project website: https://www.open-moss.com/en/moss-ttsd/

- GitHub repository: https://github.com/OpenMOSS/MOSS-TTSD

- Hugging Face model: https://huggingface.co/fnlp/MOSS-TTSD-v0.5

- Online demo: https://huggingface.co/spaces/fnlp/MOSS-TTSD

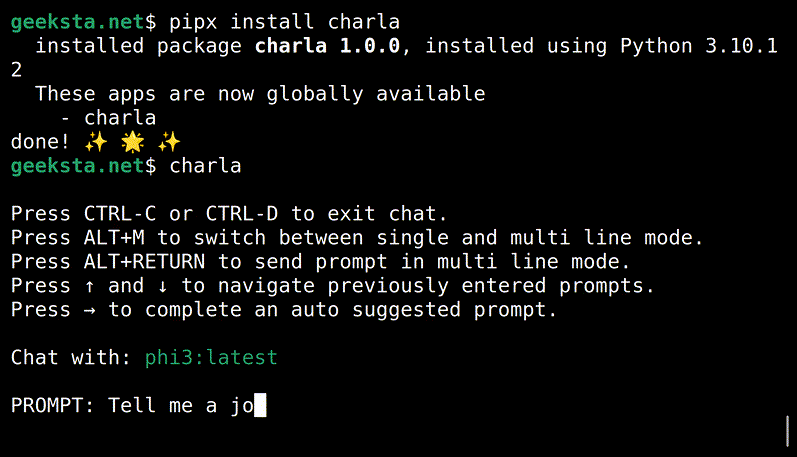

How to use MOSS-TTSD

- Environment setup:

- Install NVIDIA drivers: Make sure recent versions of the NVIDIA driver and the CUDA Toolkit are installed.

- Install Python and dependencies:

```bash
pip install torch torchvision torchaudio transformers soundfile
```

- Get the model: Download it from Hugging Face:

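```bash
# Hugging Face model repositories store large weight files with Git LFS
git lfs install
git clone https://huggingface.co/fnlp/MOSS-TTSD-v0.5
```

- Load the model and generate speech: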

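```python
# Illustrative sketch only: transformers has no AutoModelForTextToSpeech class,
# and MOSS-TTSD is normally run with the inference code in its GitHub repository.
# Treat this as an outline of the workflow, not as working code.
from transformers import AutoModelForTextToSpeech, AutoTokenizer
import soundfile as sf

# Load the model and tokenizer
model_name = "fnlp/MOSS-TTSD-v0.5"
model = AutoModelForTextToSpeech.from_pretrained(model_name)
tokenizer = AutoTokenizer.from_pretrained(model_name)

# Input text ("Hello, this is a test dialogue.")
text = "你好,这是一个测试对话。"
inputs = tokenizer(text, return_tensors="pt")

# Generate speech
audio = model.generate(**inputs)

# Save the speech to a WAV file
sf.write("output.wav", audio.numpy(), model.config.sampling_rate)
```

- Check the runtime environment: Confirm GPU support: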

```python
import torch

# Prints True when PyTorch can see a CUDA-capable GPU
print(torch.cuda.is_available())
```

Core Benefits of MOSS-TTSD

- Natural and smooth speech generation: Converts text dialogue into natural, flowing, expressive speech that accurately captures the prosody and intonation of conversation.

- Multi-speaker voice cloning: Zero-shot cloning produces distinct voices for different speakers without additional training, enabling natural speaker switching.

- Bilingual support: Supports high-quality speech generation in both Chinese and English to meet the needs of multilingual scenarios.

- Efficient data processing and pre-training: Trained on large-scale speech data with an optimized training framework, which helps keep generated speech high in quality and efficient to produce.

- Open source and commercial use: The model is fully open source and free for commercial use, so developers can deploy and build on it quickly.

- Wide range of application scenarios: It suits AI podcasting, film and TV dubbing, long-form interviews, news reporting and e-commerce live streaming.

- Technical innovation: Improves the quality and efficiency of speech generation with XY-Tokenizer, a new speech discretization encoder, and a low-bitrate codec.
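"Low bitrate" has a precise meaning for a neural codec. Assuming a residual vector quantizer (a common design in neural codecs), each frame emits one index per codebook and each index costs log2(codebook size) bits, so bitrate = frame rate × codebooks × log2(codebook size). The sketch below evaluates this for assumed parameters (12.5 Hz, 8 codebooks of 1,024 entries); these are illustrative values, not XY-Tokenizer specifications from this article.

```python
import math


def codec_bitrate_bps(frame_rate_hz, num_codebooks, codebook_size):
    """Bits per second for an RVQ-style codec (assumed design).

    Each frame emits one index per codebook, and each index costs
    log2(codebook_size) bits.
    """
    if codebook_size < 2:
        raise ValueError("codebook_size must be at least 2")
    return frame_rate_hz * num_codebooks * math.log2(codebook_size)


if __name__ == "__main__":
    # Illustrative assumptions, not official XY-Tokenizer parameters.
    bps = codec_bitrate_bps(12.5, 8, 1024)
    print(f"{bps:.0f} bps = {bps / 1000:.1f} kbps")
    # For comparison: 16-bit mono PCM sampled at 16 kHz
    print(f"16-bit PCM @ 16 kHz: {16_000 * 16} bps")
```

With these numbers the codec runs at about 1 kbps, roughly 256 times less data than 16 kHz, 16-bit PCM, which is why a language model can cover long stretches of audio in a manageable context.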

Who MOSS-TTSD is for

- Content creators: Produce AI podcasts, video voiceovers, newscasts and more, quickly generating natural, smooth conversational speech.

- Film and TV production teams: Dub dialogue for film and television productions, using multi-speaker voice cloning to improve production efficiency.

- News media: Generate natural conversational speech for news broadcasts to make news more engaging and accessible.

- E-commerce sellers: Use conversational digital-human hosts in live-selling streams to engage viewers and boost interaction.

- Developers: Build on the open-source model, integrate it into speech applications and extend its functionality.
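When integrating the model into a production pipeline, a common pattern is to queue many dialogue scripts in a JSON Lines file (one JSON object per line) and feed it to a batch inference job. The sketch below writes and reads such a file with the standard library only. The field names `id` and `text` and the `[S1]`/`[S2]` tags are hypothetical; the inference code in the GitHub repository defines the schema it actually reads, so map these fields accordingly.

```python
import json
from pathlib import Path


def write_jsonl(records, path):
    """Write one JSON object per line (JSON Lines) and return the record count."""
    records = list(records)  # accept any iterable, including generators
    with Path(path).open("w", encoding="utf-8") as f:
        for record in records:
            # ensure_ascii=False keeps Chinese text human-readable in the file
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
    return len(records)


def read_jsonl(path):
    """Read a JSON Lines file into a list of dicts, skipping blank lines."""
    with Path(path).open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


if __name__ == "__main__":
    # Hypothetical schema: adapt field names to the repository's inference code.
    jobs = [
        {"id": "ep01", "text": "[S1]Welcome to the show.[S2]Thanks for having me."},
        # Chinese: "Hello, welcome to the show." / "Glad to be here."
        {"id": "ep02", "text": "[S1]你好,欢迎收听。[S2]很高兴来到这里。"},
    ]
    n = write_jsonl(jobs, "dialogues.jsonl")
    assert read_jsonl("dialogues.jsonl") == jobs
    print(f"wrote {n} jobs")
```

JSON Lines is convenient here because jobs can be appended, streamed and retried one line at a time, and mixed Chinese and English scripts round-trip unchanged with UTF-8 encoding.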

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
