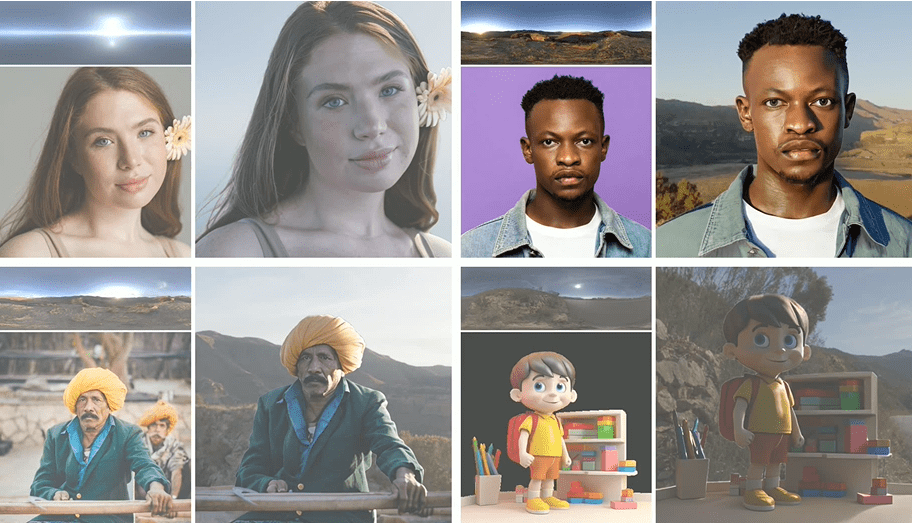

MOFA-Video: Motion Field Adaptation Technology Converts Still Images to Video

General Introduction

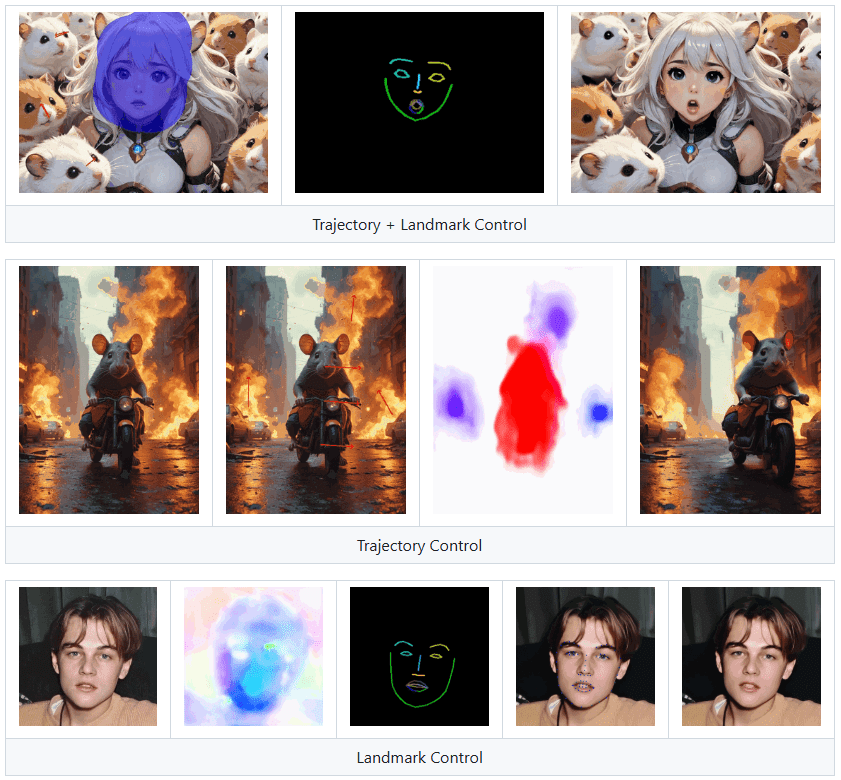

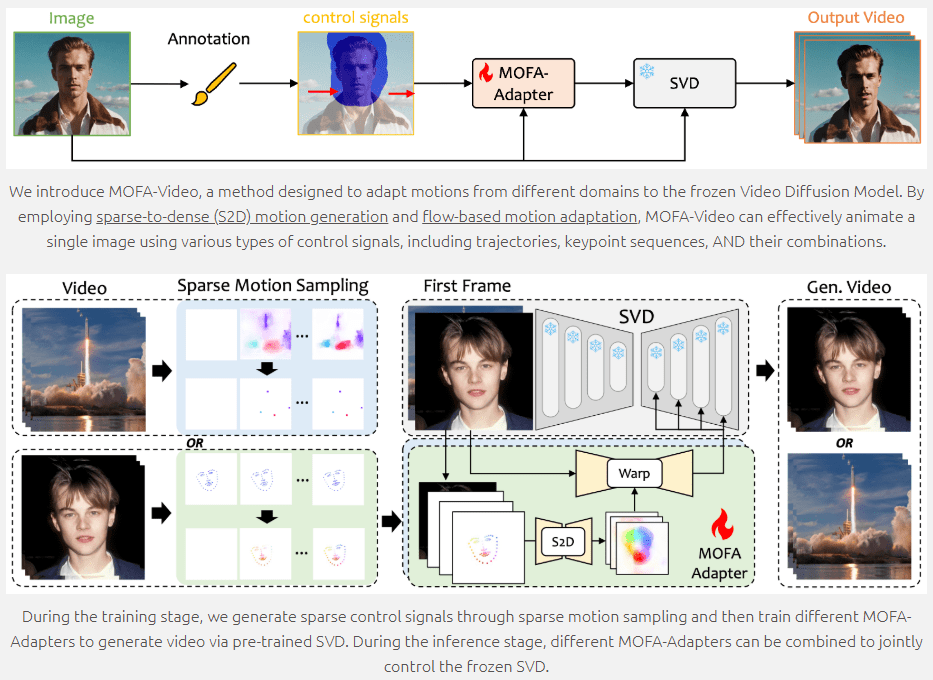

MOFA-Video is an image animation tool that uses generative motion field adaptation to turn a still image into a video. Developed by the University of Tokyo in collaboration with Tencent AI Lab, and to be presented at ECCV 2024, MOFA-Video accepts several kinds of control signals, including trajectories, keypoint sequences, and combinations of the two, to drive high-quality image animation. The code and related resources are available in the project's GitHub repository.

Landmark control can make the people in an image appear to talk, but it is not suited to building a cloned digital human.

Function List

- Image Animation Generation: converts still images into videos

- Multiple Control Signals: supports trajectories, keypoint sequences, and combinations of them

- Motion Field Adaptation: animates images through sparse-to-dense motion generation and flow-based motion adaptation

- Training and Inference Scripts: complete code for both training and inference

- Gradio Demo: an online demo plus checkpoint downloads

- Open Source: the code and resources are publicly available on GitHub
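The "sparse-to-dense" idea can be pictured with a small sketch: a few user hints say how individual pixels should move, and every other pixel's motion is filled in from them. MOFA-Video learns this step with a neural network; the inverse-distance weighting below is a hand-written stand-in chosen only for illustration, and the function name `densify_motion` is hypothetical, not part of the MOFA-Video code.

```python
from typing import List, Tuple

# A sparse hint (x, y, dx, dy): "the pixel at (x, y) should move by (dx, dy)".
Hint = Tuple[float, float, float, float]
Flow = List[List[Tuple[float, float]]]  # flow[y][x] = (dx, dy)


def densify_motion(hints: List[Hint], width: int, height: int, power: float = 2.0) -> Flow:
    """Spread sparse motion hints over a width x height grid.

    Hypothetical stand-in for a learned sparse-to-dense model: each pixel
    receives the inverse-distance-weighted average of all hint vectors.
    """
    if not hints:
        # No hints: nothing moves.
        return [[(0.0, 0.0) for _ in range(width)] for _ in range(height)]
    flow: Flow = []
    for y in range(height):
        row = []
        for x in range(width):
            num_dx = num_dy = den = 0.0
            exact = None
            for hx, hy, dx, dy in hints:
                d2 = (x - hx) ** 2 + (y - hy) ** 2
                if d2 == 0:
                    exact = (dx, dy)  # pixel sits on a hint: use it directly
                    break
                w = 1.0 / d2 ** (power / 2)  # weight = 1 / distance**power
                num_dx += w * dx
                num_dy += w * dy
                den += w
            row.append(exact if exact is not None else (num_dx / den, num_dy / den))
        flow.append(row)
    return flow
```

For example, `densify_motion([(1, 1, 2.0, 0.0)], width=4, height=3)` gives every pixel the motion `(2.0, 0.0)`, because a single hint dominates the whole grid. With several hints, pixels near a hint follow it most closely.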

How to Use

Environment setup

- Clone the repository

git clone https://github.com/MyNiuuu/MOFA-Video.git

cd ./MOFA-Video

- Create and activate a Conda environment

conda create -n mofa python==3.10

conda activate mofa

- Install dependencies

pip install -r requirements.txt

pip install opencv-python-headless

pip install "git+https://github.com/facebookresearch/pytorch3d.git"

- Download checkpoints: download the checkpoints from the HuggingFace repository and place them in the following directory:

./MOFA-Video-Hybrid/models/cmp/experiments/semiauto_annot/resnet50_vip+mpii_liteflow/checkpoints
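Before launching a demo, it can help to confirm that the setup steps took effect. The sketch below is a hypothetical helper, not part of the repository: it only checks the two packages installed explicitly above (`cv2` from opencv-python-headless, and `pytorch3d`) and lists the files in the checkpoint directory named above. It does not inspect requirements.txt or verify that the checkpoints are the correct ones.

```python
import importlib.util
from pathlib import Path
from typing import Iterable, List

# Import names of the packages installed explicitly in the setup steps:
# opencv-python-headless -> cv2, the pytorch3d git install -> pytorch3d.
REQUIRED_MODULES = ("cv2", "pytorch3d")

# Checkpoint directory from the setup steps, relative to the repository root.
CKPT_DIR = Path("MOFA-Video-Hybrid/models/cmp/experiments/semiauto_annot/"
                "resnet50_vip+mpii_liteflow/checkpoints")


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be located (nothing is imported)."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def checkpoint_files(repo_root: Path = Path(".")) -> List[str]:
    """List file names in the checkpoint directory ([] if the directory is missing)."""
    ckpt_dir = repo_root / CKPT_DIR
    if not ckpt_dir.is_dir():
        return []
    return sorted(p.name for p in ckpt_dir.iterdir() if p.is_file())


if __name__ == "__main__":
    # Run from the MOFA-Video repository root with the mofa environment active.
    missing = missing_modules(REQUIRED_MODULES)
    print("Missing modules:", ", ".join(missing) if missing else "none")
    files = checkpoint_files()
    print("Checkpoint files:", ", ".join(files) if files else "none found")
```

`importlib.util.find_spec` only locates a module without importing it, so the check is fast, though a package that is found can still fail at import time (for example, a pytorch3d build that does not match the installed PyTorch).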

Demo with Gradio

- Using audio to drive facial animation

cd ./MOFA-Video-Hybrid

python run_gradio_audio_driven.py

- Using a reference video to drive facial animation

cd ./MOFA-Video-Hybrid

python run_gradio_video_driven.py

The Gradio interface displays its own instructions; follow them to run inference.
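Both demos follow the same pattern: change into ./MOFA-Video-Hybrid and run one script. If you switch between them often, a small launcher like the one below can wrap the two commands. The names `demo_command` and `launch_demo` are hypothetical; the script names and directory come from the steps above, and the launcher assumes it is started from the repository root with the mofa environment active, because it reuses the current interpreter (`sys.executable`).

```python
import subprocess
import sys
from pathlib import Path
from typing import List, Tuple

# Script names from the demo steps; both live in ./MOFA-Video-Hybrid.
DEMO_SCRIPTS = {
    "audio": "run_gradio_audio_driven.py",
    "video": "run_gradio_video_driven.py",
}


def demo_command(mode: str) -> Tuple[Path, List[str]]:
    """Return (working_dir, argv) for the "audio" or "video" demo."""
    if mode not in DEMO_SCRIPTS:
        raise ValueError(f"unknown mode {mode!r}; expected one of {sorted(DEMO_SCRIPTS)}")
    # Reuse the current interpreter so the demo runs in the same environment.
    return Path("MOFA-Video-Hybrid"), [sys.executable, DEMO_SCRIPTS[mode]]


def launch_demo(mode: str) -> int:
    """Run the chosen demo (blocks until it exits) and return its exit code."""
    cwd, argv = demo_command(mode)
    return subprocess.run(argv, cwd=cwd).returncode
```

For example, `launch_demo("video")` is equivalent to running `cd ./MOFA-Video-Hybrid` followed by `python run_gradio_video_driven.py`.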

Training and inference

MOFA-Video provides complete training and inference scripts, which can be customized as needed. For detailed instructions, please refer to the README file in the GitHub repository.

Main workflow

- Image Animation Generation: upload a still image, choose the control signals (a trajectory, a keypoint sequence, or a combination of both), and click the Generate button to produce a video.

- Multiple Control Signals: combine different control signals to achieve richer animation effects.

- Motion Field Adaptation: sparse-to-dense motion generation and flow-based motion adaptation keep the animation smooth and natural.
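The two ideas in this workflow, a dragged trajectory as a control signal and flow-based adaptation, can be illustrated with a toy sketch. It turns a trajectory into per-frame displacement hints and warps a tiny grayscale image with a dense flow field. In MOFA-Video, the adaptation is learned and operates inside the generation network, so this pixel-level warp is only an analogy. The function names `trajectory_to_hints` and `backward_warp` are hypothetical.

```python
from typing import List, Tuple

Image = List[List[float]]               # image[y][x], grayscale
Flow = List[List[Tuple[float, float]]]  # flow[y][x] = (dx, dy)
Point = Tuple[float, float]


def trajectory_to_hints(trajectory: List[Point]) -> List[Tuple[float, float, float, float]]:
    """Turn one dragged trajectory into per-frame sparse hints.

    Frame t gets (x0, y0, dx, dy): the trajectory's start point and its
    displacement from frame 0 to frame t.
    """
    x0, y0 = trajectory[0]
    return [(x0, y0, x - x0, y - y0) for x, y in trajectory]


def backward_warp(image: Image, flow: Flow) -> Image:
    """Warp `image` with a dense flow using nearest-neighbour backward sampling.

    Each output pixel (x, y) copies the source pixel at (x - dx, y - dy),
    clamped to the image border. This approximates forward motion (dx, dy)
    and is exact when the flow is locally uniform.
    """
    h, w = len(image), len(image[0])
    out: Image = []
    for y in range(h):
        row = []
        for x in range(w):
            dx, dy = flow[y][x]
            sx = min(max(int(round(x - dx)), 0), w - 1)
            sy = min(max(int(round(y - dy)), 0), h - 1)
            row.append(image[sy][sx])
        out.append(row)
    return out
```

For example, warping the one-row image `[[1.0, 2.0, 3.0]]` with a uniform flow of `(1.0, 0.0)` shifts the content one pixel to the right and gives `[[1.0, 1.0, 2.0]]`. The left border pixel is repeated because of the clamping. Backward sampling is used so that every output pixel receives exactly one value, with no holes.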

MOFA-Video offers a wide range of features and detailed documentation. Users can generate image animations, customize training to their own needs, and achieve high-quality results with relatively little effort.

© Copyright notice

The copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
