ModelScope Swift: a lightweight infrastructure for efficiently fine-tuning and deploying large models.

General Introduction

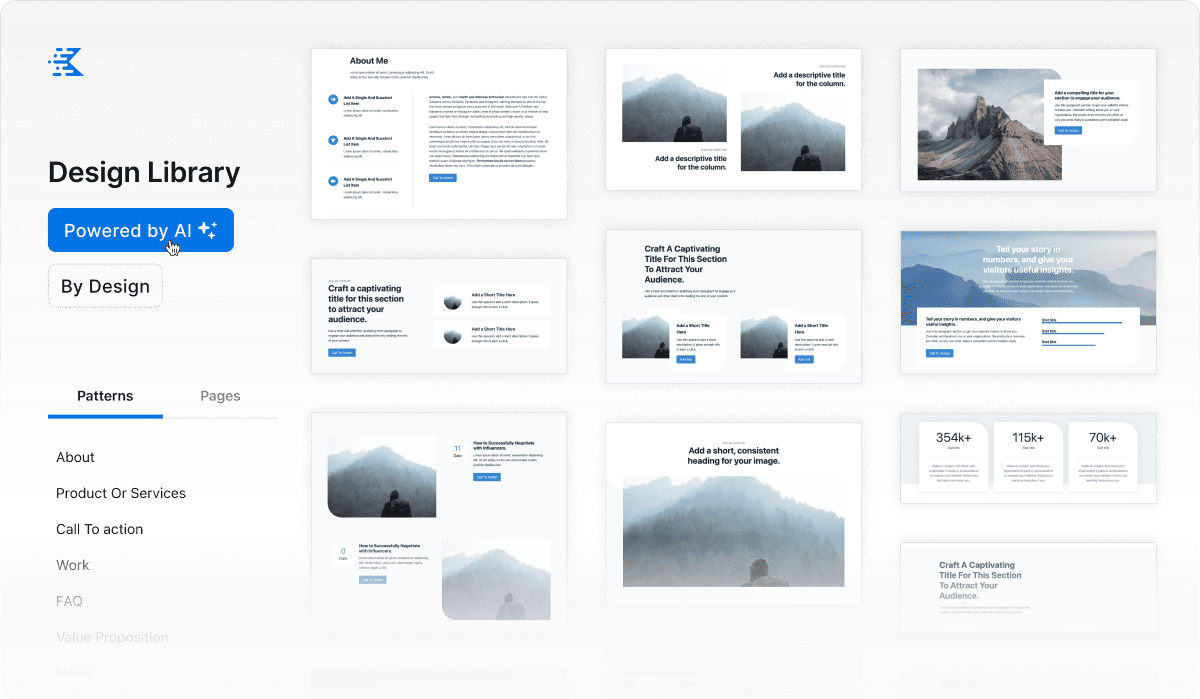

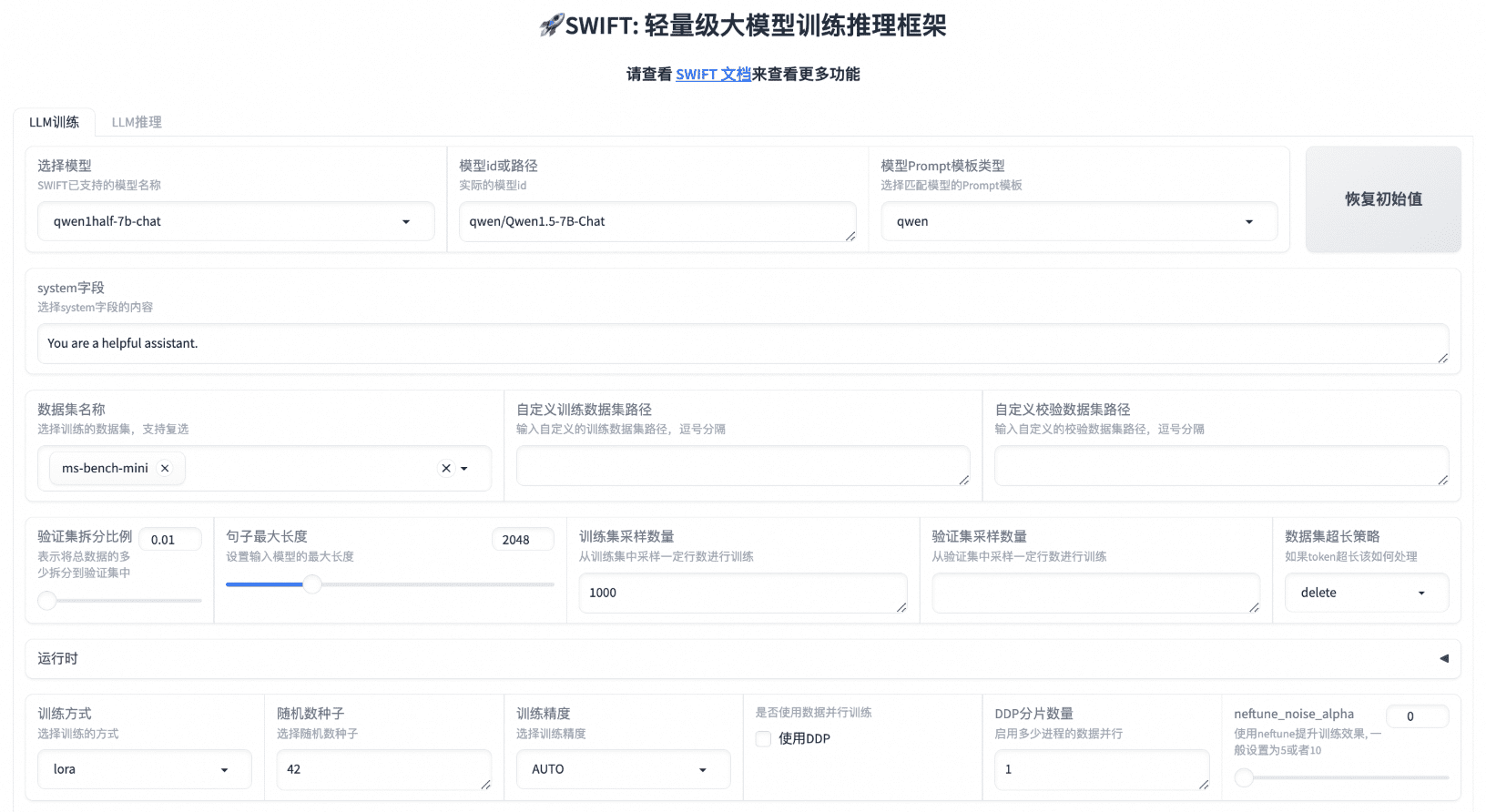

ModelScope Swift (MS-Swift for short) is an efficient, lightweight infrastructure for fine-tuning, inference, evaluation, and deployment of Large Language Models (LLMs) and Multimodal Large Models (MLLMs). The framework supports more than 400 LLMs and 100+ MLLMs and provides a complete workflow from training and evaluation to application. MS-Swift supports PEFT (Parameter-Efficient Fine-Tuning) and also provides a rich adapter library covering recent training techniques such as NEFTune, LoRA+, and LLaMA-PRO. For users unfamiliar with deep learning, MS-Swift also provides a Gradio-based web interface for controlling training and inference.

Function List

- Supports training, inference, evaluation and deployment of 350+ LLMs and 100+ MLLMs

- Provides adapter libraries for recent training techniques such as PEFT, LoRA+, and LLaMA-PRO

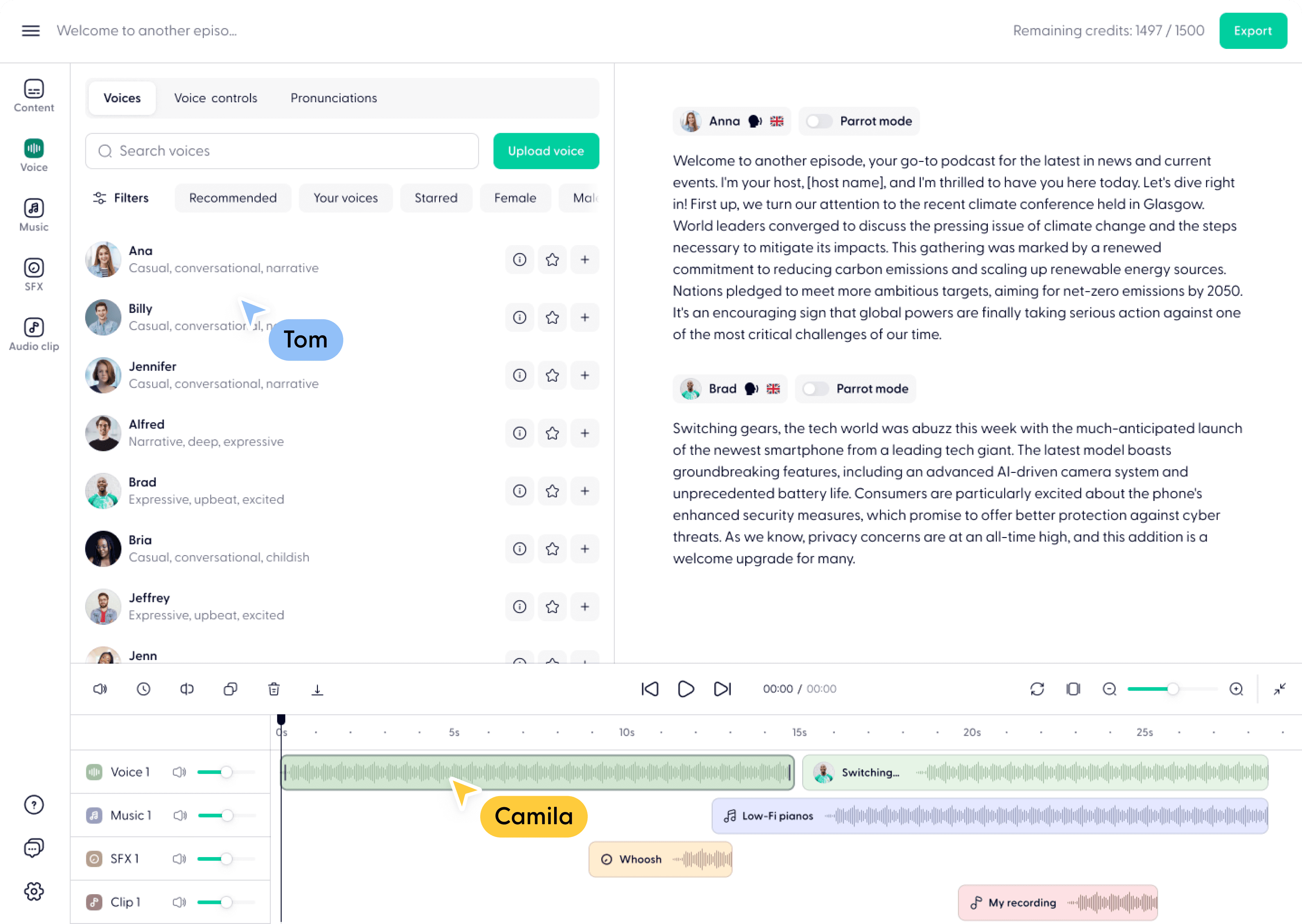

- Gradio-based web interface for easy control of training and inference

- Supports multi-GPU training and deployment

- Provides detailed documentation and deep learning courses

- Supports a wide range of hardware environments, including CPUs, RTX series graphics cards, A10/A100 and other computing cards

- Supports a variety of training methods, such as full-parameter fine-tuning, LoRA fine-tuning, quantization training, etc.

- Provides support for multiple datasets and models across different training tasks

Usage Guide

Installation process

MS-Swift can be installed in the following three ways:

- Use the pip command to install:

      # Install all features
      pip install 'ms-swift[all]' -U
      # Install LLM-related features only
      pip install 'ms-swift[llm]' -U
      # Install AIGC-related features only
      pip install 'ms-swift[aigc]' -U
      # Install adapter-related features only
      pip install ms-swift -U

- Installation via source code:

      git clone https://github.com/modelscope/swift.git
      cd swift
      pip install -e '.[llm]'

- Install using a Docker image.
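The pip variants above differ only in the extras name inside the brackets. As a minimal sketch, the dry-run helper below prints the matching install command for a chosen feature set instead of running it. The function names and the `adapters` label are hypothetical and used only for illustration; the package specifiers are the ones listed above.

```shell
# Map a feature set to the pip requirement string used in the install commands.
# "adapters" is a hypothetical label for the plain package without extras.
requirement_for() {
  case "$1" in
    all|llm|aigc) printf "ms-swift[%s]" "$1" ;;
    adapters)     printf "ms-swift" ;;
    *)            echo "unknown feature set: $1" >&2; return 1 ;;
  esac
}

# Print the install command rather than executing it (dry run).
install_command() {
  req=$(requirement_for "$1") || return 1
  printf "pip install '%s' -U\n" "$req"
}

install_command llm
```

The requirement is kept in single quotes because some shells, zsh for example, treat unquoted square brackets as a glob pattern.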

Using the Web Interface

MS-Swift provides a Gradio-based web interface that users can launch with the following command:

SWIFT_UI_LANG=en swift web-ui

The web interface supports multi-GPU training and deployment, and users can easily control the training and inference process.
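The launch command sets `SWIFT_UI_LANG` with the `VAR=value command` form, which applies the variable to that single command only. The sketch below illustrates this general shell behavior with a stand-in `sh -c` command in place of `swift web-ui`, so it can be run without MS-Swift installed. The variable names `inline_value` and `after_value` are illustrative.

```shell
# Start from a clean state so the demonstration is deterministic.
unset SWIFT_UI_LANG

# The inline assignment is visible inside the launched command...
inline_value=$(SWIFT_UI_LANG=en sh -c 'printf "%s" "$SWIFT_UI_LANG"')

# ...but it does not persist in the current shell afterwards.
after_value=${SWIFT_UI_LANG:-unset}

echo "inside the command: $inline_value"
echo "after the command:  $after_value"
```

To keep the setting for a whole terminal session, `export SWIFT_UI_LANG=en` can be run once before launching the interface.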

Training and Inference

MS-Swift supports a variety of training and inference methods. Here are some sample commands:

- Single GPU training:

CUDA_VISIBLE_DEVICES=0 swift sft --model_type qwen1half-7b-chat --dataset blossom-math-zh --num_train_epochs 5 --sft_type lora --output_dir output --eval_steps 200

- Multi-GPU training:

NPROC_PER_NODE=4 CUDA_VISIBLE_DEVICES=0,1,2,3 swift sft --model_type qwen1half-7b-chat --dataset blossom-math-zh --num_train_epochs 5 --sft_type lora --output_dir output

- Inference:

CUDA_VISIBLE_DEVICES=0 swift infer --model_type qwen1half-7b-chat
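In the multi-GPU example above, `NPROC_PER_NODE` equals the number of GPU ids listed in `CUDA_VISIBLE_DEVICES`. Assuming that one process per visible GPU is the intended relationship, the sketch below derives the process count from the GPU list and prints the resulting command without running it. The variable names `GPUS`, `NPROC`, and `TRAIN_CMD` are illustrative; the flags are copied from the sample command.

```shell
# Comma-separated GPU ids to expose to the training job.
GPUS="0,1,2,3"

# Count the ids: turn commas into newlines, then count the lines.
NPROC=$(printf '%s\n' "$GPUS" | tr ',' '\n' | wc -l | tr -d ' ')

# Assemble the command as text (dry run); nothing is launched here.
TRAIN_CMD="NPROC_PER_NODE=$NPROC CUDA_VISIBLE_DEVICES=$GPUS swift sft --model_type qwen1half-7b-chat --dataset blossom-math-zh --num_train_epochs 5 --sft_type lora --output_dir output"

echo "$TRAIN_CMD"
```

Changing `GPUS` to a shorter list, such as `0,1`, keeps the two settings in step without editing the process count by hand.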

Detailed Documentation

MS-Swift provides extensive documentation and deep learning courses, and users can visit the following links for more information:

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
