Model Context Provider CLI: a command-line tool for using MCP services with any large model, without depending on Claude

General Introduction

The Model Context Provider CLI (mcp-cli) is a protocol-level command-line tool for interacting with Model Context Provider servers. It lets users send commands, query data, and interact with the various resources a server provides. mcp-cli supports several providers and models, including OpenAI and Ollama, whose default models are gpt-4o-mini and qwen2.5-coder, respectively. The tool requires Python 3.8 or later and the appropriate dependencies, which you install after cloning the GitHub repository.
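The default-model rule described above (gpt-4o-mini for OpenAI, qwen2.5-coder for Ollama) can be illustrated with a small Python sketch. The `DEFAULT_MODELS` table and the `resolve_model` helper are hypothetical names chosen for illustration; this is not mcp-cli's actual code.

```python
# Hypothetical sketch: choose a model per provider, falling back to the
# defaults stated in the article. Not mcp-cli's own implementation.
from typing import Optional

DEFAULT_MODELS = {
    "openai": "gpt-4o-mini",
    "ollama": "qwen2.5-coder",
}


def resolve_model(provider: str, model: Optional[str] = None) -> str:
    """Return the explicitly requested model, or the provider's default."""
    if model:
        return model
    try:
        return DEFAULT_MODELS[provider.lower()]
    except KeyError:
        raise ValueError(f"unsupported provider: {provider!r}") from None


print(resolve_model("openai"))              # gpt-4o-mini
print(resolve_model("ollama"))              # qwen2.5-coder
print(resolve_model("ollama", "llama3.2"))  # llama3.2
```

An explicit model always wins; the table is consulted only when no model is given.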

Feature List

- Support for protocol-level communication with Model Context Provider servers

- Dynamic exploration of tools and resources

- Support for multiple providers and models (OpenAI and Ollama)

- Provides an interactive mode that allows users to dynamically execute commands

- Supported commands include: ping, list-tools, list-resources, list-prompts, chat, clear, help, quit/exit

- Supported command line parameters include: --server, --config-file, --provider, --model
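The four command-line parameters listed above can be modeled with Python's standard `argparse` module. This is a minimal sketch, not mcp-cli's own parser: making `--server` required, limiting `--provider` to the two providers named in this article, and the default file name `server_config.json` are all assumptions.

```python
# Hypothetical sketch of a parser for --server, --config-file, --provider
# and --model. Defaults and constraints here are illustrative assumptions.
import argparse
from typing import List, Optional


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="MCP client (illustrative)")
    # Name of the server entry to connect to, e.g. "sqlite".
    parser.add_argument("--server", required=True, help="server to connect to")
    # Path to the server configuration file (default name is an assumption).
    parser.add_argument("--config-file", default="server_config.json",
                        help="path to the server configuration file")
    # LLM provider; OpenAI is the default, as the article describes.
    parser.add_argument("--provider", choices=["openai", "ollama"],
                        default="openai", help="LLM provider")
    # None means "use the provider's default model".
    parser.add_argument("--model", default=None, help="model name")
    return parser


def parse(argv: Optional[List[str]] = None) -> argparse.Namespace:
    return build_parser().parse_args(argv)


args = parse(["--server", "sqlite", "--provider", "ollama", "--model", "llama3.2"])
print(args.server, args.config_file, args.provider, args.model)
# sqlite server_config.json ollama llama3.2
```

Note that `argparse` exposes `--config-file` as the attribute `config_file`.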

User Guide

Installation process

- Clone the repository:

git clone https://github.com/chrishayuk/mcp-cli

cd mcp-cli

- Install uv:

pip install uv

- Sync the dependencies:

uv sync --reinstall

Usage

- Start the client and interact with the SQLite server:

uv run main.py --server sqlite

- Run the client with the default OpenAI provider and model:

uv run main.py --server sqlite

- Run the client with a specific configuration and the Ollama provider:

uv run main.py --server sqlite --provider ollama --model llama3.2

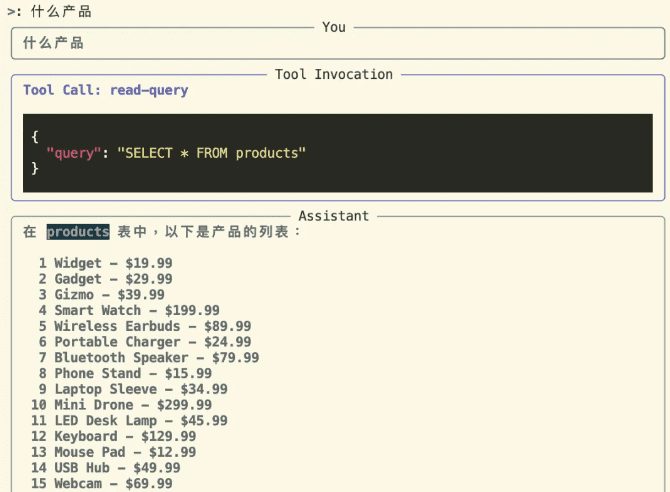

Interactive Mode

Enter interactive mode and interact with the server:

uv run main.py --server sqlite

In interactive mode, you can use the server's tools and interact with the server. The provider and model specified at startup are displayed as follows:

Entering chat mode using provider 'ollama' and model 'llama3.2'...

Supported commands

- ping: Check whether the server is responding

- list-tools: Show available tools

- list-resources: Show available resources

- list-prompts: Show available prompts

- chat: Enter interactive chat mode

- clear: Clear the terminal screen

- help: Display a list of supported commands

- quit/exit: Exit the client
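Under the hood, MCP messages are JSON-RPC 2.0, and the server-facing commands above correspond to the MCP methods `ping`, `tools/list`, `resources/list` and `prompts/list`. The Python sketch below builds those requests as newline-delimited JSON, assuming the stdio transport. It is an illustration, not mcp-cli's code: the `build_request` helper is hypothetical, and a real session must first complete the MCP `initialize` handshake, which this sketch omits.

```python
# Sketch: map the server-facing CLI commands to MCP JSON-RPC 2.0 requests.
# Method names follow the MCP specification; one JSON object per line
# assumes the stdio transport. This is not mcp-cli's own implementation.
import itertools
import json

COMMAND_TO_METHOD = {
    "ping": "ping",
    "list-tools": "tools/list",
    "list-resources": "resources/list",
    "list-prompts": "prompts/list",
}

# Monotonically increasing request ids, starting at 1.
_ids = itertools.count(1)


def build_request(command: str) -> str:
    """Return a newline-terminated JSON-RPC request for a CLI command."""
    method = COMMAND_TO_METHOD.get(command)
    if method is None:
        # chat, clear, help and quit/exit are not one-shot server requests,
        # so this sketch rejects them.
        raise ValueError(f"{command!r} does not map to a single server request")
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    return json.dumps(message) + "\n"


print(build_request("ping"), end="")        # {"jsonrpc": "2.0", "id": 1, "method": "ping"}
print(build_request("list-tools"), end="")  # {"jsonrpc": "2.0", "id": 2, "method": "tools/list"}
```

Each request carries a fresh `id` so that the client can match the server's responses to the requests that produced them.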

Using the OpenAI Provider

To use an OpenAI model, set the OPENAI_API_KEY environment variable, either in a .env file or directly in your shell environment.
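The lookup order for the API key can be sketched in plain Python: prefer a real environment variable, then fall back to a `.env` file. This is a minimal illustration, not how mcp-cli itself loads the key; the helper names are hypothetical, and the simplified `KEY=VALUE` parsing is an assumption (projects often use a library such as python-dotenv instead).

```python
# Minimal sketch: read OPENAI_API_KEY from the environment, falling back to a
# simple KEY=VALUE .env file. Parsing rules are simplified assumptions.
import os
from pathlib import Path
from typing import Dict, Optional


def read_dotenv(path: Path) -> Dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values: Dict[str, str] = {}
    if not path.is_file():
        return values
    for raw in path.read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and simple quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def get_openai_key(env_file: str = ".env") -> Optional[str]:
    """Prefer the real environment variable, then the .env file."""
    key = os.environ.get("OPENAI_API_KEY")
    if key:
        return key
    return read_dotenv(Path(env_file)).get("OPENAI_API_KEY")


if get_openai_key() is None:
    print("OPENAI_API_KEY is not set; set it before using the OpenAI provider.")
```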

© Copyright notice

The copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
