Mistral AI releases Mistral Small 3.1: another upgrade in open-source multimodal capabilities

Mistral AI recently announced the launch of its latest model, Mistral Small 3.1, and claims it is the best model in its size class.

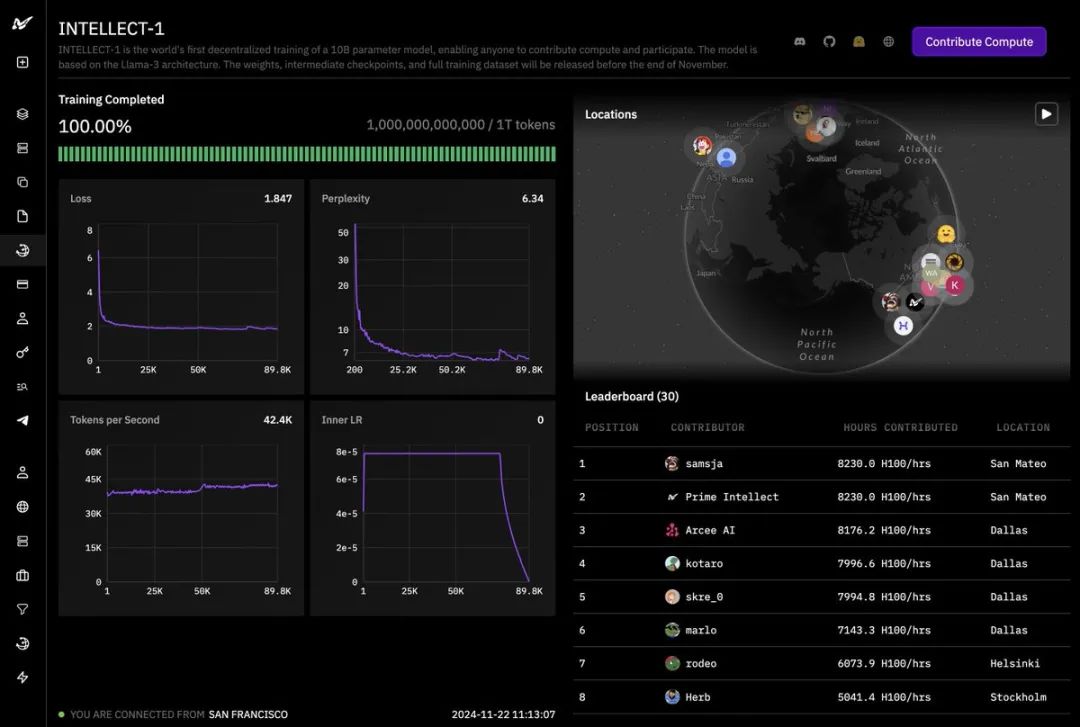

The new model builds on Mistral Small 3 with significant improvements in text performance and multimodal understanding, along with an expanded context window of up to 128k tokens. According to Mistral AI's own figures, Small 3.1 outperforms comparable models such as Gemma 3 and GPT-4o Mini while delivering inference speeds of 150 tokens per second.
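
To put the quoted throughput in perspective, here is a back-of-the-envelope sketch that converts a decode speed into wall-clock generation time. It assumes a constant rate of 150 tokens per second and ignores prompt processing (prefill) and network overhead, so real-world latencies will be somewhat higher. The function name is purely illustrative.

```python
def generation_seconds(output_tokens: int, tokens_per_second: float = 150.0) -> float:
    """Estimate decode time for a response, assuming a constant token rate.

    Assumption: prefill time and network latency are ignored.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second


if __name__ == "__main__":
    # Print estimated decode time for a few typical response lengths.
    for n in (150, 500, 2_000):
        print(f"{n:>5} tokens -> {generation_seconds(n):.1f} s")
```

At that rate, a 500-token answer takes roughly 3.3 seconds of pure decoding.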

One of the biggest highlights of Mistral Small 3.1 is its Apache 2.0 open-source license, which allows the model to be used and studied more widely.

Modern AI applications place growing demands on models: they must process text, understand multimodal inputs, support multiple languages, and handle long contexts, all with low latency and at low cost. Mistral AI believes Mistral Small 3.1 is the first open-source model to match or exceed the performance of leading closed-source small models across all of these dimensions.

According to benchmark results published by Mistral AI, Mistral Small 3.1 performs well across a range of tests. To keep the comparisons fair, Mistral AI used scores previously reported by other vendors wherever possible; otherwise, models were evaluated with Mistral AI's common evaluation tooling.

Instruction-following performance

Text instruction benchmarking

Below is a comparison of Mistral Small 3.1's text instruction-following performance against Gemma 3-it (27B), Cohere Aya-Vision (32B), GPT-4o Mini, and Claude-3.5 Haiku.

Performance Data Tables

Multimodal instruction benchmarking

Below are the multimodal instruction benchmark results, with MM-MT-Bench scores rescaled to a range of 0 to 100. The comparison again includes Gemma 3-it (27B), Cohere Aya-Vision (32B), GPT-4o Mini, and Claude-3.5 Haiku.

Performance Data Tables

Multilingual performance

Mistral Small 3.1 also shows an advantage on multilingual tasks when compared with models such as Gemma 3-it (27B), Cohere Aya-Vision (32B), and GPT-4o Mini.

Performance Data Tables

Long-context performance

For long-context processing, Mistral Small 3.1 was compared with Gemma 3-it (27B), GPT-4o Mini, and Claude-3.5 Haiku to assess how well it handles long inputs.

Performance Data Tables

Pre-training performance

Mistral AI has also released a pre-trained base model for Mistral Small 3.1.

Pre-training benchmarks

Mistral Small 3.1 Base (24B) was compared to Gemma 3-pt (27B) for pre-training performance.

Performance Data Tables

Use cases

Mistral Small 3.1 is positioned as a versatile model designed to handle a wide range of generative AI tasks, including instruction following, conversational assistance, image understanding, and function calling. Mistral AI believes it provides a solid foundation for both enterprise and consumer AI applications.

Key attributes and capabilities

- Lightweight. Mistral Small 3.1 can run on a single RTX 4090 graphics card or a Mac with 32GB of RAM, making it well suited to on-device use cases.

- Fast-response conversational assistance. Ideal for virtual assistants and other applications that require fast, accurate responses.

- Low-latency function calling. Able to execute functions quickly within automated or agentic workflows.

- Fine-tuning for specialized domains. Mistral Small 3.1 can be fine-tuned on specific domains to create accurate subject-matter experts. This is particularly useful in areas such as legal advice, medical diagnostics, and technical support.

- Foundation for advanced reasoning. Mistral AI says it has been impressed by how the community builds on open-source Mistral models. In the past few weeks, several strong reasoning models built on Mistral Small 3 have emerged, such as Nous Research's DeepHermes 24B. To support this work, Mistral AI has released both the base and instruct checkpoints of Mistral Small 3.1 for further downstream customization.
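
The single-GPU claim can be sanity-checked with simple arithmetic. The sketch below estimates the memory taken by the weights alone for a 24B-parameter model (the size given for Mistral Small 3.1 Base in the pre-training section) at a few common precisions. It deliberately ignores the KV cache, activations, and runtime overhead, which grow with context length, so treat the figures as lower bounds. The byte sizes per format are standard; the conclusion that a 24 GB RTX 4090 needs a quantized build is our own inference, not a statement from Mistral AI.

```python
# Bytes per parameter for common weight formats.
BYTES_PER_PARAM = {
    "bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate weight memory in GB (1 GB = 1e9 bytes).

    Weights only: excludes KV cache, activations and framework overhead.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    params = 24e9          # 24B parameters, per the Base model size quoted in the article
    rtx_4090_vram_gb = 24  # an RTX 4090 has 24 GB of VRAM
    for precision in BYTES_PER_PARAM:
        gb = weight_memory_gb(params, precision)
        headroom = rtx_4090_vram_gb - gb
        print(f"{precision}: ~{gb:.0f} GB of weights, "
              f"{headroom:+.0f} GB left on a 24 GB card (before KV cache)")
```

By this estimate, 16-bit weights (about 48 GB) exceed the card's memory, 8-bit weights leave no headroom, and 4-bit weights (about 12 GB) leave room for the KV cache, which is consistent with the single-card claim assuming a quantized build.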

Mistral Small 3.1 suits a wide range of enterprise and consumer applications that require multimodal understanding, such as document verification, diagnostics, on-device image processing, visual inspection for quality control, object detection in security systems, image-based customer support, and general-purpose assistants.

Availability

Mistral Small 3.1 is available for download on Hugging Face as Mistral Small 3.1 Base and Mistral Small 3.1 Instruct. For enterprise deployments that require private, optimized inference infrastructure, contact Mistral AI.
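
For readers who want to pull the weights locally, the following is a minimal sketch using the `snapshot_download` function from the `huggingface_hub` library. The repository IDs are our assumption of how the two checkpoints are named on the Hub (check the model pages before running), and the helper name `download` is hypothetical. Unquantized 24B weights take tens of gigabytes of disk space.

```python
from typing import Optional

# Assumed Hugging Face repo IDs; verify them on the Hub before downloading.
REPOS = {
    "base": "mistralai/Mistral-Small-3.1-24B-Base-2503",
    "instruct": "mistralai/Mistral-Small-3.1-24B-Instruct-2503",
}


def download(variant: str = "instruct", local_dir: Optional[str] = None) -> str:
    """Download a checkpoint snapshot and return its local path (hypothetical helper).

    Requires `pip install huggingface_hub`. You may need to log in and accept
    the model's terms on the Hub first.
    """
    repo_id = REPOS[variant]  # raises KeyError for an unknown variant
    from huggingface_hub import snapshot_download  # imported lazily

    return snapshot_download(repo_id=repo_id, local_dir=local_dir)


if __name__ == "__main__":
    print(download("instruct", local_dir="mistral-small-3.1-instruct"))
```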

Developers can also try the model through the API on La Plateforme, Mistral AI's developer platform. The model is also available on Google Cloud Vertex AI, and Mistral Small 3.1 will come to NVIDIA NIM and Microsoft Azure AI Foundry in the coming weeks.
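
As an illustration of the function-calling workflow mentioned earlier, here is a minimal sketch of a chat request that offers the model one tool, using only the Python standard library. The endpoint URL, the `mistral-small-latest` model alias, the `MISTRAL_API_KEY` environment variable, and the `get_order_status` tool are all assumptions for illustration (the tool is hypothetical); consult Mistral AI's API documentation for the authoritative request format.

```python
import json
import os
import urllib.request

# Assumed endpoint and model alias; check Mistral AI's API docs before relying on them.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "mistral-small-latest"


def build_tool_call_request(user_message: str) -> dict:
    """Build a chat request that exposes one hypothetical tool to the model."""
    get_order_status = {
        "type": "function",
        "function": {
            "name": "get_order_status",  # hypothetical tool for illustration
            "description": "Look up the shipping status of an order.",
            "parameters": {  # JSON Schema describing the tool's arguments
                "type": "object",
                "properties": {
                    "order_id": {"type": "string", "description": "Order identifier"},
                },
                "required": ["order_id"],
            },
        },
    }
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": user_message}],
        "tools": [get_order_status],
        "tool_choice": "auto",  # let the model decide whether to call the tool
    }


def send(payload: dict) -> dict:
    """POST the payload; reads the API key from MISTRAL_API_KEY (assumed variable name)."""
    req = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ['MISTRAL_API_KEY']}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


if __name__ == "__main__":
    payload = build_tool_call_request("Where is order A1234?")
    print(json.dumps(payload, indent=2))
    # response = send(payload)  # uncomment once a valid API key is set
```

If the model chooses to use the tool, the reply should contain a tool call naming `get_order_status` with JSON arguments; the application would then run the real lookup and return the result to the model in a follow-up message.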

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
