MiniMax open-sources MiniMax-01 for the first time: 4M-token ultra-long context and a new architecture that challenges the Transformer

"MoE" plus "the first large-scale deployment of Lightning Attention in a production environment" plus "software and engineering refactored from the framework down to the CUDA level": what do you get?

The answer: a new model that matches the capabilities of the top models and pushes context length to the 4-million-token level.

On January 15, the large-model company MiniMax officially released its long-teased new model series, MiniMax-01. The series consists of the foundation language model MiniMax-Text-01 and the visual multimodal model MiniMax-VL-01, which is built by integrating a lightweight ViT model on top of MiniMax-Text-01.

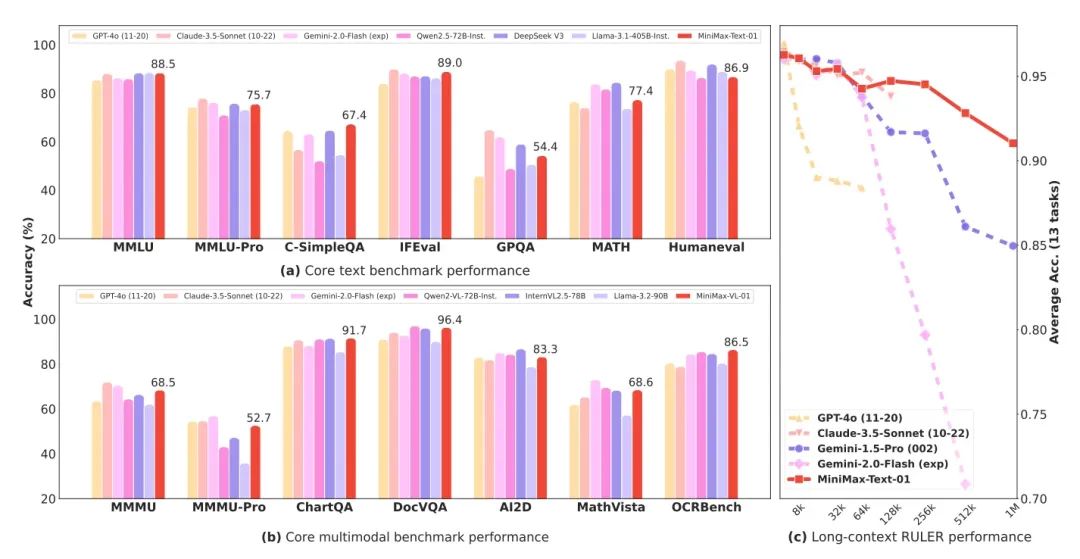

MiniMax-01 is a MoE (Mixture of Experts) model with 456 billion total parameters and 32 experts. Its overall performance on several mainstream benchmarks is on par with GPT-4o and Claude 3.5 Sonnet, while its context length is 20 to 32 times that of today's top models. As input length grows, it is also the model whose performance decays most slowly. In other words, it offers a genuinely usable 4-million-token context.
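To make the MoE idea concrete, here is a minimal routing sketch: a router scores all 32 experts for each token, and only the top-scoring few actually run. Choosing k = 2 experts per token, renormalizing with a softmax over just the chosen scores, and using scalar toy experts are all assumptions for illustration, not details taken from this article.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits: list[float], k: int = 2) -> list[tuple[int, float]]:
    """Return (expert index, gate weight) for the k highest-scoring experts."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])  # renormalize over the chosen few
    return list(zip(chosen, weights))


def moe_forward(x: float, experts, router_logits: list[float], k: int = 2) -> float:
    """Mix the outputs of only the routed experts; the other experts never run."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


NUM_EXPERTS = 32
# Toy experts: expert i just scales its input by i (real experts are feed-forward networks).
experts = [lambda x, s=i: s * x for i in range(NUM_EXPERTS)]
logits = [0.0] * NUM_EXPERTS
logits[5], logits[17] = 3.0, 2.0  # pretend the router favors experts 5 and 17
print(top_k_route(logits))
print(moe_forward(1.0, experts, logits))
```

In this sketch only 2 of the 32 experts do any work for the token, which is why a MoE model's per-token compute sits far below what its total parameter count suggests.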

- Foundation language model MiniMax-Text-01 and visual multimodal model MiniMax-VL-01

- New Lightning Attention architecture: attention cost goes from quadratic to linear, dramatically reducing inference costs

- Heavyweight open-source release: the text model has 456 billion parameters and 32 experts

- Ultra-long 4-million-token context, with performance matching top overseas models

- The model weights, code, and technical report have all been released, a genuinely generous move!

Both the web app and the API are now live; you can try them and use them commercially via the links at the end of the article.

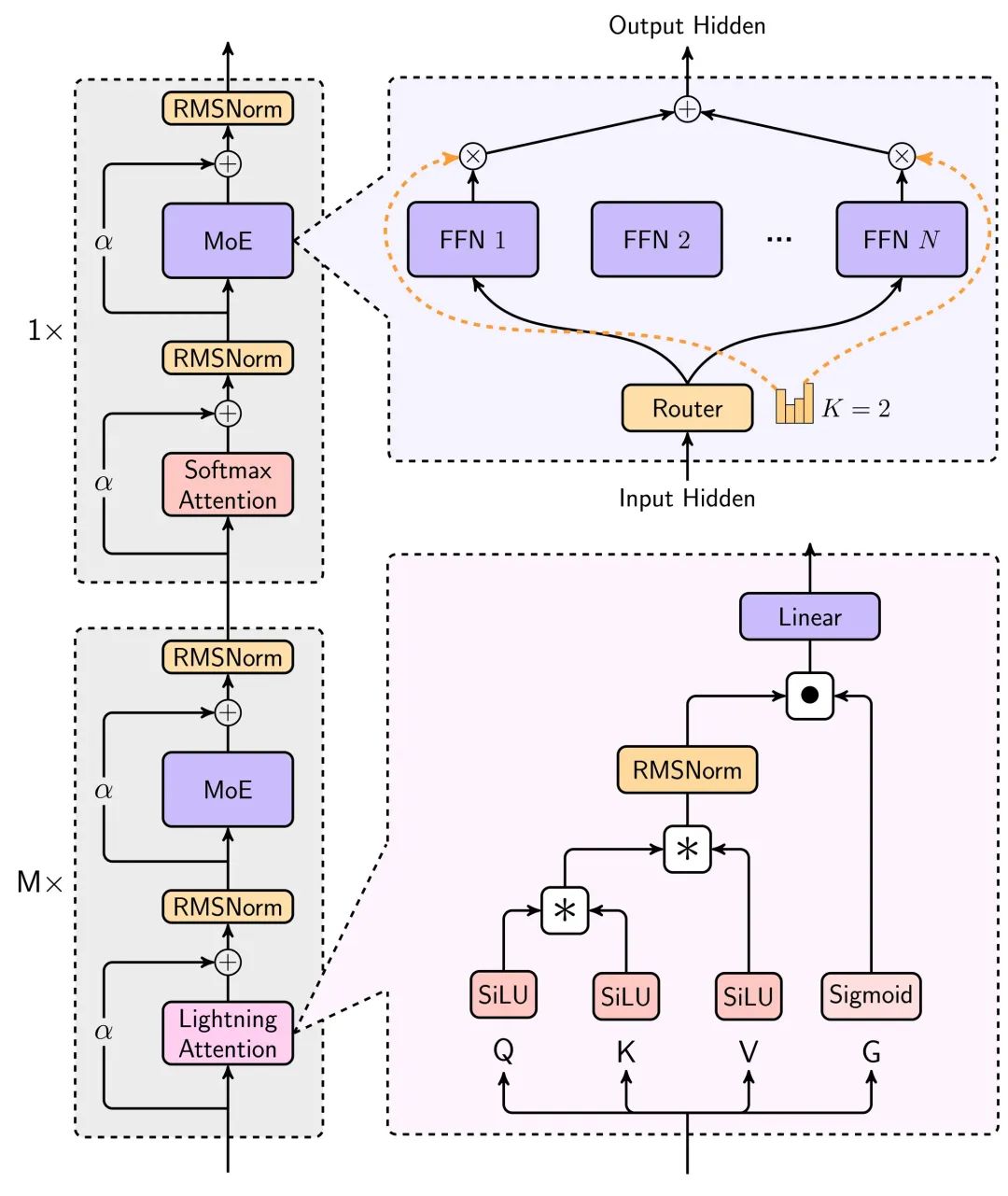

New technology: Lightning attention

The most surprising thing about this model is the new linear attention architecture.

In real-world AI use, long context is crucial: long memory in character chat, writing code with AI coding tools, agents completing all kinds of tasks. None of these scenarios can do without long text.

Large models keep getting cheaper, but the longer the context, the slower and more expensive they become to use.

The root of the problem is that attention in the Transformer architecture has quadratic computational complexity: the compute needed for inference grows with the square of the context length.
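A quick way to see the quadratic-versus-linear gap is to count the multiply-adds in the two attention matrix products for a single head. The sketch below assumes a head dimension of 128 (a hypothetical value) and ignores projections, normalization, and the softmax itself.

```python
def softmax_attention_cost(n: int, d: int) -> int:
    """Multiply-adds for one head of full (softmax) attention over n tokens.

    Q @ K^T produces an n x n score matrix (n*n*d), and scores @ V costs n*n*d again.
    """
    return 2 * n * n * d


def linear_attention_cost(n: int, d: int) -> int:
    """Multiply-adds for one head of linear attention over n tokens.

    K^T @ V builds a d x d summary (n*d*d), and Q @ summary costs n*d*d again.
    """
    return 2 * n * d * d


HEAD_DIM = 128  # hypothetical head dimension

for n in (4_096, 8_192, 16_384):
    full = softmax_attention_cost(n, HEAD_DIM)
    linear = linear_attention_cost(n, HEAD_DIM)
    # The ratio equals n / HEAD_DIM, so the gap itself widens as context grows.
    print(f"n={n:>6}: softmax={full:.3e}  linear={linear:.3e}  ratio={full / linear:.0f}")
```

Doubling n quadruples the first count but only doubles the second, which is the core of the efficiency argument.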

This time, MiniMax's new model uses the Lightning attention mechanism, a linear attention mechanism that can significantly reduce the computation and inference time for long texts.
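The core trick behind linear attention can be shown without any GPU code. Dropping the softmax lets the sum over past tokens be regrouped, so a causal model can carry a fixed-size state instead of rescanning the whole history. The sketch below is generic causal linear attention, not MiniMax's Lightning Attention: the elu(x) + 1 feature map is one common choice assumed here, and the normalization term is left out for brevity.

```python
import math


def phi(x: list[float]) -> list[float]:
    # Assumed feature map elu(x) + 1: x + 1 for x > 0, exp(x) otherwise (always positive).
    return [v + 1.0 if v > 0 else math.exp(v) for v in x]


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def causal_linear_attention_quadratic(Q, K, V):
    """O(n^2) form: explicitly score every (query, earlier key) pair."""
    out = []
    for t, q in enumerate(Q):
        fq = phi(q)
        o = [0.0] * len(V[0])
        for s in range(t + 1):
            w = dot(fq, phi(K[s]))
            o = [oi + w * vi for oi, vi in zip(o, V[s])]
        out.append(o)
    return out


def causal_linear_attention_recurrent(Q, K, V):
    """O(n) form: carry a d_k x d_v state S = sum over s <= t of phi(k_s)^T v_s."""
    dk, dv = len(K[0]), len(V[0])
    S = [[0.0] * dv for _ in range(dk)]
    out = []
    for q, k, v in zip(Q, K, V):
        fk = phi(k)
        for i in range(dk):  # fold the current token into the running state
            for j in range(dv):
                S[i][j] += fk[i] * v[j]
        fq = phi(q)
        out.append([sum(fq[i] * S[i][j] for i in range(dk)) for j in range(dv)])
    return out
```

Both functions return the same outputs; the recurrent one does a constant amount of work per token, so its total cost grows linearly with sequence length.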

The model's main architecture diagram can be found in the technical report.

This architecture cuts inference resource consumption enormously. The following graph comparing long-text inference times shows that with linear attention, inference time grows slowly and nearly linearly as the context lengthens, rather than quadratically.

A more detailed description can be found in the technical report at the end of the article.

With less computation, prices come down; but for long context to be truly usable, performance cannot be sacrificed.

The graph below shows MiniMax-Text-01's performance on a very-long-context benchmark, where it surprisingly outperforms Gemini 2.0 Flash.

Another very interesting point in the technical report is that the model's in-context learning ability keeps improving as the context grows, which is a huge help for AI writing and for tasks that require long memory.

Overall, Lightning Attention dramatically improves the usability of long context in large models and gives prices a chance to drop by another order of magnitude. It is well worth watching.

Model performance: on par with the first tier

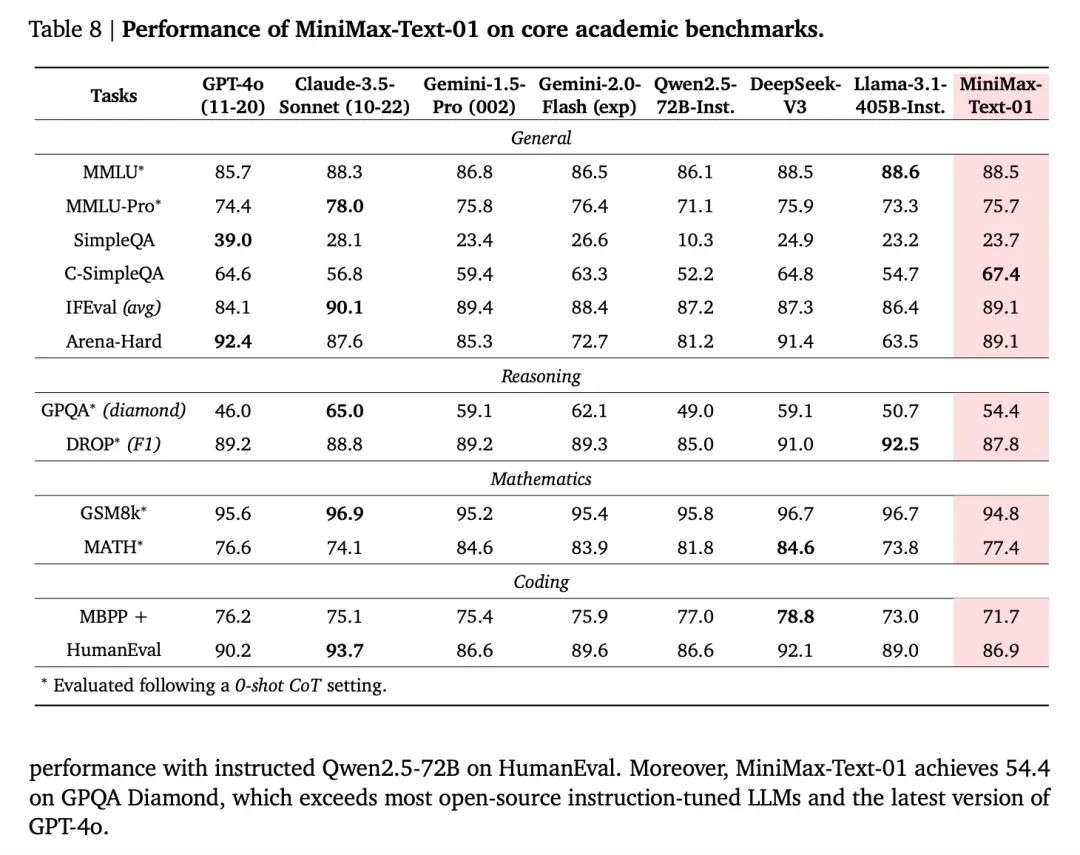

On performance metrics, this open-source model catches up with the best overseas closed-source models on many benchmarks.

And since the model is optimized and iterated with feedback from the Hailuo AI platform, a good user experience in real scenarios is also assured.

MiniMax also built a test set from real user scenarios, and performance there is strong as well, particularly in three areas: creative writing, knowledge Q&A, and long text.

In addition, the visual understanding model MiniMax-VL-01 matches or exceeds top overseas models on almost every metric, especially in practical OCR and chart scenarios.

Next Transformer Moment

As generational leaps between models become less dramatic, context length and logical reasoning are becoming the two directions that attract the most attention.

On context, Gemini once had the longest. DeepMind CEO Demis Hassabis has revealed that inside Google, Gemini has already reached 10-million-token context lengths in experiments, and he believes it will eventually "reach infinite length"; what stops Gemini from doing so now is the cost. In a recent interview, he said DeepMind now has a new approach to solving this cost problem.

So whoever first pushes context length up while knocking costs down will probably have the upper hand. Judging by MiniMax-01's results, it has genuinely achieved a qualitative improvement in efficiency.

The detailed technical report includes a figure that shows how efficiently the hardware is used: for inference, MiniMax reaches 75% MFU on H20 GPUs, which is a remarkably high number.

MFU (Model FLOPs Utilization) is the fraction of the hardware's peak compute (FLOPS, floating-point operations per second) that a model actually uses while running. In short, MFU measures whether a model makes full use of the hardware. High utilization translates directly into a cost advantage.
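As a worked example of the definition, MFU can be estimated from throughput. The sketch assumes the common rule of thumb of about 2 FLOPs per active parameter per token for a forward pass; for a MoE model, only the activated experts count. All numbers in the example are made up for illustration and are not MiniMax's figures.

```python
def forward_flops_per_token(active_params: float) -> float:
    """Rule of thumb: about 2 FLOPs (one multiply, one add) per active parameter per token.

    Attention-score FLOPs are ignored, so this undercounts at long context lengths.
    """
    return 2.0 * active_params


def mfu(tokens_per_second: float, flops_per_token: float, peak_flops: float) -> float:
    """Model FLOPs Utilization: achieved model FLOP/s divided by the hardware's peak FLOP/s."""
    return tokens_per_second * flops_per_token / peak_flops


# Hypothetical numbers: a 1B-active-parameter model, 30k tokens/s, 100 TFLOP/s peak.
utilization = mfu(30_000, forward_flops_per_token(1e9), 100e12)
print(f"MFU = {utilization:.0%}")  # prints "MFU = 60%"
```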

MiniMax-01 is certainly one of the rare surprises amid the recent debate over whether scaling has "hit a wall". Another model that has generated a lot of discussion recently is DeepSeek V3. As noted above, two directions matter most today, reasoning and longer context, and they are represented by DeepSeek V3 and MiniMax-01 respectively.

Interestingly, in terms of technical route, both are to some extent optimizing the core attention mechanism of the Transformer, which laid the foundation for today's boom, and both are bold refactorings that combine hardware and software. DeepSeek V3 has been described as squeezing every last drop out of Nvidia's cards, and MiniMax's ability to reach such a high inference MFU is just as critical. Both optimize the training framework and the hardware directly.

According to MiniMax's report, the team wrote its own CUDA kernel for linear attention from scratch, step by step and in depth, and built various supporting frameworks around it to use GPU resources efficiently. Both companies reached their goals through tighter integration of hardware and software.
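Much of a custom attention kernel comes down to how the computation is tiled. Published descriptions of Lightning Attention split the sequence into blocks: ordinary masked attention inside each block, and the linear-attention state trick across blocks. The pure-Python sketch below illustrates only that tiling idea and is not MiniMax's CUDA kernel; the feature map, normalization, and any decay terms are omitted, and the default block size of 4 is an arbitrary choice.

```python
def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def causal_linear_attention_naive(Q, K, V):
    """Reference O(n^2) result: o_t = sum over s <= t of (q_t . k_s) v_s."""
    dv = len(V[0])
    out = []
    for t in range(len(Q)):
        o = [0.0] * dv
        for s in range(t + 1):
            w = dot(Q[t], K[s])
            for j in range(dv):
                o[j] += w * V[s][j]
        out.append(o)
    return out


def causal_linear_attention_blocked(Q, K, V, block: int = 4):
    """Tiled form: masked attention inside a block, a carried K^T V state across blocks."""
    dk, dv = len(K[0]), len(V[0])
    state = [[0.0] * dv for _ in range(dk)]  # sum of k_s^T v_s over all earlier blocks
    out = []
    for start in range(0, len(Q), block):
        end = min(start + block, len(Q))
        for t in range(start, end):
            # Inter-block part: every earlier block contributes through the state.
            o = [sum(Q[t][i] * state[i][j] for i in range(dk)) for j in range(dv)]
            # Intra-block part: a small causal (masked) quadratic term within the block.
            for s in range(start, t + 1):
                w = dot(Q[t], K[s])
                for j in range(dv):
                    o[j] += w * V[s][j]
            out.append(o)
        # Fold this block's keys and values into the state for later blocks.
        for s in range(start, end):
            for i in range(dk):
                for j in range(dv):
                    state[i][j] += K[s][i] * V[s][j]
    return out
```

The blocked version gives the same result as the naive one for any block size. On a GPU, each block's work would typically become small matrix multiplies kept in fast on-chip memory, which is where a hand-written kernel earns its utilization.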

Another interesting observation is that both of these outstanding companies were already investing in large-model R&D before ChatGPT appeared. What is remarkable about these two models is that they do not follow the familiar "catching up with GPT-4" pattern. Instead, based on their own judgment of how the technology would evolve, they invested heavily, even made bets on innovation, and delivered their answers after a long run of sustained, solid work.

Nor are these answers just for themselves: both are trying to prove that an idea once confined to the lab can deliver what it promises when deployed at scale in real-world scenarios, and in doing so, let more people keep optimizing it.

This is reminiscent of when Transformer came along.

Back then, the attention mechanism was already popular in the lab, but the controversy still raged. It was Google, believing in its potential, that poured in compute and resources to turn it from a theoretical experiment into something realized in large-scale deployment. After that, people flocked to the proven route that led to today's boom.

Then the Transformer stacked more layers and used more compute; today MiniMax-01 is trying to overhaul the old attention mechanism. It all feels a bit like déjà vu. Even the famous paper title "Attention Is All You Need", coined by Google researchers at the time to emphasize the attention mechanism, fits MiniMax perfectly: linear attention is all you need.

"The model currently still retains 1/8th of the normal softmax attention. We are working on more efficient architectures that will eventually remove softmax attention entirely, making it possible to achieve unlimited context windows without computational overload."
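The "1/8 softmax attention" in the quote describes a hybrid stack. The sketch below reads it as one softmax-attention layer for every eight layers, placed after each run of seven linear-attention layers; that exact placement is an assumption here. It also shows why the ratio matters: only softmax layers keep a key-value cache that grows with context length, while linear layers carry a fixed-size state.

```python
def hybrid_layer_types(num_layers: int, softmax_every: int = 8) -> list[str]:
    """Label layers: every `softmax_every`-th layer uses softmax attention, the rest linear.

    The 1-in-8 default mirrors the quoted ratio; the placement is an assumption.
    """
    return [
        "softmax" if (i + 1) % softmax_every == 0 else "linear"
        for i in range(num_layers)
    ]


def growing_cache_share(layer_types: list[str]) -> float:
    """Fraction of layers whose KV cache grows with context (softmax layers only)."""
    return layer_types.count("softmax") / len(layer_types)


layers = hybrid_layer_types(16)
print(layers)
print(growing_cache_share(layers))
```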

Model Price

Input: 1 yuan per million tokens

Output: 8 yuan per million tokens

Basically use it with your eyes closed.
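For a rough budget, the price list turns into a one-line formula. The helper below is a hypothetical sketch; its defaults mirror the listed per-million-token rates, and the result is in the same currency as the price list.

```python
def request_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float = 1.0,
    output_price_per_million: float = 8.0,
) -> float:
    """Cost of one call, given prices per million input and output tokens."""
    return (
        input_tokens * input_price_per_million
        + output_tokens * output_price_per_million
    ) / 1_000_000


# Hypothetical call: a 1M-token prompt with a 2,000-token answer.
print(request_cost(1_000_000, 2_000))
```

Although output tokens cost eight times as much as input tokens, a long document with a short answer is still dominated by the input side: here the prompt accounts for 1.0 of the roughly 1.016 total.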

Model Resources

Code: https://github.com/MiniMax-AI/MiniMax-01

Model: https://huggingface.co/MiniMaxAI/MiniMax-Text-01, https://huggingface.co/MiniMaxAI/MiniMax-VL-01

Technical report: https://filecdn.minimax.chat/_Arxiv_MiniMax_01_Report.pdf

Web: https://hailuo.ai

API: https://www.minimaxi.com/

© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
