In-depth review of the 10 best text-to-speech projects

Open source text-to-speech (TTS) projects: giving applications a realistic "voice"

Amid the current wave of artificial intelligence, text-to-speech (TTS) technology has become an important bridge between the digital world and human senses. From dialogues with intelligent assistants, to voice guidance in navigation systems, to reading aids, TTS frees information from the limits of the written page, making its delivery more intuitive and efficient.

The open source spirit is driving rapid progress in TTS technology. More and more developers and researchers are joining the open source community to build and improve the TTS ecosystem. This article looks at a number of high-profile open source TTS projects, analyzes their technical characteristics and application potential, and helps readers pick the "voice" engine best suited to their needs from a wide range of choices.

Overview of open source TTS projects

The following open source TTS projects each have their own strengths and differ in language coverage, voice fidelity, features, and more. Choose according to your actual application scenario:

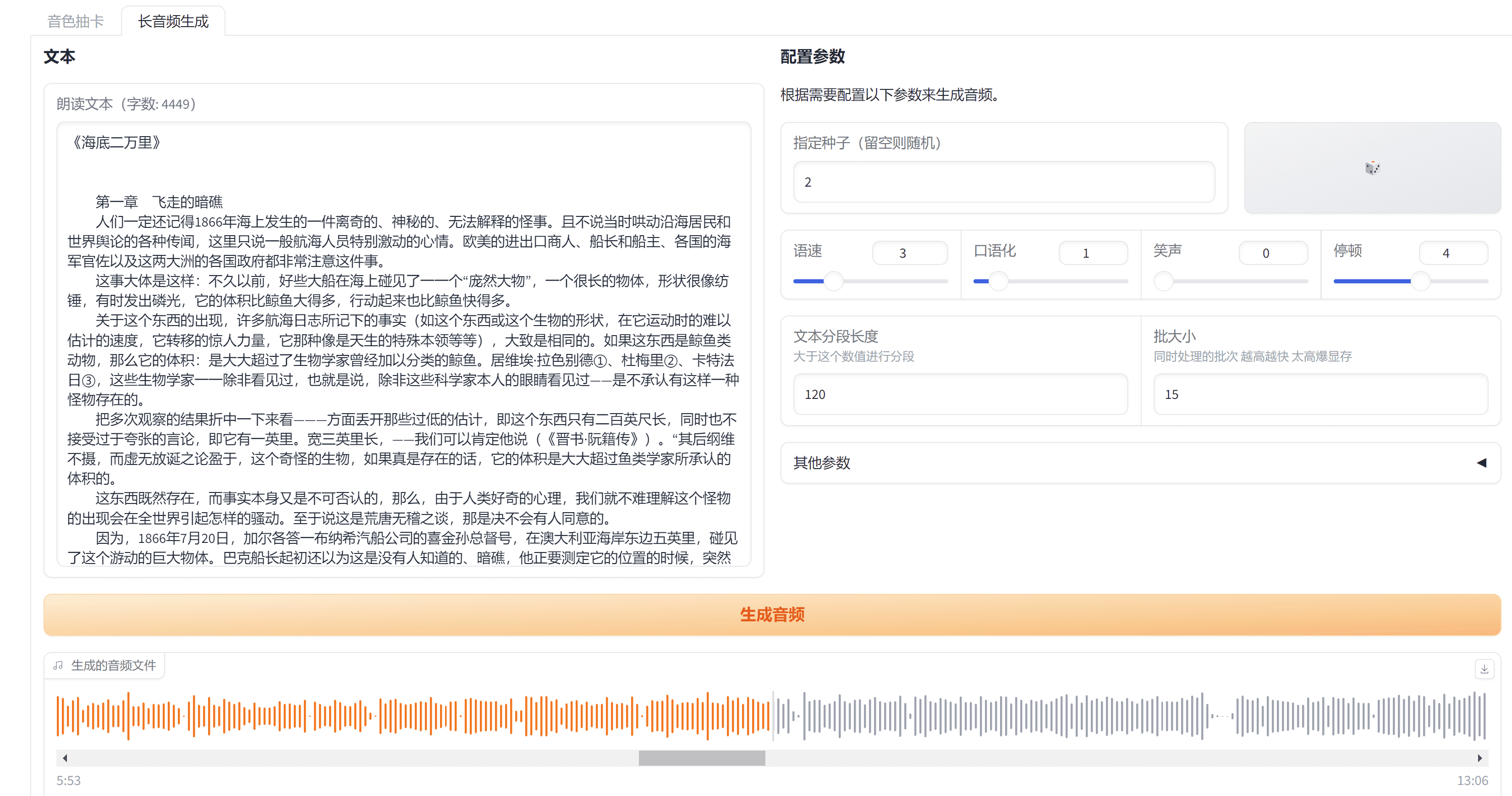

1. ChatTTS: natural speech synthesis for conversational scenarios

Project Features: ChatTTS focuses on optimizing speech synthesis for conversational scenarios. Its core strengths are excellent handling of mixed Chinese-English text and multi-speaker simulation. It supports six language configurations, including Chinese, English, and Japanese, and synthesizes mixed Chinese-English text smoothly and naturally, which is especially important for applications that handle multilingual dialog content. The multi-speaker feature lets ChatTTS simulate the voices of different characters, giving dialog systems richer expressiveness.

Potential application scenarios: Intelligent customer service systems, conversational AI assistants, multilingual learning tools, audiobook creation, and more.

Advantage: Optimized for conversational scenarios, natural and fluent reading of mixed Chinese-English text, support for multiple speaker voices.

Aspects to focus on: Compared with projects that pursue maximum audio fidelity, ChatTTS prioritizes conversational naturalness and functionality, so its audio quality may vary across scenarios.

GitHub address: https://github.com/2noise/ChatTTS

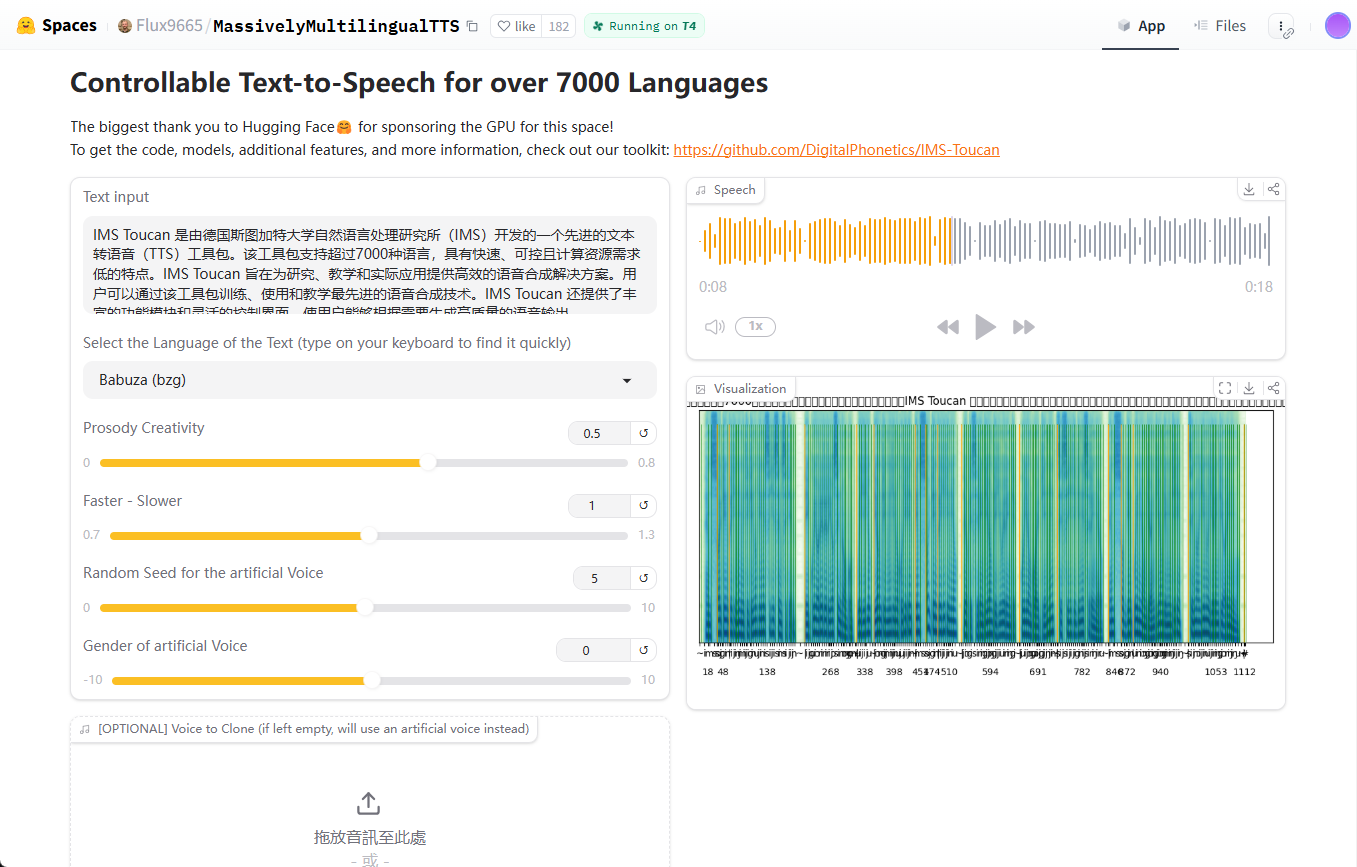

2. IMS Toucan: Synthesizing Capabilities Across Language Boundaries

Project Features: IMS Toucan is known for its extensive language support, claiming the ability to synthesize speech in over 7,000 languages. This coverage makes it well suited to building global applications. IMS Toucan also offers multi-speaker speech synthesis, which can simulate the voice characteristics of different speakers and provides a wide selection of voices.

Potential application scenarios: Global application deployment, multilingual education platforms, speech resource development for low-resource languages, linguistics research, etc.

Advantage: Extremely high language coverage, support for multiple speakers, active open source community.

Aspects to focus on: With such broad language coverage, audio quality in any given language may be less refined than in models that focus on fewer languages. Test the model on your target language to evaluate how well it is supported.

GitHub address: https://github.com/DigitalPhonetics/IMS-Toucan

3. Fish Speech: Excelling at Chinese Speech Synthesis

Project Features: Fish Speech specializes in Chinese, English, and Japanese speech synthesis and performs especially well on Chinese. The project states that its synthesis quality approaches that of a real human speaker, crediting training on about 150,000 hours of trilingual data. If your application is mainly in Chinese and you have high requirements for naturalness and expressiveness, Fish Speech is worth checking out.

Potential application scenarios: Chinese voice assistant, Chinese content creation platform, Chinese audiobooks, and Chinese voice navigation.

Advantage: Excellent Chinese speech synthesis quality, high naturalness, and a community with strong Chinese-language support.

Aspects to focus on: Language support is focused on Chinese, English, and Japanese; support for other languages may require further evaluation.

GitHub address: https://github.com/fishaudio/fish-speech

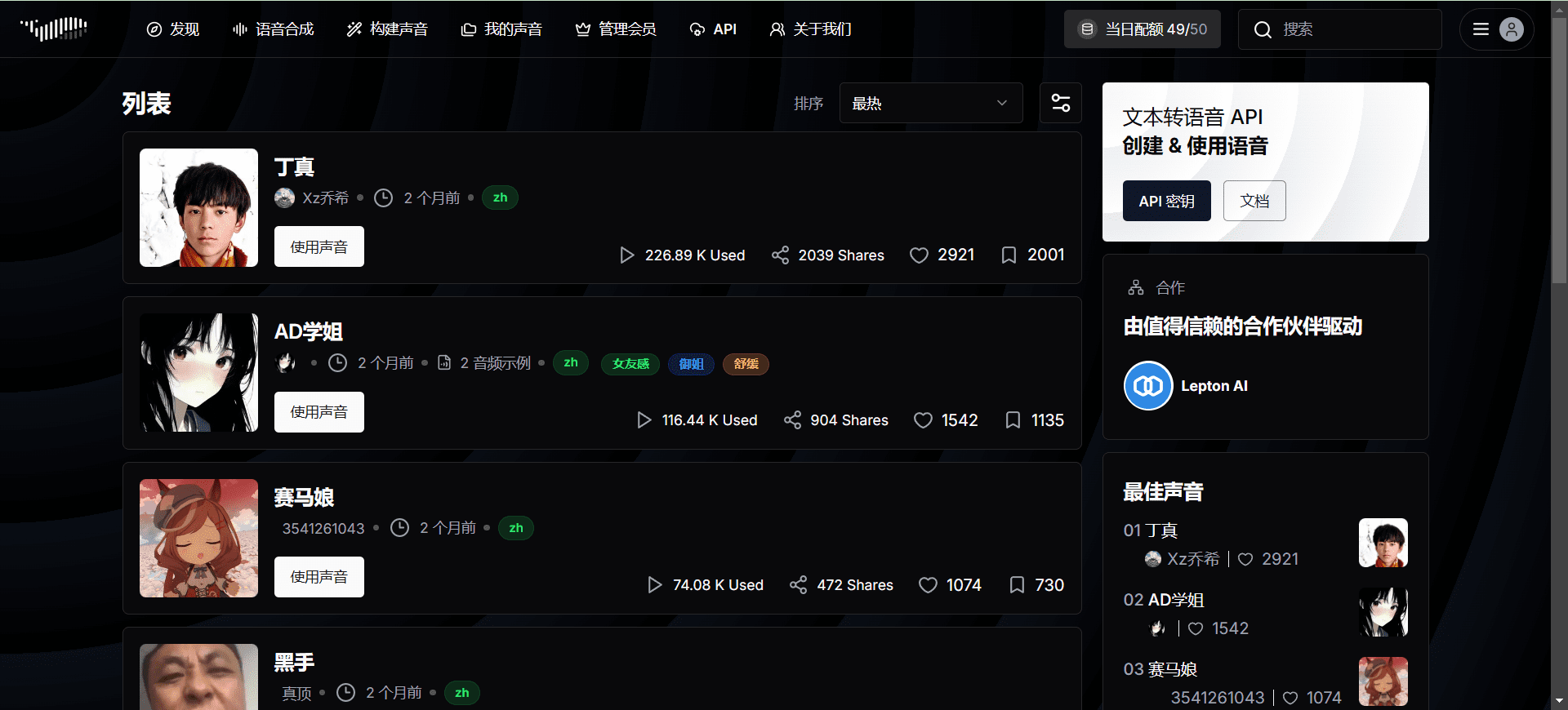

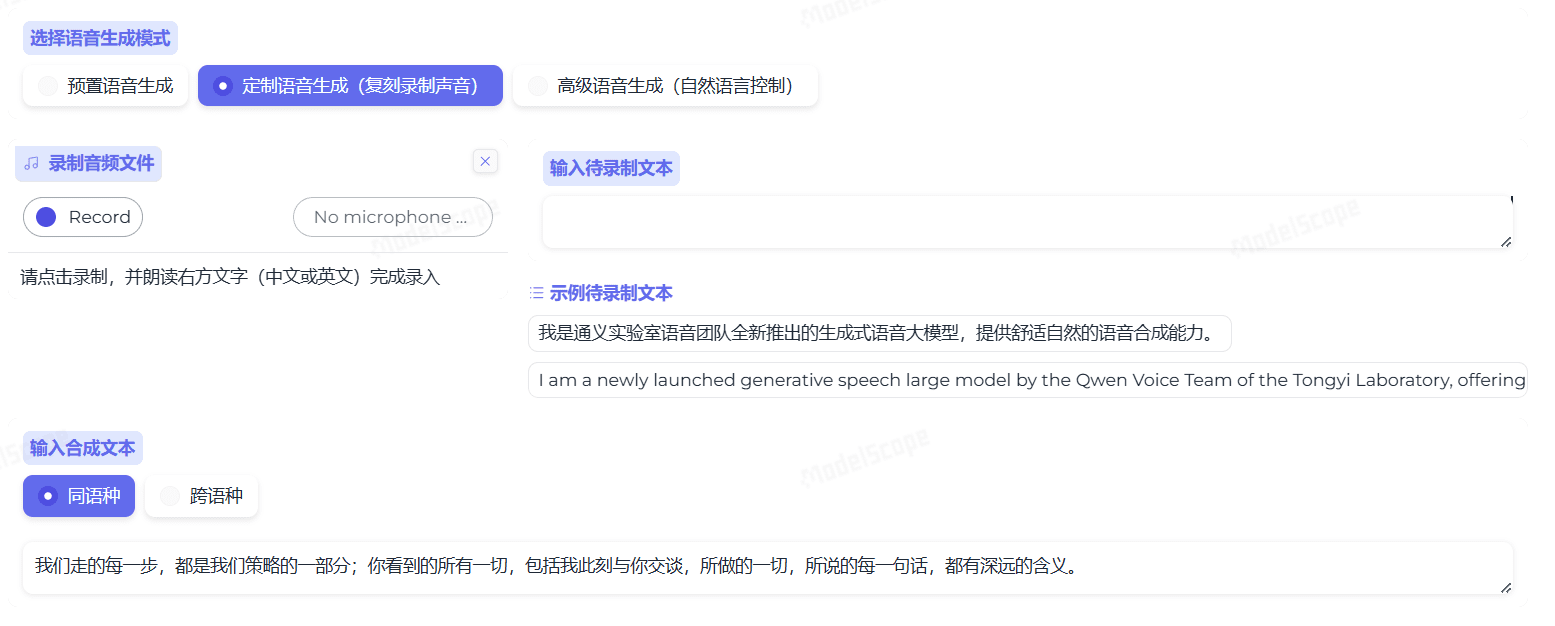

4. FunAudioLLM: A New Paradigm for LLM-Powered Voice Interaction

Project Features: FunAudioLLM is open-sourced by Alibaba. Its innovation lies in the deep integration of TTS with large language models (LLMs), aiming for more natural and fluent voice interaction between people and LLMs. Beyond high-quality speech generation, it emphasizes the synergy between speech understanding and speech generation in LLM applications, exploring the next generation of voice interaction paradigms. Of particular note is CosyVoice, which offers excellent fast voice cloning.

Potential application scenarios: New-generation smart speakers, smart assistants with advanced voice interaction capabilities, LLM-based dialog systems, smart home control centers, etc.

Advantage: Backed by Alibaba's substantial technical resources; its innovative LLM-integrated approach promises a more intelligent voice interaction experience.

Aspects to focus on: As a relatively new project, its models may still be maturing, and their stability is still being refined.

GitHub address: https://github.com/FunAudioLLM

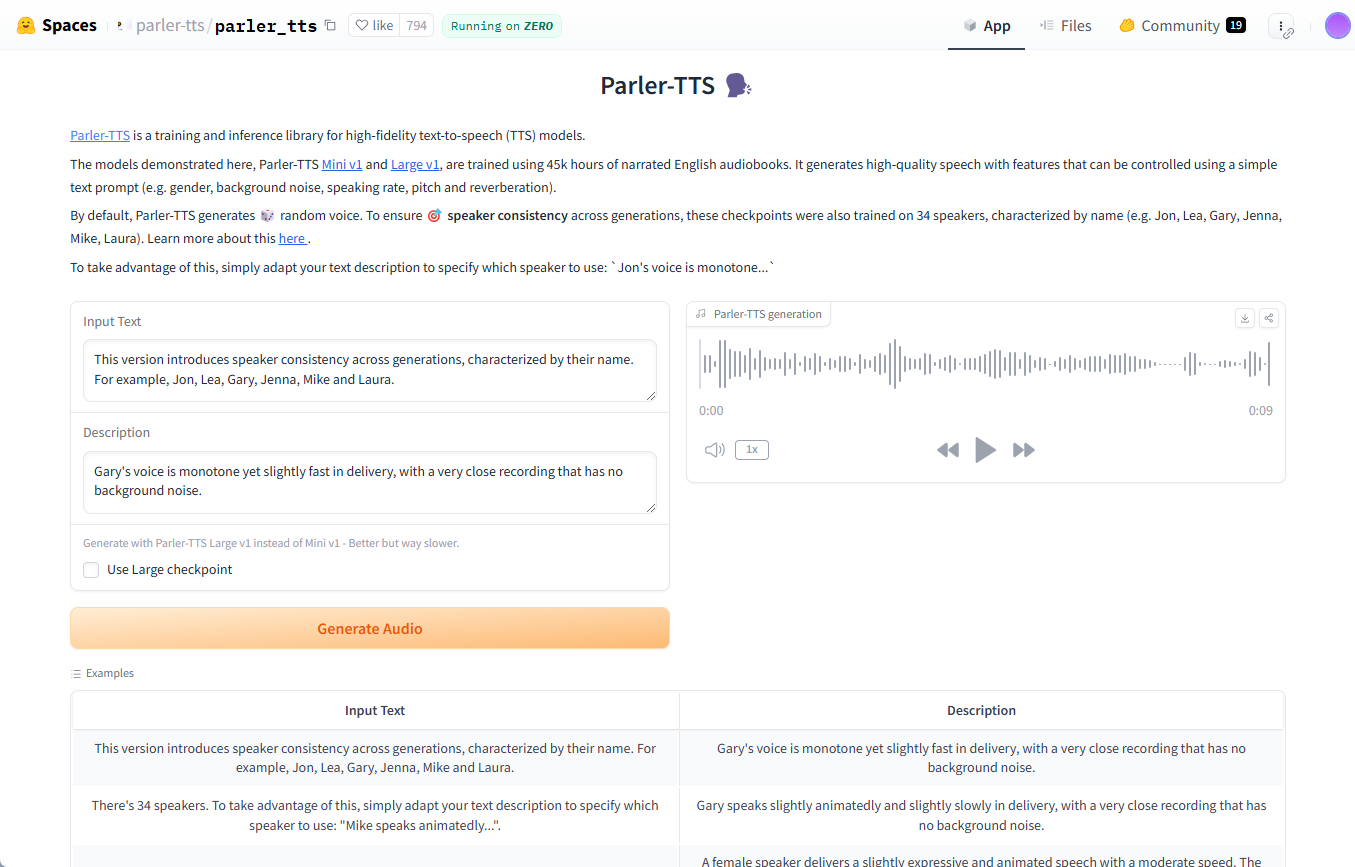

5. Parler-TTS: the fusion of lightweight and stylized speech

Project Features: Parler-TTS emphasizes lightweight, stylized speech synthesis. It generates high-quality, natural-sounding speech whose characteristics, such as gender, pitch, and speaking rate, follow the speaker style you specify. Its small footprint lets it run efficiently on resource-constrained devices, while style control gives the synthesized speech richer personalization and expressiveness.
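Parler-TTS steers voice style with a short natural-language description supplied alongside the text to be spoken. The helper below only assembles such a description from a few attributes. The class, field names, and sentence template are hypothetical, and model loading and generation are deliberately left out; consult the project README for the actual inference API.

```python
from dataclasses import dataclass


@dataclass
class VoiceStyle:
    """Hypothetical style attributes to be turned into a text description."""
    gender: str = "female"       # e.g. "female", "male"
    pitch: str = "moderate"      # e.g. "low", "moderate", "high"
    speed: str = "moderate"      # e.g. "slow", "moderate", "fast"
    quality: str = "very clear"  # free-form phrase about recording quality


def describe_voice(style: VoiceStyle) -> str:
    """Build a one-sentence style description for a description-conditioned TTS."""
    return (
        f"A {style.gender} speaker with a {style.pitch} pitch speaks at a "
        f"{style.speed} pace. The recording is {style.quality}."
    )


if __name__ == "__main__":
    print(describe_voice(VoiceStyle(gender="male", pitch="low", speed="slow")))
```

Keeping the template fixed and changing one attribute at a time makes it easier to hear what each part of the description actually controls.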

Potential application scenarios: Mobile applications, embedded systems, applications requiring personalized speech, voice cloning and style transfer research, etc.

Advantage: The model is lightweight, has low resource consumption, supports stylized speech generation, and is able to mimic the speaker's timbre characteristics.

Aspects to focus on: As a lightweight model, it may not match some larger models in peak audio quality.

GitHub address: https://github.com/huggingface/parler-tts

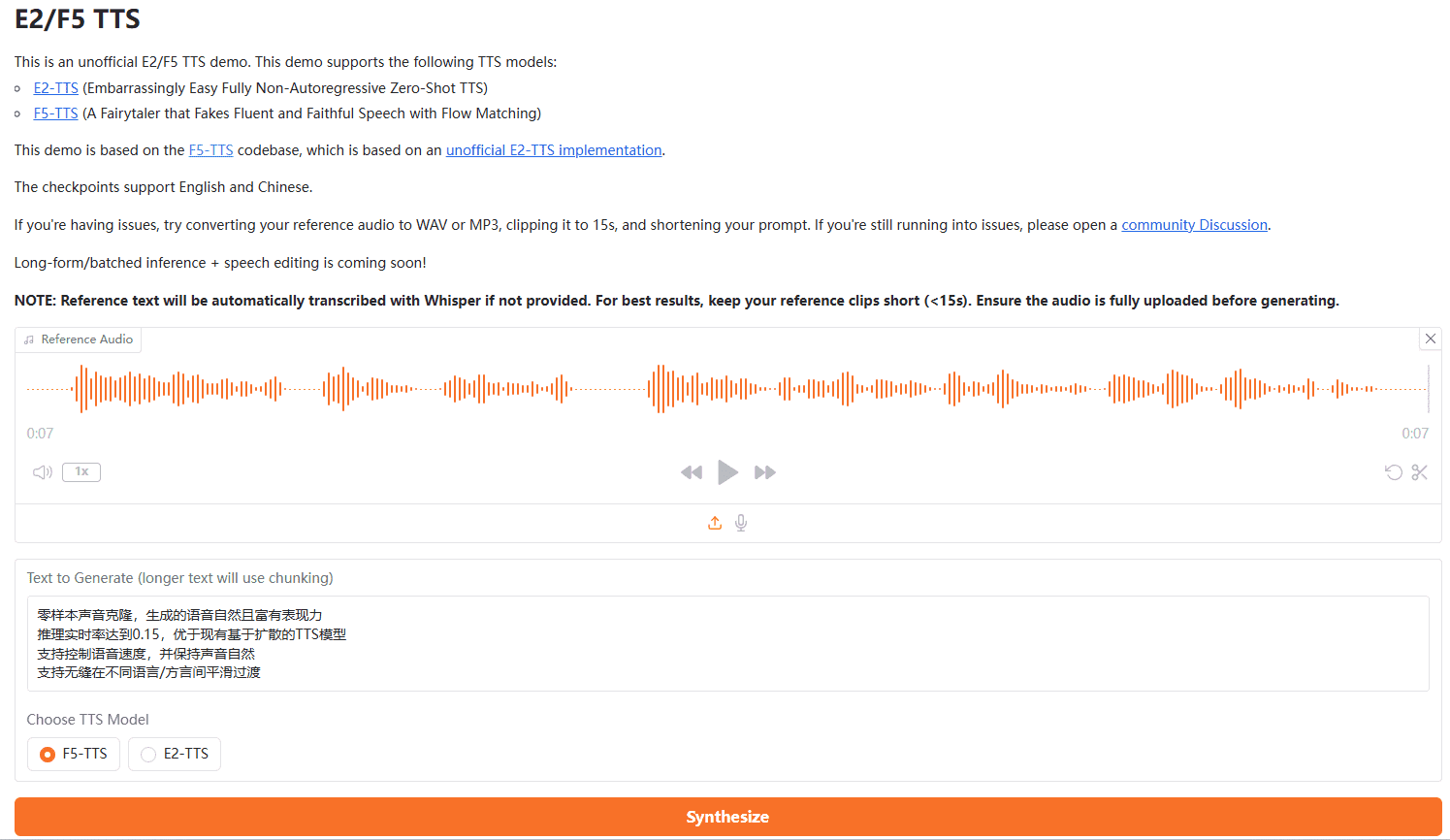

6. F5-TTS: real-time, efficient zero-shot voice cloning

Project Features: F5-TTS, jointly open-sourced by Shanghai Jiao Tong University and the University of Cambridge, focuses on zero-shot voice cloning and real-time speech synthesis. Its inference real-time factor (RTF) reaches 0.15. RTF is the ratio of synthesis time to the duration of the generated audio, so smaller values mean faster synthesis; RTF = 0.15 means it takes only 0.15 seconds to synthesize 1 second of speech. Synthesis is therefore much faster than real time, which meets the needs of latency-sensitive applications. F5-TTS also supports speech rate control and smooth transitions across languages/dialects.
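Because RTF is just synthesis time divided by output duration, it is easy to measure for any engine on your own hardware. In the sketch below, `synthesize` stands for a hypothetical callable that takes text and returns the length of the generated audio in seconds; the dummy engine only sleeps, so its numbers say nothing about F5-TTS itself.

```python
import time
from typing import Callable


def real_time_factor(synthesis_seconds: float, audio_seconds: float) -> float:
    """RTF = time spent synthesizing / duration of the audio produced."""
    if audio_seconds <= 0:
        raise ValueError("audio duration must be positive")
    return synthesis_seconds / audio_seconds


def measure_rtf(synthesize: Callable[[str], float], text: str) -> float:
    """Time one call of a hypothetical synthesize(text) -> audio seconds."""
    start = time.perf_counter()
    audio_seconds = synthesize(text)
    elapsed = time.perf_counter() - start
    return real_time_factor(elapsed, audio_seconds)


if __name__ == "__main__":
    # The figure quoted above: 0.15 s of compute for 1 s of speech.
    print(real_time_factor(0.15, 1.0))  # 0.15

    # Dummy engine: pretends to produce 2 s of audio in about 0.1 s.
    def fake_engine(text: str) -> float:
        time.sleep(0.1)
        return 2.0

    print(f"measured RTF ≈ {measure_rtf(fake_engine, 'hello'):.2f}")
```

An RTF below 1 means faster than real time; at RTF 0.15, a 10-second utterance would take about 1.5 seconds to generate. In practice, average over several runs and discard the first (warm-up) call.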

Potential application scenarios: Real-time voice interaction system, game character dubbing, live interactive applications, multi-language conferencing system, instant voice translation, etc.

Advantage: Fast real-time inference, zero-shot voice cloning, speech rate control, and smooth transitions across languages.

Aspects to focus on: In zero-shot cloning, both audio quality and voice similarity depend on the quality of the reference audio.

GitHub address: https://github.com/SWivid/F5-TTS

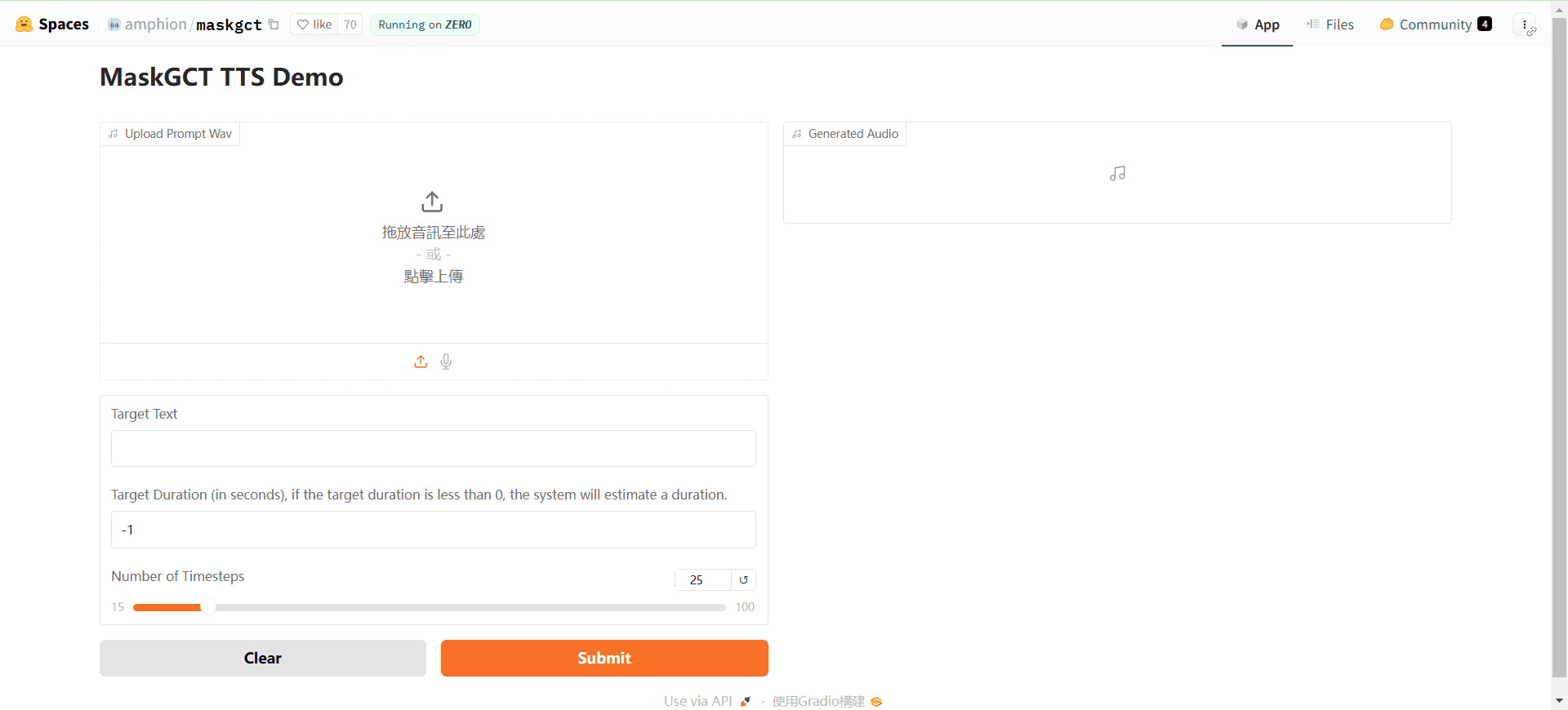

7. MaskGCT: Versatile zero-shot TTS with a non-autoregressive architecture

Project Features: MaskGCT is a fully non-autoregressive TTS model with strong zero-shot capabilities. It is feature-rich, supporting cross-language translation and dubbing, voice cloning, language conversion, emotion control, and other advanced functions. The non-autoregressive architecture gives it higher generation speed and efficiency while maintaining synthesis quality, and its diverse features broaden its range of applications.
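The speed advantage of masked non-autoregressive models comes from filling many output tokens per forward pass instead of one token per pass. The toy loop below illustrates MaskGIT-style iterative parallel decoding with a cosine masking schedule: each step predicts every masked position at once and commits only the most confident predictions. The predictor is a random stand-in for a trained network, and the schedule and step count are assumptions for illustration, not MaskGCT's actual configuration.

```python
import math
import random
from typing import Callable, List, Optional, Tuple

Token = int
Prediction = Tuple[Token, float]  # (predicted token, confidence)


def iterative_parallel_decode(
    length: int,
    steps: int,
    predict: Callable[[List[Optional[Token]]], List[Prediction]],
) -> List[Optional[Token]]:
    """Fill `length` masked slots in at most `steps` passes (MaskGIT-style sketch)."""
    tokens: List[Optional[Token]] = [None] * length  # None marks a masked slot
    for step in range(1, steps + 1):
        masked = [i for i, t in enumerate(tokens) if t is None]
        if not masked:
            break
        # Cosine schedule: how many slots should remain masked after this step
        # (reaches 0 at the final step, so every slot ends up filled).
        still_masked = int(length * math.cos(math.pi / 2 * step / steps))
        n_fill = len(masked) - still_masked
        preds = predict(tokens)  # one forward pass predicts all positions in parallel
        # Commit the most confident predictions among the masked slots.
        masked.sort(key=lambda i: preds[i][1], reverse=True)
        for i in masked[:n_fill]:
            tokens[i] = preds[i][0]
    return tokens


def make_dummy_predictor(seed: int = 0):
    """Random stand-in for a trained model; also counts forward passes."""
    rng = random.Random(seed)
    calls = [0]

    def predict(tokens: List[Optional[Token]]) -> List[Prediction]:
        calls[0] += 1
        return [(pos, rng.random()) for pos in range(len(tokens))]

    return predict, calls


if __name__ == "__main__":
    predict, calls = make_dummy_predictor()
    out = iterative_parallel_decode(length=100, steps=8, predict=predict)
    # 100 tokens from 8 passes; token-by-token decoding would need 100 passes.
    print(len(out), calls[0])
```

The number of forward passes is bounded by `steps` rather than by the output length, which is the core reason such models can generate faster than autoregressive ones.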

Potential application scenarios: Multi-language film dubbing, voice content localization, personalized voice customization service, voice copyright protection technology, emotional voice interaction system, cross-language communication tools, etc.

Advantage: Non-autoregressive architecture, fast generation, rich functionality, support for cross-language, speech cloning, emotion control and many other advanced features.

Aspects to focus on: The feature set is complex, and fully mastering its advanced features may take some technical skill.

GitHub address: https://github.com/open-mmlab/Amphion/tree/main/models/tts/maskgct

8. OuteTTS (formerly Smol TTS): a lightweight and flexible TTS built on the LLaMA architecture

Project Features: OuteTTS (also often referred to as Smol TTS) is a zero-shot voice cloning model built on the LLaMA architecture. Its main features are that it is lightweight, flexible, and easy to deploy and use. For developers who want to try zero-shot cloning quickly without adopting overly complex models, OuteTTS is a worthwhile entry-level option.

Potential application scenarios: Rapid development of lightweight applications, prototyping, customization of personal voice assistants, and experimentation with voice cloning techniques.

Advantage: Built on the LLaMA architecture, lightweight, easy to deploy, and supports zero-shot voice cloning.

Aspects to focus on: As a lightweight model, its audio quality and feature set may be relatively limited. The project often appears under both names, OuteTTS and Smol TTS; both refer to the same project.

GitHub address: https://github.com/edwko/OuteTTS

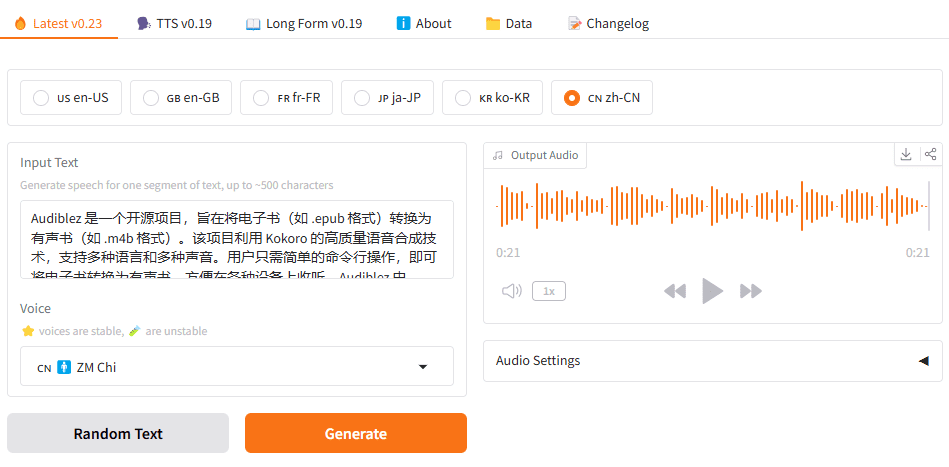

9. Kokoro: a compact model with few parameters and multilingual support

Project Features: Kokoro is an open source TTS model with only 82 million parameters, trained on a relatively small audio dataset. Despite its small size, Kokoro offers good multilingual support, demonstrating the potential of small models in multilingual TTS. If you need to deploy multilingual TTS in a resource-constrained environment, Kokoro may be a viable option.

Potential application scenarios: Applications on low-resource devices, embedded systems, rapidly deployable multilingual capabilities, cost-sensitive TTS solutions, and more.

Advantage: Few parameters, low resource requirements, multilingual support, and easy deployment.

Aspects to focus on: Limited by model size and amount of training data, sound quality and naturalness may fall short of large models.

GitHub address: https://github.com/hexgrad/kokoro

10. Llasa: high-fidelity zero-shot voice cloning

Project Features: Llasa is an open source zero-shot voice cloning and TTS model from the audio lab of the Hong Kong University of Science and Technology (HKUST). It supports both speech generation from plain text and high-precision voice cloning from a given reference recording. Llasa focuses on the fidelity and naturalness of cloned speech, aiming for highly realistic timbre reproduction under zero-shot conditions. If you have high demands on voice cloning quality, Llasa is worth studying and applying.
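Cloning fidelity is often quantified as speaker similarity: the cosine similarity between speaker embeddings of the reference recording and of the cloned output, both extracted by a speaker-verification model. The sketch below covers only that scoring step. The embedding vectors are made-up placeholders, and the choice of embedding model is left open.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length embeddings (range -1 to 1)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    # Placeholder embeddings; real ones come from a speaker-verification model.
    reference = [0.2, 0.7, 0.1, 0.4]
    cloned = [0.25, 0.65, 0.05, 0.45]
    print(f"speaker similarity: {cosine_similarity(reference, cloned):.3f}")
```

Scores are only comparable when every system is evaluated with the same embedding model and the same reference clips.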

Potential application scenarios: High-precision voice cloning, character dubbing and voice customization, personalized voice content generation, voice content copyright protection, and emotional voice synthesis.

Advantage: High-quality zero-shot voice cloning with high naturalness and speaker similarity, developed by HKUST's audio lab, which has strong technical expertise.

Aspects to focus on: The model is relatively large (on the order of 1 billion parameters), which may place higher demands on computational resources.
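A quick first estimate of hardware needs is parameter count multiplied by bytes per parameter. This covers the weights only; activations, caches, and framework overhead come on top, so treat the result as a lower bound. The precisions listed are common choices, not necessarily what either project ships.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gb(num_params: int, dtype: str = "fp16") -> float:
    """Approximate memory for model weights alone, in GB (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    models = [("Kokoro (82M)", 82_000_000), ("Llasa-1B (~1B)", 1_000_000_000)]
    for name, params in models:
        for dtype in ("fp32", "fp16"):
            print(f"{name:15s} {dtype}: {weight_memory_gb(params, dtype):.2f} GB")
```

By this estimate, Kokoro's 82 million parameters need roughly 0.33 GB at fp32, while a 1-billion-parameter model needs about 4 GB at fp32 or 2 GB at fp16, which helps explain why the smaller model fits CPU-only and embedded settings more easily.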

Model Download Address: https://huggingface.co/HKUSTAudio/Llasa-1B

How to choose the right open source TTS project for you?

With so many great open source TTS projects out there, it's critical to choose the one that best meets your needs. Here are some key considerations to help you make an informed decision:

- Language coverage: Which languages does your application need to support? Prefer projects that support the target language.

- Voice quality and naturalness: What are your expectations for the audio quality and naturalness of the synthesized speech? Listen to each project's demos to compare how the models sound, and form an overall judgment by combining subjective metrics (e.g., MOS, Mean Opinion Score) with objective measurements.

- Feature requirements: Does your application need advanced features such as zero-shot cloning, multiple speakers, emotion control, or speech rate adjustment? Choose a project whose features match your actual needs.

- Performance and efficiency considerations: Does your application scenario have real-time requirements? What are the constraints on the inference speed and resource consumption of the models? For example, real-time interactive applications need to choose models with fast inference speed; resource-constrained devices need to consider lightweight models.

- Ease of use and documentation quality: Is the project's documentation thorough and easy to understand? Is the project easy to deploy and use? For newcomers, choosing a project with clear documentation that is easy to get started with can significantly reduce the learning curve.

- Community activity and maintenance: Is the project's open source community active? Are there ongoing updates and maintenance? An active community usually means more timely technical support and faster iteration.

- License: Check each project's open source license to see whether it allows commercial use and whether commercial use is subject to specific terms. Common licenses include MIT, Apache 2.0, and GPL; MIT and Apache 2.0 are permissive, while the GPL requires derivative works you distribute to be released under the same license. Pretrained model weights are sometimes released under a different, more restrictive license than the code, so check both.

- Hardware resource requirements: Different TTS models have different hardware resource requirements. Some large models may require high performance GPUs to run smoothly, while lightweight models can run in a CPU environment. Choose the right model according to your hardware conditions.
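The hard constraints among these criteria can be turned into a simple first-pass filter before any listening tests. The `Candidate` and `Requirements` fields, the thresholds, and the two sample entries below are all hypothetical placeholders; fill them in from each project's documentation and your own measurements.

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    name: str
    languages: set[str]
    rtf: float                 # measured real-time factor on your hardware
    weights_gb: float          # memory needed for the model weights
    commercial_use_ok: bool    # per the licenses of both code and weights
    features: set[str] = field(default_factory=set)


@dataclass
class Requirements:
    languages: set[str]
    max_rtf: float
    max_weights_gb: float
    needs_commercial_use: bool
    features: set[str] = field(default_factory=set)


def shortlist(cands: list[Candidate], req: Requirements) -> list[str]:
    """Return names of candidates meeting every hard requirement, fastest first."""
    ok = [
        c for c in cands
        if req.languages <= c.languages          # all required languages covered
        and c.rtf <= req.max_rtf
        and c.weights_gb <= req.max_weights_gb
        and (c.commercial_use_ok or not req.needs_commercial_use)
        and req.features <= c.features           # all required features present
    ]
    return [c.name for c in sorted(ok, key=lambda c: c.rtf)]


if __name__ == "__main__":
    cands = [  # hypothetical entries, not real measurements
        Candidate("model_a", {"zh", "en"}, 0.3, 2.0, True, {"zero_shot_cloning"}),
        Candidate("model_b", {"en"}, 0.1, 0.4, True),
    ]
    req = Requirements({"zh", "en"}, max_rtf=0.5, max_weights_gb=4.0,
                       needs_commercial_use=True, features={"zero_shot_cloning"})
    print(shortlist(cands, req))
```

Soft criteria such as documentation quality and community activity do not reduce well to thresholds, so they are better judged by hand on the resulting shortlist.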

We recommend weighing the factors above and carefully evaluating and testing each project against your specific application scenario and technical capabilities. Many projects provide pre-trained models and demos, so you can try them yourself and choose the one that fits your needs best.
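For the subjective side of such tests, a Mean Opinion Score is the average of listener ratings on a 1 to 5 scale, usually reported with a confidence interval. The sketch uses a normal approximation (1.96 standard errors) for an approximate 95% interval, a simplification that assumes enough independent ratings; the ratings shown are invented for illustration.

```python
import math
import statistics


def mos_with_ci(ratings: list[float], z: float = 1.96) -> tuple[float, float]:
    """Return (mean opinion score, half-width of an approximate 95% CI)."""
    if len(ratings) < 2:
        raise ValueError("need at least two ratings")
    if any(not 1 <= r <= 5 for r in ratings):
        raise ValueError("ratings must be on the 1-5 scale")
    mean = statistics.fmean(ratings)
    # Standard error of the mean, scaled by z for the interval half-width.
    half_width = z * statistics.stdev(ratings) / math.sqrt(len(ratings))
    return mean, half_width


if __name__ == "__main__":
    ratings = [4, 5, 4, 3, 4, 5, 4, 4]  # invented listener scores
    mos, ci = mos_with_ci(ratings)
    print(f"MOS = {mos:.2f} ± {ci:.2f}")
```

When two systems' intervals overlap heavily, the listening test probably needs more raters or more sentences before declaring a winner.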

Concluding remarks

The proliferation of open source TTS projects has energized speech technology innovation and provided developers with a wealth of choices. Whether you are a commercial developer, an academic researcher or a technology enthusiast, you can find the ideal "voice" engine in the open source community to give your application a more vivid and natural voice interaction experience. With the continuous progress of technology, we have reason to expect that more innovations will emerge in the open source TTS field in the future, and continue to promote the popularization and application of voice technology.

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
