FREE!!! GitHub joins forces with Azure to make API calls to top closed-source and open-source models, including o1, free to developers

The dense code on the screen was interspersed with configuration information for various model APIs, and the coffee on the table had long since gotten cold.

This is a familiar scene for many developers trying to build AI apps: cumbersome environment configuration, costly APIs, and insufficient documentation support.

"How great it would be to have a unified platform that makes it easy for all developers to use a variety of AI models."

This wish, today, has finally become a reality.

GitHub has officially launched the GitHub Models service, bringing an AI development revolution to over 100 million developers worldwide.

Let's dive into this game-changing new product.

A quiet development revolution

In today's rapidly evolving AI landscape, the role of the developer is changing profoundly. GitHub has officially announced the GitHub Copilot Free Program, which is now available to all users!

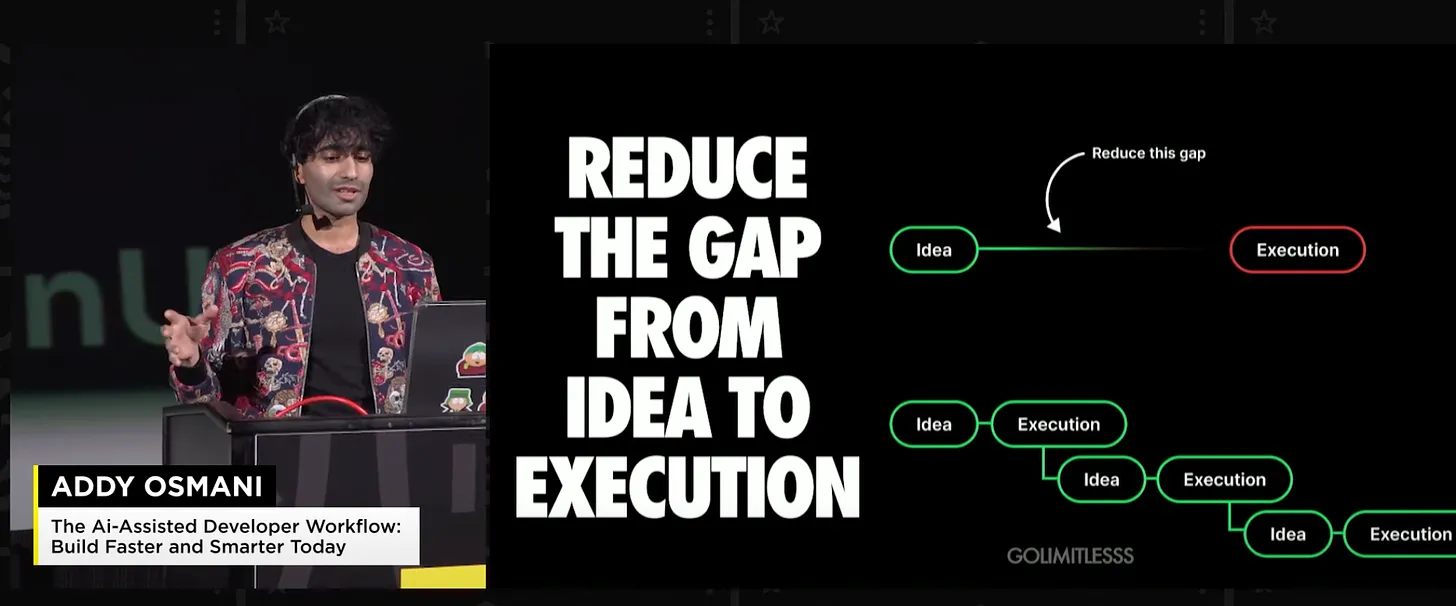

The shift from the traditional "coder" to the "AI engineer" is not just a change in title, but a revolution in the entire software development paradigm.

The emergence of GitHub Models captures this historic turning point.

Why GitHub Models?

When you need to use AI models in your projects, you no longer have to:

- Switch between platforms to find the right model

- Configure different environments and dependencies for each model

- Worry about high API call costs

- Get bogged down by complex deployment processes

All these problems are elegantly solved in GitHub Models.

Powerful models at your fingertips

A deluxe model lineup

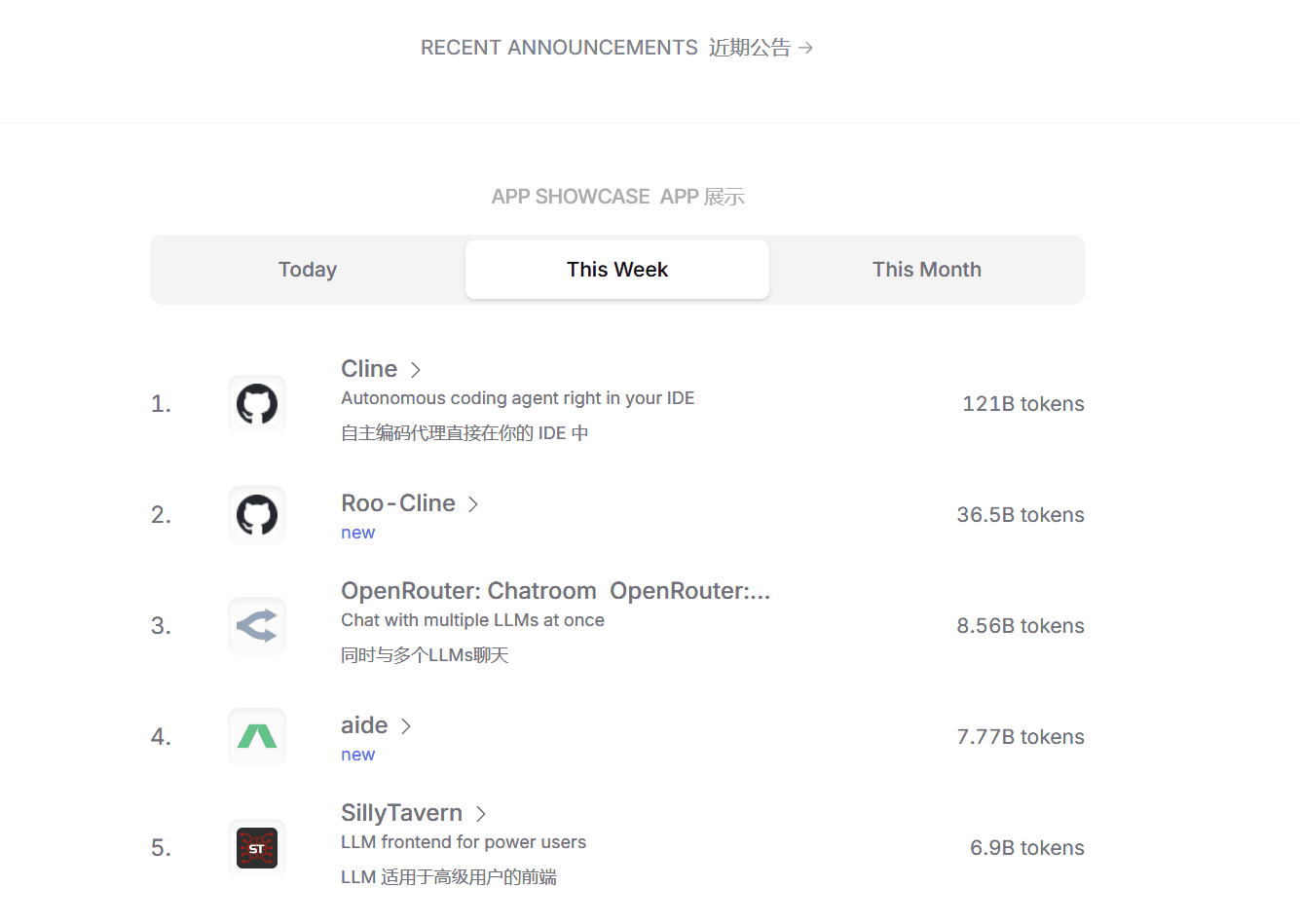

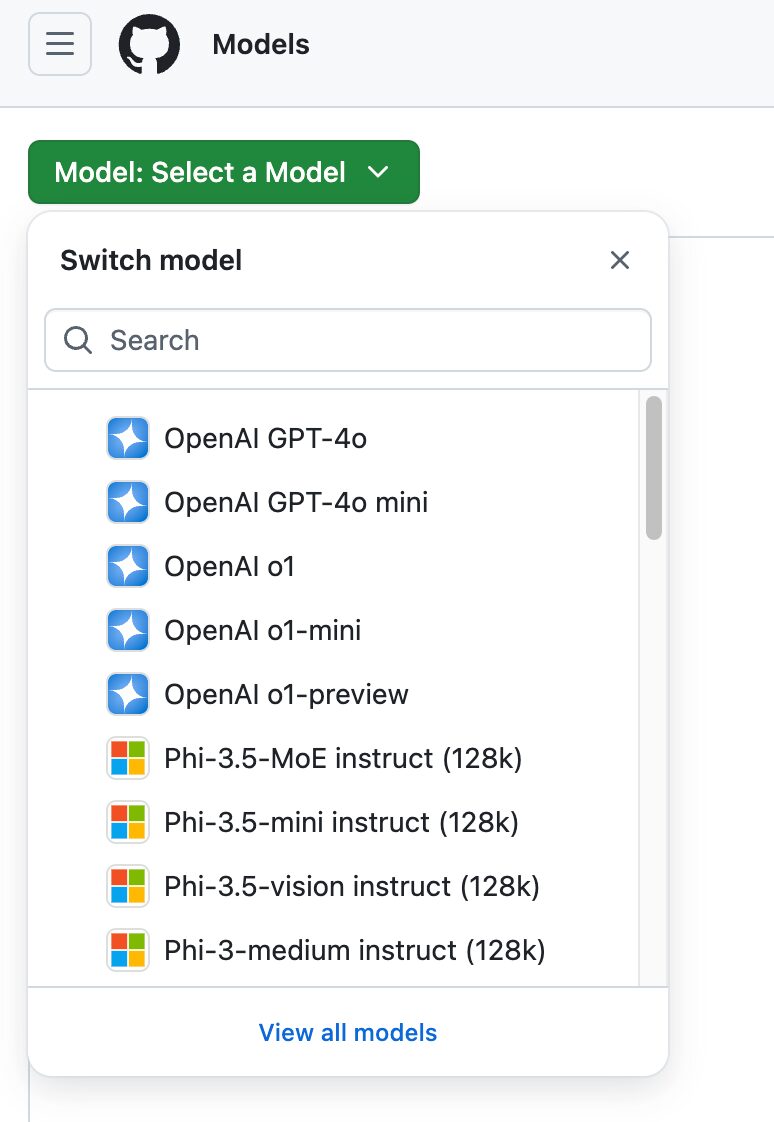

GitHub Models offers an impressive library of models:

- Llama 3.1: Meta's latest open-source large model, which excels in several benchmarks

- GPT-4o: one of OpenAI's most powerful commercial models with multimodal input support

- GPT-4o mini: a more lightweight version for applications that require a fast response time

- Phi 3: Microsoft's efficient model that performs amazingly well on specific tasks

- Mistral Large 2: Known for low latency, suitable for real-time application development

Each model has its own unique advantages, and developers can choose the one that best suits their specific needs.

Surprisingly easy to use

Imdoaa from our group, a tech lead at a startup, shared his experience using GitHub Models:

"Previously, we had particular headaches in selecting and testing AI models. Either we needed to pay high fees or spend a lot of time deploying open source models. With GitHub Models, none of these problems exist anymore. We can quickly compare the results of different models in playground and find the one that best suits our needs. The best part is that the entire process from experimentation to deployment is done within the GitHub ecosystem and the experience is unbelievably smooth."

In-depth understanding of the three core strengths

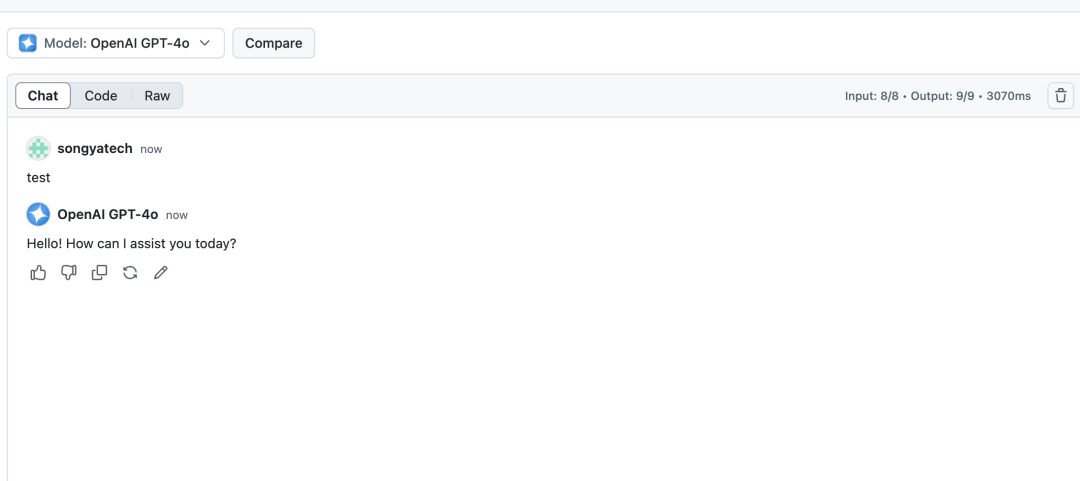

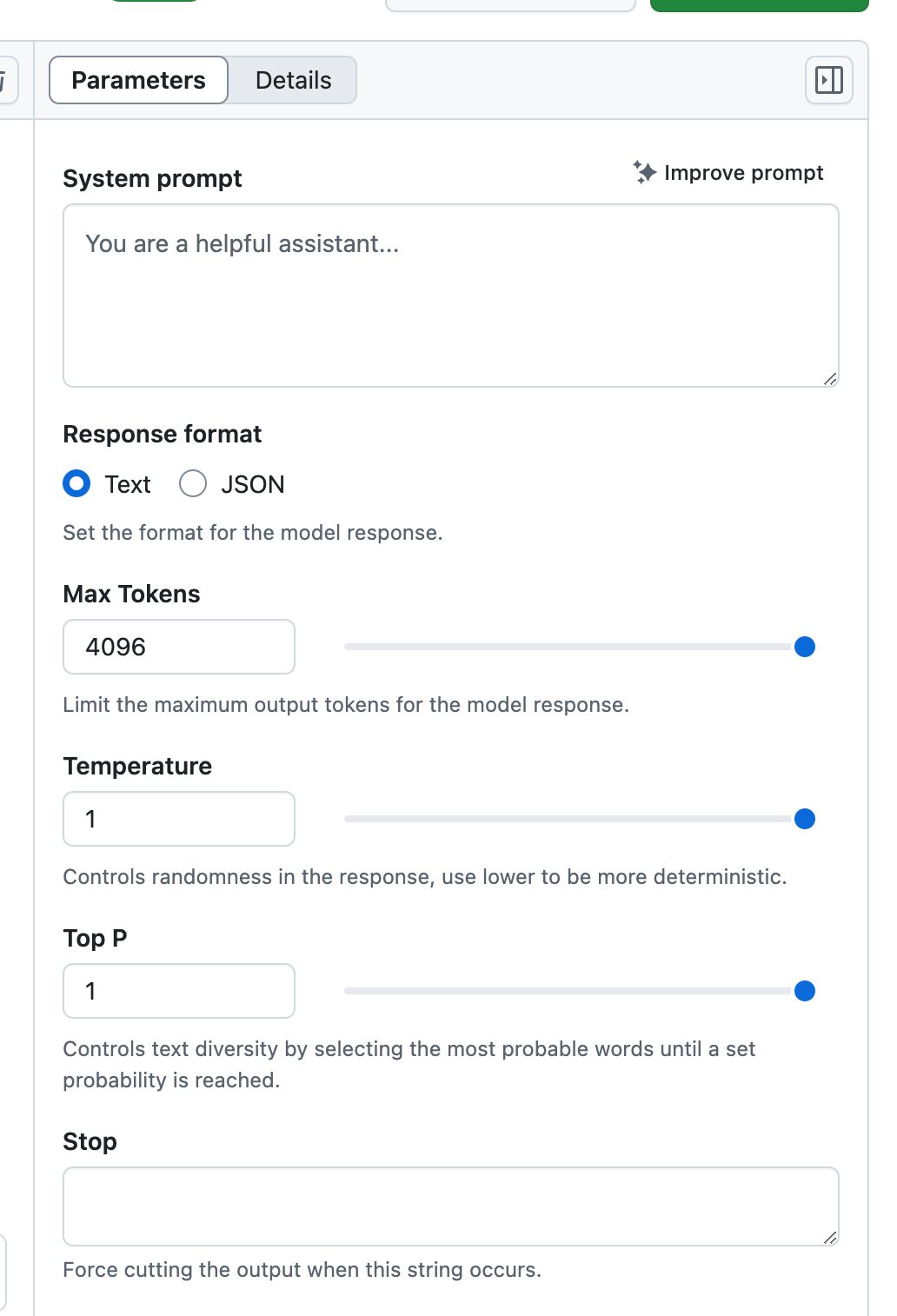

1. Revolutionary Playground environment

Playground is more than a simple model testing environment; it is a complete AI lab:

- Real-time parameter adjustment:

  - Temperature control

  - Maximum token count setting

  - Top-p sampling adjustment

  - System prompt optimization

- Multi-model comparison: Multiple models can be opened at the same time for comparison testing, making it easy to see how different models perform on the same input.

- History saving: All experiments are recorded for subsequent review and optimization.
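The Playground parameters listed above correspond to fields in an OpenAI-style chat completions request. The following is a minimal sketch, assuming the service accepts that request format (the article's own client setup uses the OpenAI SDK). `build_chat_request` is a hypothetical helper, the model name used in the usage note is an assumed identifier, and the range checks follow OpenAI's documented ranges, which other models may not share.

```python
def build_chat_request(model, system_prompt, user_prompt,
                       temperature=0.7, max_tokens=256, top_p=1.0):
    """Build an OpenAI-style chat completions payload (hypothetical helper)."""
    # Assumed ranges, based on OpenAI's documented limits; other models may differ.
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature must be between 0 and 2")
    if not 0.0 < top_p <= 1.0:
        raise ValueError("top_p must be in (0, 1]")
    if max_tokens < 1:
        raise ValueError("max_tokens must be positive")
    return {
        "model": model,
        "messages": [
            # The system prompt steers overall behavior; the user prompt is the query.
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
        "temperature": temperature,  # higher values give more varied output
        "max_tokens": max_tokens,    # upper bound on generated tokens
        "top_p": top_p,              # nucleus sampling cutoff
    }
```

Calling `build_chat_request("gpt-4o-mini", "You are terse.", "Hi", temperature=0.2)` returns a dictionary that can be serialized to JSON and sent as the request body. Building the same payload with different `model` values is one way to reproduce the Playground's side-by-side comparison in code.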

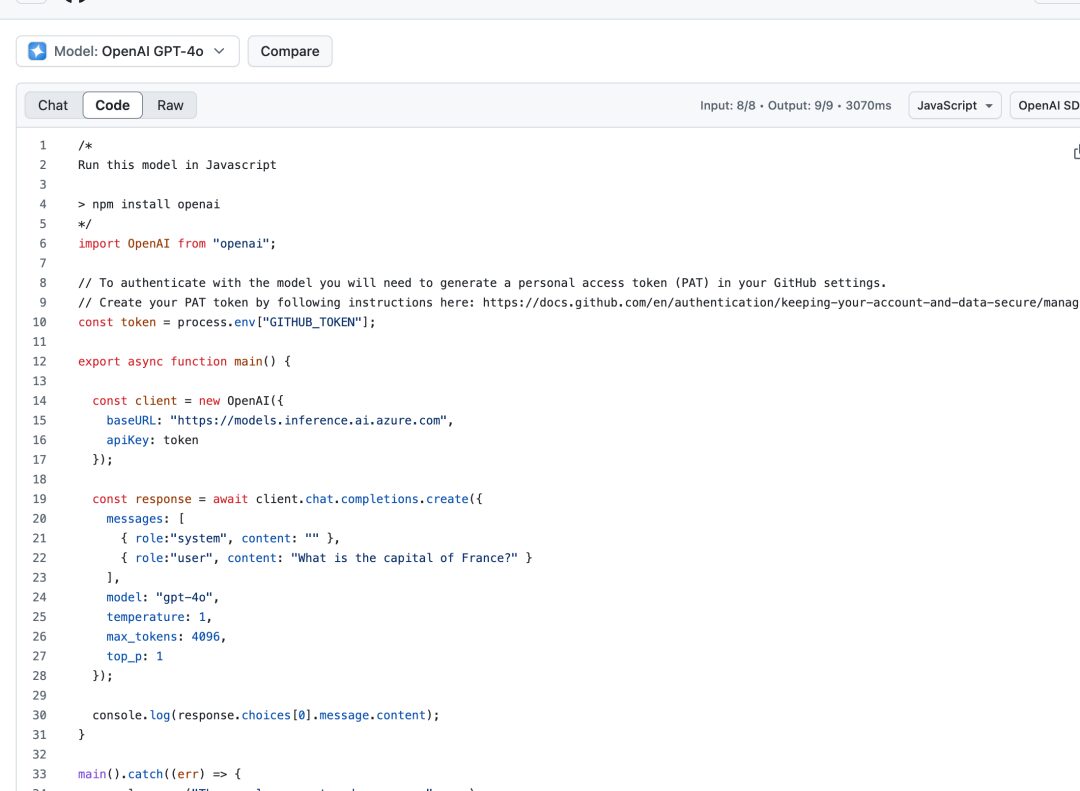

2. Seamless Codespaces integration

Codespaces integration makes the development process incredibly smooth:

- Pre-configured environment: All necessary dependencies and configurations are ready out of the box.

- Multi-language support: Sample code and SDKs are provided for Python, JavaScript, Java, and other mainstream languages.

- Version control: Direct integration with GitHub repositories makes managing code changes easier.

3. Enterprise-level deployment assurance

With Azure AI support, enterprise-level deployments are made simple and reliable:

- Global deployment: Over 25 Azure regions to choose from, ensuring fast access for users worldwide.

- Security and compliance: Conforms to enterprise-level security standards and supports data encryption and access control.

- Scalability: Resources scale automatically based on demand to ensure service stability.

In-depth analysis of usage quotas

The design of usage quotas for different versions reflects GitHub's product strategy:

Free version and Copilot Individual

- Requests per minute: 10

- Daily quota: 50

- Token limits:

  - Input: 8000

  - Output: 4000

- Concurrent requests: 2

This quota is suitable for individual developers for project validation and learning.

Copilot Business

- Keep the same concurrency and token limits

- Daily requests raised to 100

- Suitable for the development needs of small teams

Copilot Enterprise

- Requests per minute: 15

- Daily quota: 150

- Larger token limits:

  - Input: 16000

  - Output: 8000

- Concurrent requests: 4

- Suitable for enterprise-level application development
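Because the quotas above are small, it can help to guard against them on the client side rather than discover them through rejected requests. Below is a minimal sketch of such a guard. `QuotaLimiter` and the plan keys in `QUOTAS` are hypothetical names; the numbers are copied from the lists above. The rolling 24-hour window is an assumption, since the service may instead reset daily quotas on a fixed schedule.

```python
import time
from collections import deque

# Limits copied from the quota lists above; the plan keys are illustrative names.
QUOTAS = {
    "free": {"per_minute": 10, "per_day": 50},
    "enterprise": {"per_minute": 15, "per_day": 150},
}


class QuotaLimiter:
    """Client-side guard for per-minute and per-day request limits."""

    def __init__(self, per_minute, per_day, clock=time.monotonic):
        self.per_minute = per_minute
        self.per_day = per_day
        self.clock = clock      # injectable so the limiter can be tested
        self.stamps = deque()   # timestamps of requests in the last 24 hours

    def try_acquire(self):
        """Record a request and return True if both limits allow it."""
        now = self.clock()
        # Forget requests older than one day (assumed rolling window).
        while self.stamps and now - self.stamps[0] >= 86400:
            self.stamps.popleft()
        if len(self.stamps) >= self.per_day:
            return False
        in_last_minute = sum(1 for t in self.stamps if now - t < 60)
        if in_last_minute >= self.per_minute:
            return False
        self.stamps.append(now)
        return True
```

A caller would create `limiter = QuotaLimiter(**QUOTAS["free"])` and check `limiter.try_acquire()` before each API call, waiting or falling back to a cached result when it returns `False`.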

Practice Tips

Development Efficiency Improvement Tips

- Intelligent caching policy

```python
# Sample code: a simple result cache
import hashlib
import json


class ModelCache:
    def __init__(self):
        self.cache = {}  # maps cache key -> model result

    def get_cache_key(self, prompt, params):
        # Serialize params with sorted keys so equal dicts hash identically
        data = f"{prompt}_{json.dumps(params, sort_keys=True)}"
        return hashlib.md5(data.encode()).hexdigest()

    def get_or_compute(self, prompt, params, model_func):
        key = self.get_cache_key(prompt, params)
        if key in self.cache:
            return self.cache[key]
        # Cache miss: call the model and store the result
        result = model_func(prompt, params)
        self.cache[key] = result
        return result
```

- Batch processing optimization: Organize request batches wisely to avoid frequent API calls.

- Error handling best practices: Implement an intelligent retry mechanism to handle temporary failures.
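One common way to implement the retry advice is exponential backoff with jitter. The following is a minimal sketch under stated assumptions: `TransientError` is a hypothetical exception that your own client code would raise for retryable failures (for example a rate-limit or temporary server error), and the delay values are illustrative rather than recommended by GitHub.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical marker for failures that are worth retrying."""


def call_with_retry(func, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call func(), retrying on TransientError with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            # Delay doubles each attempt (1s, 2s, 4s, ...) plus up to 10% jitter
            # so that many clients do not retry at the same instant.
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay * 0.1))
```

Only errors explicitly marked as transient are retried; anything else propagates immediately, so a bad request or invalid token does not burn through the daily quota.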

Safety Recommendations

- Access token management

  - Rotate your Personal Access Token regularly

  - Apply the principle of least privilege

  - Avoid hard-coding tokens in code

- Data security

  - Desensitize data before sending it to a model

  - Implement data access audits

  - Check security logs regularly
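Two of these recommendations are easy to sketch in code: reading the token from the environment instead of hard-coding it, and masking sensitive fields before a prompt leaves your machine. The helper names `load_token` and `desensitize` are hypothetical, and the email pattern is a deliberately simple illustration, not a complete detector of personal data.

```python
import os
import re

# Simple illustrative pattern; real desensitization usually covers more field types.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def load_token(env_var="GITHUB_TOKEN"):
    """Read the access token from the environment instead of hard-coding it."""
    token = os.environ.get(env_var)
    if not token:
        raise RuntimeError(f"Set {env_var} before running")
    return token


def desensitize(text):
    """Mask email addresses before the text is sent to a model."""
    return EMAIL_RE.sub("[EMAIL]", text)
```

For example, `desensitize("Contact alice@example.com now")` yields `"Contact [EMAIL] now"`.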

Looking ahead

GitHub CEO Thomas Dohmke's vision is to help the world reach its goal of 1 billion developers in the next few years. Behind this ambition is a strong belief in the democratization of AI.

Start your AI Engineer journey today!

- Application preparation

  - Visit the official GitHub Models page

  - Submit a waiting list request

  - Prepare a GitHub account and personal access token

- Environment configuration

```javascript
import OpenAI from "openai";

// Read the token from the environment rather than hard-coding it
const token = process.env["GITHUB_TOKEN"];

const client = new OpenAI({
  baseURL: "https://models.inference.ai.azure.com",
  apiKey: token
});
```

- Start experimenting

  - Test different models in the playground

  - Get a project started quickly with the sample code

  - Expand application functionality step by step
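For readers working in Python, the same setup can be sketched with only the standard library. This is a minimal sketch, not an official client. It assumes the endpoint accepts OpenAI-style chat completions at `/chat/completions` with a bearer token, which is what the OpenAI SDK would send given the base URL in the JavaScript snippet above. `make_request` is a hypothetical helper, and any model name you pass is an identifier you should confirm on the model's page.

```python
import json
import urllib.request

# Base URL taken from the JavaScript snippet above.
BASE_URL = "https://models.inference.ai.azure.com"


def make_request(token, model, prompt):
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        BASE_URL + "/chat/completions",  # assumed path, as used by the OpenAI SDK
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` sends the request, which requires a valid token and network access. Keeping construction separate from sending makes it possible to inspect or log the request first.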

Concluding remarks

The evolution of AI technology is reshaping the future of software development. The launch of GitHub Models is more than just the birth of a new product; it is the beginning of a new era. It gives every developer the opportunity to participate in the AI revolution and change the world with innovative ideas and practices.

The question in front of you is no longer whether to start AI development, but how to make better use of GitHub Models to go farther on the path of an AI engineer. The opportunity is there. Are you ready?

As one senior developer said, "GitHub Models is not just a tool, it's a key to unlock the AI era. With it, every developer can become an AI engineer and every idea can become a reality."

Now it's time to start your AI development journey.

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
