Meta: Approaches to Adapting Large Language Models (Practical Application)

Original text:

https://ai.meta.com/blog/adapting-large-language-models-llms/

https://ai.meta.com/blog/when-to-fine-tune-llms-vs-other-techniques/

https://ai.meta.com/blog/how-to-fine-tune-llms-peft-dataset-curation/

This is the first in a three-part series of blog posts on adapting open source Large Language Models (LLMs). In this article, we will learn about the various methods available for adapting LLMs to domain data.

Introduction

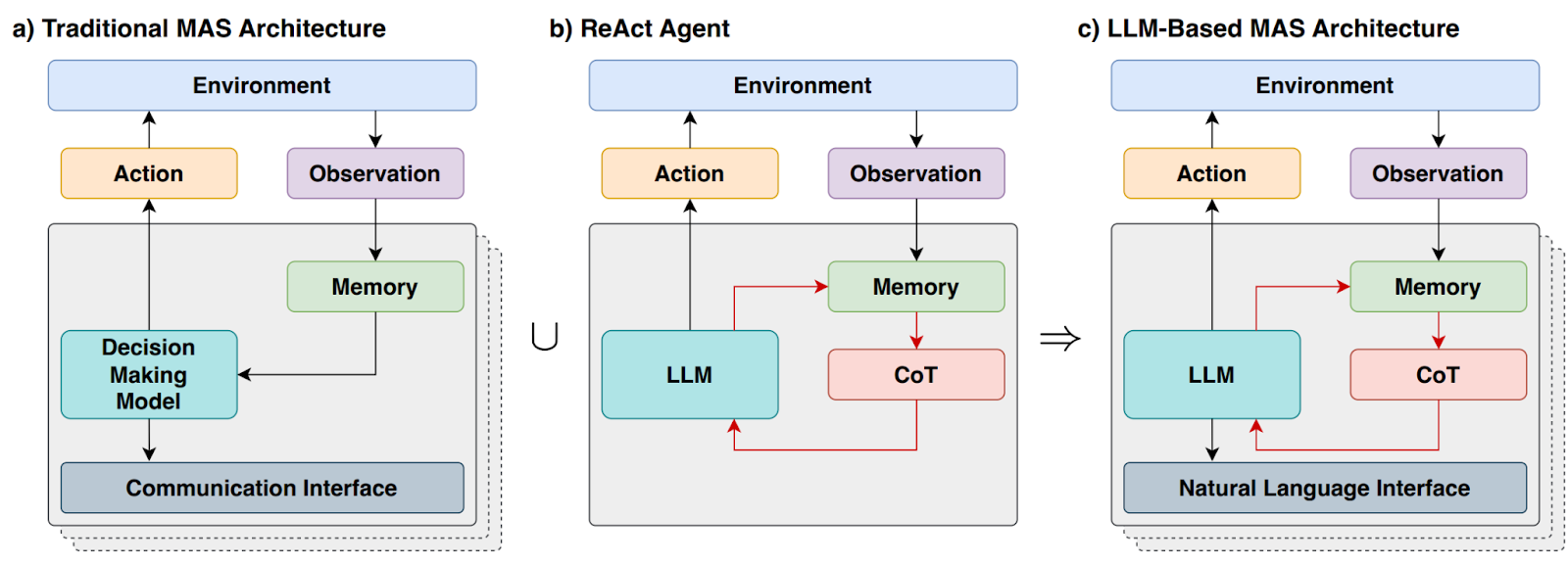

Large Language Models (LLMs) have shown strong performance across a wide range of language tasks and natural language processing (NLP) benchmarks. The number of product use cases built on these "general-purpose" models is growing. In this blog post, we provide guidance for small AI product teams looking to adapt and integrate LLMs into their projects. We start by clarifying the (often confusing) terminology around LLMs, then briefly compare the available adaptation methods, and finally recommend a step-by-step flowchart for choosing the right approach for your use case.

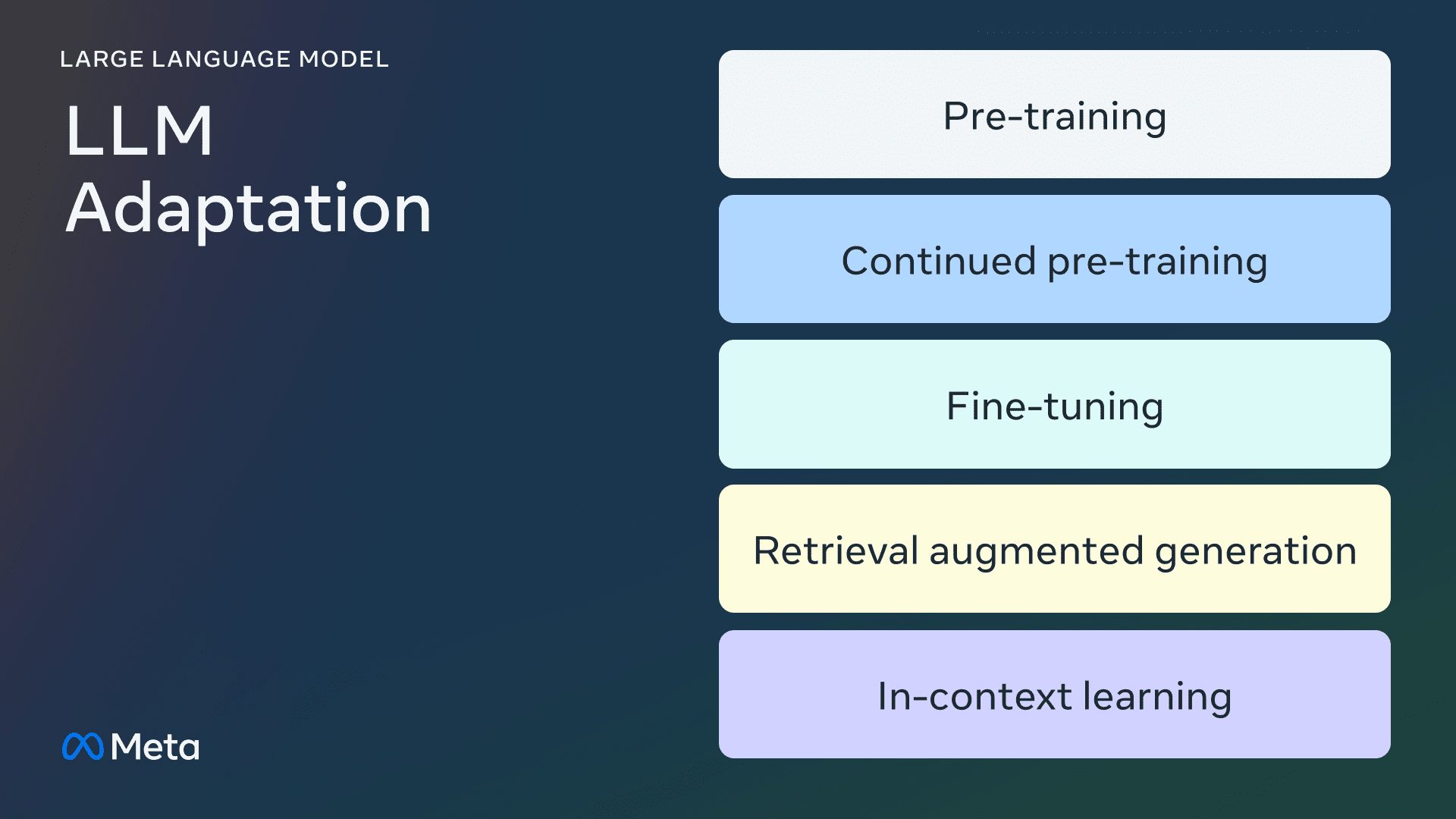

LLM adaptation methods

Pre-training

Pre-training is the process of training an LLM from scratch on trillions of data tokens. The model is trained with a self-supervised algorithm; most commonly, training works by autoregressively predicting the next token (a.k.a. causal language modeling). Pre-training typically requires thousands of GPU hours (10^5 to 10^7 [source1, source2]) spread across multiple GPUs. The output of pre-training is called a base model (or foundation model).
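To make "self-supervised" concrete: in causal language modeling the label at each position is simply the next token of the same text, so no human annotation is needed. The sketch below computes the average next-token cross-entropy from per-position scores; it is a plain-Python illustration under that assumption, and the function name `causal_lm_loss` is hypothetical rather than any library's API.

```python
import math


def causal_lm_loss(logits, token_ids):
    """Average next-token cross-entropy for one sequence.

    logits[t] holds unnormalized scores over the vocabulary produced after
    reading token_ids[: t + 1]; it is scored against token_ids[t + 1].
    """
    if len(logits) != len(token_ids) - 1:
        raise ValueError("need exactly one score vector per predicted token")
    total = 0.0
    for t, scores in enumerate(logits):
        target = token_ids[t + 1]  # the label is just the next token in the text
        # numerically stable log-softmax: subtract the max score first
        m = max(scores)
        log_z = m + math.log(sum(math.exp(s - m) for s in scores))
        total += log_z - scores[target]
    return total / len(logits)


# A model that assigns equal scores to a 4-token vocabulary has loss log(4).
print(causal_lm_loss([[0.0] * 4] * 3, [0, 1, 2, 3]))
```

Real training computes the same quantity over batched tensors, but the shift-by-one labeling is the essential idea.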

Continued pre-training

Continued pre-training (also known as second-stage pre-training) further trains the base model on new, unseen domain data, using the same self-supervised algorithm as the initial pre-training. It usually updates all model weights and mixes a portion of the original data in with the new data.
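The "mix in a portion of the original data" step can be sketched as building a shuffled training stream in which a chosen fraction of documents is replayed from the original corpus. This is a minimal sketch assuming document-level mixing and sampling with replacement; the function name and the `replay_fraction` parameter are hypothetical, and real pipelines usually mix at the token level with tuned ratios.

```python
import random


def mix_for_continued_pretraining(domain_docs, original_docs, replay_fraction, seed=0):
    """Return a shuffled list where about `replay_fraction` of the documents
    are replayed (sampled with replacement) from the original corpus."""
    if not 0.0 <= replay_fraction < 1.0:
        raise ValueError("replay_fraction must be in [0, 1)")
    rng = random.Random(seed)
    # Solve n_replay / (n_domain + n_replay) = replay_fraction for n_replay.
    n_replay = round(len(domain_docs) * replay_fraction / (1.0 - replay_fraction))
    replay = [rng.choice(original_docs) for _ in range(n_replay)]
    mixed = list(domain_docs) + replay
    rng.shuffle(mixed)
    return mixed


stream = mix_for_continued_pretraining(
    [f"finance doc {i}" for i in range(90)], ["web doc A", "web doc B"], 0.1
)
print(len(stream))
```

Replaying some original data is one common way to soften catastrophic forgetting during this stage.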

Fine-tuning

Fine-tuning is the process of adapting pre-trained language models using annotated datasets in a supervised manner or using reinforcement learning-based techniques. There are two main differences compared to pre-training:

- Supervised training on annotated datasets, which contain the correct labels/answers/preferences, instead of self-supervised training

- Far fewer tokens are needed (thousands or millions, rather than the billions or trillions needed for pre-training), and the main goal is to enhance capabilities such as instruction following, human alignment, and task performance

There are two dimensions to understanding the current fine-tuning landscape: the percentage of parameters changed, and the new capabilities added as a result of fine-tuning.

Percentage of parameters changed

There are two types of algorithms depending on the number of parameters changed:

- Full fine-tuning: As the name suggests, this changes all of the model's parameters. It includes traditional fine-tuning of smaller models such as XLMR and BERT (100 to 300 million parameters) as well as fine-tuning of large models such as Llama 2 and GPT3 (1 billion+ parameters).

- Parameter-Efficient Fine-Tuning (PEFT): Instead of fine-tuning all LLM weights, PEFT algorithms fine-tune only a small number of additional parameters or update a subset of the pre-trained parameters, typically 1 to 6% of the total parameters.
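As one concrete PEFT example, LoRA (a technique discussed later in this series) freezes a d_out x d_in weight and trains two small matrices of shapes d_out x r and r x d_in, which adds r * (d_out + d_in) parameters per adapted layer. The sketch below does that arithmetic for a hypothetical configuration (64 adapted 4096 x 4096 projections, rank 8, 7 billion total parameters); the actual share depends heavily on the rank and on which layers receive adapters.

```python
def lora_params(d_out, d_in, rank):
    """Parameters added by one LoRA adapter: B is d_out x rank, A is rank x d_in."""
    return rank * (d_out + d_in)


def trainable_fraction(layer_shapes, rank, total_params):
    """Share of the model that is trained when every listed layer gets an adapter."""
    added = sum(lora_params(d_out, d_in, rank) for d_out, d_in in layer_shapes)
    return added / total_params


# Hypothetical setup: 32 blocks x 2 projections of shape 4096 x 4096.
shapes = [(4096, 4096)] * 64
for r in (8, 64):
    print(r, f"{trainable_fraction(shapes, r, 7_000_000_000):.3%}")
```

Raising the rank or adapting more layers (for example, the MLP projections as well) moves the share upward.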

Capabilities added to the base model

The purpose of fine-tuning is to add capabilities to a pre-trained model, e.g., instruction following or human alignment. Llama 2 tuned for dialog is a typical example of a fine-tuned model that adds instruction-following and alignment capabilities.

Retrieval Augmented Generation (RAG)

Organizations can also adapt LLMs by adding a domain-specific knowledge base. RAG is essentially "search-powered LLM text generation." Introduced in 2020, RAG uses a dynamic prompt context that is retrieved based on the user's question and injected into the LLM prompt, steering the model to use the retrieved content instead of its pre-trained, and possibly outdated, knowledge. Chat LangChain is a popular example: a RAG-powered Q&A chatbot over the LangChain documentation.
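The retrieve-then-inject loop described above can be sketched in a few lines. This toy version assumes a lexical retriever that simply counts query-term occurrences; production systems typically use a search engine or an embedding index. All function names and the prompt wording are hypothetical.

```python
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(question, documents, k=2):
    """Rank documents by how often they contain the question's terms (toy retriever)."""
    q_terms = set(tokenize(question))

    def score(doc):
        counts = Counter(tokenize(doc))
        return sum(counts[t] for t in q_terms)

    return sorted(documents, key=score, reverse=True)[:k]


def build_rag_prompt(question, documents, k=2):
    """Inject the retrieved passages into the prompt ahead of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


docs = [
    "The refund window is 30 days.",
    "Our office is in Paris.",
    "Refunds go to the original card.",
]
print(build_rag_prompt("How long is the refund window?", docs, k=1))
```

Because the knowledge lives in `docs` rather than in the weights, updating the knowledge base changes the answers without any retraining.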

In-Context Learning (ICL)

With ICL, we adapt the LLM by placing prototypical examples in the prompt. Several studies have shown that "demonstration by example" is effective. The examples can contain different types of information:

- Input and output text only, i.e., few-shot learning

- Reasoning traces: add intermediate reasoning steps; see Chain-of-Thought (CoT) prompting

- Planning and reflection traces: add information that teaches the LLM to plan and reflect on its problem-solving strategy; see ReACT

There are various other strategies for modifying prompts; the Prompt Engineering Guide contains a comprehensive overview.
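The first two example types in the list above differ only in what each demonstration contains. The sketch assembles a few-shot prompt and, optionally, includes chain-of-thought style reasoning traces. The `Input:`/`Reasoning:`/`Output:` layout and the function name are hypothetical choices for illustration, not a required format.

```python
def build_few_shot_prompt(examples, query, with_reasoning=False):
    """examples: dicts with 'input', 'output' and optionally 'reasoning'."""
    parts = []
    for ex in examples:
        block = f"Input: {ex['input']}\n"
        if with_reasoning and "reasoning" in ex:
            # Chain-of-thought style: show intermediate steps before the answer.
            block += f"Reasoning: {ex['reasoning']}\n"
        block += f"Output: {ex['output']}\n"
        parts.append(block)
    # End with the new query and leave the slot the model should fill.
    parts.append(f"Input: {query}\n" + ("Reasoning:" if with_reasoning else "Output:"))
    return "\n".join(parts)


demos = [{"input": "2 + 3 * 4", "reasoning": "Multiply first: 3 * 4 = 12, then 2 + 12 = 14.", "output": "14"}]
print(build_few_shot_prompt(demos, "5 + 2 * 3", with_reasoning=True))
```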

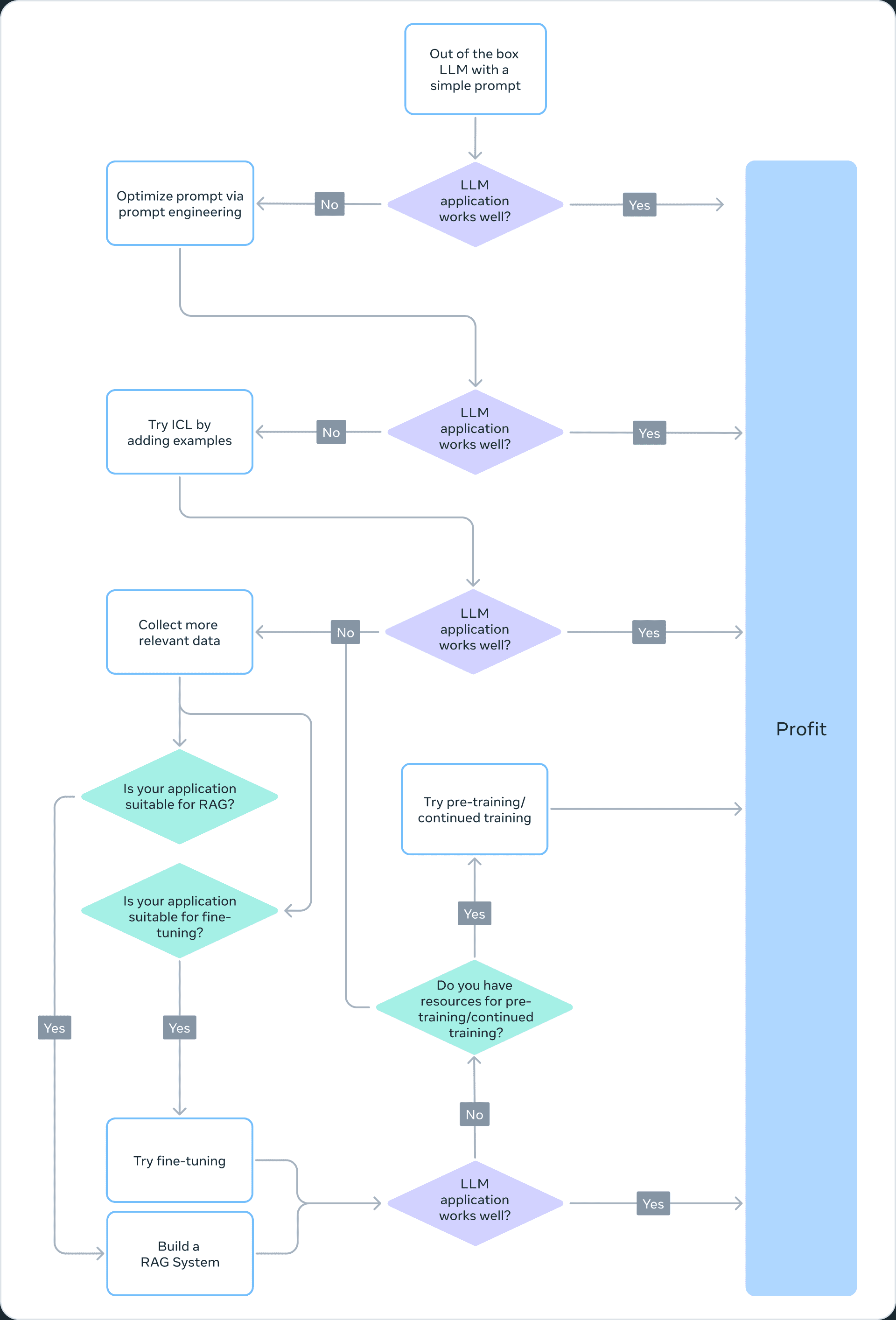

Choosing the right adaptation method

To decide which of the above methods suits a particular application, you should consider several factors: the model capabilities required for the task at hand, the training cost, the inference cost, the type of dataset, and so on. The flowchart above summarizes our recommendations to help you choose the right LLM adaptation method.

❌ Pre-training

Pre-training is an essential part of LLM training and uses variants of token prediction as the loss function. Its self-supervised nature allows training on large amounts of data; for example, Llama 2 was trained on 2 trillion tokens. This requires massive compute infrastructure: training Llama 2 70B took 1,720,320 GPU hours. Therefore, we do not recommend pre-training as a viable LLM adaptation method for teams with limited resources.

Since pre-training is computationally prohibitive, updating the weights of an already pre-trained model may be an effective way to adapt an LLM to a specific task. However, any approach that updates pre-trained weights is susceptible to catastrophic forgetting, the term for a model forgetting previously learned skills and knowledge. For example, this study shows that models fine-tuned in the medical domain lose performance on instruction following and common question-answering tasks. Other studies have also shown that general knowledge acquired during pre-training may be forgotten during subsequent training. For example, this study provides evidence of knowledge forgetting in LLMs from the perspectives of domain knowledge, reasoning, and reading comprehension.
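To put the 1,720,320 GPU hours in perspective, the sketch converts them to wall-clock time for a few hypothetical cluster sizes. It assumes perfect parallel scaling with no downtime, so these figures are lower bounds and do not describe how Llama 2 was actually trained.

```python
GPU_HOURS_LLAMA2_70B = 1_720_320  # figure quoted in the paragraph above


def wall_clock_days(gpu_hours, num_gpus):
    """Ideal wall-clock days: assumes perfect scaling and zero downtime."""
    return gpu_hours / num_gpus / 24


for n in (64, 1_000, 2_048):  # hypothetical cluster sizes
    print(f"{n:>5} GPUs: {wall_clock_days(GPU_HOURS_LLAMA2_70B, n):,.1f} days")
```

Even a 64-GPU cluster, already large for a small team, would need about three years of uninterrupted compute.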

❌ Continued pre-training

In light of catastrophic forgetting, recent developments suggest that continued pre-training (CPT) can further improve performance at a fraction of the computational cost of pre-training. CPT may be beneficial for tasks that require the LLM to acquire new transformation skills. For example, continued pre-training has reportedly been successful in adding multilingual capabilities.

However, CPT is still an expensive process that requires significant data and computational resources. For example, the Pythia suite went through a second stage of pre-training that produced FinPythia-6.9B, a model designed specifically for financial data; it underwent 18 days of CPT on a dataset of 24 billion tokens. In addition, CPT is also prone to catastrophic forgetting. Therefore, we do not recommend continued pre-training as a viable LLM adaptation method for teams with limited resources.

In conclusion, adapting LLMs with self-supervised algorithms and unlabeled datasets (as in pre-training and continued pre-training) is resource-intensive and costly, and we do not recommend it as a viable approach.

✅ Full Fine-Tuning and Parameter-Efficient Fine-Tuning (PEFT)

Fine-tuning with smaller labeled datasets is a more cost-effective approach than pre-training with unlabeled datasets. By adapting the pre-trained model to a specific task, the fine-tuned model has been shown to achieve state-of-the-art results across a wide range of applications and specializations (e.g., legal, medical, or financial).

Fine-tuning, especially parameter-efficient fine-tuning (PEFT), requires only a fraction of the computational resources needed for pre-training or continued pre-training, which makes it a viable LLM adaptation method for teams with limited resources. In Part 3 of this series, we delve into the details of fine-tuning, including full fine-tuning, PEFT, and a practical guide to doing it.

✅ Retrieval Augmented Generation (RAG)

RAG is another popular LLM adaptation method. If your application needs to pull information from a dynamic knowledge base (e.g., a Q&A bot), RAG may be a good solution. The complexity of a RAG-based system lies mainly in implementing the retrieval engine. The inference cost of such systems can be higher because the prompt includes the retrieved documents and most providers use per-token pricing. In Part 2 of this series, we discuss RAG more broadly and compare it with fine-tuning.
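The inference-cost point can be made concrete with a back-of-envelope estimate under per-token pricing. All prices and token counts below are hypothetical placeholders, not any provider's actual rates. The same arithmetic applies to ICL, where the extra prompt tokens are demonstrations instead of retrieved documents.

```python
def request_cost(question_tokens, retrieved_tokens, output_tokens,
                 price_in_per_1k, price_out_per_1k):
    """Cost of one request when input and output tokens are billed per 1,000."""
    prompt_tokens = question_tokens + retrieved_tokens
    return (prompt_tokens / 1000) * price_in_per_1k + (output_tokens / 1000) * price_out_per_1k


# Hypothetical prices: $0.001 per 1K input tokens, $0.002 per 1K output tokens.
plain = request_cost(50, 0, 200, 0.001, 0.002)
with_rag = request_cost(50, 3 * 500, 200, 0.001, 0.002)  # three 500-token passages
print(f"plain: ${plain:.5f}  with RAG: ${with_rag:.5f}  ratio: {with_rag / plain:.1f}x")
```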

✅ In-Context Learning (ICL)

This is the most cost-effective way to adapt an LLM: ICL requires no additional training data and no compute for training. However, as with RAG, inference cost and latency may increase because more tokens are processed at inference time.

Summary

Creating an LLM-based system is an iterative process. We recommend starting with a simple approach and gradually increasing the complexity until you reach your goal. The flowchart above outlines this iterative process and provides a solid foundation for your LLM adaptation strategy.

Acknowledgements

We would like to thank Suraj Subramanian and Varun Vontimitta for their constructive feedback on the organization and preparation of this blog post.

Part II: To fine-tune or not to fine-tune

This is the second in a series of blog posts on adapting open source Large Language Models (LLMs). In this post, we discuss the following question: "When should we fine-tune, and when should we consider other techniques?"

Introduction

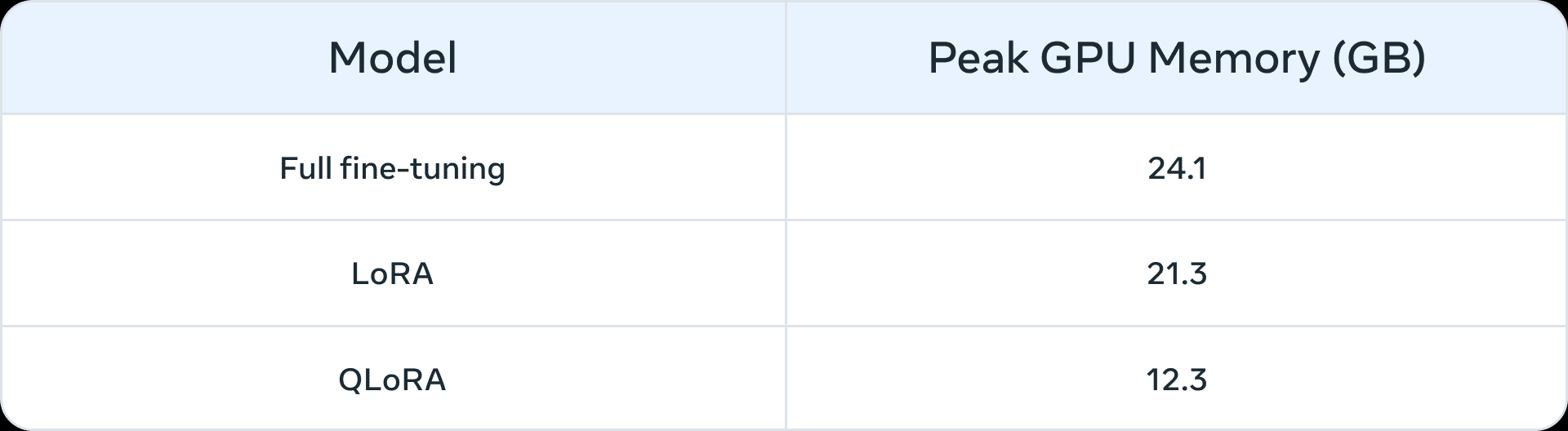

Prior to the rise of LLMs, fine-tuning was commonly used for smaller-scale models (100 million to 300 million parameters). State-of-the-art domain applications were built with supervised fine-tuning (SFT), i.e., further training a pre-trained model on annotated data from your own domain and downstream task. However, with the advent of larger models (> 1 billion parameters), the question of whether to fine-tune has become more nuanced. Most importantly, larger models require more resources and commercial-grade hardware to fine-tune. Table 1 below lists the peak GPU memory usage for fine-tuning Llama 2 7B under three scenarios: full fine-tuning, LoRA, and QLoRA. You may notice that algorithms such as QLoRA make it much easier to fine-tune large models with limited resources. Similar memory reductions from parameter-efficient fine-tuning (PEFT) or quantization have also been reported on Llama 1. Beyond compute, catastrophic forgetting (see Part I of this series) is a common pitfall of full-parameter fine-tuning. PEFT techniques aim to address these shortcomings by training only a small number of parameters.

Table 1: Memory (GB) on Llama 2 7B for different fine-tuning methods (source). QLoRA uses 4-bit NormalFloat quantization.
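Why the three scenarios in Table 1 differ so much can be seen from a common back-of-envelope rule: full fine-tuning with mixed-precision Adam keeps 16-bit weights and gradients plus 32-bit master weights and two optimizer moments (roughly 16 bytes per parameter), LoRA keeps the frozen base weights in 16 bits (2 bytes per parameter), and QLoRA stores them in 4 bits (0.5 bytes per parameter). These byte counts are assumptions, and the estimate ignores activations, adapter states, and framework overhead, so it is a rough lower bound rather than a reproduction of the measurements in Table 1.

```python
# Rule-of-thumb bytes per model parameter (assumptions, not measurements).
BYTES_PER_PARAM = {
    "full": 2 + 2 + 4 + 4 + 4,  # fp16 weights + fp16 grads + fp32 master + Adam m and v
    "lora": 2,                  # frozen 16-bit base weights; small adapters ignored
    "qlora": 0.5,               # frozen base weights quantized to 4 bits
}


def estimate_gb(num_params, method):
    """Rough lower bound in GB; excludes activations and framework overhead."""
    return num_params * BYTES_PER_PARAM[method] / 1e9


for method in ("full", "lora", "qlora"):
    print(f"{method:>5}: ~{estimate_gb(7e9, method):.1f} GB for a 7B-parameter model")
```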

Archetypes that may benefit from fine-tuning

We identified the following scenarios as common use cases that could benefit from fine-tuning:

- Tone, style, and format customization: A use case may require an LLM that reflects a specific persona or serves a specific audience. By fine-tuning the LLM on a custom dataset, we can shape the chatbot's responses to align more closely with the specific requirements or intended experience of its audience. We may also want to structure the output in a specific way, for example in JSON, YAML, or Markdown format.

- Improve accuracy and handle edge cases: Fine-tuning can correct hallucinations or errors that are hard to fix through prompt engineering and in-context learning. It can also strengthen a model's ability to perform new skills or tasks that are difficult to express in a prompt. This helps correct failures where the model cannot follow complex prompts and improves its reliability in producing the desired output. We provide two examples:

- Phi-2's accuracy on sentiment analysis of financial data improved from 34% to 85%.

- ChatGPT's accuracy on sentiment analysis of Reddit comments improved by 25 percentage points (from 48% to 73%), using only 100 examples.

Typically, when initial accuracy is low (< 50%), fine-tuning on a few hundred examples yields a significant improvement.

- Address underrepresented domains: Although LLMs are trained on large amounts of general data, they may not be well versed in the nuanced jargon, terminology, or idiosyncrasies of every niche domain. For diverse domains such as law, medicine, or finance, fine-tuning has been shown to improve accuracy on downstream tasks. We provide two examples:

- As this article points out, a patient's medical records contain highly sensitive data that is not usually found in the public domain. An LLM-based system for summarizing medical records therefore needs to be fine-tuned.

- For underrepresented languages, such as Indic languages, fine-tuning with PEFT techniques helps across tasks.

- Cost reduction: Fine-tuning makes it possible to distill skills from larger models, such as Llama 2 70B or GPT-4, into smaller models, such as Llama 2 7B, reducing cost and latency without compromising quality. In addition, fine-tuning reduces the need for long or very specific prompts (as used in prompt engineering), which saves tokens and further reduces cost. For example, this article shows how cost savings were achieved by distilling the more expensive GPT-4 evaluator into a fine-tuned GPT-3.5 evaluator.

- New Tasks/Capabilities: Often, new capabilities can be realized through fine-tuning. We provide three examples:

- Fine-tuning LLMs to make better use of the context from a given retriever, or to ignore it altogether

- Fine-tuning LLM judges to evaluate other LLMs on metrics such as truthfulness, compliance, or helpfulness

- Fine-tuning LLMs to extend the context window
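For the tone, style, and format archetype at the top of this list, the training data is simply many examples of the desired behavior. The sketch writes chat-style records whose assistant turn is always JSON with a fixed persona. The `messages`/`role`/`content` schema resembles the chat format accepted by several fine-tuning tools, but it is an assumption here; check the format your trainer expects. The system prompt and field names are hypothetical.

```python
import json

SYSTEM_PROMPT = "You are a concise, friendly support assistant. Always reply in JSON."


def make_record(user_message, answer, sentiment):
    """One chat-style training example whose target output is structured JSON."""
    target = json.dumps({"answer": answer, "sentiment": sentiment})
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user_message},
            {"role": "assistant", "content": target},
        ]
    }


def to_jsonl(records):
    """Serialize one JSON object per line, the usual layout for fine-tuning files."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records) + "\n"


print(to_jsonl([make_record("Where is my order?", "It ships tomorrow.", "neutral")]))
```

Keeping the same system prompt and output shape in every record is what teaches the model the consistent tone and format.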

Comparison with other techniques for domain adaptation

Fine-tuning vs. in-context (few-shot) learning

In-context learning (ICL) is a powerful way to improve the performance of LLM-based systems. Given its simplicity, you should try ICL before undertaking any fine-tuning. In addition, ICL experiments can help you evaluate whether fine-tuning would improve performance on downstream tasks. Common considerations when using ICL are:

- As the number of examples shown increases, so do inference cost and latency.

- With more and more examples, the LLM tends to ignore some of them. This means you may need a RAG-based system that finds the most relevant examples based on the input.

- LLMs may regurgitate the knowledge provided to them as examples. This concern also exists with fine-tuning.
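The first two considerations suggest selecting only the most relevant examples for each input and capping the prompt size. This is a minimal sketch assuming word-overlap (Jaccard) similarity and a word budget as a stand-in for a token budget; a real system would more likely use embedding similarity and the model's tokenizer. The function names are hypothetical.

```python
def jaccard(a, b):
    sa, sb = set(a.lower().split()), set(b.lower().split())
    return len(sa & sb) / len(sa | sb) if sa | sb else 0.0


def select_examples(query, pool, max_examples=3, max_words=200):
    """Pick the (input, output) pairs most similar to the query that fit the budget."""
    ranked = sorted(pool, key=lambda ex: jaccard(query, ex[0]), reverse=True)
    chosen, used = [], 0
    for inp, out in ranked:
        if len(chosen) == max_examples:
            break
        cost = len(inp.split()) + len(out.split())  # words as a proxy for tokens
        if used + cost > max_words:
            continue  # too long for the remaining budget; try a shorter example
        chosen.append((inp, out))
        used += cost
    return chosen


pool = [
    ("reset my password", "Go to Settings > Security."),
    ("track my order", "Use the tracking link in your email."),
    ("change my password please", "Open Settings."),
]
print(select_examples("how do I reset my password", pool, max_examples=2))
```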

Fine-tuning vs. RAG

The general consensus is that when an LLM's baseline performance is unsatisfactory, you might "start with RAG, evaluate its performance, and move to fine-tuning if it is found to be insufficient," or that "RAG may have advantages" over fine-tuning (source). However, we believe this paradigm is an oversimplification, because in many cases RAG is not an alternative to fine-tuning but rather a complementary approach. Depending on the nature of the problem, one or possibly both approaches should be tried. Using the framework from this article, here are some questions you might ask to determine whether fine-tuning or RAG (or possibly both) is right for your problem:

- Does your application need external knowledge? Fine-tuning is usually not suitable for injecting new knowledge.

- Does your application need a custom tone/behavior/vocabulary or style? For these types of requirements, fine-tuning is usually the right approach.

- How tolerant is your application to hallucinations? In applications where suppressing falsehoods and imaginative fabrications is critical, RAG systems provide built-in mechanisms to minimize hallucinations.

- How much labeled training data is available?

- How static or dynamic is the data? If the problem requires access to a dynamic corpus of data, fine-tuning may not be the right approach, because the LLM's knowledge can quickly become outdated.

- How much transparency/interpretability does your LLM application need? RAG can natively provide references, which are useful for interpreting LLM outputs.

- Cost and complexity: Does the team have expertise in building search systems, or prior experience with fine-tuning?

- How diverse are the tasks in your application?
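The questions above can be encoded as a rough first-pass checklist. This is only a hypothetical heuristic sketch: the field names, the `min_labeled=100` threshold, and the fallback to ICL as the cheapest starting point are illustrative assumptions, and they do not replace the internal experiments and error analysis recommended in the next paragraph.

```python
from dataclasses import dataclass


@dataclass
class AppProfile:
    needs_external_knowledge: bool
    needs_custom_style: bool
    low_hallucination_tolerance: bool
    data_is_dynamic: bool
    needs_citations: bool
    labeled_examples: int


def suggest_approaches(p: AppProfile, min_labeled: int = 100):
    """Map answers to the questions above onto candidate techniques (heuristic only)."""
    suggestions = set()
    if (p.needs_external_knowledge or p.data_is_dynamic
            or p.needs_citations or p.low_hallucination_tolerance):
        suggestions.add("rag")
    if p.needs_custom_style and p.labeled_examples >= min_labeled:
        suggestions.add("fine-tune")
    if not suggestions:
        suggestions.add("in-context learning")  # cheapest place to start
    return sorted(suggestions)


print(suggest_approaches(AppProfile(True, True, False, False, False, 500)))
```

Note that a custom style with too little labeled data falls back to ICL, reflecting the advice to curate data before fine-tuning.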

In most cases, a hybrid solution of fine-tuning and RAG will produce the best results, and the question then becomes the cost, time, and incremental gains of doing both. Refer to the questions above to decide whether you need RAG and/or fine-tuning, and run internal experiments with error analysis to understand the possible metric gains. Finally, exploring fine-tuning does require a robust data collection and data improvement strategy, which we recommend as a prerequisite to starting fine-tuning.

Acknowledgements

We would like to thank Suraj Subramanian and Varun Vontimitta for their constructive feedback on the organization and preparation of this blog post.

Part III: How to fine-tune: focus on effective datasets

This is the third post in a series of blogs about adapting open source Large Language Models (LLMs). In this post, we explore some rules of thumb for curating high-quality training datasets.

Introduction

Fine-tuning LLMs is a combination of art and science, and best practices in the field continue to emerge. In this blog post, we will focus on the design variables of fine-tuning and provide directional guidance on best practices for fine-tuning models in resource-constrained settings. We suggest the following information as a starting point for developing a strategy for fine-tuning experiments.

Full Fine-Tuning vs. Parameter-Efficient Fine-Tuning (PEFT)

Both full fine-tuning and PEFT have been shown, in academic research and in practical applications, to improve downstream performance when applied to new domains. Choosing between them ultimately depends on the available compute (in terms of GPU hours and GPU memory), performance on tasks other than the target downstream task (the learning-forgetting trade-off), and the cost of human annotation.

Full fine-tuning is more prone to two problems: model collapse and catastrophic forgetting. Model collapse occurs when the model's outputs converge to a limited set of outputs and the tails of the original content distribution disappear. Catastrophic forgetting, as discussed in Part I of this series, can cause the model to lose its capabilities. Some early empirical studies have shown that full fine-tuning is more prone to these problems than PEFT techniques, although more research is needed.

PEFT techniques can be designed to act as natural regularizers for fine-tuning. PEFT typically requires relatively few computational resources to train a downstream model and is easier to use in resource-constrained scenarios with limited dataset sizes. In some cases, full fine-tuning performs better on a specific task, but usually at the cost of forgetting some of the original model's capabilities. This paper presents an in-depth comparison of LoRA and full fine-tuning that examines this "learning-forgetting" trade-off between performance on the specific downstream task and performance on other tasks.

Given resource constraints, PEFT techniques may offer a better performance-improvement-to-cost ratio than full fine-tuning. If downstream performance is critical in a resource-constrained situation, full fine-tuning will be the most effective. In either case, it is critical to keep the following key principles in mind to create high-quality datasets.
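The regularizing effect mentioned above is easy to see in LoRA, the PEFT method compared in that paper: the pre-trained weight W stays frozen and the layer computes y = W x + (alpha / r) * B (A x), where only the low-rank factors A and B are trained. Because B starts at zero, the adapted layer initially behaves exactly like the original, and the learned update is confined to rank r. The plain-Python sketch below illustrates that forward pass; the class name and initialization scale are assumptions, and real implementations (for example, in the Hugging Face PEFT library) operate on tensors.

```python
import random


def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """y = W x + (alpha / r) * B (A x), with W frozen and only A, B trainable."""

    def __init__(self, weight, rank, alpha, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # frozen pre-trained weight, d_out x d_in
        self.scale = alpha / rank
        # A gets a small random init; B starts at zero, so the adapter is a no-op.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def trainable_parameters(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])

    def __call__(self, x):
        base = matvec(self.weight, x)
        delta = matvec(self.B, matvec(self.A, x))  # low-rank update path
        return [b + self.scale * d for b, d in zip(base, delta)]


layer = LoRALinear([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]], rank=1, alpha=2)
print(layer([1.0, 1.0]), layer.trainable_parameters())
```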

Dataset curation

Across fine-tuning experiments in the literature, the dataset is critical to reaping the benefits of fine-tuning. There is more nuance here than just "better quality and more examples," and you can invest wisely in dataset collection to improve performance in resource-constrained fine-tuning experiments.

Data quality/quantity

- Quality is critical: The general trend we see is that quality matters more than quantity, i.e., it is better to have a small set of high-quality data than a large set of low-quality data. The key principles of quality are consistent annotation; freedom from errors, mislabeled data, and noisy inputs/outputs; and a distribution that is representative of the population. When fine-tuning, several thousand carefully curated examples from the LIMA dataset performed better than the 50K machine-generated Alpaca dataset. The OpenAI fine-tuning documentation suggests that even a dataset of 50 to 100 examples can make a difference.

- Harder language tasks require more data: Relatively hard tasks, such as text generation and summarization, are harder to fine-tune and require more data than simple tasks such as classification and entity extraction. "Harder" here can mean several things: more tokens in the output, more complex human-like abilities required, or multiple correct answers.

- Effective, high-quality data collection: Because data collection is expensive, the following strategies are recommended to improve sample efficiency and reduce cost:

- Observe failure patterns: Look at examples where the previous ML system failed, and add examples that address those failure patterns.

- Human-in-the-loop: This is a cheaper way to scale data annotation. Use an LLM to automatically generate base responses, which human annotators can then annotate in less time.

Data diversity

Simply put, if you over-train the model on a particular type of response, it will be biased toward giving that response even when it is not the most appropriate answer. The rule of thumb here is to ensure, as far as possible, that the training data reflects how the model should behave in the real world.

- Duplication: Duplicate data has been found to cause model degradation in both fine-tuning and pre-training. Achieving diversity through deduplication usually improves performance metrics.

- Input diversity: Increase diversity by paraphrasing inputs. When fine-tuning SQLCoder2, the team rephrased the plain text accompanying the SQL queries to introduce syntactic and semantic diversity. Similarly, instruction backtranslation has been applied to human-written text by asking the LLM "What question might this be an answer to?" to generate a question-answer dataset.

- Dataset diversity: When fine-tuning for more general downstream tasks, such as multilingual adaptation, using diverse datasets has been shown to improve the trade-off between forgetting the model's original capabilities and learning new ones. Models fine-tuned for languages such as Hindi and Odia used rich language-specific datasets together with other instruction fine-tuning datasets, such as FLAN, Alpaca, and Dolly, to introduce diversity.

- Standardize outputs: Removing whitespace and other formatting superficialities from outputs has proven helpful. SQLCoder2 removes whitespace from the generated SQL so that the model can focus on learning important SQL concepts rather than superficialities such as spacing and indentation. If you want a specific tone in the answer ("The helpdesk chatbot is..."), add it to the dataset for every example.
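The deduplication and output-standardization tips above can be combined in one cleaning pass. This minimal sketch, with hypothetical function names, collapses whitespace in SQL targets and then drops exact duplicates by hashing the normalized (prompt, completion) pair. It assumes exact-match deduplication only; near-duplicate detection (for example, MinHash) is a common extension that is not shown. Collapsing whitespace inside SQL string literals can change a query's meaning, so a production version should handle literals separately.

```python
import hashlib
import re


def normalize_sql(sql):
    """Collapse every run of whitespace to one space (string literals not special-cased)."""
    return re.sub(r"\s+", " ", sql).strip()


def dedupe(examples):
    """Drop exact duplicates of (normalized prompt, normalized completion), keeping the first."""
    seen, unique = set(), []
    for prompt, completion in examples:
        clean = normalize_sql(completion)
        key = hashlib.sha256(
            (prompt.strip().lower() + "\x00" + clean).encode("utf-8")
        ).hexdigest()
        if key not in seen:
            seen.add(key)
            unique.append((prompt, clean))
    return unique


data = [
    ("Count users", "SELECT COUNT(*)\n  FROM users"),
    ("count users ", "SELECT COUNT(*) FROM users"),
    ("List users", "SELECT *\nFROM users"),
]
print(dedupe(data))
```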

LLM-based data pipeline

To curate high-quality, diverse datasets, data pipelines often use LLMs to reduce annotation costs. The following techniques have been observed in practice:

- Evaluation: Train a model on a high-quality dataset and use it to annotate your larger dataset, so that you can filter out the high-quality examples.

- Generation: Seed the LLM with high-quality examples and prompt it to generate similar high-quality examples. Best practices for synthetic datasets are beginning to take shape.

- Human-in-the-loop: Use LLMs to generate an initial set of outputs, and have humans improve the quality by editing them or selecting preferences.
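The "Evaluation" technique from the list above reduces to scoring each candidate and keeping only those above a threshold. In the sketch, the scorer is injected as a function so that any quality model can be plugged in. `toy_score` is a hypothetical stand-in, not a real quality model, and the 0.8 threshold is an arbitrary assumption to be tuned against human spot checks.

```python
from typing import Callable, Iterable, List, Tuple

Example = Tuple[str, str]  # (prompt, response)


def filter_with_scorer(examples: Iterable[Example],
                       score: Callable[[str, str], float],
                       threshold: float = 0.8) -> List[Example]:
    """Keep the (prompt, response) pairs that the quality model scores at or above threshold."""
    return [(p, r) for p, r in examples if score(p, r) >= threshold]


def toy_score(prompt: str, response: str) -> float:
    # Hypothetical stand-in for a model trained on a small high-quality set.
    if not response.strip():
        return 0.0
    return 0.9 if len(response.split()) >= 3 else 0.5


candidates = [("Q1", "A full helpful answer."), ("Q2", "ok"), ("Q3", "")]
print(filter_with_scorer(candidates, toy_score))
```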

Debugging your dataset

- Evaluate your dataset for bad outputs: If the model still performs poorly in certain areas, add training examples that directly show the model how to handle those areas correctly. If your model has grammatical, logical, or style issues, check your data for the same issues. For example, if the model now says "I will schedule this meeting for you" (when it should not), check whether existing examples teach the model to claim it can do new things it cannot actually do.

- Double-check the balance of positive and negative classes: If 60% of the assistant responses in the data say "I cannot answer that question," but at inference time only 5% of responses should say that, you will likely get too many refusals.

- Completeness and consistency: Make sure your training examples contain all the information needed for the response. If we want the model to compliment the user based on their personal traits, and the training examples include assistant compliments for traits that never appeared in the preceding conversation, the model may learn to hallucinate information. Make sure that all training examples are in the same format expected at inference time. Check the training examples for consistency: if multiple people created the training data, model performance may be limited by the level of inter-annotator agreement. For example, in a text extraction task, if annotators agree on only 70% of the extracted segments, the model is unlikely to do better.
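The class-balance and consistency checks above are easy to automate. This sketch measures the share of refusal-like responses, so you can compare it with the rate you expect at inference time (for example, 60% in the data versus 5% expected), and the raw percent agreement between two annotators. The marker phrases are a hypothetical heuristic, and percent agreement does not correct for chance agreement; Cohen's kappa is a common alternative that does.

```python
REFUSAL_MARKERS = ("i can't answer", "i cannot answer", "i'm unable to")  # hypothetical list


def refusal_rate(responses):
    """Share of assistant responses that look like refusals (marker-based heuristic)."""
    if not responses:
        return 0.0
    hits = sum(any(m in r.lower() for m in REFUSAL_MARKERS) for r in responses)
    return hits / len(responses)


def percent_agreement(labels_a, labels_b):
    """Fraction of items on which two annotators gave the same label."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    return sum(a == b for a, b in zip(labels_a, labels_b)) / len(labels_a)


responses = ["I cannot answer that question.", "Sure, here it is.", "Here you go."]
print(f"refusals in training data: {refusal_rate(responses):.0%}")
print(percent_agreement(list("ABCDEFGHIJ"), list("ABCDEFGXYZ")))
```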

Conclusion

Fine-tuning is a key part of LLM development that requires a delicate balance between art and science. The quality and curation of the dataset play a major role in the success of fine-tuning: small fine-tuned LLMs often outperform larger models on specific tasks. Once the decision to fine-tune has been made, the Llama fine-tuning guide provides a solid starting point. The proprietary nature of fine-tuning dataset mixes has hindered the sharing of best practices and open source progress. As the field continues to evolve, we anticipate that general best practices will emerge while the creativity and adaptability of fine-tuning are preserved.

Acknowledgements

We would like to thank Suraj Subramanian and Varun Vontimitta for their constructive feedback on the organization and preparation of this blog post.

© Copyright notice

Article copyright: AI Sharing Circle. All rights reserved; please do not reproduce without permission.