MCP Configuration and Hands-On Tutorial: Connecting AI to Common Applications

Recently, MCP (Model Context Protocol) has attracted a lot of attention among tech enthusiasts and developers. This technology aims to simplify the way large language models (LLMs) interact with a variety of external tools and services, and it promises to reshape how we use AI to process information and complete tasks. However, as with many emerging technologies, configuring MCP still involves a learning curve for non-expert users.

In this article, we take an in-depth look at the basic concepts of MCP, provide a thorough configuration guide, and demonstrate through a series of practical examples how to use MCP to connect AI models with commonly used applications (e.g., note-taking, search, and design tools), to help users understand and apply this promising technology more smoothly. The article focuses on operations in the general-purpose chat client Chatwise and also covers the configuration steps in the AI programming IDE Windsurf.

Understanding the core value of MCP

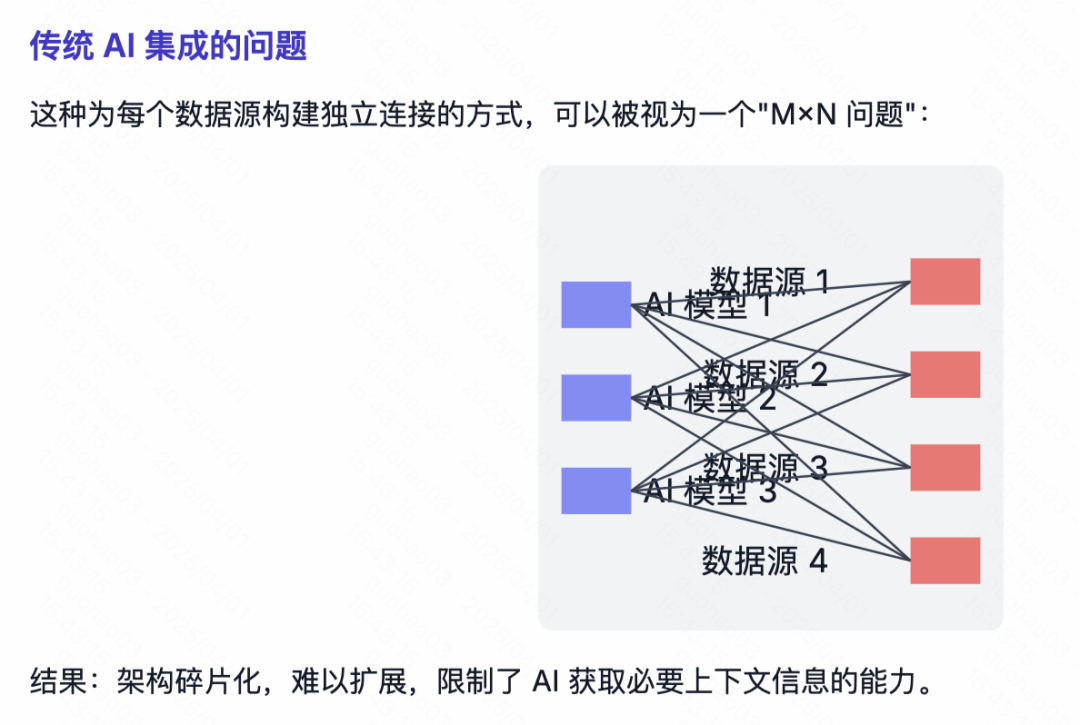

Before diving into configuration details, it helps to understand the problem MCP is trying to solve and its core value. In the past, getting an LLM to use specific tools (e.g., accessing email, querying databases, controlling software) often required separate adaptation work for each model and each tool. Because different tools' APIs (Application Programming Interfaces) use different data formats, integration was complex and time-consuming, and new capabilities were added relatively slowly.

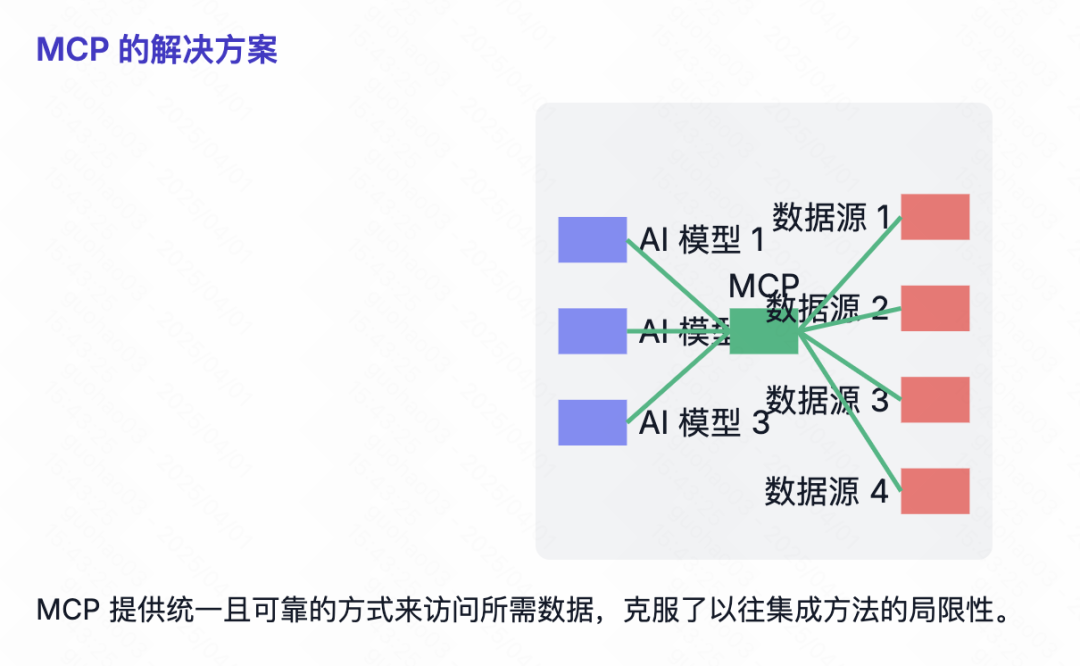

The MCP protocol aims to change this by establishing a unified standard for data exchange. It defines how applications provide an LLM with contextual information and descriptions of the available tools. In principle, any LLM or AI application that is compatible with MCP can interact with any tool that supports MCP.

This standardization reduces the otherwise complex bi-directional adaptation effort (the model must be adapted to each tool, and each tool must consider adapting to different models) to a uni-directional one (mainly the tool or application side following the MCP specification). More importantly, for existing applications that already offer a public API, third-party developers can build an MCP wrapper layer on top of that API, making the application compatible with the MCP ecosystem without waiting for official native support.
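A rough count shows why this matters. Assuming, as a simplification, that without a shared protocol each of M models (or AI applications) needs its own adapter for each of N tools, while with MCP each side implements the protocol once, the number of integrations compares as follows:

```latex
\begin{aligned}
\text{point-to-point adapters} &= M \times N \\
\text{MCP implementations} &= M + N \\
M = 3,\ N = 10 &:\quad 30 \text{ adapters vs. } 13 \text{ implementations}
\end{aligned}
```

The gap widens as either side grows, which is the practical payoff of the uni-directional adaptation described above.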

Refer to the concept diagram, which illustrates how MCP acts as an intermediate layer that unifies communication between LLMs and diverse tools.

Preparation before configuration

Before configuring MCP services, users need to understand and prepare some basic environment. Note that different operating systems (especially Windows and macOS) can differ in environment and network settings, which may add complexity. Users without programming experience may need to seek additional technical support if they run into problems they cannot solve during configuration.

MCP currently has two main modes of operation:

- Stdio (Standard Input/Output) mode: Mainly used for local services. In this mode, the LLM interacts with locally running programs or scripts via the standard input and output streams. It suits scenarios such as manipulating local files or controlling local software that has no online API (e.g., the 3D modeling software Blender).
- SSE (Server-Sent Events) mode: Mainly used for remote services. When the tool itself provides an online API (e.g., when accessing cloud services such as Google Mail and Calendar), SSE mode is usually used, and the LLM communicates with the remote server via a standard HTTP event stream.

Configuring SSE mode is usually more straightforward: you typically only need to provide a service link (URL), so this article does not focus on it. Most MCP services currently circulating in the community use Stdio mode, whose configuration is relatively more complex and requires some preparation.
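To make "standard input and output streams" concrete: per the MCP specification, a Stdio-mode client launches the server as a child process and exchanges JSON-RPC 2.0 messages with it, one message per line. The Python sketch below only builds and frames the first messages a client sends (initialize, the initialized notification, and a tools/list request). The protocol version string and client name are assumptions, and no server is started.

```python
import json

# Sketch of MCP's Stdio framing: each JSON-RPC 2.0 message is serialized as
# one line of UTF-8 text and written to the server process's stdin; replies
# arrive the same way on its stdout. No server is started here.

PROTOCOL_VERSION = "2024-11-05"  # assumption: one published MCP revision


def frame(message: dict) -> str:
    """Serialize one message as a single newline-terminated line."""
    # json.dumps escapes any newline inside strings, so the body has none.
    return json.dumps(message, ensure_ascii=False, separators=(",", ":")) + "\n"


def opening_messages(client_name: str = "demo-client") -> list[str]:
    """Frame the first messages of a session, in the order a client sends them."""
    initialize = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "initialize",
        "params": {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {},
            "clientInfo": {"name": client_name, "version": "0.1.0"},
        },
    }
    # In a real session the client waits for the initialize reply before
    # sending the next two messages. Notifications have no "id" (no reply).
    initialized = {"jsonrpc": "2.0", "method": "notifications/initialized"}
    list_tools = {"jsonrpc": "2.0", "id": 2, "method": "tools/list"}
    return [frame(m) for m in (initialize, initialized, list_tools)]


if __name__ == "__main__":
    for line in opening_messages():
        print(line, end="")
```

This line-per-message design is why a Stdio server must not print stray text to stdout: the client would try to parse it as a message.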

Configuring Stdio-mode MCP services usually relies on specific command-line tools to start and manage the MCP service process. Common startup commands include uvx and npx.

- Install uv (provides the uvx command): uv is a Python package installer and resolver developed by Astral. It is claimed to be extremely fast, and some MCP tools use it to run.
  - Windows users: Press the Win key, search for "PowerShell", right-click it and select "Run as administrator", then paste and execute the following command. Restarting your computer afterward is recommended.

    powershell -ExecutionPolicy ByPass -c "irm https://astral.sh/uv/install.ps1 | iex"

  - macOS users: Open Launchpad, search for and launch the Terminal application, then paste and execute the following command.

    curl -LsSf https://astral.sh/uv/install.sh | sh

- Install Node.js (provides the npx command): npx is the Node.js package runner, which lets users run commands from npm packages without installing those packages globally. Visit the official Node.js website (https://nodejs.org/), download the LTS (Long Term Support) version for your operating system, and follow the standard procedure to complete the installation.

Once you have completed the environment preparation above, you can start obtaining and configuring specific MCP services.
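Before moving on, it can help to confirm that the launchers are actually reachable. This Python sketch uses shutil.which to look up uvx and npx on the current PATH. It checks only the environment of the terminal you run it in, which may differ from what a GUI client such as Chatwise or Windsurf sees.

```python
import shutil

# Launchers that Stdio-mode MCP configurations commonly use (see the steps above).
REQUIRED_LAUNCHERS = ("uvx", "npx")


def missing_launchers(names=REQUIRED_LAUNCHERS) -> list[str]:
    """Return the launchers that cannot be found on the current PATH."""
    # shutil.which also honours PATHEXT on Windows, so npx.cmd is found as "npx".
    return [name for name in names if shutil.which(name) is None]


if __name__ == "__main__":
    missing = missing_launchers()
    if missing:
        print("Not found on PATH:", ", ".join(missing))
        print("Install them, open a new terminal (or restart), and try again.")
    else:
        for name in REQUIRED_LAUNCHERS:
            print(f"{name}: {shutil.which(name)}")
```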

Getting MCP Service Configuration Information

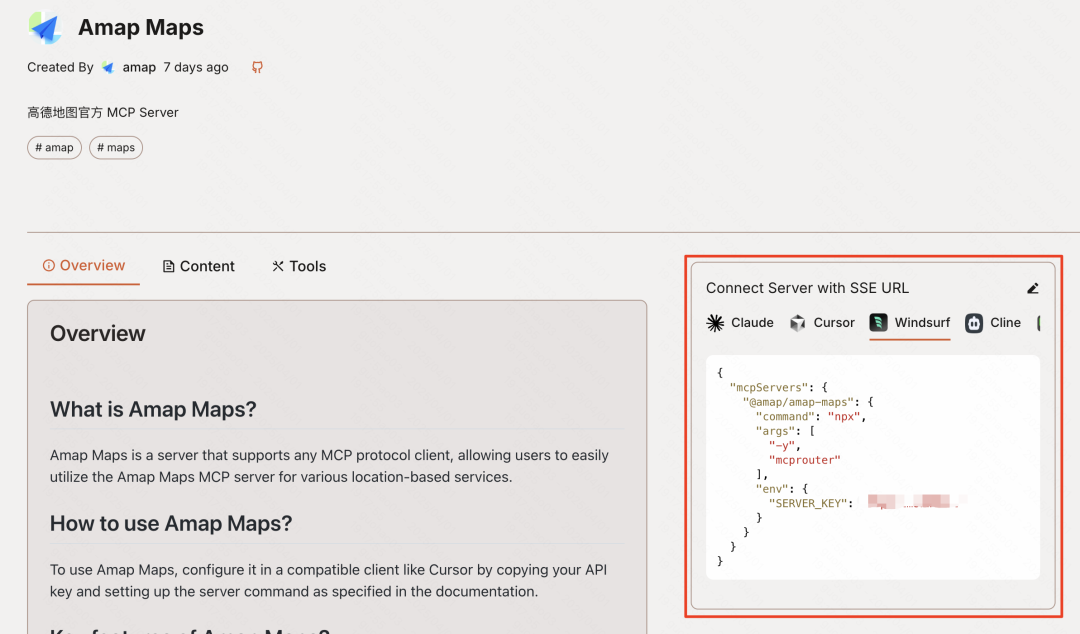

As MCP grows in popularity, a number of aggregator sites for MCP services have emerged, such as MCP.so and Smithery.ai (https://smithery.ai/). These platforms collect and display a variety of MCP services provided by the community or by developers.

These aggregator sites are generally used in a similar way: users browse or search the site for the MCP service they want. For services that require an API key or other credentials, the site usually explains how to obtain them in its introduction section (the cases below also include access guidelines for the APIs of some common services). After the user enters the necessary API key or parameters, the site automatically generates JSON configuration code or corresponding command-line arguments for importing into different clients (such as Windsurf or Chatwise).
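Generated configurations often still contain template values such as YOUR_API_KEY that must be replaced by hand. As a hypothetical helper, the Python sketch below walks a parsed configuration and reports every string that still contains a placeholder. It assumes placeholders start with "YOUR_", the naming used throughout this article; other sites may use different conventions.

```python
import json

# Assumption: placeholders follow the "YOUR_..." naming used in this article.
PLACEHOLDER_PREFIX = "YOUR_"


def find_placeholders(node, path="$"):
    """Yield (json_path, value) for every string that still contains a placeholder."""
    if isinstance(node, dict):
        for key, value in node.items():
            yield from find_placeholders(value, f"{path}.{key}")
    elif isinstance(node, list):
        for index, value in enumerate(node):
            yield from find_placeholders(value, f"{path}[{index}]")
    elif isinstance(node, str) and PLACEHOLDER_PREFIX in node:
        yield path, node


if __name__ == "__main__":
    config = json.loads("""
    {"mcpServers": {"amap-maps": {
        "command": "npx",
        "args": ["-y", "@amap/amap-maps-mcp-server"],
        "env": {"AMAP_MAPS_API_KEY": "YOUR_API_KEY"}}}}
    """)
    for where, value in find_placeholders(config):
        print(f"Replace {value!r} at {where}")
```

Because it also inspects args, it catches keys embedded in the command line, as in the Figma case below.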

The next two sections describe how to add and configure MCP services in the Windsurf and Chatwise clients respectively. Follow the guide that matches the client you use.

Configuring MCP Services: Windsurf Guide

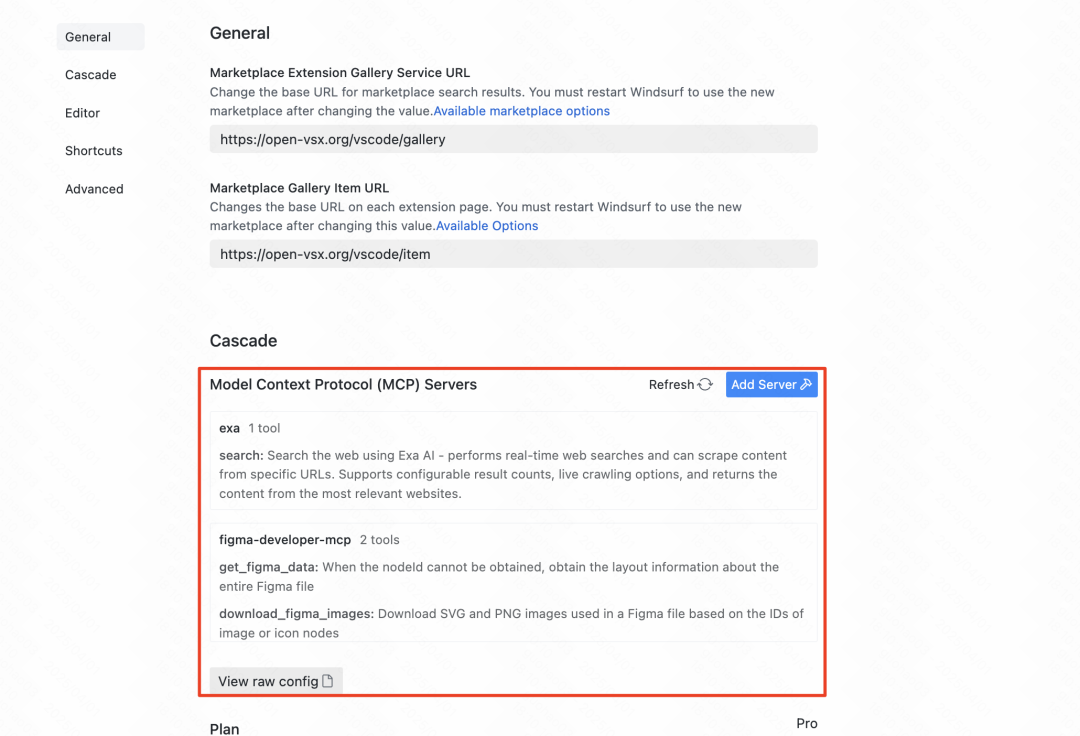

For users of the AI programming IDE Windsurf, configuring MCP services is relatively integrated.

- Open Settings: Click on the user's avatar in the upper right corner of the interface and select "Windsurf Settings".

- Find MCP Configuration: Find the "MCP Servers" option in the Settings menu.

- Add Service: Click the "Add Server" button on the right.

One convenience of Windsurf is that it includes some commonly used MCP services that users can enable directly. In addition, because Windsurf is a popular IDE, many MCP aggregator sites provide JSON configuration code adapted directly for Windsurf.

If you need to add an MCP service that is not in the built-in list, click the "Add custom server" button.

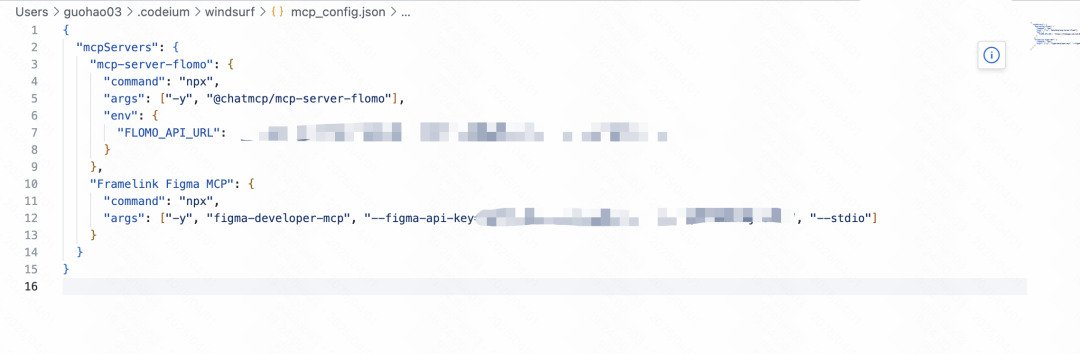

After you click it, Windsurf opens a JSON configuration file (mcp_servers.json). Copy the MCP service's JSON configuration code from the aggregator site and paste it into this file.

Note: When adding multiple custom servers, place each new server's JSON object (usually keyed by the service ID and containing attributes such as command, args and env) inside the mcpServers object, alongside any existing server objects, and make sure the syntax of the entire JSON file is correct (e.g., objects are separated by commas). The basic structure of mcp_servers.json is as follows, using two servers from later in this article as examples:

    {
      "mcpServers": {
        "amap-maps": {
          "command": "npx",
          "args": ["-y", "@amap/amap-maps-mcp-server"],
          "env": {
            "AMAP_MAPS_API_KEY": "YOUR_API_KEY"
          }
        },
        "time": {
          "command": "uvx",
          "args": ["mcp-server-time", "--local-timezone", "Asia/Shanghai"]
        }
      }
    }
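If you prefer to script this edit rather than paste by hand, the following Python sketch loads the file, adds one entry inside mcpServers next to the existing ones, and writes it back. It assumes the file is plain JSON (no comments) and that you pass the path of the file Windsurf opened; it also normalizes the file's formatting. The demo values come from the Amap and Time cases in this article and run against a temporary file.

```python
import json
import tempfile
from pathlib import Path


def add_server(config_path: Path, server_id: str, server: dict) -> None:
    """Insert or replace one entry under "mcpServers", keeping all others."""
    text = config_path.read_text(encoding="utf-8") if config_path.exists() else ""
    # An empty or missing file starts as an empty object; invalid JSON raises
    # json.JSONDecodeError instead of being silently overwritten.
    config = json.loads(text) if text.strip() else {}
    servers = config.setdefault("mcpServers", {})
    if server_id in servers:
        print(f"Note: replacing existing entry {server_id!r}")
    servers[server_id] = server
    # json.dumps inserts the separating commas itself, so the output is valid JSON.
    config_path.write_text(
        json.dumps(config, indent=2, ensure_ascii=False) + "\n", encoding="utf-8"
    )


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as folder:
        path = Path(folder) / "mcp_servers.json"
        path.write_text(
            '{"mcpServers": {"time": {"command": "uvx", "args": ["mcp-server-time"]}}}',
            encoding="utf-8",
        )
        add_server(path, "amap-maps", {
            "command": "npx",
            "args": ["-y", "@amap/amap-maps-mcp-server"],
            "env": {"AMAP_MAPS_API_KEY": "YOUR_API_KEY"},
        })
        print(path.read_text(encoding="utf-8"))
```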

If you are not familiar with JSON syntax, you can copy the entire contents of the configuration file and ask an AI assistant (such as Claude or DeepSeek) to check it for syntax errors and fix them. Paste the fixed version back into the file and be sure to save your changes.
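Python's built-in json module can also locate a syntax error without an AI assistant: json.JSONDecodeError reports the line and column of the first problem. Strict JSON allows neither comments nor trailing commas, so a parser will flag those as well. A minimal sketch:

```python
import json
import sys


def check_json(text: str) -> str:
    """Return "OK", or a message pointing at the first syntax error."""
    try:
        json.loads(text)
    except json.JSONDecodeError as err:
        # lineno and colno are 1-based positions of the first problem found.
        return f"line {err.lineno}, column {err.colno}: {err.msg}"
    return "OK"


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8") as handle:
        print(check_json(handle.read()))
```

Run it as, for example, `python check_json.py mcp_servers.json` (the script name is hypothetical). A missing comma between two server objects is reported at the start of the second object.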

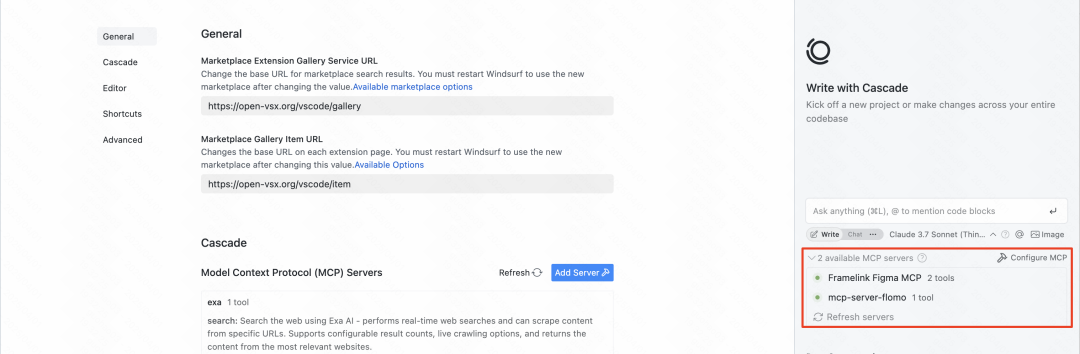

When configuration is complete, return to Windsurf's MCP Servers settings screen. If everything is configured correctly, the added MCP service appears in the list with a green status indicator. A red indicator means there is a configuration problem, and you need to check the JSON file or the related commands and environment variables.

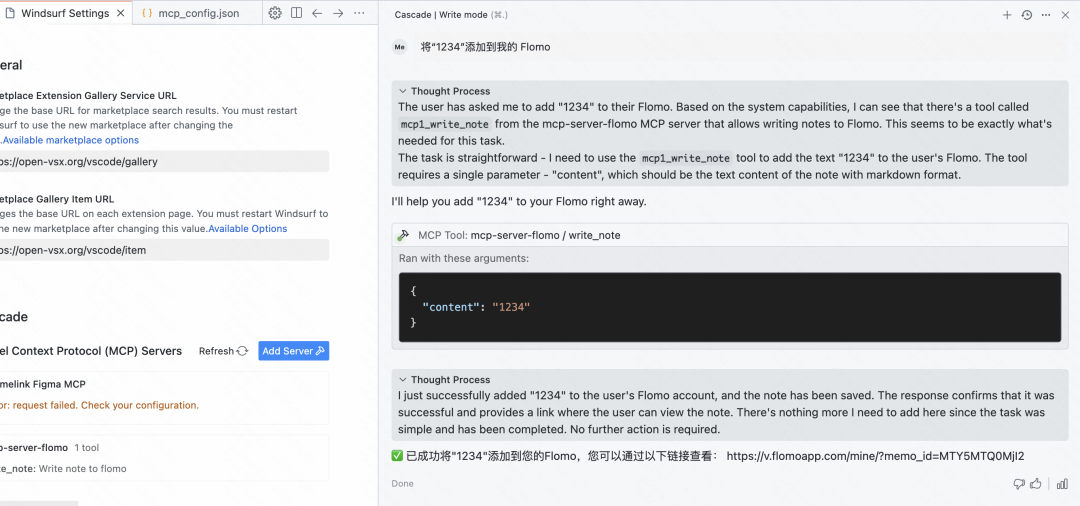

After an MCP service has been successfully added and enabled, users can make natural-language requests that require the tool directly in Windsurf's chat or code-editing interface. For example, the AI could be instructed to add a piece of content directly to the user's Flomo notes.

Configuring MCP Services: Chatwise Guide

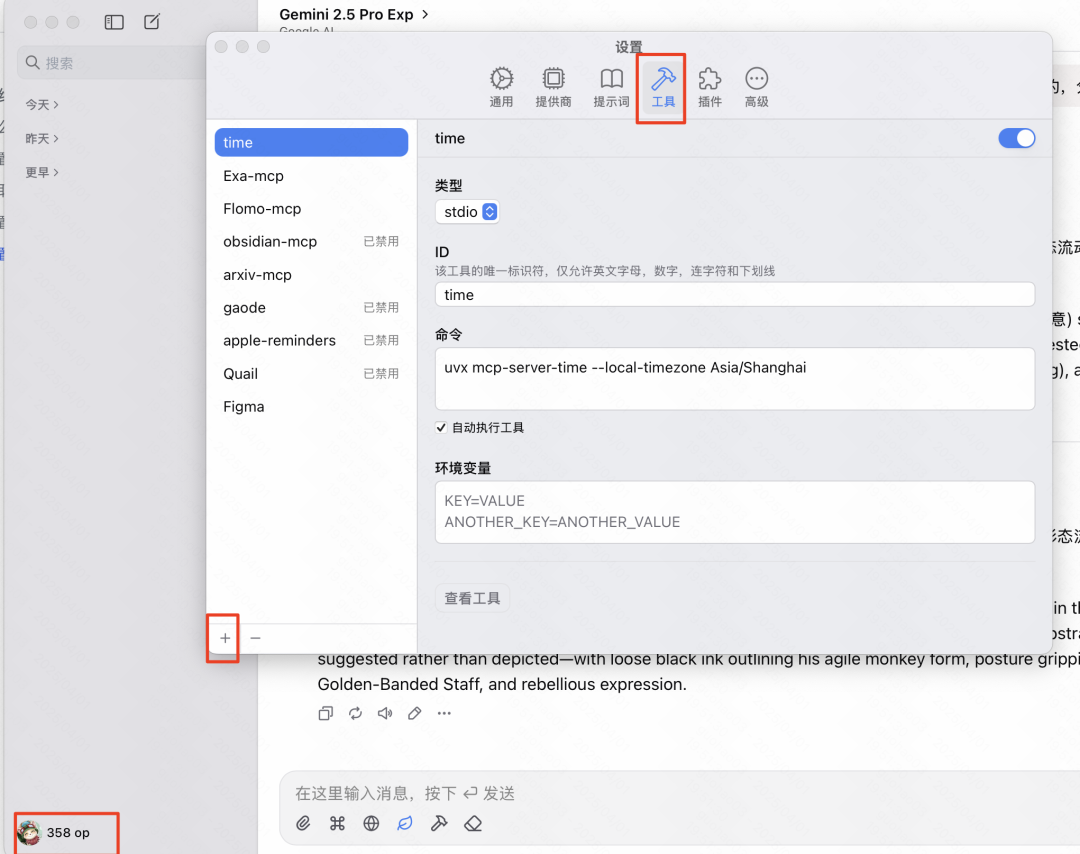

For the broader community of non-developer users, an AI chat client such as Chatwise may be a more commonly used tool. Chatwise's MCP configuration interface is relatively intuitive, but setting the command line and environment variables correctly is key.

Convenient JSON import:

Fortunately, recent Chatwise updates support importing JSON configuration directly from the clipboard, which greatly simplifies the process.

- In Chatwise, click the "+" icon in the lower left corner to add a tool.
- Select the "Import Json from Clipboard" option.
- If the clipboard contains valid JSON code copied from an MCP aggregator site or from a Windsurf configuration, Chatwise will attempt to parse it and fill in the configuration automatically.

Manually configure the process:

If JSON import is not possible, or if you need to make manual adjustments, follow the steps below:

- Open Settings: Click the user avatar in the lower left corner of Chatwise to open the settings menu.
- Find Tool Management: Find "Tools" or a similar management entry in Settings.
- Add New Tool: Click the "+" icon in the lower left corner of the interface to start adding a new MCP service.

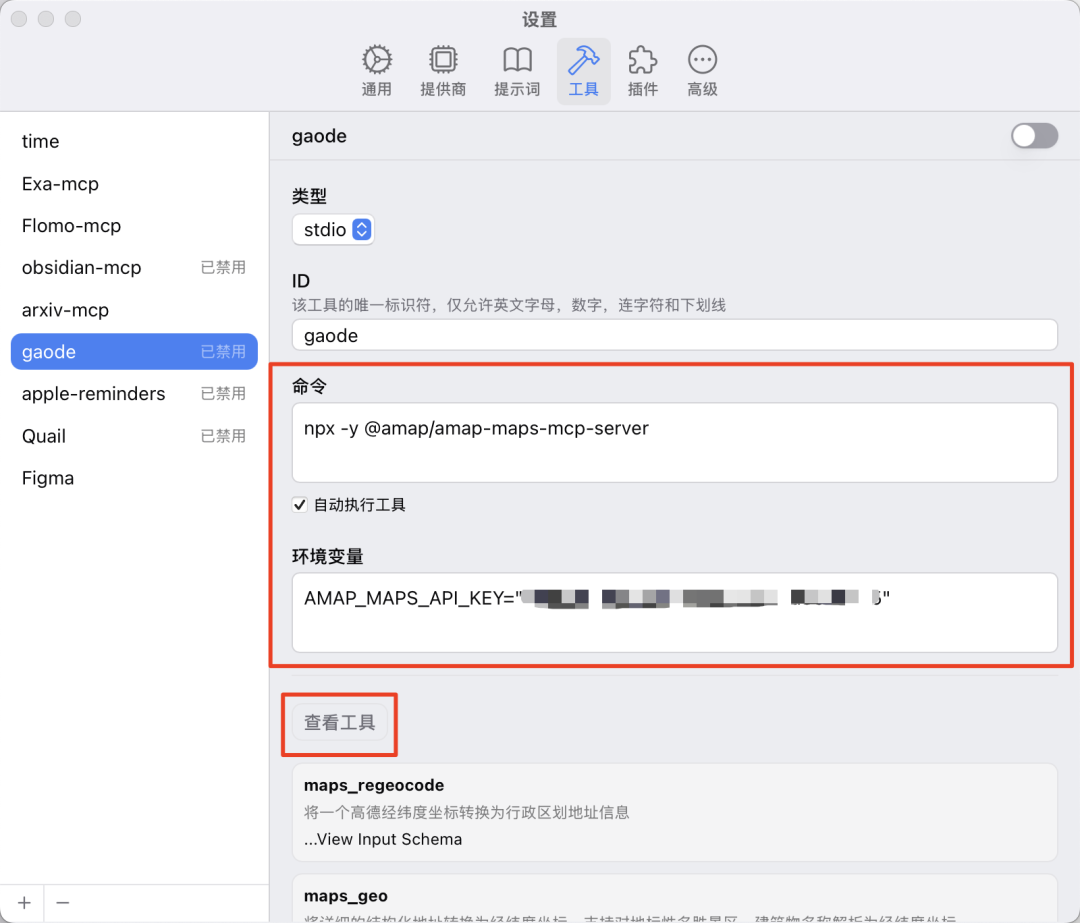

At this point, the following key information is usually required:

- Type: Generally select Stdio, unless you know clearly that it is an SSE service and have its URL. A configuration provided in JSON format usually corresponds to Stdio mode.
- ID: Set a unique, easily recognizable name for this MCP service (e.g., Figma, ExaSearch).
- Command: The complete command line required to start the MCP service process.
- Environment Variables: Some MCP services need API keys or other configuration parameters passed in via environment variables.

Getting the command and environment variables:

If you only have JSON configuration code, how do you convert it into the "command" and "environment variables" that Chatwise requires? One effective approach is to use the AI model itself: give the JSON configuration code to an LLM (e.g., Claude or DeepSeek) and instruct it: "Please convert this MCP service's JSON configuration into the 'command' string and list of 'environment variables' (in KEY=VALUE format, one per line) needed to add the tool manually in Chatwise."

For example, from the JSON configuration of the Amap (AutoNavi) Maps MCP, the AI can extract the following information:

- Command: npx -y @amap/amap-maps-mcp-server

- Environment variable: AMAP_MAPS_API_KEY=YOUR_API_KEY (where YOUR_API_KEY must be replaced with your own key)

Enter the command and environment variables provided by the AI into the corresponding input boxes in Chatwise.
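The conversion the AI performs here is mechanical, so it can also be sketched in a few lines of Python, assuming the usual mcpServers entry layout with command, args and env keys: the command string is command followed by args, and each env pair becomes a KEY=VALUE line. shlex.join quotes arguments POSIX-style with single quotes where needed (for example, a path containing spaces). The source does not confirm whether Chatwise accepts single quotes, so switch to double quotes if it does not.

```python
import json
import shlex


def to_chatwise_fields(server: dict) -> tuple[str, list[str]]:
    """Turn one mcpServers entry into a command string and KEY=VALUE lines."""
    argv = [server["command"], *server.get("args", [])]
    # shlex.join quotes only arguments that need it; Windows quoting rules differ.
    command = shlex.join(argv)
    env_lines = [f"{key}={value}" for key, value in server.get("env", {}).items()]
    return command, env_lines


if __name__ == "__main__":
    snippet = json.loads("""
    {"mcpServers": {"amap-maps": {
        "command": "npx",
        "args": ["-y", "@amap/amap-maps-mcp-server"],
        "env": {"AMAP_MAPS_API_KEY": "YOUR_API_KEY"}}}}
    """)
    for server_id, server in snippet["mcpServers"].items():
        command, env_lines = to_chatwise_fields(server)
        print("ID:", server_id)
        print("Command:", command)
        print("Environment variables:", *env_lines, sep="\n")
```

For the Amap snippet this yields the command npx -y @amap/amap-maps-mcp-server and the single line AMAP_MAPS_API_KEY=YOUR_API_KEY, matching the values above.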

Testing and Enabling:

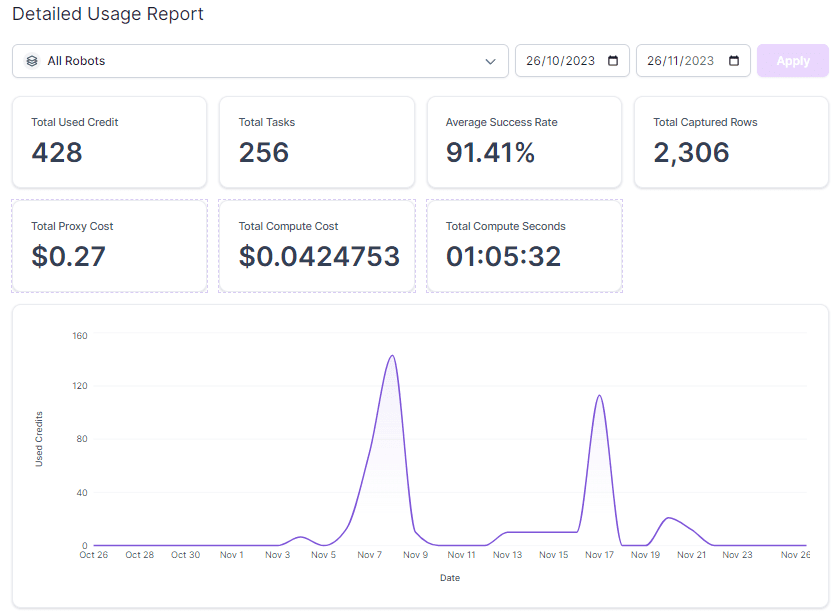

Once the configuration is complete, there is usually a "Test Tool" or "Debug" button. Click on it to verify that the configuration is correct.

- Success: If configured correctly, the system lists the specific tools provided by the MCP service (i.e., the functions it can perform).
- Failure: If there is a problem (such as a command error, an invalid API key, or a missing environment variable), the system displays an error message. Troubleshoot based on the message, or give it to the AI again and ask for a solution.
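The source does not describe what the "Test Tool" button does internally. Conceptually, an MCP client asks the server for its tools with a tools/list request and reads the JSON-RPC reply. This Python sketch interprets one such reply line: it returns the tool names on success and raises on a JSON-RPC error object. The tool name in the demo is illustrative, and -32601 is the standard JSON-RPC "Method not found" code. Problems such as a wrong command or a missing environment variable often surface earlier, as a process that exits or writes only to stderr, which this sketch does not cover.

```python
import json


def summarize_response(raw_line: str) -> list[str]:
    """Summarize one JSON-RPC reply line from an MCP server.

    Returns the tool names from a successful tools/list result, or raises
    RuntimeError carrying the server's error code and message.
    """
    message = json.loads(raw_line)
    if "error" in message:
        error = message["error"]
        raise RuntimeError(f"MCP error {error.get('code')}: {error.get('message')}")
    tools = message.get("result", {}).get("tools", [])
    return [tool["name"] for tool in tools]


if __name__ == "__main__":
    ok = ('{"jsonrpc":"2.0","id":2,"result":{"tools":'
          '[{"name":"get_current_time","inputSchema":{"type":"object"}}]}}')
    print(summarize_response(ok))
    failed = '{"jsonrpc":"2.0","id":2,"error":{"code":-32601,"message":"Method not found"}}'
    try:
        summarize_response(failed)
    except RuntimeError as exc:
        print(exc)
```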

Once debugging passes, save the configuration. In Chatwise's main chat interface, MCP services can usually be managed and enabled via a tool icon next to the input box (e.g., a hammer icon).

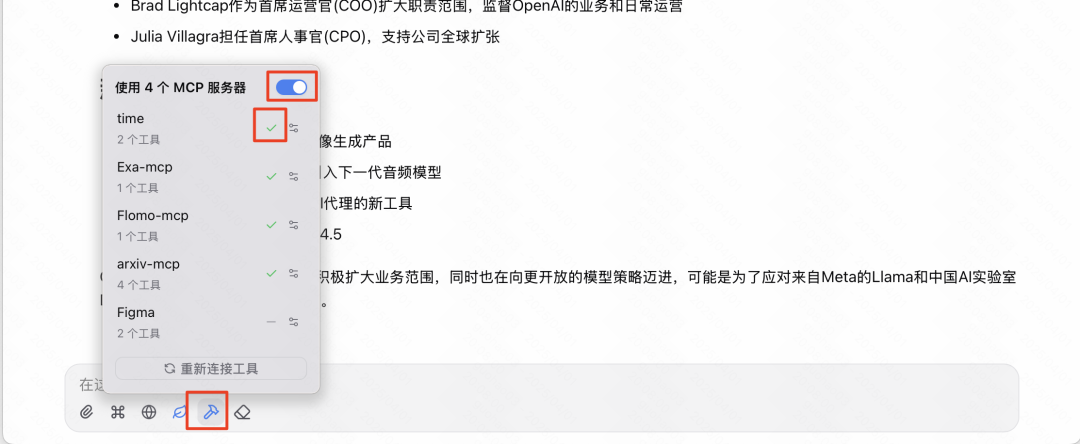

Start using MCP: Once a service is enabled (note: you may need to turn on the master switch and then individually check the services to activate), you can interact with the AI in natural language in the chat. When a request involves a function of an enabled MCP service, the AI automatically invokes the appropriate tool to obtain information or perform an action.

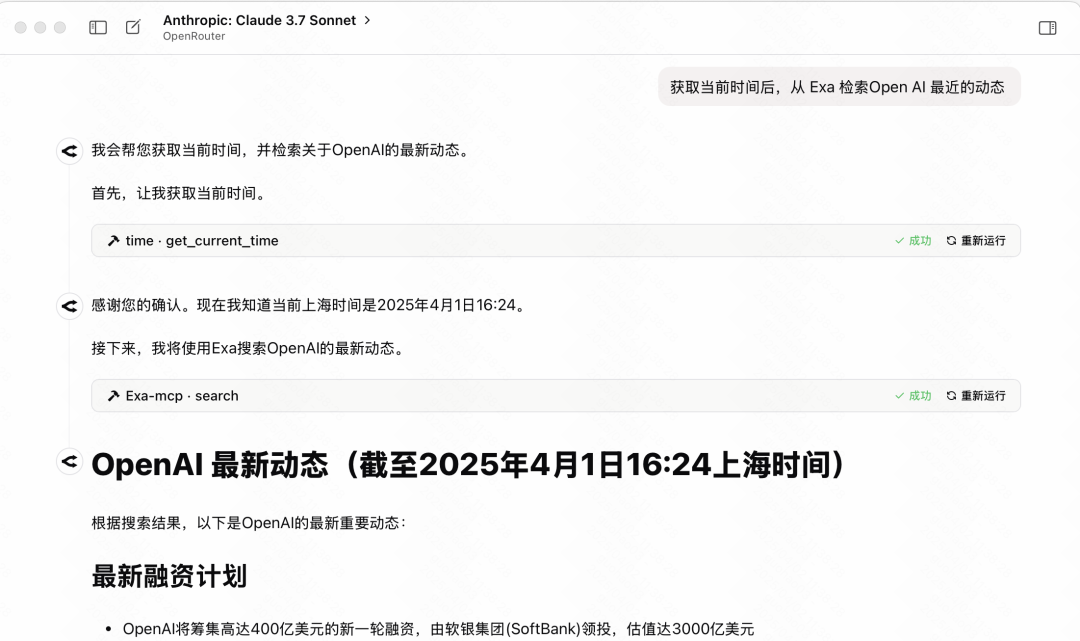

For example, if you ask a question that requires the current time and recent news, and the Time and Exa Search MCP services are configured, the model may first call the Time service to get the current date and then call the Exa service to search for related news.

For MCP services with no potential risk, you can consider checking the "Automatically execute tools" option, so that the AI executes tools directly when it determines they are needed, without manual confirmation from the user, which makes interaction more efficient.
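Under the hood, each step in that example is a separate tools/call request that the client sends on the model's behalf. The Python sketch below builds the two requests in order. The tool names (get_current_time, web_search) and their argument keys are assumptions for illustration, so check the tool list your servers actually report.

```python
import json
from itertools import count

# Request IDs must be unique within a session; a simple counter suffices here.
_ids = count(start=10)


def call_tool(name: str, arguments: dict) -> dict:
    """Build a JSON-RPC "tools/call" request as an MCP client would send it."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


if __name__ == "__main__":
    # Step 1: ask the Time server for the current date (hypothetical tool name).
    first = call_tool("get_current_time", {"timezone": "Asia/Shanghai"})
    # Step 2: after reading the date from the reply, search via Exa
    # (hypothetical tool name and arguments).
    second = call_tool("web_search", {"query": "recent OpenAI news"})
    for request in (first, second):
        print(json.dumps(request))
```

With "Automatically execute tools" off, the client would show each such request for confirmation before sending it.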

MCP Application Cases and Parameter References

Below are a few practical MCP service cases, including their sources, functional overviews, and the parameters required to configure them in Chatwise. These configurations have been tested and verified.

Case 1: Generating Web Code from Figma Designs

- MCP Service: Framelink Figma MCP Server (Source: https://mcp.so/server/Figma-Context-MCP/GLips)
- Functionality: Allows the AI to access the content of a specified Figma design and generate front-end web code (HTML/CSS/JS) based on it.
- Chatwise Parameters:
  - Type: Stdio
  - ID: FigmaMCP
  - Command: npx -y figma-developer-mcp --stdio --figma-api-key=YOUR_FIGMA_API_KEY
  - Environment variables: none (the API key is provided directly in the command)
  - Note: Replace YOUR_FIGMA_API_KEY with your own Figma API key.
- Get a Figma API token:

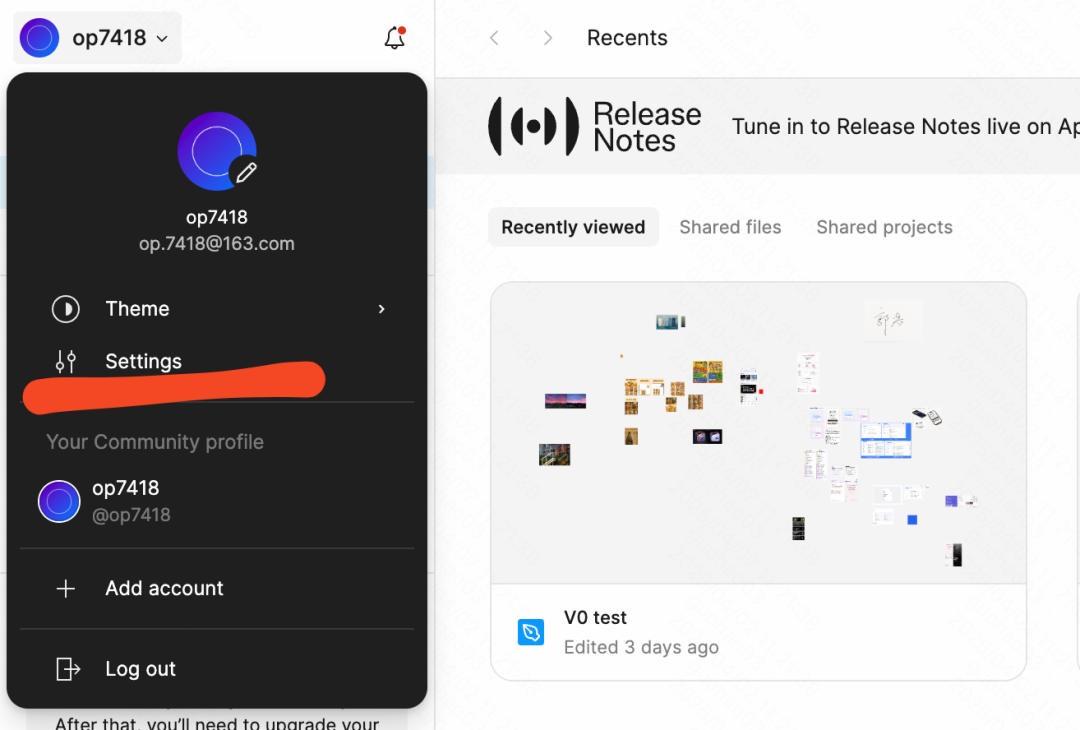

  - Log in to Figma, click your avatar in the upper left corner, and go to "Settings".
  - Find the "Personal access tokens" section in the settings.
  - Create a new token and set its scopes as required by the MCP service's documentation; read-only permissions (e.g., File content: read, Dev resources: read) are usually sufficient.
  - Copy the token immediately after generating it and store it safely, because it is displayed only once.

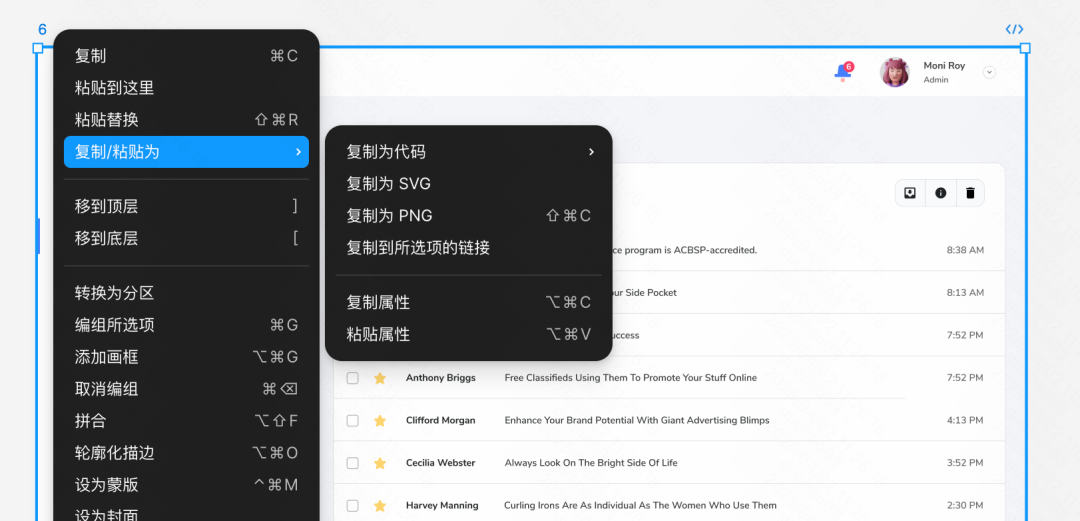

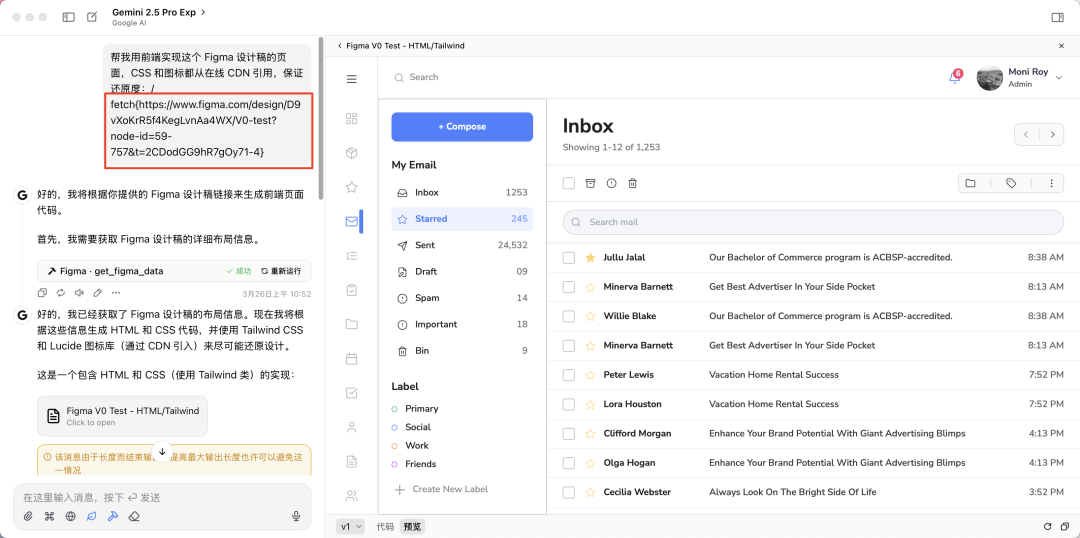

- Usage Process:

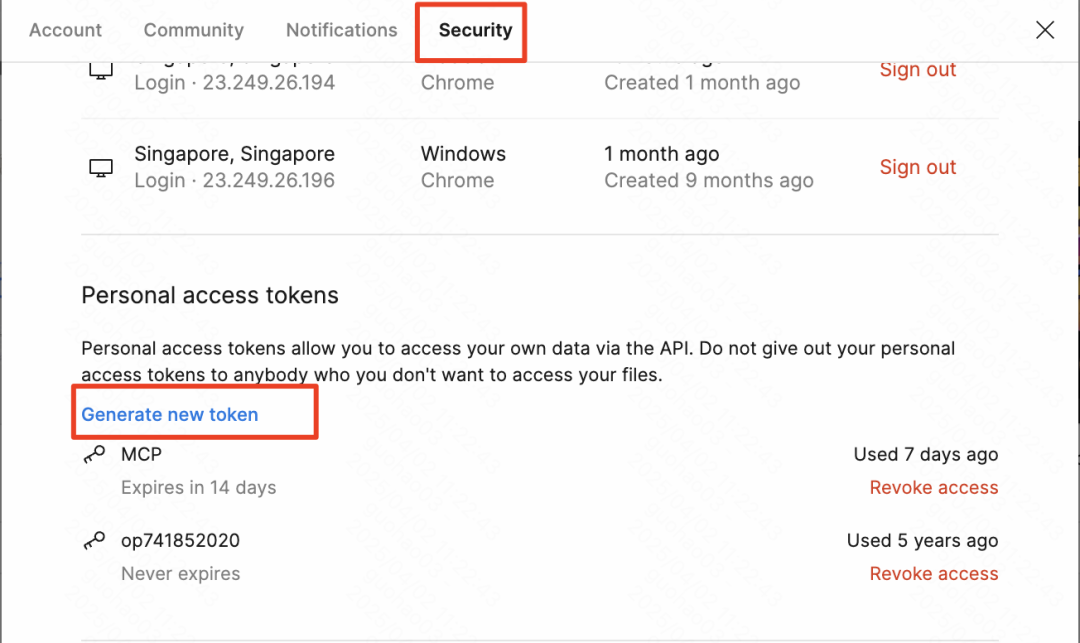

  - Open the design in Figma and select the frame or component you want to convert into a web page.
  - Right-click the selected element and choose "Copy/Paste as" > "Copy link to selection".
  - Enable the Figma MCP service in Chatwise.
  - Paste the copied Figma link into the chat box and instruct the AI: "Please generate web code based on this Figma design link". Models with strong code-generation capabilities are recommended, such as Google's Gemini 1.5 Pro or Anthropic's Claude 3 series.

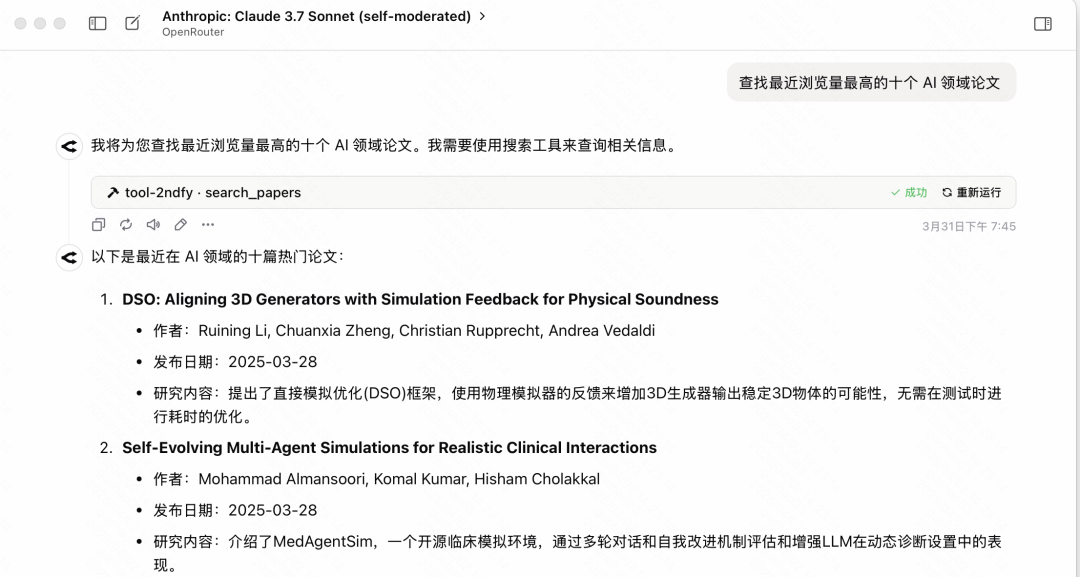

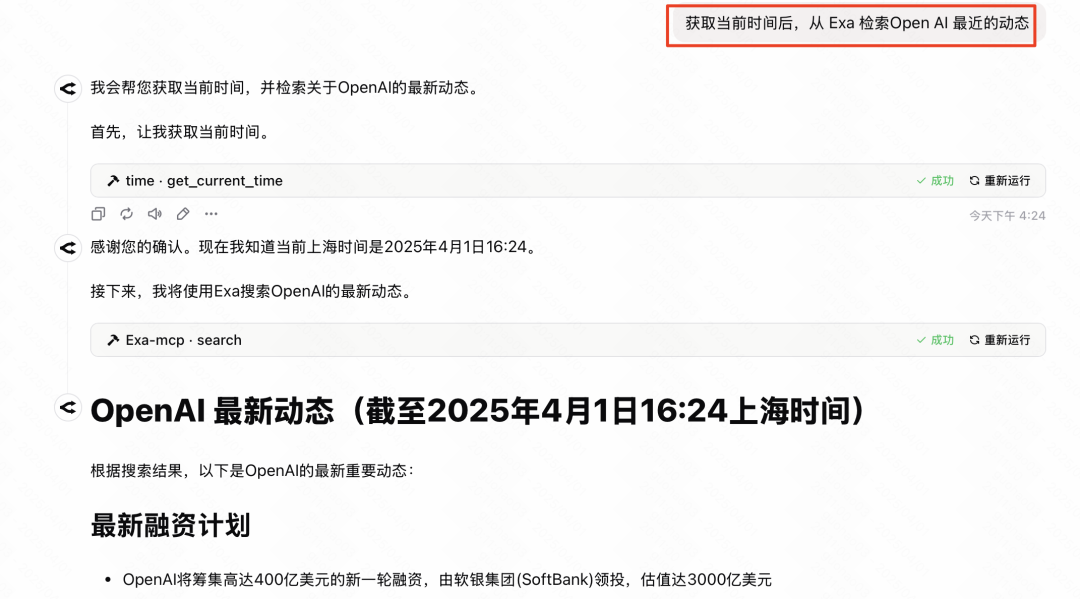
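The source does not explain how the Figma MCP server interprets the link; presumably it needs the file key and the selected node ID encoded in it. The Python sketch below pulls both out, assuming the common www.figma.com/design/<file_key>/... (or /file/...) URL shape with a node-id query parameter written as 12-34, which Figma's REST API writes as 12:34. The example link is made up.

```python
from typing import Optional
from urllib.parse import parse_qs, urlparse


def parse_figma_link(url: str) -> tuple[str, Optional[str]]:
    """Extract (file_key, node_id) from a Figma "Copy link to selection" URL."""
    parsed = urlparse(url)
    parts = [p for p in parsed.path.split("/") if p]
    # Assumed path shape: /design/<file_key>/<title> or /file/<file_key>/<title>
    if len(parts) < 2 or parts[0] not in ("design", "file"):
        raise ValueError(f"Not a recognised Figma file link: {url}")
    file_key = parts[1]
    node_ids = parse_qs(parsed.query).get("node-id")
    # Links write node IDs as "12-34"; the REST API writes them as "12:34".
    node_id = node_ids[0].replace("-", ":") if node_ids else None
    return file_key, node_id


if __name__ == "__main__":
    link = "https://www.figma.com/design/AbC123xyz/Landing-Page?node-id=12-34&t=abc"
    print(parse_figma_link(link))
```

If the link has no node-id, the whole file rather than a selection is referenced, which tends to produce much larger responses for the model to process.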

Case 2: Building a Custom AI Search Function (Exa + Time)

- Functionality: Combines two MCP services to give the AI the ability to sense the current time and perform web searches, so it can answer time-sensitive questions or run queries based on the most up-to-date information.
- Required MCP services:
  - Time MCP: Gets the current date and time. An official implementation is usually provided by a model provider (e.g., Anthropic for Claude), or a community version can be used.
  - Exa Search MCP: Uses the Exa (formerly Metaphor) API to run high-quality web searches.

- Chatwise Parameters - Time MCP:
  - Type: Stdio
  - ID: TimeMCP
  - Command: uvx mcp-server-time --local-timezone Asia/Shanghai
  - Environment variables: none
  - Note: Set the --local-timezone value to your own time zone, e.g., Asia/Shanghai or America/New_York.
- Chatwise Parameters - Exa Search MCP:
  - Type: Stdio
  - ID: ExaSearchMCP
  - Command: npx -y exa-mcp-server
  - Environment variables: EXA_API_KEY=YOUR_EXA_API_KEY
  - Note: Replace YOUR_EXA_API_KEY with your own Exa API key.
- Get an Exa API key: Register an account on the official Exa website, then create an API key for free on the API key management page (https://dashboard.exa.ai/api-keys).
- Usage Process:

  - Enable both Time MCP and Exa Search MCP in Chatwise.
  - Ask the AI a question that requires combining the current time with a web search, such as "Has OpenAI released any important news lately?"
  - The AI should automatically call Time MCP to establish the "recent" time range, use Exa Search MCP to search for relevant news, and then combine the information into an answer. If a model does not fetch the time on its own, you can prompt it explicitly when asking, e.g., "Please search for... based on today's date."

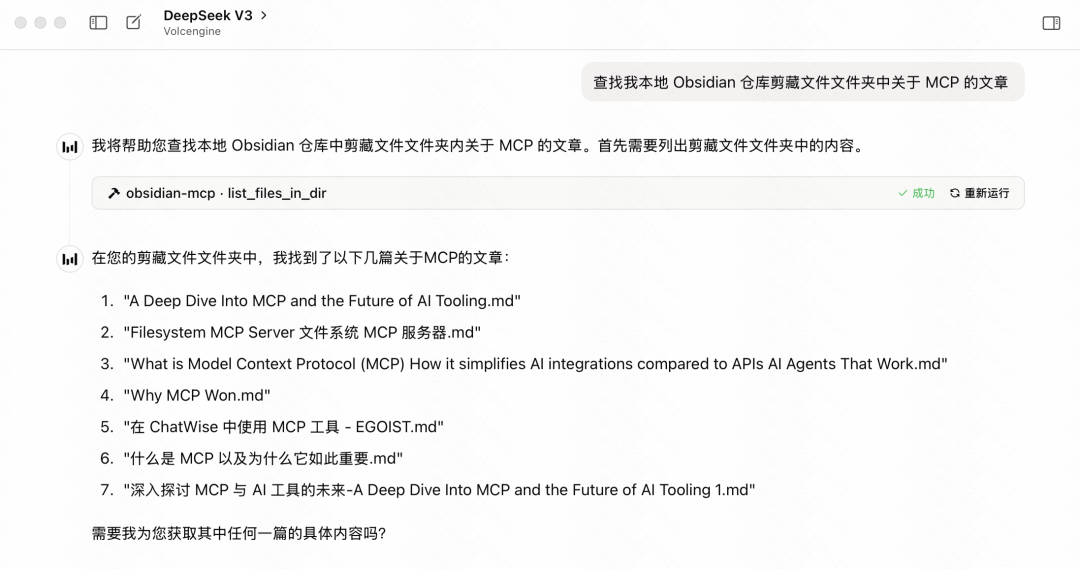
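The tip about stating today's date explicitly can be sketched in Python: compute a "recent" window from a known date and put both dates into the prompt. The 30-day window and the prompt wording are assumptions; in practice the date would come from the Time MCP or from you.

```python
from datetime import date, timedelta


def recent_window(today: date, days: int = 30) -> tuple[str, str]:
    """Return ISO start/end dates for the last `days` days, ending today."""
    start = today - timedelta(days=days)
    return start.isoformat(), today.isoformat()


def time_aware_prompt(topic: str, today: date, days: int = 30) -> str:
    """Hypothetical prompt that states today's date explicitly."""
    start, end = recent_window(today, days)
    return (
        f"Today is {end}. Please search for news about {topic} "
        f"published between {start} and {end}, then summarize the results."
    )


if __name__ == "__main__":
    print(time_aware_prompt("OpenAI", date.today()))
```

For example, recent_window(date(2025, 3, 15)) returns ("2025-02-13", "2025-03-15").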

Case 3: Connecting your Obsidian vault to AI

- MCP Services:

Obsidian Model Context Protocol(Source: https://mcp.so/zh/server/mcp-obsidian/smithery-ai) - functionality: Allows AI to retrieve and analyze user local

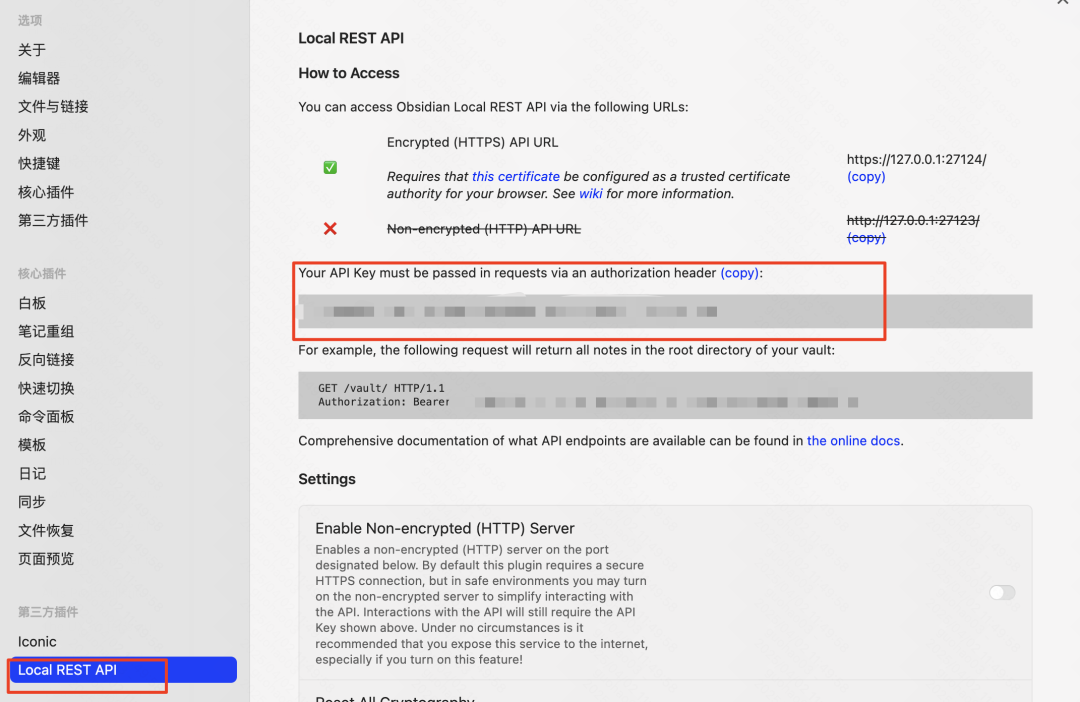

ObsidianThe content in the notes library enables Q&A, summarization, and other functions based on the personal knowledge base. - pre-conditions: Needed in

ObsidianInstall and enable theLocal REST APICommunity Plugin.- show (a ticket)

ObsidianSettings > Third Party Plugins > Community Plugin Marketplace. - Search and install

Local REST APIPlug-ins. - After installation, make sure the plugin is enabled in the "Installed Plugins" list.

- show (a ticket)

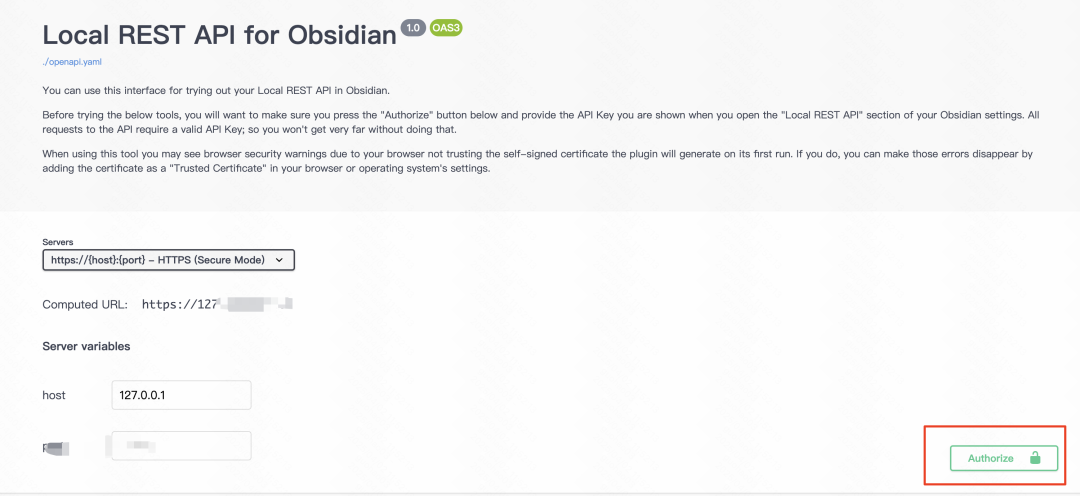

- Get the Local REST API key and authorize it:
  - On the Local REST API plugin's settings page, find the generated API key and copy it.
  - Visit the authorization page provided by the plugin: https://coddingtonbear.github.io/obsidian-local-rest-api/
  - Click the "Authorize" button at the bottom right of the page and enter the API key to complete authorization, so that the MCP service can access Obsidian through the API.

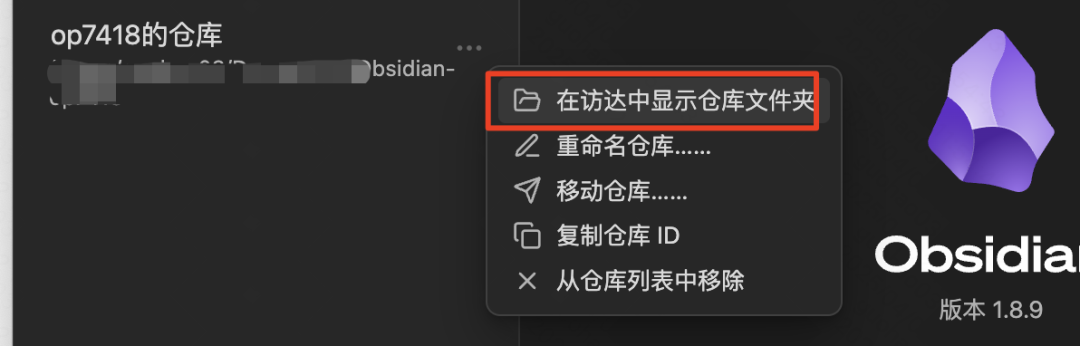

- Get the Obsidian vault path:
  - In Obsidian, click the current vault name in the lower left corner and select "Manage vaults".
  - Find your vault, click the option next to it (usually three dots or a similar icon), and select "Show in File Explorer" (Windows) or "Reveal in Finder" (macOS).
  - Copy the full path of this vault folder. On macOS, you can hold the Option key, right-click the folder, and select "Copy "folder name" as Pathname".

- Chatwise Parameters:
  - Type: Stdio
  - ID: ObsidianMCP
  - Command: uv tool run mcp-obsidian --vault-path "YOUR_OBSIDIAN_VAULT_PATH"
  - Environment variables: OBSIDIAN_API_KEY=YOUR_OBSIDIAN_LOCAL_REST_API_KEY
  - Note: Replace "YOUR_OBSIDIAN_VAULT_PATH" with the full path of the Obsidian vault folder you copied. It is best to keep quotation marks around the path, especially if it contains spaces.
  - Note: Replace YOUR_OBSIDIAN_LOCAL_REST_API_KEY with the API key obtained from the Local REST API plugin settings.
  - The original author notes that this service's documentation is unclear, so the configuration may need some debugging for your own setup.

- Usage Process:

  - Enable the Obsidian MCP service in Chatwise.
  - Ask the AI to find, summarize, or analyze relevant content in a specific folder or across your whole Obsidian vault. For example: "Please look in my 'Project Notes' folder for articles about the MCP protocol and summarize their key points."

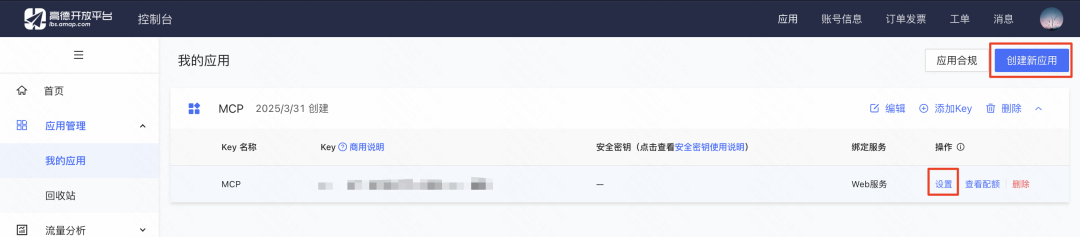
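Two of the values above are easy to get wrong: the vault path and the API key. As a rough self-check, the Python sketch below tests whether a folder looks like an Obsidian vault (every vault keeps its settings in a hidden .obsidian folder) and builds an authenticated request for the Local REST API plugin. The address https://127.0.0.1:27124 and the Bearer-token header are assumptions about the plugin's defaults, so confirm them on the plugin's settings page. The request is built but not sent; the plugin's HTTPS endpoint may also use a self-signed certificate that urllib rejects by default.

```python
import tempfile
import urllib.request
from pathlib import Path

# Assumption: the plugin's default HTTPS address; check its settings page.
DEFAULT_BASE_URL = "https://127.0.0.1:27124"


def looks_like_vault(path: str) -> bool:
    """An Obsidian vault folder contains a hidden ".obsidian" settings folder."""
    root = Path(path).expanduser()
    return root.is_dir() and (root / ".obsidian").is_dir()


def status_request(api_key: str, base_url: str = DEFAULT_BASE_URL) -> urllib.request.Request:
    """Build, but do not send, a request to the plugin's root URL with a Bearer token."""
    return urllib.request.Request(
        base_url.rstrip("/") + "/",
        headers={"Authorization": f"Bearer {api_key}"},
    )


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as folder:
        print(looks_like_vault(folder))  # False: no .obsidian folder yet
        (Path(folder) / ".obsidian").mkdir()
        print(looks_like_vault(folder))  # True
    request = status_request("YOUR_OBSIDIAN_LOCAL_REST_API_KEY")
    print(request.full_url, request.get_header("Authorization"))
```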

Case 4: Retrieving locations and generating a display web page with the Amap MCP

- MCP Service: Amap (AutoNavi) Maps MCP service (@amap/amap-maps-mcp-server)
- Functionality: Uses the Amap Maps API to perform geolocation queries (e.g., searching for nearby points of interest, or POIs) and obtain information such as latitude and longitude. It can be combined with other capabilities (e.g., the AI's code generation) to build applications based on geographic information.
- Prerequisites: You need to register an Amap Open Platform developer account and create an application to obtain an API key.
  - Visit the Amap Open Platform (https://console.amap.com/dev/key/app).
  - Register and complete individual developer verification.
  - Create a new application in the console.
  - Add a key for the application and select "Web Service" as the service platform.
  - Copy the generated API key.

- Chatwise Parameters:
  - Type: Stdio
  - ID: AmapMCP
  - Command: npx -y @amap/amap-maps-mcp-server
  - Environment variables: AMAP_MAPS_API_KEY=YOUR_AMAP_API_KEY
  - Note: Replace YOUR_AMAP_API_KEY with the Amap Web Service API key you applied for.

- Usage Flow (Example):

  - Enable the Amap Maps MCP in Chatwise.
  - First, you may need to have the AI obtain the latitude and longitude of your current location or of a specified location (if the MCP supports it).
  - Then instruct the AI to use the Amap MCP to search nearby for a specific type of place, e.g., "Please find the 5 highest-rated cafes near my current location and list their names, addresses, and ratings."
  - Advanced: Once you have the list of cafes, you can combine it with the AI's code generation capabilities (which may require other MCP services or native model capabilities) and ask the AI to organize the information into a simple HTML page for presentation. Related prompt engineering tips can help produce a more visually appealing result.
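The advanced step can also be done without having the model write the markup. Given a list of places already extracted from the Amap MCP results, this Python sketch renders a minimal HTML page sorted by rating, escaping text so that names containing characters such as < or & display correctly. The field names and sample cafes are placeholders, not the Amap response format.

```python
import html

# Placeholder data: map the real Amap MCP results into these keys first.
cafes = [
    {"name": "Example Cafe A", "address": "1 Example Road", "rating": 4.8},
    {"name": "Example Cafe <B>", "address": "2 Example Road", "rating": 4.6},
]


def render_page(places: list[dict], title: str = "Nearby cafes") -> str:
    """Render places as a minimal, escaped HTML table sorted by rating (highest first)."""
    rows = "\n".join(
        "<tr><td>{}</td><td>{}</td><td>{:.1f}</td></tr>".format(
            html.escape(p["name"]), html.escape(p["address"]), p["rating"]
        )
        for p in sorted(places, key=lambda p: p["rating"], reverse=True)
    )
    return (
        '<!DOCTYPE html>\n<html><head><meta charset="utf-8">'
        f"<title>{html.escape(title)}</title></head><body>\n"
        f"<h1>{html.escape(title)}</h1>\n"
        "<table><tr><th>Name</th><th>Address</th><th>Rating</th></tr>\n"
        f"{rows}\n</table></body></html>\n"
    )


if __name__ == "__main__":
    print(render_page(cafes))
```

Save the output as an .html file and open it in a browser; styling can then be added by hand or by the AI.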

Case 5: Retrieving and downloading papers from arXiv

- MCP Service: arxiv-mcp-server
- Functionality: Allows the AI to search papers on the academic preprint site arXiv directly and, on instruction, download the PDF files of specified papers to a local folder.
- Chatwise Parameters:
  - Type: Stdio
  - ID: ArxivMCP
  - Command: uv tool run arxiv-mcp-server --storage-path "/path/to/your/paper/storage"
  - Environment variables: none
  - Note: Replace "/path/to/your/paper/storage" with the local folder path where you want downloaded papers saved. Make sure the path exists and the program has write permission. Windows users must use Windows-style paths, such as "C:\Users\YourUser\Documents\Papers".

- Usage Process:

  - Enable the Arxiv MCP service in Chatwise.
  - Instruct the AI to search for and download papers, e.g., "Please search arXiv for 10 papers on 'Transformer Model Optimization' from the last month, list their titles and abstracts, and download them to the specified directory."

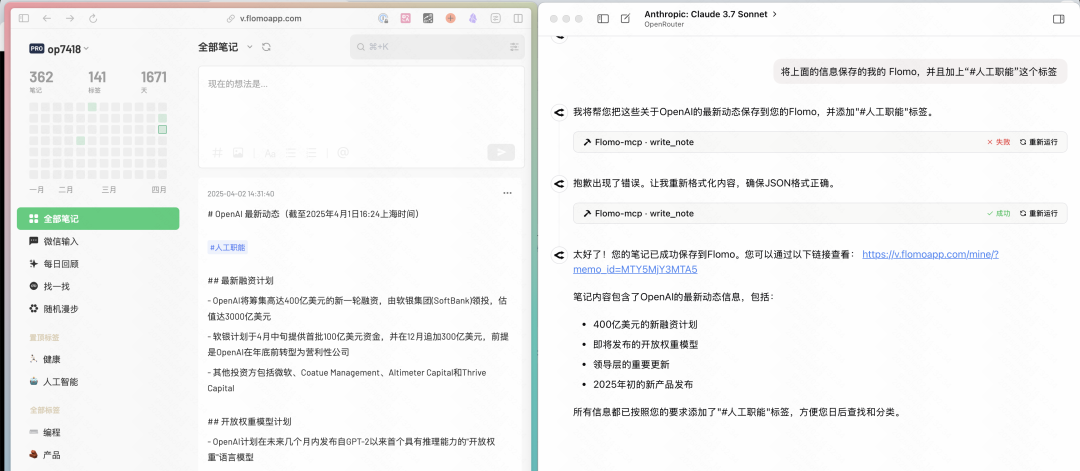
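The source asks you to make sure the storage path exists and is writable. The Python sketch below creates the folder if necessary and checks write permission with os.access. It also shows a related pitfall: if you put a Windows path into a JSON configuration (for example, for Windsurf) rather than into Chatwise's command field, each backslash must be escaped, which json.dumps does automatically. The paths are examples.

```python
import json
import os
import tempfile
from pathlib import Path


def prepare_storage(path: str) -> Path:
    """Create the download folder if needed and confirm it is writable."""
    folder = Path(path).expanduser()
    folder.mkdir(parents=True, exist_ok=True)
    if not os.access(folder, os.W_OK):
        raise PermissionError(f"No write permission for {folder}")
    return folder.resolve()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(prepare_storage(os.path.join(tmp, "papers")))
    # Inside a JSON config file, each Windows backslash must be doubled.
    args = ["tool", "run", "arxiv-mcp-server", "--storage-path",
            r"C:\Users\YourUser\Documents\Papers"]
    print(json.dumps(args))
```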

Case 6: Quickly creating Flomo notes through conversation

- MCP Service: @chatmcp/mcp-server-flomo (contributed by community developers such as Tease)
- Functionality: Allows the AI to send content or summaries generated in the chat, as well as information obtained through other MCP tools, directly to your Flomo account, with tags.
- Prerequisites: You need to obtain an API access link (webhook URL) from Flomo.
  - Go to Settings > Flomo API in the Flomo web or mobile app.
  - Copy the provided API access link.

- Chatwise Parameters:
  - Type: Stdio
  - ID: FlomoMCP
  - Command: npx -y @chatmcp/mcp-server-flomo
  - Environment variables: FLOMO_API_URL=YOUR_FLOMO_API_URL
  - Note: Replace YOUR_FLOMO_API_URL with the API access link you obtained from Flomo.
- Usage Process:

  - Enable the Flomo MCP service in Chatwise.
  - After chatting with the AI, if you want to save a particularly valuable piece of content, instruct the AI directly: "Please save the MCP configuration points you just wrote to my Flomo, with the tags #AI #MCP."
  - It can also be combined with other MCP services, e.g., "Please search for the latest AI industry news and send a summary of the results to my Flomo."
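For reference, the Flomo API link is a webhook, and the MCP server ultimately sends memos to it over HTTP. The Python sketch below builds, without sending, such a request, assuming the link accepts a JSON body with a content field and that tags are written inline as #hashtags; neither detail comes from this article, so check Flomo's API page. The link is read from FLOMO_API_URL, the same variable name used in the Chatwise parameters, with a placeholder fallback.

```python
import json
import os
import urllib.request


def build_memo_request(api_url: str, text: str, tags: list[str]) -> urllib.request.Request:
    """Build, but do not send, a POST that would create one Flomo memo.

    Assumptions: the API link accepts a JSON body with a "content" field, and
    tags are written inline in the text as #hashtags.
    """
    tag_text = " ".join(f"#{tag.lstrip('#')}" for tag in tags)
    content = f"{text}\n{tag_text}" if tag_text else text
    return urllib.request.Request(
        api_url,
        data=json.dumps({"content": content}, ensure_ascii=False).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder fallback; set FLOMO_API_URL to your real API link.
    url = os.environ.get("FLOMO_API_URL", "https://example.invalid/your-flomo-api-link")
    request = build_memo_request(url, "Key points of MCP configuration", ["AI", "MCP"])
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
    # Sending it would be: urllib.request.urlopen(request)
```

Treat the API link like a password: anyone who has it can post memos to your account.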

Conclusion: Prospects and Challenges for MCP

The emergence of the MCP protocol signals that the AI tool ecosystem is moving toward greater openness and interconnectivity. Much as the HTTP protocol unified the standards for web access, MCP is expected to become a key protocol for unifying AI's interaction with the outside world. This standardization is not only a technological advance; it may also bring a paradigm shift in AI applications, transforming AI from a passive, responsive role into an intelligent agent that can actively invoke tools and perform complex tasks.

With MCP, complex AI workflows that would otherwise require large teams to build can, in theory, increasingly be customized and assembled by individual users with a certain level of technical understanding. This is undoubtedly an important step in democratizing AI capabilities.

However, MCP is still at an early stage of development, and the complexity of its configuration is one of the main barriers to widespread adoption. Technological innovation is often accompanied by usability challenges: a balance must be found between openness and flexibility on one side and a simple, intuitive user experience on the other. This is common in the early stages of emerging technologies. As the ecosystem matures, the toolchain improves, and more out-of-the-box solutions become available (e.g., possible one-click installation of MCP tools in the future), this tension is expected to ease gradually.

For avid explorers of cutting-edge technology, now is a good time to start experimenting with and understanding technologies like MCP. Even if the process has some ups and downs, it is an investment in future skills. As AI becomes increasingly widespread, proficiency with AI tools and an understanding of how they work will be key to improving individual and organizational effectiveness.

© Copyright notice

The copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
