MAI-UI - Alibaba Tongyi Lab's Open-Source General-Purpose GUI Agent Foundation Model

What is MAI-UI?

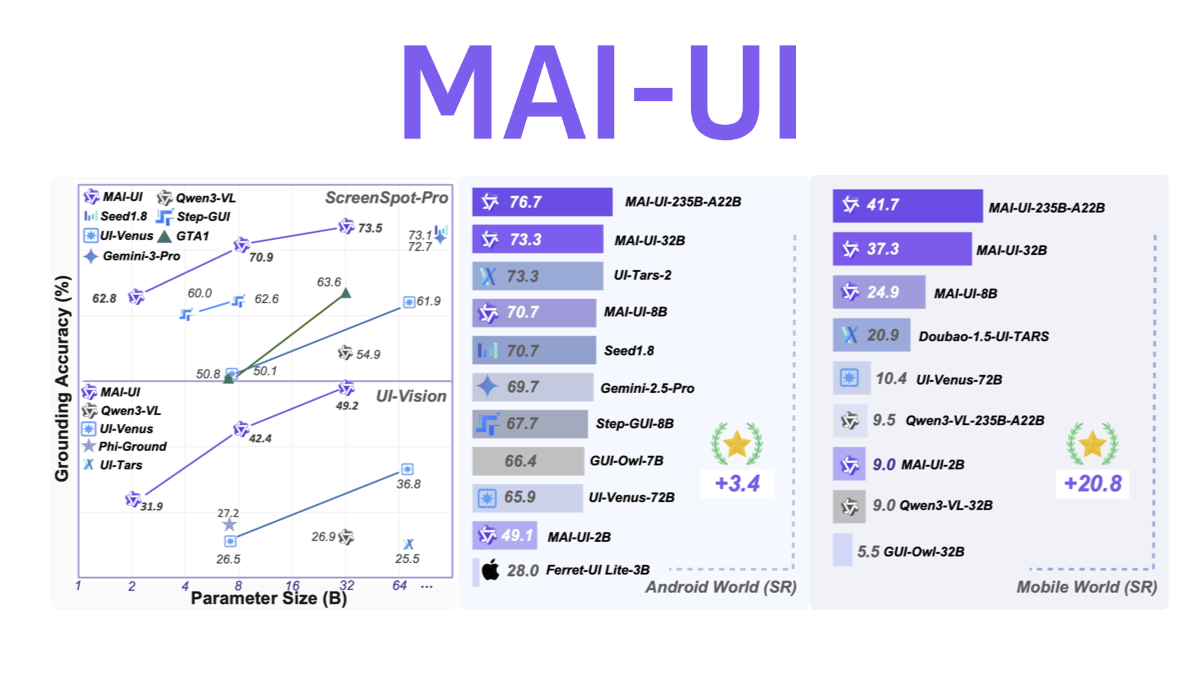

MAI-UI is an open-source, general-purpose GUI agent foundation model from Alibaba's Tongyi Lab. It has four core capabilities: cross-application operation, understanding of ambiguous instructions, proactive user interaction, and multi-step task coordination. It uses a device-cloud collaborative architecture: a lightweight model runs on the device to handle everyday tasks, while complex tasks can call a larger model in the cloud, with user privacy and security protected. MAI-UI ranks first on five established benchmarks, including ScreenSpot-Pro, and sets a new record on Android task execution with a 76.7% success rate. Its innovations include a proactive interaction mechanism (it asks the user when an instruction is unclear), MCP tool invocation (it replaces tedious UI operations with API calls), and adaptation to dynamic environments through online reinforcement learning. The 2B and 8B versions are open source, and the model supports one-click deployment with Docker.
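The device-cloud split described above can be pictured with a small routing sketch. This is only an illustration under stated assumptions: the `Task` fields, the `route` function, and the step threshold are all hypothetical and do not come from the MAI-UI codebase or paper, and the real system may decide quite differently.

```python
from dataclasses import dataclass
from typing import Callable


# Hypothetical task description; none of these fields are MAI-UI's own.
@dataclass
class Task:
    instruction: str
    estimated_steps: int         # rough guess of how many UI steps are needed
    contains_private_data: bool  # e.g. passwords or payment details


def route(task: Task, max_local_steps: int = 5) -> str:
    """Return "device" or "cloud" for a task.

    Assumed policy: privacy-sensitive tasks never leave the device;
    otherwise short tasks stay local and longer ones go to the cloud.
    """
    if task.contains_private_data:
        return "device"
    if task.estimated_steps <= max_local_steps:
        return "device"
    return "cloud"


def run(
    task: Task,
    local_model: Callable[[str], str],
    cloud_model: Callable[[str], str],
) -> str:
    """Send the instruction to whichever model the routing policy selects."""
    model = local_model if route(task) == "device" else cloud_model
    return model(task.instruction)
```

In this sketch the privacy check comes first, so a long task that touches sensitive data still stays on the device; that ordering is a design assumption, not something the source specifies.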

Key features of MAI-UI

- Complex task execution: Completes complex tasks such as checking ticket availability, syncing information to group chats, and rescheduling meetings.

- Proactive interaction: Asks the user clarifying questions when an instruction is unclear.

- Structured tool calls: Supports calling structured tools such as map search and route planning APIs, replacing tedious sequences of clicks in the interface.
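The three features above suggest an agent whose output at each step is either a UI operation, a question for the user, or a tool call. The following is a minimal sketch of such a dispatcher, assuming a hypothetical `Action` schema with `kind` and `payload` fields; MAI-UI's actual output format and tool interface may differ.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


# Hypothetical action schema, for illustration only.
@dataclass
class Action:
    kind: str  # "ui", "ask_user", or "tool"
    payload: Dict[str, Any] = field(default_factory=dict)


def dispatch(
    action: Action,
    tools: Dict[str, Callable[..., Any]],
    ask: Callable[[str], str],
    ui_log: List[Dict[str, Any]],
) -> Any:
    """Execute one agent step and return its result."""
    if action.kind == "ask_user":
        # Proactive interaction: pass the clarifying question to the user.
        return ask(action.payload["question"])
    if action.kind == "tool":
        # Structured tool call: look up the tool by name and call it with keyword arguments.
        tool = tools[action.payload["name"]]
        return tool(**action.payload.get("arguments", {}))
    if action.kind == "ui":
        # UI operation: recorded here instead of being sent to a real device.
        ui_log.append(action.payload)
        return "ok"
    raise ValueError(f"unknown action kind: {action.kind}")
```

A caller would register tools in a plain dictionary, for example `{"route_planner": plan_route}`, where `plan_route` is any function the caller supplies. A single tool call can then stand in for what would otherwise be a series of taps and text entries in a maps app.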

MAI-UI's core strengths

- Multiple parameter scales: The model family includes 2B, 8B, and other sizes, of which the 2B and 8B models are open source.

- Cross-platform applicability: Works with interface interaction scenarios on different platforms, such as mobile phones and computers.

- Strong benchmark performance: Achieves leading scores on several GUI understanding and task execution benchmarks, such as ScreenSpot-Pro and AndroidWorld.

What is MAI-UI's official website?

- Project website: https://tongyi-mai.github.io/MAI-UI/

- GitHub repository: https://github.com/Tongyi-MAI/MAI-UI

- HuggingFace model library: https://huggingface.co/Tongyi-MAI/models

- arXiv technical paper: https://arxiv.org/pdf/2512.22047

Who MAI-UI is for

- AI and machine learning researchers: Can use MAI-UI to study multimodal interaction and to explore how to further improve a model's interface understanding and task execution.

- Software engineers: Developers building applications with complex interactions can integrate MAI-UI to add intelligent features that improve the user experience, such as smarter operation guidance and task automation in office software and lifestyle service apps.

- Human-computer interaction designers: Can use MAI-UI to test and optimize interface designs and to observe how the model interacts with different interface elements. This helps them design interfaces that better match users' habits and are easier for agents to understand and operate, improving usability and interaction efficiency.

- Enterprise application developers: For enterprises that need task automation and intelligent interaction in their internal systems, MAI-UI can help build customized solutions, such as cross-module operations and data flows in enterprise resource planning (ERP) and customer relationship management (CRM) systems, to improve efficiency.

Copyright

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
