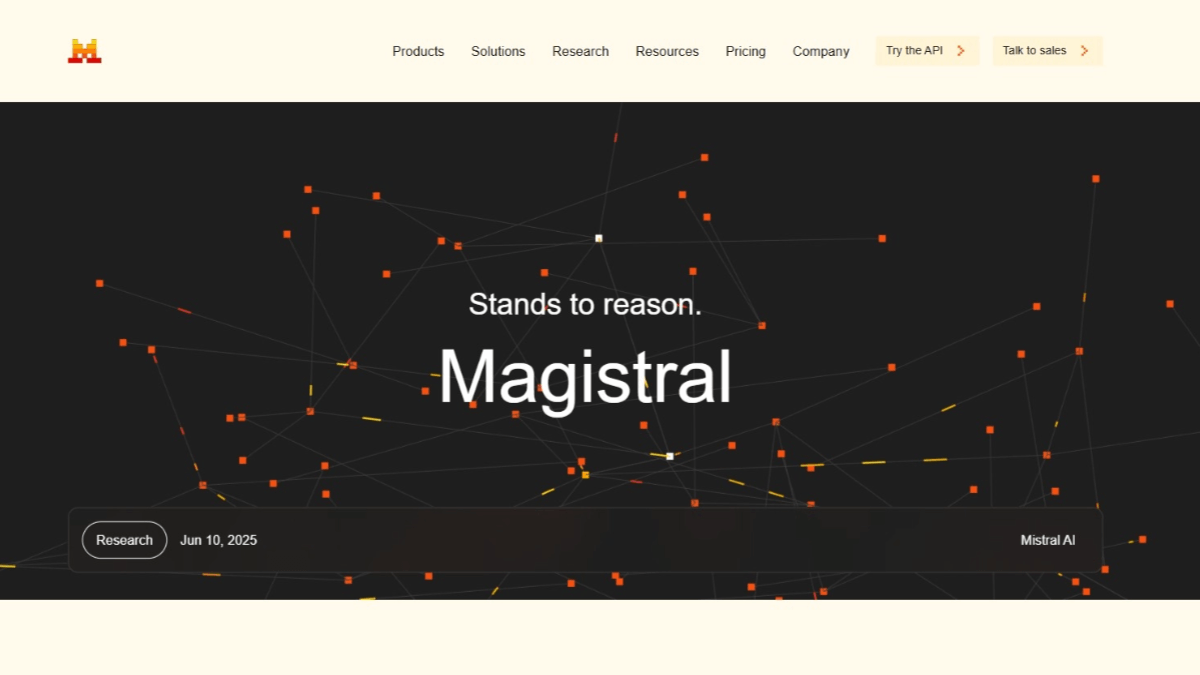

Magistral: a series of reasoning models from Mistral AI

What's Magistral?

Magistral is a series of reasoning models from Mistral AI that focuses on transparent, multilingual, and domain-specific reasoning. It comes in an open-source version (Magistral Small) and an enterprise version (Magistral Medium); the latter scored 73.6% on AIME2024, rising to 90% with majority voting. Magistral supports multiple languages, including English, French, Spanish, German, Italian, Arabic, Russian, and Simplified Chinese, and provides a traceable thought process suited to applications in law, finance, healthcare, and software development. The models are built with deep learning and reinforcement learning techniques, and inference is fast, making them suitable for large-scale real-time reasoning and user feedback.
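The article does not say how the majority-vote score is computed. As a rough illustration only, the sketch below shows the general idea of majority voting: sample several answers per problem, keep the most frequent one, and compare accuracy against a single run. The helper names and the sample answers are hypothetical and are not taken from Mistral AI's evaluation.

```python
from collections import Counter


def majority_vote(answers):
    """Return the most frequent answer among several sampled completions.

    Ties go to the answer that appeared first in the list.
    """
    if not answers:
        raise ValueError("need at least one sampled answer")
    counts = Counter(answers)
    # most_common keeps first-seen order for answers with equal counts.
    return counts.most_common(1)[0][0]


def accuracy(predictions, references):
    """Fraction of problems whose prediction equals the reference answer."""
    correct = sum(p == r for p, r in zip(predictions, references))
    return correct / len(references)


# Hypothetical final answers from four sampled runs on three problems.
samples_per_problem = [
    ["204", "204", "210", "204"],
    ["33", "17", "17", "17"],
    ["8", "9", "8", "8"],
]
references = ["204", "17", "8"]

single_run = [runs[0] for runs in samples_per_problem]
voted = [majority_vote(runs) for runs in samples_per_problem]

print("single-run accuracy:", accuracy(single_run, references))
print("majority-vote accuracy:", accuracy(voted, references))
```

In this toy data the first run gets the second problem wrong, while the vote across four runs recovers the right answer, which is why a majority-vote score can exceed a single-run score.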

Magistral's main features

- Transparent reasoning: Supports multi-step logical reasoning and provides a clear thought process for users to understand the decision basis of the model.

- Multi-language support: Covers a wide range of languages, including English, French, Spanish, German, Italian, Arabic, Russian, and Simplified Chinese.

- Fast inference: Uses the Flash Answers feature in Le Chat to deliver fast responses for real-time application scenarios.
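To make the "transparent reasoning" feature concrete, here is a minimal sketch of separating a model's thought process from its final answer. It assumes the reasoning trace is wrapped in `<think>` and `</think>` tags; that delimiter, the `split_reasoning` helper, and the sample output are assumptions for illustration and are not specified in this article.

```python
import re

# Assumed delimiters for the reasoning trace; not stated in this article.
THINK_PATTERN = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text):
    """Split raw model output into (reasoning_steps, final_answer)."""
    match = THINK_PATTERN.search(text)
    if match is None:
        # No trace found: treat the whole output as the answer.
        return [], text.strip()
    steps = [line.strip() for line in match.group(1).splitlines() if line.strip()]
    answer = text[match.end():].strip()
    return steps, answer


# Hypothetical output, written by hand for illustration.
raw_output = """<think>
The contract term is 24 months.
Notice must be given 3 months before expiry.
So the latest notice date is the end of month 21.
</think>
Notice must be given by the end of month 21."""

steps, answer = split_reasoning(raw_output)
for number, step in enumerate(steps, start=1):
    print(f"Step {number}: {step}")
print("Answer:", answer)
```

Keeping the steps separate from the answer lets an application show or log the reasoning for review while passing only the final answer downstream.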

Magistral's official links

- Project website: https://mistral.ai/news/magistral

- HuggingFace model library: https://huggingface.co/mistralai/Magistral-

- Technical paper: https://mistral.ai/static/research/magistral.pdf

How to use Magistral

- Getting the model: The open-source version is available from the HuggingFace model library and can be loaded with HuggingFace's transformers library.

- Installing dependencies: Make sure the required libraries, such as transformers and torch, are installed in your environment:

      pip install transformers torch

- Loading the model: Load the model and tokenizer with HuggingFace's transformers library:

      from transformers import AutoModelForCausalLM, AutoTokenizer

      # Load the model and tokenizer
      model_name = "mistralai/Magistral"  # replace with the specific model name
      tokenizer = AutoTokenizer.from_pretrained(model_name)
      model = AutoModelForCausalLM.from_pretrained(model_name)

- Running the model: The following is a simple reasoning example:

      # Input text
      input_text = "Please explain what artificial intelligence is."
      inputs = tokenizer(input_text, return_tensors="pt")

      # Generate the result (max_length counts prompt tokens plus generated tokens)
      outputs = model.generate(**inputs, max_length=100)
      result = tokenizer.decode(outputs[0], skip_special_tokens=True)
      print(result)

- Adjusting parameters: Tune the generation parameters as needed, such as max_length (the maximum length of the generated sequence, in tokens) and temperature (which controls how varied the generated text is).
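To show what the temperature parameter does, the following standalone sketch applies temperature scaling to a made-up set of token scores before converting them to probabilities. It is a simplified illustration of the general technique, not the code that transformers runs internally; `softmax_with_temperature` and `toy_logits` are hypothetical names chosen for this example.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities, scaled by a sampling temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(score - peak) for score in scaled]
    total = sum(exps)
    return [value / total for value in exps]


# Made-up scores for three candidate next tokens.
toy_logits = [2.0, 1.0, 0.5]

for temperature in (0.2, 0.7, 1.5):
    probs = softmax_with_temperature(toy_logits, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

Lower temperatures concentrate probability on the top-scoring token, so output becomes more deterministic; higher temperatures flatten the distribution and increase variety. In transformers, `temperature` only affects `generate` when sampling is enabled with `do_sample=True`.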

Magistral's core strengths

- Transparent reasoning: Provides a clear, traceable thought process, so users can follow each step of the model's reasoning.

- Multi-language support: Supports multiple languages, including English, French, Spanish, German, Italian, Arabic, Russian, and Simplified Chinese, helping users work across language barriers.

- Fast inference: Magistral Medium's inference is reported to be up to 10x faster than most competitors, making it suitable for large-scale real-time inference and rapid decision-making scenarios.

- Adaptable: Fits a variety of application scenarios, including legal, financial, healthcare, software development, and content creation.

- Enterprise-level support: Enterprise-level support and services help ensure the model's stability and reliability in real-world applications.

- Open source and flexible: The open-source version suits individual developers and small projects, offering a high degree of flexibility and customization.

Who is Magistral for?

- Legal professionals: Attorneys, legal staff, and legal researchers can use it for case analysis, contract review, and legal research to improve efficiency and accuracy.

- Financial practitioners: Financial analysts, risk assessors, and compliance officers at financial institutions can use it for financial forecasting, risk assessment, and compliance checks to support better decision-making.

- Healthcare workers: Physicians, medical researchers, and medical data analysts can use it to assist with medical diagnosis, treatment planning, and medical data analysis, improving the quality of care and research efficiency.

- Software developers: Software engineers and project managers can use it to streamline the development process, including project planning, code generation, and system architecture design, improving development efficiency and code quality.

- Content creators: Advertising copywriters, novelists, and news editors can use it as a creative writing and copy generation tool to spark ideas and improve writing productivity.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
