MagicArticulate: generating skeletal structure animation assets from static 3D models

General Introduction

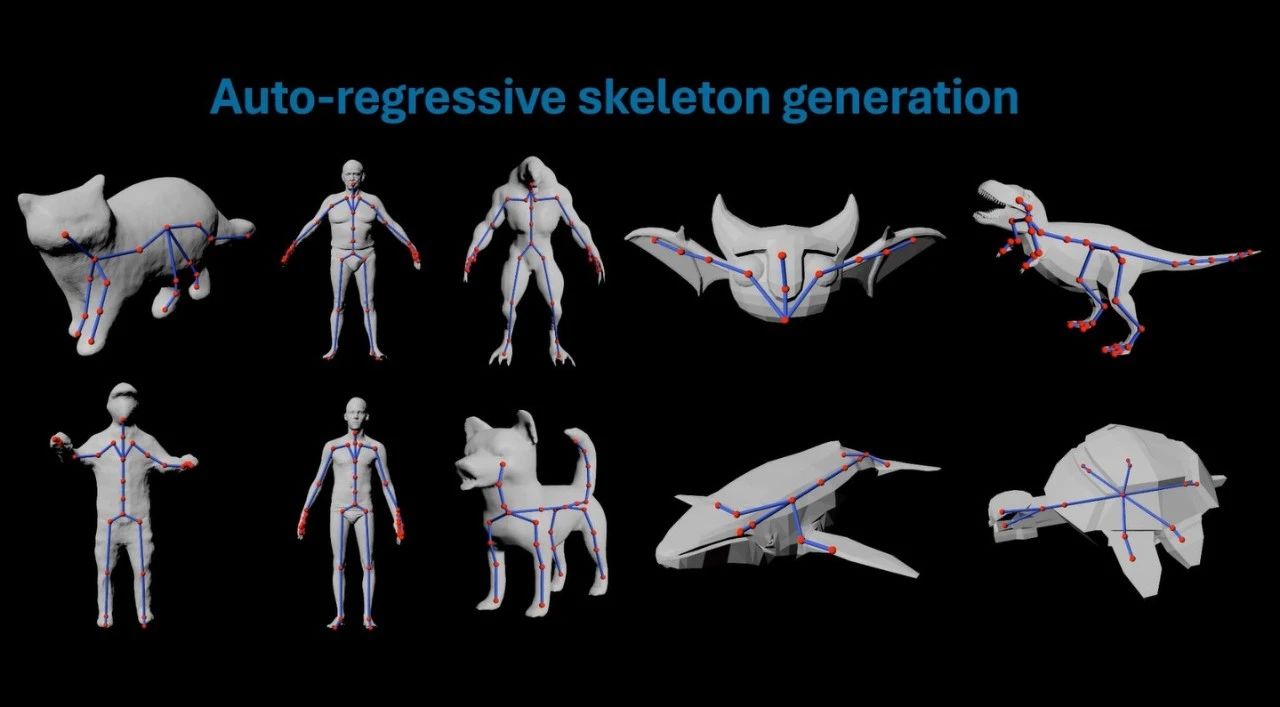

MagicArticulate is an AI framework developed by ByteDance in collaboration with Nanyang Technological University (NTU) that focuses on rapidly transforming static 3D models into animation-enabled digital assets. It greatly simplifies the complex process of traditional 3D animation production by automatically generating skeletal structures and skinning weights for models through advanced autoregressive Transformer and functional diffusion modeling. Whether you are a game developer, VR/AR designer or film animator, this tool helps users save time and improve efficiency. The website showcases its core technical achievements, including the large-scale dataset Articulation-XL and open-source code for technical enthusiasts and professionals to explore and use.

Feature List

- Large-scale dataset support: Provides the Articulation-XL dataset, containing over 33,000 3D models with joint annotations.

- Automatic Bone Generation: Generate flexible skeletal structures for static models using autoregressive Transformer techniques.

- Skin weight prediction: Automatic generation of skin weights for natural deformations based on a functional diffusion model.

- Multi-category model processing: Support for generating animated assets for a wide range of 3D objects such as humanoids, animals, machinery, and more.

- Open source code and models: GitHub links are provided for users to download the code and pre-trained models.

Usage Guide

MagicArticulate is a technology-driven tool designed to make animating 3D models easy and efficient. Below is a detailed guide to help you get a full grasp of its features, from visiting the website to actually working with it.

Access and Installation Process

The MagicArticulate website can be viewed without installation, but to run the framework and experience the features, you need to configure your environment locally. The steps are as follows:

- Access the website

In your browser, go to https://chaoyuesong.github.io/MagicArticulate/. The page contains a project overview, technical highlights and download links.

- Download the source code

- Find the GitHub link at the bottom of the site or in the sidebar (usually https://github.com/ChaoyueSong/MagicArticulate).

- Click the "Code" button and select "Download ZIP" to download the zip file, or use the Git command:

git clone https://github.com/ChaoyueSong/MagicArticulate.git

- Extract the files to a local directory, for example MagicArticulate/.

- Configure the runtime environment

- Check the Python version: make sure Python 3.8+ is installed, using the command:

python --version

- Create a virtual environment (recommended):

python -m venv magic_env

source magic_env/bin/activate # Linux/Mac

magic_env\Scripts\activate # Windows

- Install the dependencies: go to the project directory and run:

pip install -r requirements.txt

If the project provides a Conda environment file, you can use Conda instead:

conda env create -f environment.yml

conda activate magicarticulate

- Additional dependencies: depending on the README, you may need to install PyTorch or other libraries with GPU support to improve performance.
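The environment checks described above can also be scripted. The following is a minimal sketch, not part of MagicArticulate: `check_environment` is a hypothetical helper that verifies the Python 3.8+ requirement stated in this guide and, assuming the README does call for PyTorch, reports whether it is installed and whether it can see a CUDA GPU.

```python
import importlib.util
import sys


def check_environment(min_version=(3, 8)):
    """Return a small report on whether this interpreter looks ready.

    Hypothetical helper for illustration; the 3.8 minimum comes from
    this guide, and the PyTorch check assumes the README requires it.
    """
    report = {
        "python_version": tuple(sys.version_info[:3]),
        "python_ok": sys.version_info[:2] >= tuple(min_version),
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "cuda_available": False,
    }
    if report["torch_installed"]:
        try:
            import torch

            report["cuda_available"] = bool(torch.cuda.is_available())
        except Exception:
            # A broken or partial install is treated as "not installed".
            report["torch_installed"] = False
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

Run it inside the activated virtual environment so that it inspects the same interpreter and packages the framework will use.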

- Launch the framework

Once the configuration is complete, run the sample script (refer to the GitHub documentation for specific commands), for example:

python main.py --input [path to 3D model] --output [output path]

Main feature workflow

Below are the core functions of MagicArticulate and detailed instructions:

1. Using the Articulation-XL dataset

- Functional Description: Provides 33,000+ 3D models with joint annotations for training or testing.

- Procedure:

- Find the dataset download link on the website or GitHub (permission may be required).

- Download and unzip it locally, e.g. data/articulation_xl/.

- Modify the configuration file or command line to specify the path:

python process.py --dataset_path data/articulation_xl/

- After running, load the dataset and view the annotation results, which can be used for validation or model input.
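The article does not document how the unzipped Articulation-XL download is laid out, so a reasonable first step is simply to inventory the folder. The sketch below assumes nothing about file names or formats: `summarize_dataset` is a hypothetical helper that counts files by extension under whatever path you unzipped to (`data/articulation_xl/` is the example path used above).

```python
from collections import Counter
from pathlib import Path


def summarize_dataset(root):
    """Count files under `root` (recursively) by lowercase extension.

    Hypothetical helper; it makes no assumption about the real
    Articulation-XL layout and only reports what is on disk.
    """
    root = Path(root)
    if not root.is_dir():
        raise FileNotFoundError(f"dataset directory not found: {root}")
    counts = Counter()
    for path in root.rglob("*"):
        if path.is_file():
            counts[path.suffix.lower() or "<no extension>"] += 1
    return dict(counts)


if __name__ == "__main__":
    import sys

    target = sys.argv[1] if len(sys.argv) > 1 else "data/articulation_xl/"
    for extension, count in sorted(summarize_dataset(target).items()):
        print(f"{extension}: {count}")
```

A quick inventory like this confirms that the download unpacked completely before you point any processing script at it.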

2. Automatic generation of skeletal structures

- Functional Description: Automatically generate bones for static 3D models to support diverse joint requirements.

- Procedure:

- Prepare a static 3D model (OBJ, FBX and similar formats are supported) and put it into the input directory (e.g. input/).

- Run the bone generation command:

python generate_skeleton.py --input input/model.obj --output output/

- Check the output directory (output/) for the generated model file containing bones.

- Open it in Blender or Maya and test whether the bones meet the animation requirements.
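To make "checking the bones" more concrete, it helps to remember that a skeleton is a tree of joints connected by bones. The sketch below is a generic illustration, not MagicArticulate's output format (which the article does not describe): it assumes a skeleton stored as a list of parent indices, with -1 marking the root, and the hypothetical `validate_skeleton` confirms that the joints form a single rooted tree before returning the list of bones.

```python
def validate_skeleton(parents):
    """Check a parent-index skeleton and return its bones.

    `parents[i]` is the index of joint i's parent, or -1 for the root.
    Returns a list of (parent, child) bones. Raises ValueError if the
    joints do not form exactly one rooted tree. The representation is
    an assumption made for illustration.
    """
    n = len(parents)
    roots = [i for i, p in enumerate(parents) if p == -1]
    if len(roots) != 1:
        raise ValueError(f"expected exactly one root, found {len(roots)}")
    for i, p in enumerate(parents):
        if p != -1 and not 0 <= p < n:
            raise ValueError(f"joint {i} has invalid parent index {p}")
    # Walking up from any joint must reach the root within n steps;
    # otherwise the parent links contain a cycle.
    for start in range(n):
        node, steps = start, 0
        while parents[node] != -1:
            node = parents[node]
            steps += 1
            if steps > n:
                raise ValueError(f"cycle detected starting at joint {start}")
    return [(p, i) for i, p in enumerate(parents) if p != -1]


if __name__ == "__main__":
    # A four-joint chain with one branch: 0 -> 1 -> {2, 3}
    print(validate_skeleton([-1, 0, 1, 1]))
```

A skeleton with several roots or with cyclic parent links generally cannot be animated as one rig, which is why these two conditions are worth testing first.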

3. Prediction of skinning weights

- Functional Description: Generate naturally deformed skin weights for models containing bones.

- Procedure:

- Use the model file generated in the previous step.

- Run the weight prediction script:

python predict_weights.py --input output/model_with_skeleton.obj --output output/

- Outputs model files containing weights that can be used directly for animation binding.

- Load it in 3D software and test the animation effects (e.g. walking, rotating).
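To see what skinning weights do once they are loaded into 3D software, consider linear blend skinning, a common way such weights are applied. The article does not say which skinning scheme MagicArticulate targets, so treat this as an assumption. In the 2D sketch below, the hypothetical helpers `rotation` and `blend_skin` move each vertex to the weighted average of the positions each bone's transform would give it.

```python
import math


def rotation(angle_deg, pivot):
    """Return a function that rotates a 2D point about `pivot`."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    px, py = pivot

    def apply(point):
        x, y = point[0] - px, point[1] - py
        return (px + c * x - s * y, py + s * x + c * y)

    return apply


def blend_skin(vertices, weights, bone_transforms):
    """Linear blend skinning in 2D (illustrative sketch).

    `weights[i][j]` is the influence of bone j on vertex i; each row
    must sum to 1. Each vertex moves to the weighted average of the
    positions produced by every bone's transform.
    """
    deformed = []
    for vertex, vertex_weights in zip(vertices, weights):
        if abs(sum(vertex_weights) - 1.0) > 1e-6:
            raise ValueError("weights for each vertex must sum to 1")
        x = y = 0.0
        for w, transform in zip(vertex_weights, bone_transforms):
            tx, ty = transform(vertex)
            x += w * tx
            y += w * ty
        deformed.append((x, y))
    return deformed


if __name__ == "__main__":
    # Upper arm stays still; forearm bends 90 degrees at the elbow (1, 0).
    bones = [rotation(0, (0, 0)), rotation(90, (1, 0))]
    arm = [(0.5, 0.0), (1.0, 0.0), (2.0, 0.0)]
    arm_weights = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
    for point in blend_skin(arm, arm_weights, bones):
        print(tuple(round(value, 6) for value in point))
```

Vertices weighted entirely to one bone follow it rigidly, while vertices near a joint share weights between bones and deform smoothly. This is the behaviour to look for when testing walking or rotating motions.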

Application Scenarios and Operation Examples

Rapid Prototyping

- Scenario: Game designers need to quickly validate character animations.

- Operation: Upload a character model (OBJ format), run bone generation and weight prediction, generate a walking animation prototype in about 5 minutes, and import it into Unity for testing.

VR/AR animation generation

- Scenario: Generating interaction actions for virtual robots.

- Operation: Input the robot model, generate bones and weights, export to a development tool used for VR work (e.g. Unreal Engine), and bind user interaction commands.

Film and TV animation assistance

- Scenario: Generating initial animations for fantasy creatures.

- Operation: Upload the creature model, run the framework to generate bones and weights, import into Maya for keyframe fine-tuning, and generate a preview animation.

Tips for use

- Model Preparation: Ensure that the input model is a single object to avoid complex multiple components affecting the results.

- Performance optimization: Running on a GPU can be substantially faster; running on a CPU alone may be slow.

- Adjustment of results: If the bones or weights are not ideal, the parameters (e.g., number of joints) can be modified, as described in the documentation.

- Question Feedback: Submit an issue at GitHub Issues or contact developer Chaoyue Song for support.
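The model preparation tip can be checked before running anything. In the Wavefront OBJ format, lines starting with `o` name objects and lines starting with `g` name groups, so counting them gives a rough indication of whether a file contains more than one component. The sketch below uses a hypothetical helper, `count_obj_objects`; it only counts declared names and does not detect disconnected geometry that shares a single object.

```python
def count_obj_objects(obj_text):
    """Return (object_count, group_count) declared in OBJ text.

    Counts distinct names on 'o' and 'g' statements. This is a rough
    pre-flight check only: a file with no declarations, or with one
    object made of several disconnected pieces, is not detected.
    """
    objects, groups = set(), set()
    for line in obj_text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "o":
            objects.add(" ".join(parts[1:]))
        elif parts[0] == "g":
            groups.add(" ".join(parts[1:]))
    return len(objects), len(groups)


if __name__ == "__main__":
    sample = """\
o body
v 0 0 0
v 1 0 0
v 0 1 0
f 1 2 3
o sword
v 2 0 0
v 3 0 0
v 2 1 0
f 4 5 6
"""
    object_count, group_count = count_obj_objects(sample)
    if object_count > 1 or group_count > 1:
        print("Multiple components found; consider merging them first.")
```

If the check reports several objects, joining them into one mesh in Blender or Maya before running the framework follows the tip above.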

With these steps, MagicArticulate can help you quickly convert static 3D models into animated assets for all types of users from beginners to professional designers.

© Copyright notes

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
