LongCat-Flash-Omni - An Open-Source Omni-Modal Large Language Model from Meituan

What is LongCat-Flash-Omni?

LongCat-Flash-Omni is an open-source omni-modal large language model released by Meituan's LongCat team. It has 560 billion total parameters, of which 27 billion are activated, and delivers millisecond-level real-time audio and video interaction despite its size. The model builds on the efficient architecture of the LongCat-Flash series and adds a multimodal perception module and a speech reconstruction module, supporting text, image, and video comprehension as well as speech perception and generation. LongCat-Flash-Omni reaches open-source state-of-the-art (SOTA) results on omni-modal benchmarks and is also at the top open-source level on key unimodal tasks in text, image, audio, and video. It is trained with a progressive early multimodal fusion strategy that gradually incorporates data from different modalities, so that strong omni-modal performance does not come at the cost of unimodal performance. The model supports a 128K-token context window and more than 8 minutes of audio/video interaction, with multimodal long-term memory and multi-turn dialogue.
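To put the two parameter counts in perspective, here is a rough back-of-the-envelope sketch in Python. The parameter figures come from the description above; the 2-bytes-per-parameter storage format (bf16/fp16) is an assumption made only for illustration, and the result covers the weights alone, not activations or caches.

```python
# Back-of-the-envelope figures derived from the parameter counts quoted above.
TOTAL_PARAMS = 560e9   # total parameters (from the article)
ACTIVE_PARAMS = 27e9   # activated parameters (from the article)

# Assumption: weights are stored in bf16/fp16, i.e. 2 bytes per parameter.
BYTES_PER_PARAM = 2


def active_fraction(active: float, total: float) -> float:
    """Share of all parameters that are activated."""
    return active / total


def weight_memory_gb(params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Memory needed to hold the weights alone, in decimal gigabytes."""
    return params * bytes_per_param / 1e9


if __name__ == "__main__":
    print(f"active fraction: {active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS):.1%}")
    print(f"all weights:     {weight_memory_gb(TOTAL_PARAMS):,.0f} GB")
    print(f"active weights:  {weight_memory_gb(ACTIVE_PARAMS):,.0f} GB")
```

Under these assumptions, roughly 4.8% of the parameters are activated, which helps explain how a model of this size can still target low-latency interaction.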

Features of LongCat-Flash-Omni

- Multimodal interaction capabilities: It supports text, image, and video comprehension as well as speech perception and generation, enabling multimodal interaction in complex scenarios.

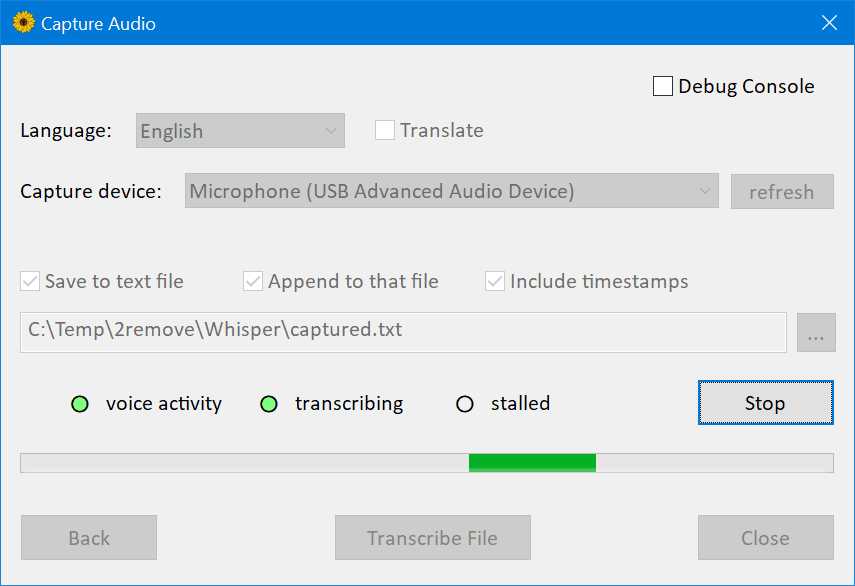

- Real-time audio and video interaction: It offers millisecond-level real-time audio and video interaction, supports a 128K-token context window and more than 8 minutes of audio/video interaction, and provides multimodal long-term memory and multi-turn dialogue.

- Efficient architecture design: Built on the efficient architecture of the LongCat-Flash series, it adds a multimodal perception module and a speech reconstruction module. With 560 billion total parameters (27 billion activated), it achieves low-latency interaction despite its size.

- Progressive multimodal fusion training: A progressive early multimodal fusion training strategy gradually incorporates data from different modalities, ensuring strong omni-modal performance without degrading unimodal performance.

- Open source and community support: It is open-sourced on Hugging Face and GitHub for developers to explore and use freely, and real-time interaction is available through both a web experience and a mobile app.

Core Benefits of LongCat-Flash-Omni

- Full modal coverage: It supports text, image, video, and speech, and is the first open-source large language model to achieve full modal coverage.

- Low-latency interaction: Even at 560 billion parameters, it delivers millisecond-level real-time audio and video interaction, addressing the inference-latency pain point of large models.

- Strong unimodal performance: It is highly competitive on key unimodal tasks in text, image, audio, and video, reaching the open-source state-of-the-art (SOTA) level on each.

- End-to-end architecture: A fully end-to-end design, from multimodal perception through to text and speech generation, improves overall efficiency and performance.

- Effective training strategy: The progressive early multimodal fusion training strategy gradually incorporates data from different modalities, ensuring strong omni-modal performance without degrading unimodal performance.
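The idea of adding modalities progressively can be sketched as a simple cumulative schedule. This is only an illustration: the stage names and the order in which modalities are introduced below are hypothetical and are not taken from the article or the model's training recipe.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str              # hypothetical stage label
    new_modalities: tuple  # modalities first introduced at this stage


# Hypothetical ordering, for illustration only; the article does not list the stages.
STAGES = (
    Stage("stage-0", ("text",)),
    Stage("stage-1", ("speech",)),
    Stage("stage-2", ("image",)),
    Stage("stage-3", ("video",)),
)


def active_modalities(stages, upto):
    """Return every modality seen in stages[0..upto], in order of introduction.

    Earlier modalities stay in the mix: new data is added without dropping
    what the model was already trained on.
    """
    seen = []
    for stage in stages[: upto + 1]:
        for modality in stage.new_modalities:
            if modality not in seen:
                seen.append(modality)
    return seen


if __name__ == "__main__":
    for index, stage in enumerate(STAGES):
        print(stage.name, "->", active_modalities(STAGES, index))
```

Keeping earlier modalities in every later stage is what distinguishes a progressive schedule from training on each modality in isolation, and it is one plausible way to avoid unimodal regressions.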

What are the official links for LongCat-Flash-Omni?

- GitHub repository: https://github.com/meituan-longcat/LongCat-Flash-Omni

- Hugging Face model page: https://huggingface.co/meituan-longcat/LongCat-Flash-Omni

- Technical report: https://github.com/meituan-longcat/LongCat-Flash-Omni/blob/main/tech_report.pdf

Who is LongCat-Flash-Omni for?

- AI developers: They can use its multimodal capabilities to build applications such as intelligent assistants and content creation tools.

- Researchers: They can use it in multimodal research to study model performance and optimization directions across different modal tasks.

- Enterprise technical teams: They can integrate it into products such as customer service systems and smart office tools to improve the user experience.

- Educators: They can use it to build educational tools, such as intelligent tutoring systems that support multimodal teaching resources.

- Content creators: They can use it to assist with creative work involving text, images, and video, making creation more efficient.

- Technology enthusiasts: Those interested in the latest AI technology can try it out and explore practical applications of large multimodal models.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
