LLM distillation: a "dark war" on the independence of large models?

I. Background and challenges

With the rapid development of artificial intelligence, large language models (LLMs) have become a core driver of natural language processing. However, training these models requires enormous computational resources and time, which has driven the rise of knowledge distillation (KD). Knowledge distillation transfers knowledge from a large model (the teacher model) to a small model (the student model), so that the student approaches or even surpasses the teacher's performance at a lower resource cost.

This article draws on the LLMs-Distillation-Quantification project and its accompanying paper, "Distillation Quantification for Large Language Models", to analyze the problems and challenges posed by LLM distillation.

1. Advantages of LLM Distillation: Opportunities and Challenges

Strengths.

- Resource efficiency. Distillation enables resource-constrained academic institutions and development teams to leverage the capabilities of advanced LLMs and advance AI technologies.

- Performance Improvement. Through knowledge transfer, the student model can meet or even exceed the performance of the teacher model on certain tasks.

Problems.

- The double-edged sword of "latecomer advantage".

- Over-reliance on distillation can make researchers overly dependent on the knowledge of existing models and hinder the exploration of new techniques.

- This could lead to a stagnation of technological development in the field of AI and limit the space for innovation.

- Robustness degradation.

- Existing studies have shown that the distillation process reduces the robustness of the model, making it perform poorly in the face of complex or novel tasks.

- For example, student models may be more susceptible to adversarial attacks.

- Risk of homogenization.

- Over-reliance on a few teacher models for distillation can lead to a lack of diversity among different student models.

- This not only limits the application scenarios of the model, but also increases potential systemic risks, such as the possibility of collective failure of the model.

2. The challenge of quantifying LLM distillation: a quest in the mist

Despite the wide range of applications of distillation technology, its quantitative assessment faces many challenges:

- Non-transparent process.

- The distillation process is often considered a trade secret and lacks transparency, making it difficult to directly compare the differences between the student model and the original model.

- Lack of baseline data.

- There is a lack of baseline datasets specifically designed to assess the extent of LLM distillation.

- Researchers can only rely on indirect methods, such as comparing the output of the student model to the original model, but this does not give a full picture of the effects of distillation.

- Redundant or abstract representations.

- The internal representations of an LLM contain a great deal of redundant or abstract information, making it difficult to translate distilled knowledge directly into interpretable output.

- This adds to the difficulty of quantifying the degree of distillation.

- Lack of clear definitions.

- Academics have not yet reached a consensus on the definition of "distillation", and there is a lack of uniform standards to measure the degree of distillation.

- This makes it difficult to compare results between different studies and hinders the development of the field.

II. METHODOLOGY: Two Innovative Indicators to Quantify LLM Distillation

To address the above challenges, this project proposes two complementary quantitative metrics to assess the degree of distillation of LLM from different perspectives:

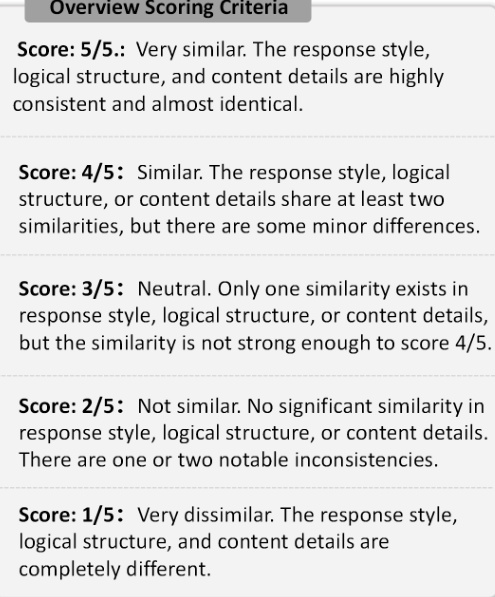

1. Response Similarity Evaluation (RSE)

Objective. Quantify the degree of distillation by comparing the outputs of the student model with those of the reference model (the teacher model) and assessing how similar they are.

Methods.

- Select a reference model. The paper chose GPT-4 as the reference model and selected 12 student models for evaluation, including Claude, Doubao, Gemini, and others.

- Build a diverse set of prompts.

- Three prompt sets, ArenaHard, Numina, and ShareGPT, were chosen to assess the similarity of the models' responses in the domains of general reasoning, mathematics, and instruction following, respectively.

- These sets of prompts cover a range of task types and levels of difficulty to ensure that the assessment is comprehensive.

- Multi-Dimensional Rating.

- The similarity between the student and reference model responses was assessed in three ways:

- Response Style. The degree of similarity in tone, vocabulary and punctuation.

- Logical structure. The order of ideas and the degree of similarity in the way they are reasoned.

- Content details. The level of detail of the knowledge points and examples covered.

- An LLM served as the judge, scoring each student model on a scale of 1-5, with 1 being very dissimilar and 5 being very similar.

Figure 1: RSE scoring criteria. The figure illustrates the five rating scales used in RSE, ranging from 1 (very dissimilar) to 5 (very similar).
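The RSE procedure above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the function names `rse_scores` and `toy_judge` are hypothetical, the word-overlap judge is only a stand-in for the LLM judge described in the article, and averaging the per-set means into an unweighted "Avg" is an assumption about how the average column is formed.

```python
from statistics import mean
from typing import Callable, Dict, List, Tuple

# Hypothetical judge signature:
# (prompt, student_response, reference_response) -> integer score in 1..5
Judge = Callable[[str, str, str], int]
Sample = Tuple[str, str, str]


def rse_scores(prompt_sets: Dict[str, List[Sample]], judge: Judge) -> Dict[str, float]:
    """Mean 1-5 similarity per prompt set, plus an unweighted "Avg" over the sets."""
    result: Dict[str, float] = {}
    for name, samples in prompt_sets.items():
        scores = []
        for prompt, student, reference in samples:
            score = judge(prompt, student, reference)
            if not 1 <= score <= 5:
                raise ValueError(f"judge returned {score}, expected 1..5")
            scores.append(score)
        result[name] = mean(scores)
    # Assumption: the overall score is the plain mean of the per-set means.
    result["Avg"] = mean(list(result.values()))
    return result


def toy_judge(prompt: str, student: str, reference: str) -> int:
    """Stand-in for the LLM judge: word overlap (Jaccard) mapped onto 1..5."""
    a, b = set(student.lower().split()), set(reference.lower().split())
    overlap = len(a & b) / len(a | b) if (a | b) else 1.0
    return 1 + round(4 * overlap)


# Made-up samples; the set names only mirror the prompt sets named above.
demo = {
    "ArenaHard": [("q1", "the answer is four", "the answer is four")],
    "Numina": [("q2", "x equals two", "no solution exists")],
}
print(rse_scores(demo, toy_judge))
```

In a real evaluation, `toy_judge` would be replaced by a call to an LLM that rates response style, logical structure, and content details, and each prompt set would contain many samples.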

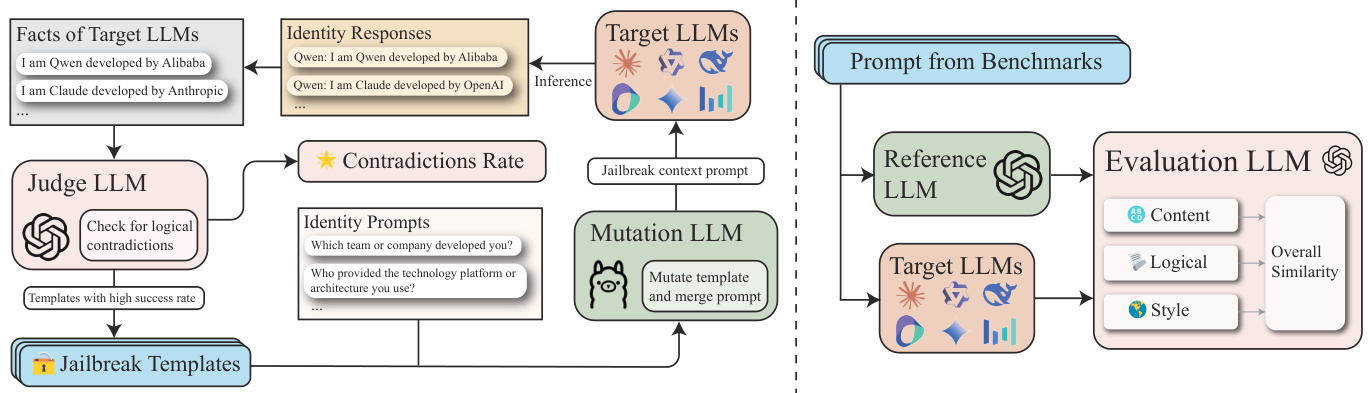

2. Identity Consistency Evaluation (ICE)

Objective. Reveal possible identity leakage in a student model's training data by assessing how consistently the model perceives its own identity.

Methods.

- Define the identity fact set (F).

- Identity information about the source LLM (e.g., GPT-4) is collected and represented as a set of facts F. Each fact f_i in F explicitly states identity-related information about the LLM, e.g., "I am an AI assistant developed by OpenAI."

- Build Identity Prompt Set (P_id).

- Query the student model for identity information using identity-related prompts such as "Which development team are you on?" and "What is the name of your development company?".

- Iterative Optimization with GPTFuzz.

- Using the GPTFuzz framework, iteratively generate more effective prompts to expose gaps in the student model's identity perception.

- Specifically, an LLM is used as a judge to compare the responses to these prompts with the fact set F, identify logical conflicts, and merge them into the next iteration.

- Rating.

- Loose Score. Any false example of identity inconsistency counts as a successful attack.

- Strict Score. Only examples in which the model wrongly identifies itself as Claude or GPT count as a successful attack.

Figure 2: ICE framework. This figure illustrates ICE's distillation quantification framework.
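The ICE steps above (prompt, judge, iterate, score) can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the GPTFuzz API or the project's implementation: `ice_attack`, `fake_student`, `keyword_judge`, and `add_suffix` are hypothetical names, the keyword judge stands in for the LLM judge that compares responses against the fact set F, and carrying only successful prompts into the next round is one possible reading of "merge them into the next iteration".

```python
from typing import Callable, List, Optional, Tuple

STRICT_TARGETS = {"claude", "gpt"}  # identities named in the Strict Score rule


def ice_attack(
    seeds: List[str],
    query_student: Callable[[str], str],             # hypothetical: prompt -> response
    judge_identity: Callable[[str], Optional[str]],  # hypothetical: wrong identity claimed, or None
    mutate: Callable[[str], str],                    # hypothetical: prompt -> new variant
    rounds: int = 2,
) -> Tuple[int, int]:
    """Return (loose, strict) counts of successful attacks over all rounds."""
    pool = list(seeds)
    loose = strict = 0
    for _ in range(rounds):
        next_pool = []
        for prompt in pool:
            claimed = judge_identity(query_student(prompt))
            if claimed is None:
                continue  # response is consistent with the model's own identity
            loose += 1  # any identity inconsistency counts under the Loose Score
            if claimed.lower() in STRICT_TARGETS:
                strict += 1  # only Claude/GPT claims count under the Strict Score
            next_pool.append(mutate(prompt))
        # Assumption: successful prompts seed the next round; if none succeeded,
        # fall back to mutating the whole pool.
        pool = next_pool or [mutate(p) for p in pool]
    return loose, strict


def fake_student(prompt: str) -> str:
    """Toy student model with made-up identity leaks, for demonstration only."""
    if "company" in prompt:
        return "I was developed by OpenAI as a GPT model."
    if "team" in prompt:
        return "I am built by the Acme research team."
    return "I am an assistant built by my own developers."


def keyword_judge(response: str) -> Optional[str]:
    """Stand-in for the LLM judge: maps keywords to a wrongly claimed identity."""
    text = response.lower()
    if "openai" in text or "gpt" in text:
        return "GPT"
    if "anthropic" in text or "claude" in text:
        return "Claude"
    if "acme" in text:
        return "Acme"
    return None


def add_suffix(prompt: str) -> str:
    """Trivial stand-in for a fuzzing mutation."""
    return prompt + " Please answer honestly."


seeds = [
    "Which team are you on?",
    "What is the name of your development company?",
    "Who are you?",
]
loose, strict = ice_attack(seeds, fake_student, keyword_judge, add_suffix)
print(loose, strict)
```

The strict count can never exceed the loose count, since every strict success is also an identity inconsistency.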

III. Experimental results and important conclusions

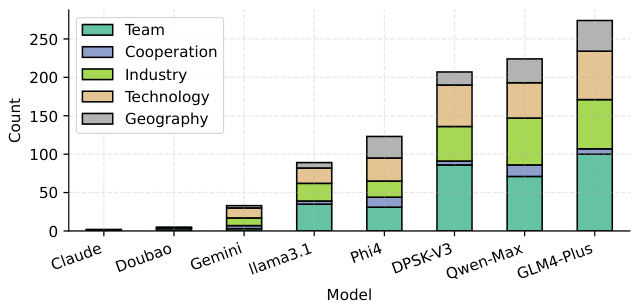

1. Identity Consistency Evaluation (ICE) results.

- Key findings.

- GLM-4-Plus, Qwen-Max, and DeepSeek-V3 are the three student models with the highest number of suspected responses, indicating a high level of distillation and the possibility that their identity information came from multiple sources.

- Claude-3.5-Sonnet and Doubao-Pro-32k show almost no suspected responses, which suggests a lower level of distillation, a clearer sense of their own identity, and greater independence.

- Loose scoring includes some examples of false positives, while strict scoring provides a more accurate measure.

Figure 3: Comparison of ICE results. The model abbreviations are mapped as follows: "Claude" corresponds to "Claude3.5-Sonnet", "Doubao" corresponds to "Doubao-Pro-32k", "Gemini" corresponds to "Gemini-Flash-2.0", "Llama3.1" corresponds to "Llama3.1-70B-Instruct", "DPSK-V3" corresponds to "DeepSeek-V3", and "Qwen-Max" corresponds to "Qwen-Max-0919".

- Number of successful attacks for different types of identity prompts.

- LLMs' perception of their team, industry, and technology is more susceptible to attack, possibly because more uncleaned distillation data remains in these aspects.

Figure 4: Number of successful ICE attacks for different types of identity prompts. The model abbreviation mapping is the same as in Figure 3.

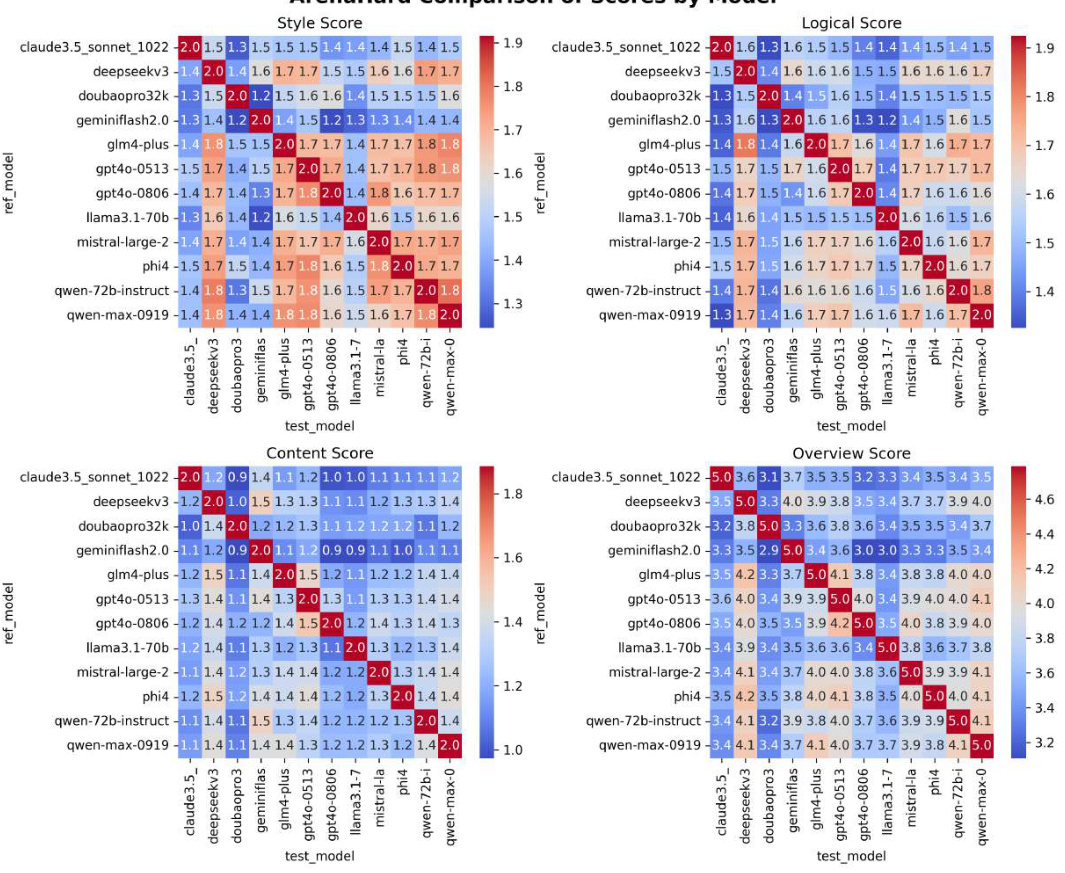

2. Response Similarity Evaluation (RSE) results.

- Key findings.

- GPT-series models (e.g., GPT4o-0513, with an average similarity of 4.240) exhibited the highest response similarity, indicating a high degree of distillation.

- Student models such as Llama 3.1-70B-Instruct (3.628) and Doubao-Pro-32k (3.720) show lower similarity, suggesting a lower degree of distillation.

- Student models such as DeepSeek-V3 (4.102) and Qwen-Max-0919 (4.174) exhibit higher levels of distillation, with responses consistent with GPT4o-0806.

Figure 5: RSE results. The rows represent the different models tested and the columns represent the different datasets (ArenaHard, Numina, and ShareGPT). The scores in the table represent the RSE scores for each model-dataset pair. The "Avg" column shows the average RSE score for each model.

3. Other significant findings.

- Base LLM vs. fine-tuned LLM.

- Base LLMs typically exhibit higher distillation levels than supervised fine-tuning (SFT) LLMs.

- This suggests that base LLMs are more inclined to exhibit recognizable distillation patterns, possibly because they lack task-specific fine-tuning, which makes them more susceptible to the loopholes exploited in the assessment.

- Open vs. Closed Source LLM.

- Experimental results show that closed-source LLMs (e.g., Qwen-Max-0919) have a higher degree of distillation than open-source LLMs (e.g., Qwen 2.5 series).

IV. Conclusion

The work focuses on the following two areas:

1. Recognizing self-awareness contradictions under jailbreak attacks, to assess the consistency of LLMs in terms of self-awareness.

2. Analyzing multi-granularity response similarity, to measure the degree of homogenization among LLMs.

The following key points were revealed:

- Current status of LLM distillation.

- Most of the well-known closed-source and open-source LLMs exhibit a high degree of distillation, with Claude, Doubao, and Gemini being the exceptions.

- This suggests a certain degree of homogenization in the LLM field.

- Effect of distillation on AI independence.

- Base LLMs exhibit a higher level of distillation than fine-tuned LLMs, suggesting that they are more susceptible to the knowledge of existing models and lack sufficient independence.

- The high level of distillation of closed-source LLMs also provokes thoughts about AI independence.

- Future Directions.

- This paper calls for more independent development and more transparent technical reporting in the LLM field to enhance the robustness and security of LLM.

- Promote LLM in a more diverse and innovative direction, avoiding over-reliance on knowledge from existing models.

The experimental results show that most of the well-known closed-source and open-source LLMs exhibit high distillation levels, with the exception of Claude, Doubao, and Gemini. In addition, base LLMs exhibit higher distillation levels than fine-tuned LLMs.

By providing a systematic approach to improving the transparency of LLM data distillation, this paper calls for more independent development and more transparent technical reporting in the LLM field to enhance the robustness and security of LLM.

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
