Llama 3: A Versatile, Open Source Family of AI Models

Abstract

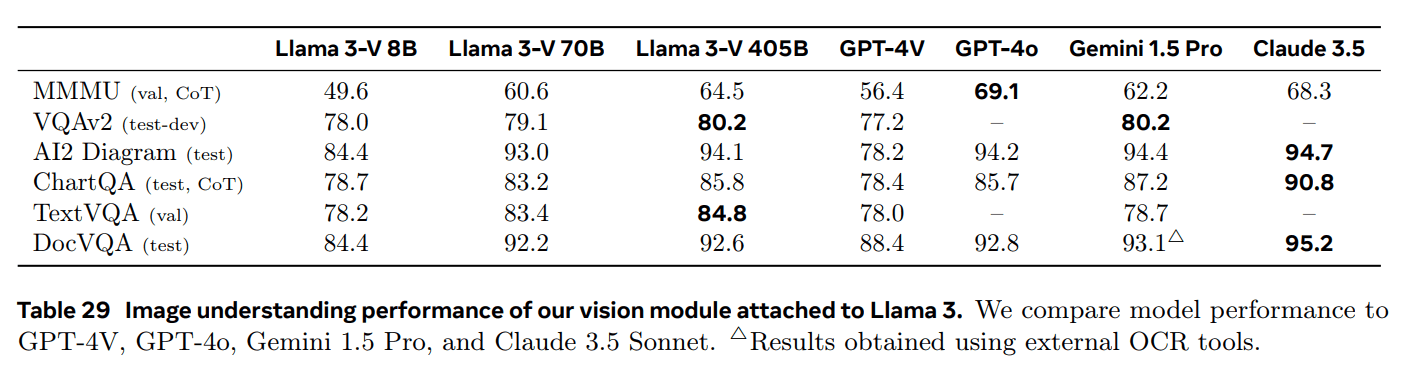

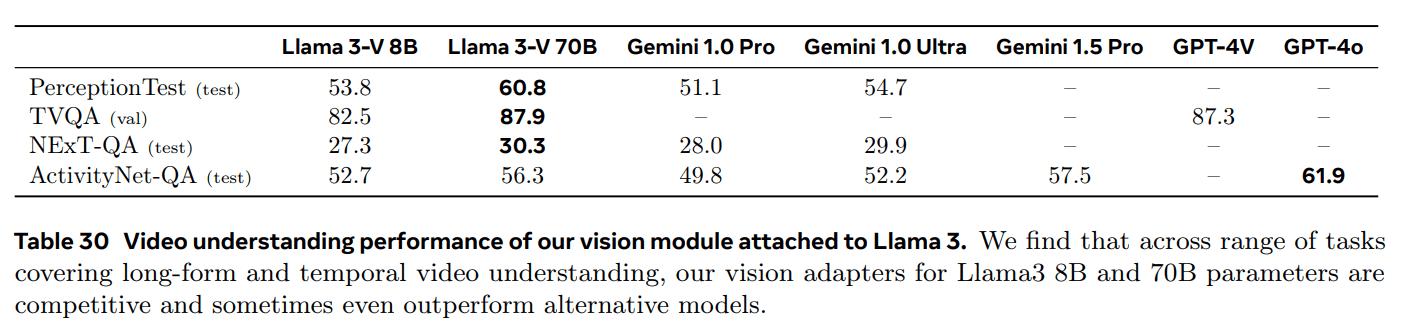

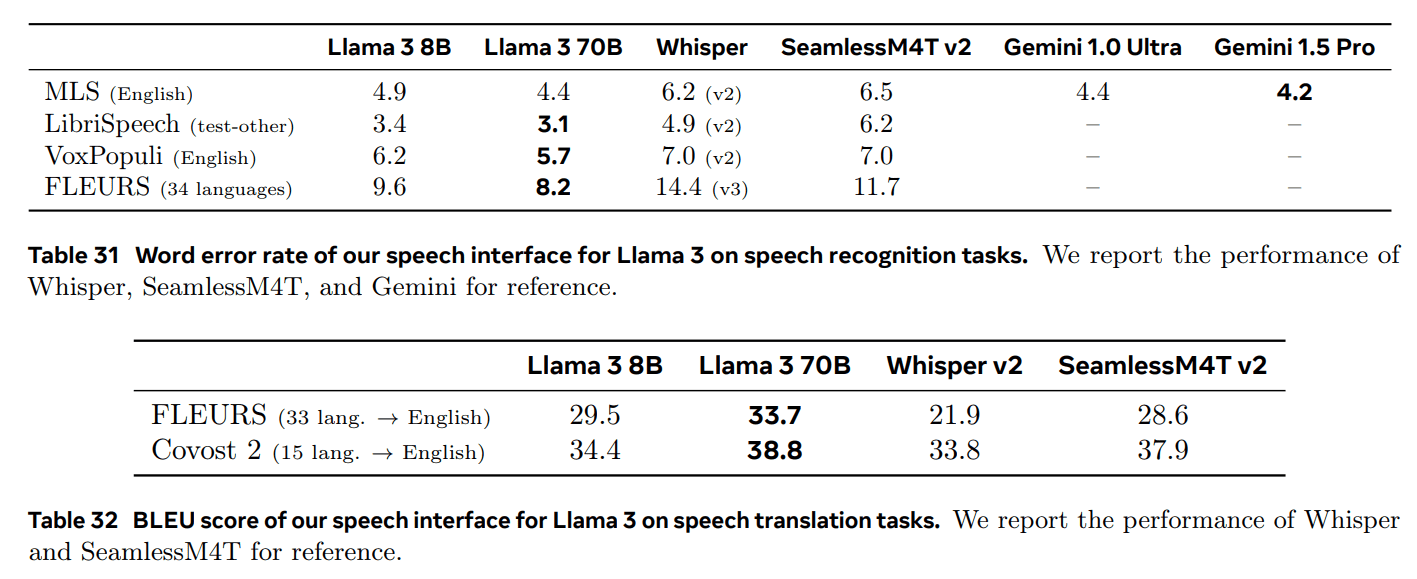

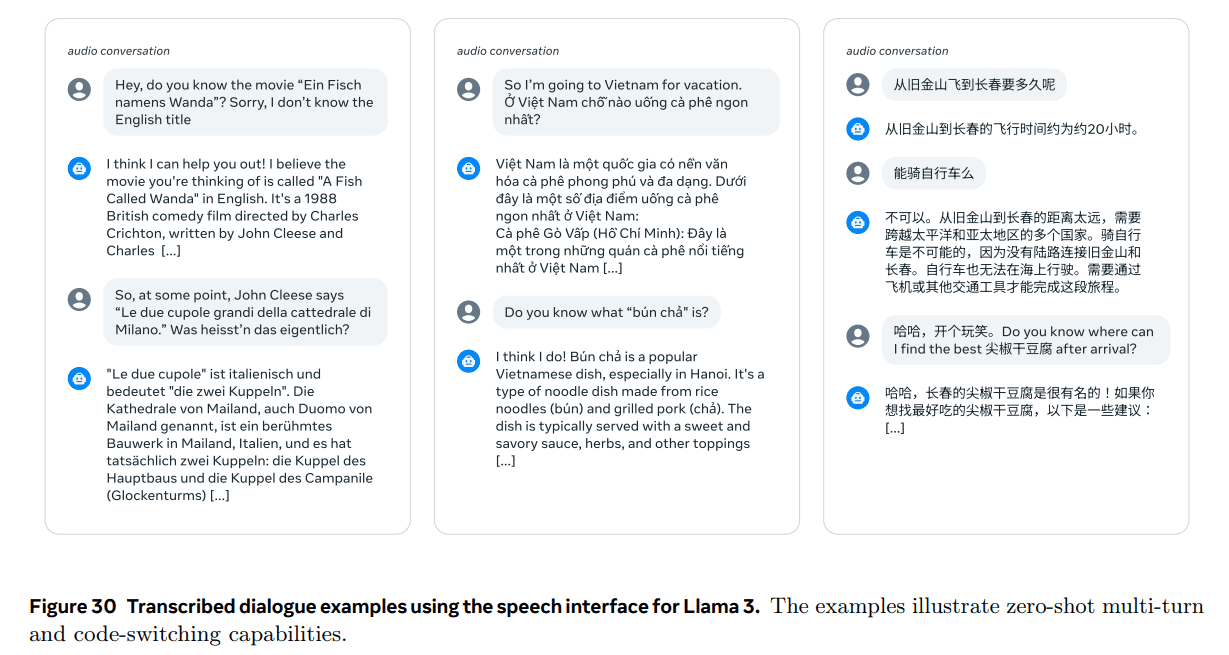

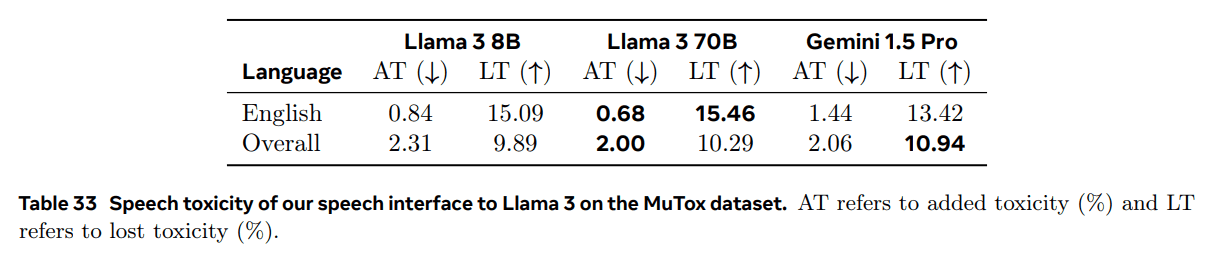

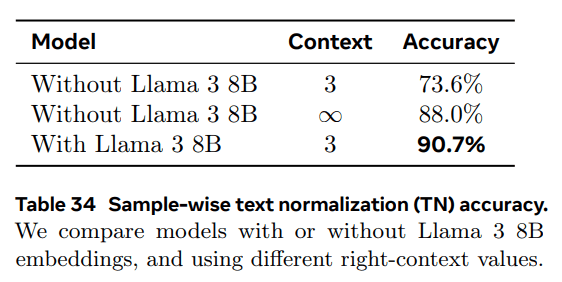

This paper presents a new set of foundation models called Llama 3. Llama 3 is a herd of language models that natively support multilinguality, coding, reasoning, and tool usage. Our largest model is a dense Transformer with 405 billion parameters and a context window of up to 128,000 tokens. In this paper, we present an extensive empirical evaluation of Llama 3. The results show that Llama 3 achieves quality comparable to leading language models such as GPT-4 on many tasks. We publicly release Llama 3, including pre-trained and post-trained versions of the 405 billion parameter language model, as well as the Llama Guard 3 model for input and output safety. This paper also presents experimental results on integrating image, video, and speech capabilities into Llama 3 through a compositional approach. We observe that this approach performs competitively with the state of the art on image, video, and speech recognition tasks. Because these models are still under development, they are not yet being broadly released.


1 Introduction

Foundation models are general models of language, vision, speech, and other modalities that are designed to support a wide variety of AI tasks. They form the basis of many modern AI systems.

The development of modern foundation models consists of two main stages:

(1) Pre-training stage. Models are trained at massive scale using simple tasks such as next-word prediction or captioning;

(2) Post-training phase. Models are fine-tuned to follow instructions, align with human preferences, and improve specific capabilities (e.g., coding and reasoning).

This paper presents a new set of foundation language models called Llama 3. The Llama 3 herd of models natively supports multilinguality, coding, reasoning, and tool usage. Our largest model is a dense Transformer with 405B parameters that can process information in context windows of up to 128K tokens.

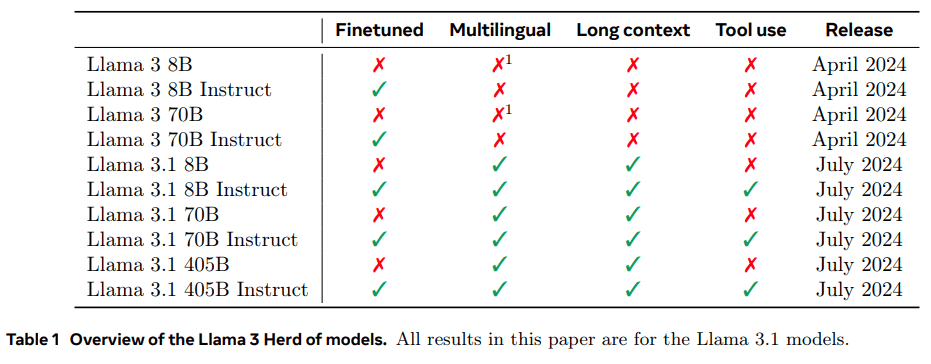

Table 1 lists each member of the herd. All results presented in this paper are for the Llama 3.1 models, which we refer to as Llama 3 for short.

We believe there are three key levers in the development of high-quality foundation models: data, scale, and managing complexity. We seek to optimize all three during our development process:

- Data. Compared to prior versions of Llama (Touvron et al., 2023a, b), we improved both the quantity and the quality of the data we use for pre-training and post-training. These improvements include the development of more careful pre-processing and curation pipelines for pre-training data and the development of more rigorous quality assurance and filtering approaches for post-training data. We pre-train Llama 3 on a corpus of about 15T multilingual tokens, compared to 1.8T tokens for Llama 2.

- Scale. We train a model at far larger scale than previous Llama models: our flagship language model was pre-trained using 3.8 × 10^25 FLOPs, almost 50 times more than the largest version of Llama 2. Specifically, we pre-trained a flagship model with 405B trainable parameters on 15.6T text tokens. As expected, the

- Managing complexity. We make design choices that seek to maximize our ability to scale the model development process. For example, we opt for a standard dense Transformer model architecture (Vaswani et al., 2017) with minor adaptations, rather than a mixture-of-experts model (Shazeer et al., 2017), to maximize training stability. Similarly, we adopt a relatively simple post-training procedure based on supervised fine-tuning (SFT), rejection sampling (RS), and direct preference optimization (DPO; Rafailov et al. (2023)), as opposed to more complex reinforcement learning algorithms (Ouyang et al., 2022; Schulman et al., 2017) that tend to be less stable and harder to scale.
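DPO replaces a separate reward model and RL loop with a classification-style loss over preference pairs. The sketch below computes the standard per-pair DPO loss of Rafailov et al.; it assumes the summed log-probabilities of the chosen and rejected responses have already been computed under the trainable policy and a frozen reference model. The value `beta = 0.1` is an illustrative assumption, not a setting stated in this section.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Per-pair DPO loss: -log sigmoid(beta * (chosen log-ratio - rejected log-ratio)).

    Each *_logp argument is the summed log-probability of a full response
    under either the trainable policy or the frozen reference model.
    beta = 0.1 is an assumed default for illustration.
    """
    chosen_log_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_log_ratio = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_log_ratio - rejected_log_ratio)
    # -log(sigmoid(z)) is exactly softplus(-z)
    return softplus(-logits)


# Policy identical to the reference: the loss equals log(2).
print(dpo_loss(-10.0, -10.0, -10.0, -10.0))
# Policy shifts probability toward the chosen response: the loss drops below log(2).
print(dpo_loss(-5.0, -15.0, -10.0, -10.0))
```

Because the loss depends only on log-probability ratios against the reference model, no reward model or on-policy sampling is needed during this step, which is part of why it is easier to scale than PPO-style RL.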

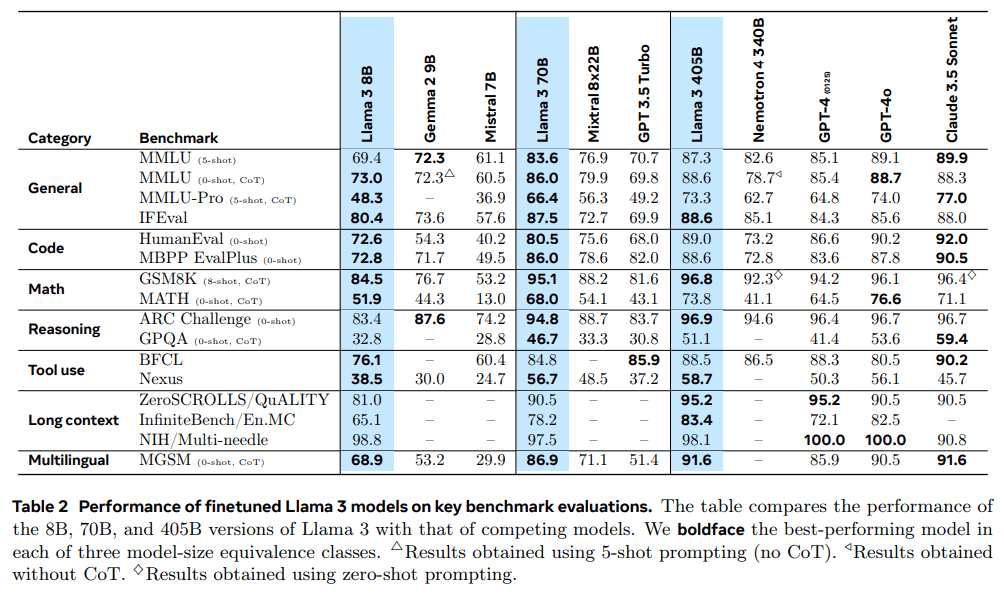

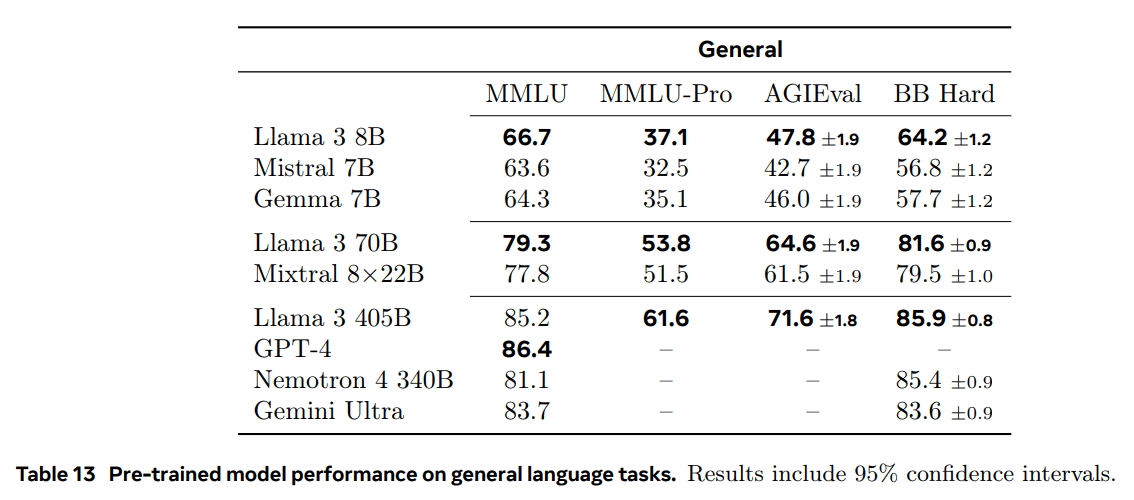

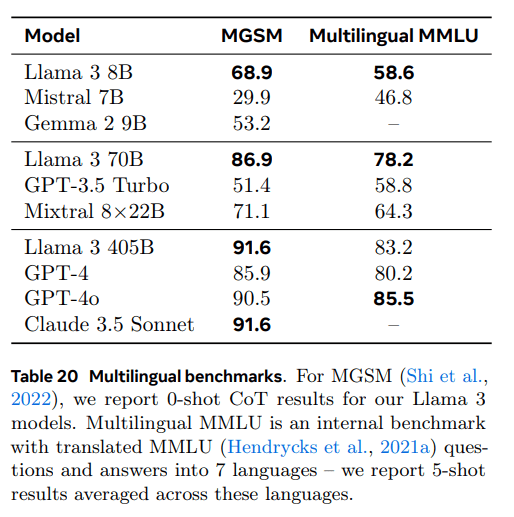

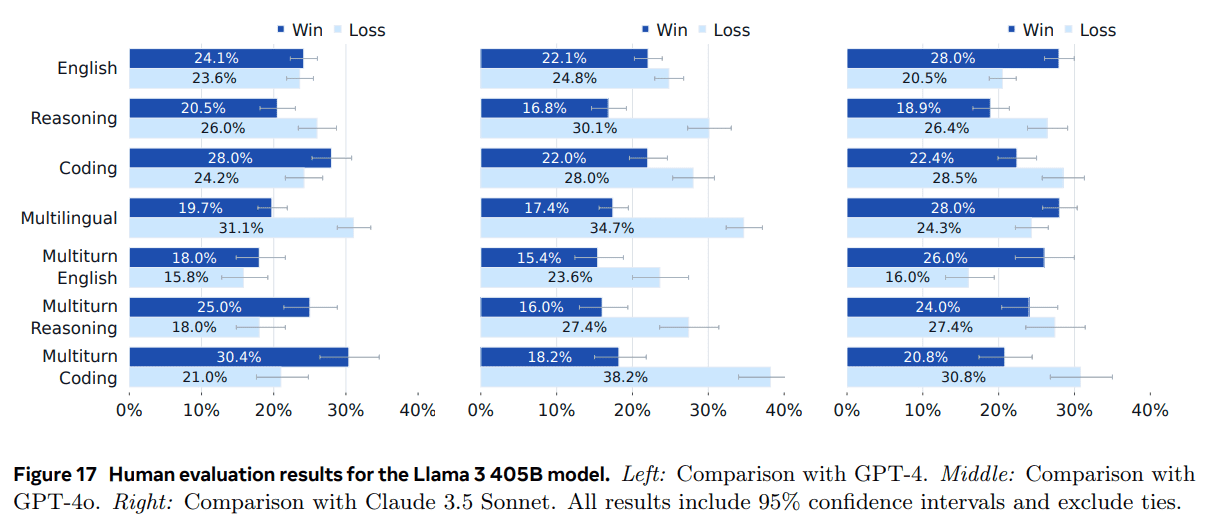

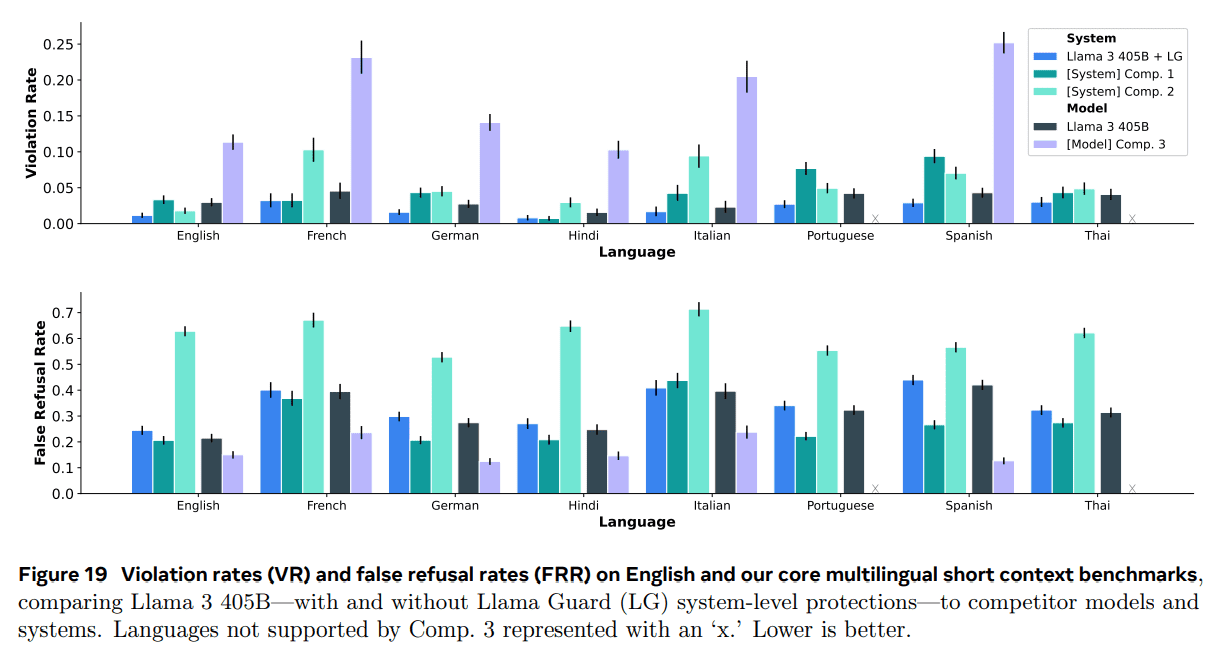

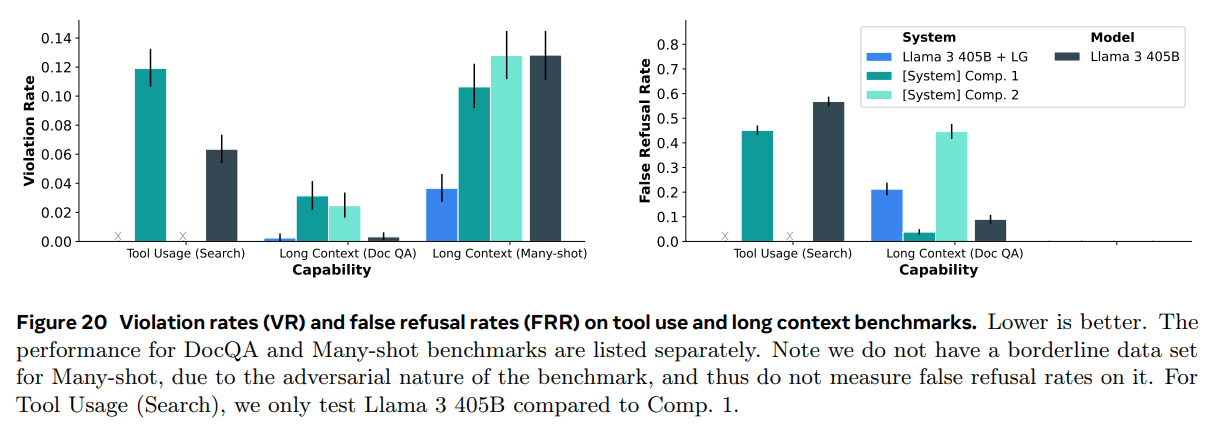

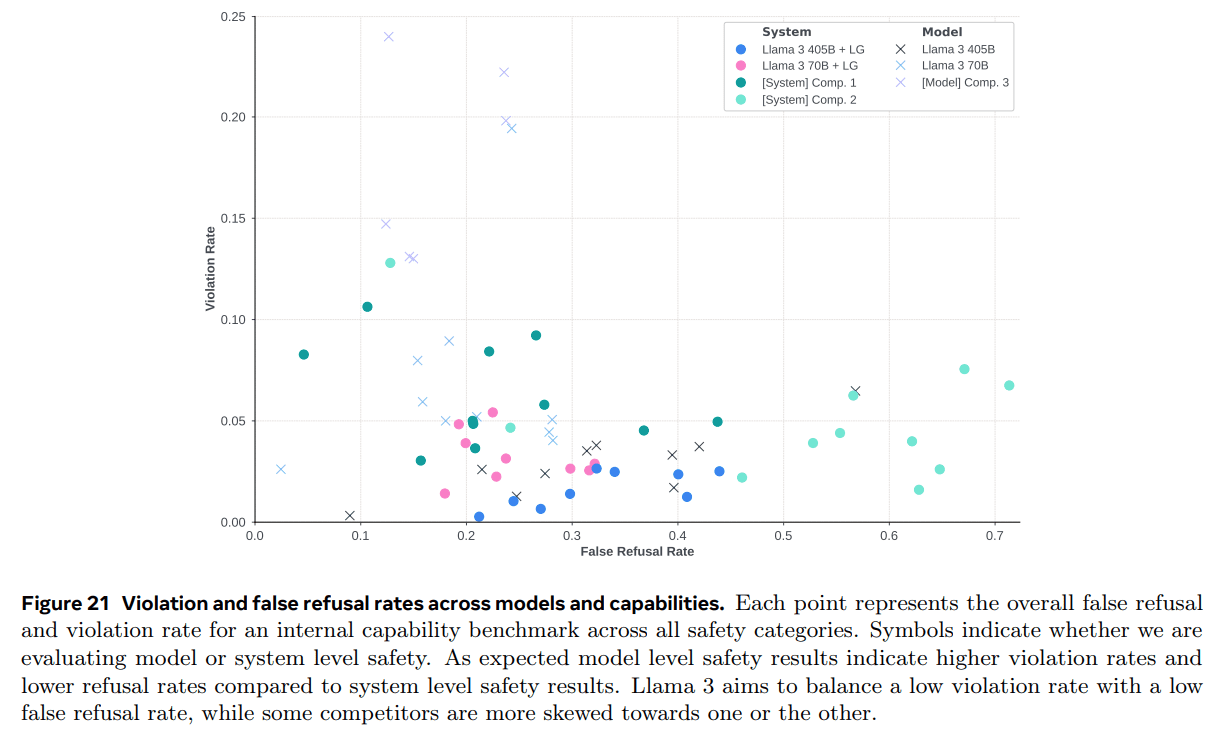

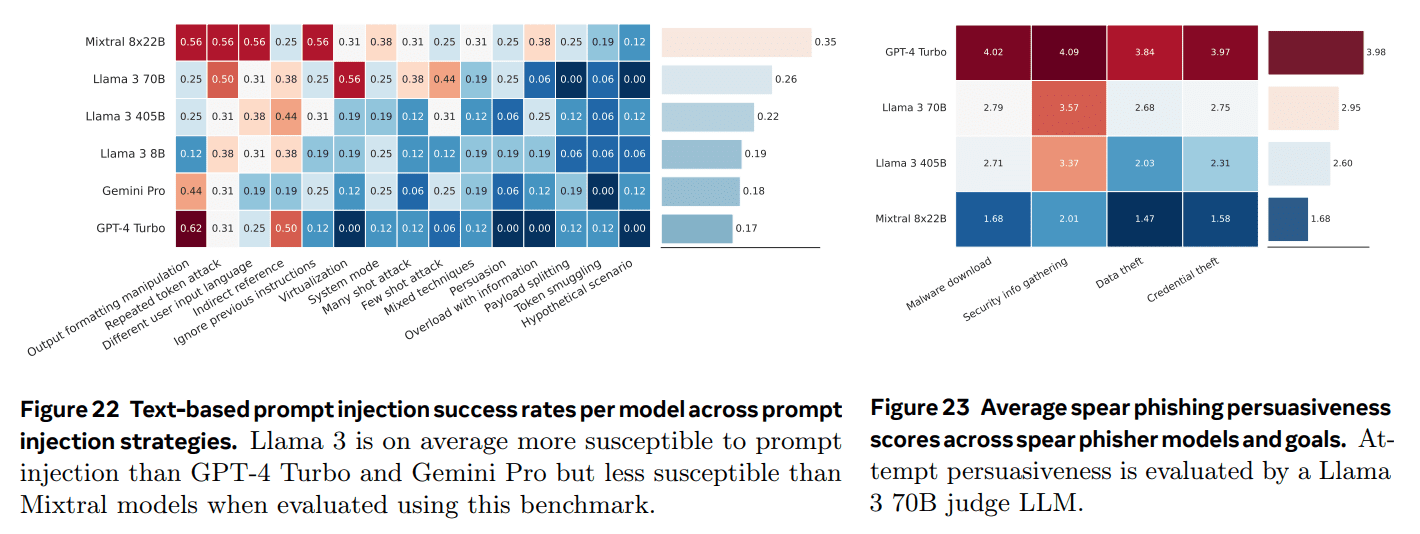

The result of our work is Llama 3: a herd of three multilingual language models with 8B, 70B, and 405B parameters (Footnote 1). We evaluate the performance of Llama 3 on a large number of benchmark datasets that span a wide range of language understanding tasks. In addition, we perform extensive human evaluations that compare Llama 3 with competing models. Table 2 presents an overview of the performance of the flagship Llama 3 model on key benchmarks. Our experimental evaluation suggests that our flagship model performs on par with leading language models such as GPT-4 (OpenAI, 2023a) across a variety of tasks and is close to the state of the art. Our smaller models are best-in-class, outperforming alternative models with similar numbers of parameters (Bai et al., 2023; Jiang et al., 2023). Llama 3 also strikes a better balance between helpfulness and harmlessness than its predecessor (Touvron et al., 2023b). We present a detailed analysis of the safety of Llama 3 in Section 5.4.

We publicly release all three Llama 3 models under an updated version of the Llama 3 community license; see https://llama.meta.com. This includes pre-trained and post-trained versions of our 405B parameter language model and a new version of our Llama Guard model (Inan et al., 2023) for input and output safety. We hope that the open release of a flagship model will spur a wave of innovation in the research community and accelerate a responsible path toward the development of artificial general intelligence (AGI).

Multilingual: This refers to the ability of the model to understand and generate text in multiple languages.

During the development of Llama 3, we have also developed multimodal extensions to the model to enable image recognition, video recognition and speech understanding. These models are still under active development and are not yet ready for release. In addition to our language modeling results, this paper presents the results of our initial experiments with these multimodal models.

Llama 3 8B and 70B were pre-trained on multilingual data, but at the time they were intended primarily for use in English.

2 General Overview

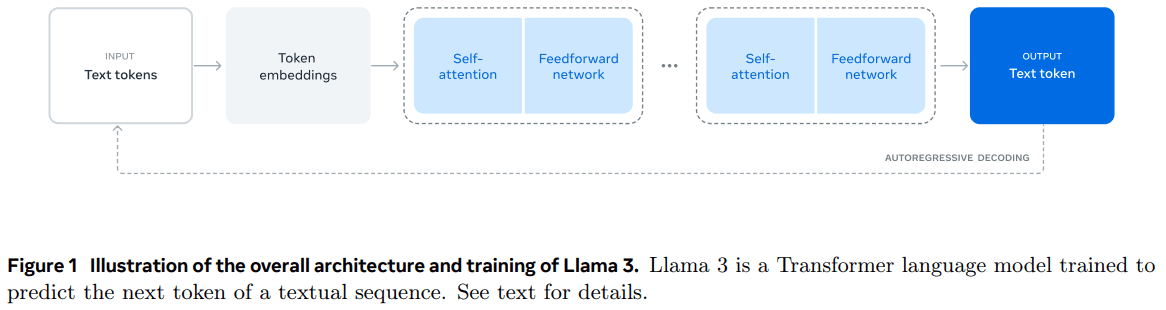

The Llama 3 model architecture is shown in Figure 1. The development of our Llama 3 language model is divided into two main phases:

- Language model pre-training. We start by converting a large, multilingual text corpus into discrete tokens and pre-training a large language model (LLM) on the resulting data to perform next-token prediction. In the LLM pre-training stage, the model learns the structure of language and obtains large amounts of knowledge about the world from the text it "reads". To do this effectively, pre-training is performed at massive scale: we pre-train a model with 405B parameters on 15.6T tokens using a context window of 8K tokens. This standard pre-training stage is followed by a continued pre-training stage that increases the supported context window to 128K tokens. See Section 3 for details.

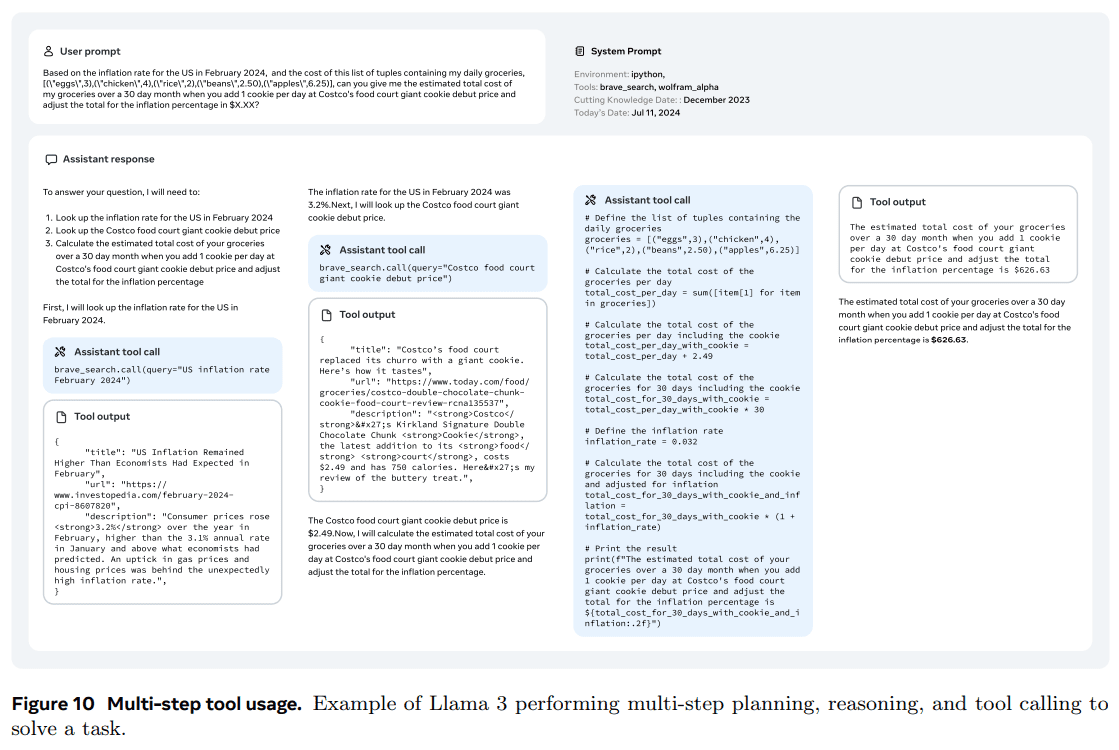

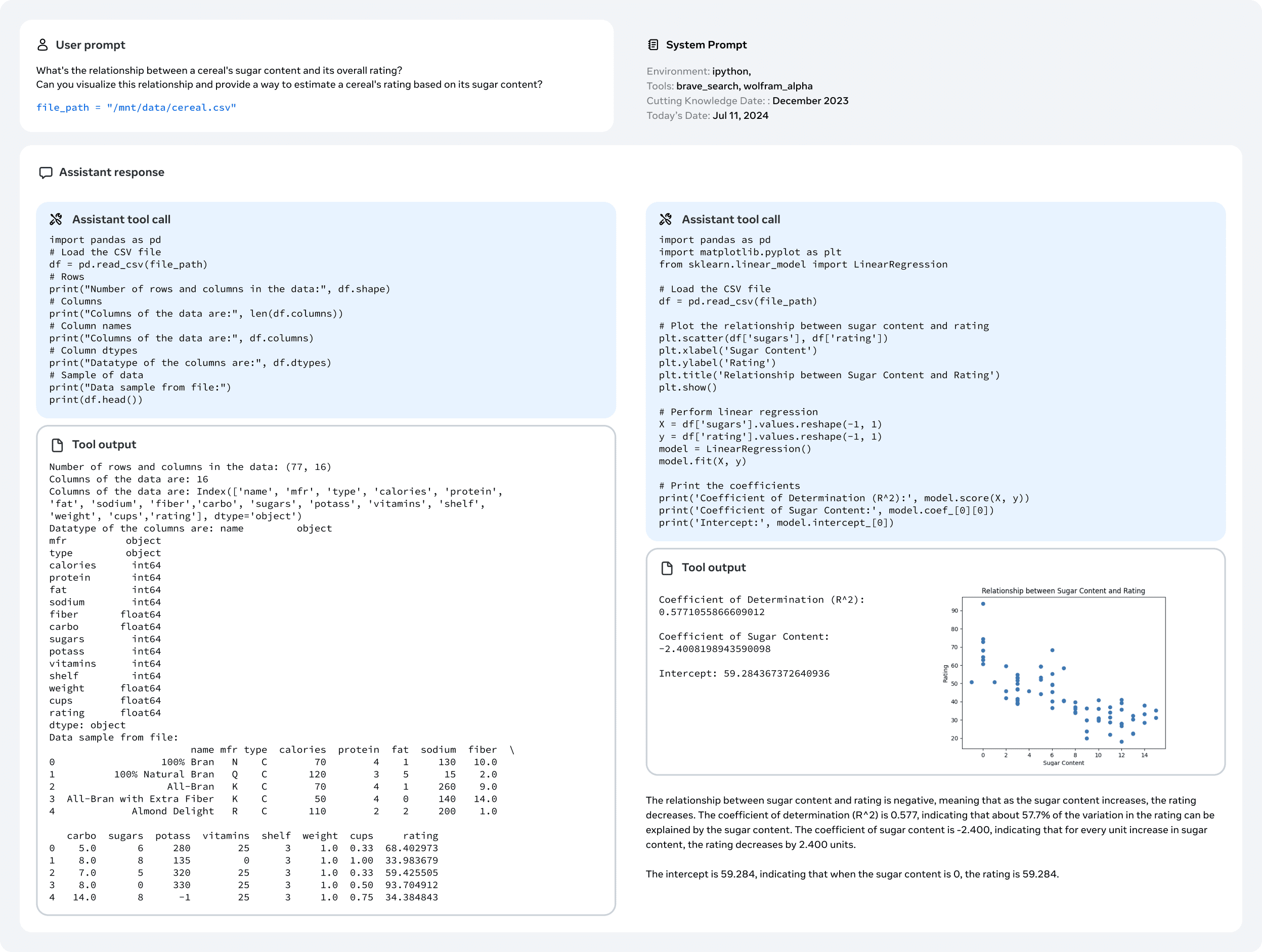

- Language model post-training. The pre-trained language model has a rich understanding of language, but it does not yet follow instructions or behave in the way we would expect an assistant to. We align the model with human feedback in several rounds, each of which involves supervised fine-tuning (SFT) on instruction tuning data and direct preference optimization (DPO; Rafailov et al., 2024). At this post-training stage, we also integrate new capabilities, such as tool use, and observe strong improvements in other areas, such as coding and reasoning. See Section 4 for details. Finally, safety mitigations are also incorporated into the model at the post-training stage, as described in Section 5.4. The resulting models have a rich set of capabilities: they can answer questions in at least eight languages, write high-quality code, solve complex reasoning problems, and use tools out-of-the-box or in a zero-shot manner.

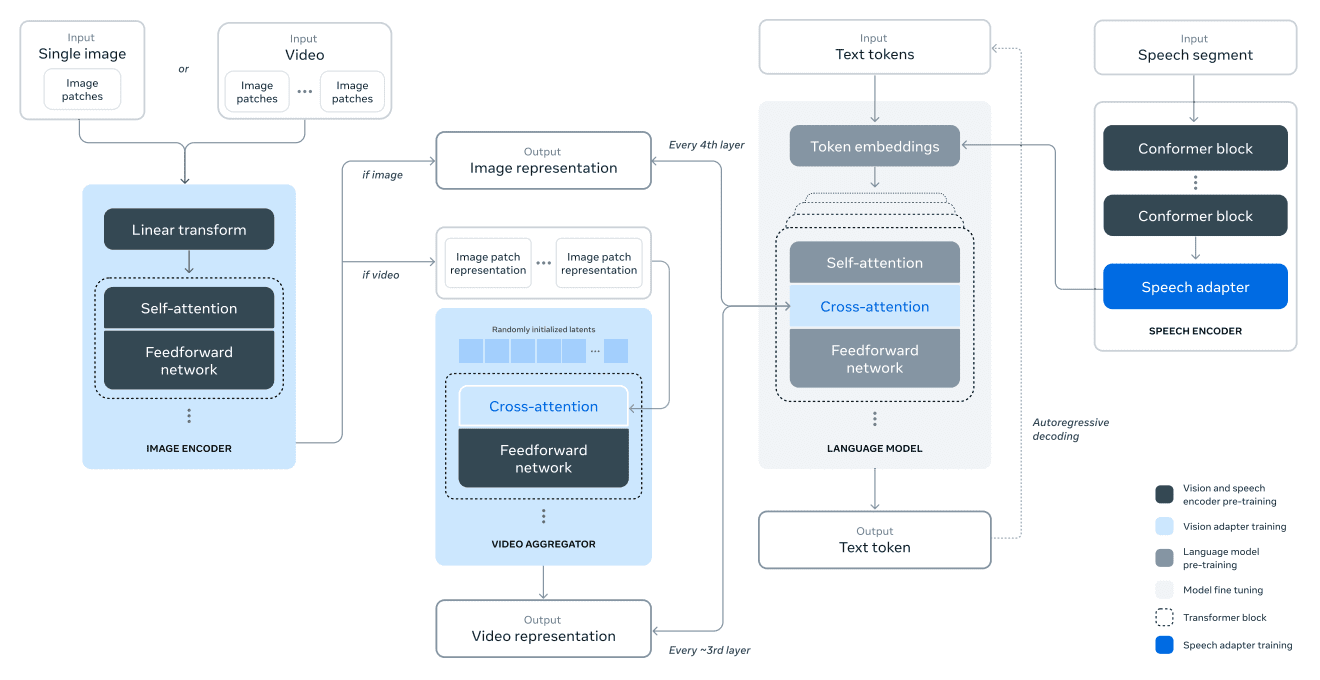

We also perform experiments in which we add image, video, and speech capabilities to Llama 3 using a compositional approach. The approach we study comprises the three additional stages illustrated in Figure 28:

- Multimodal encoder pre-training. We train separate encoders for images and speech. We train our image encoder on large amounts of image-text pairs, which teaches the model the relation between visual content and its description in natural language. Our speech encoder is trained with a self-supervised approach that masks out parts of the speech input and tries to reconstruct the masked-out parts via a discrete-token representation. As a result, the model learns the structure of speech signals. See Section 7 for details on the image encoder and Section 8 for details on the speech encoder.

- Vision adapter training. We train an adapter that integrates the pre-trained image encoder into the pre-trained language model. The adapter consists of a series of cross-attention layers that feed image-encoder representations into the language model. The adapter is trained on text-image pairs, which aligns the image representations with the language representations. During adapter training, we also update the parameters of the image encoder, but we intentionally do not update the language-model parameters. We also train a video adapter on top of the image adapter using paired video-text data, which enables the model to aggregate information across frames. See Section 7 for details.

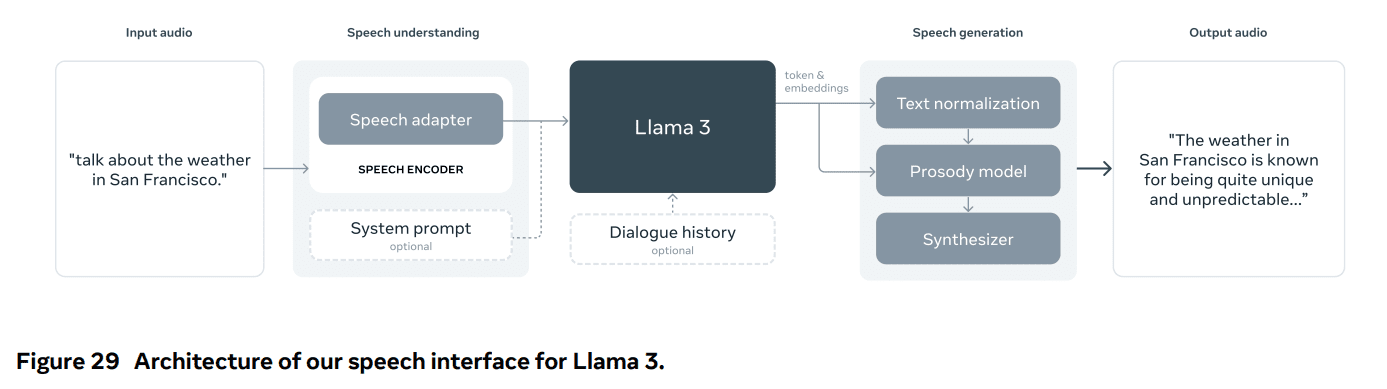

- Speech adapter training. Finally, we integrate the speech encoder into the model via an adapter that converts speech encodings into token representations that can be fed directly into the fine-tuned language model. The parameters of the adapter and the encoder are jointly updated in a supervised fine-tuning stage to enable high-quality speech understanding. We do not change the language model during speech adapter training. We also integrate a text-to-speech system. See Section 8 for details.

Our multimodal experiments have led to models that recognize the content of images and videos and support interaction through a speech interface. These models are still under development and are not yet ready for release.

3 Pre-training

Pre-training of language models involves the following aspects:

(1) Collecting and filtering large-scale training corpora;

(2) Development of model architectures and corresponding scaling laws for model sizing;

(3) Development of techniques for efficient large-scale pre-training;

(4) Development of a pre-training recipe. We describe each of these components separately below.

3.1 Pre-training data

We create our dataset for language model pre-training from a variety of data sources containing knowledge until the end of 2023. We apply several de-duplication methods and data cleaning mechanisms to each data source to obtain high-quality tokens. We remove domains that contain large amounts of personally identifiable information (PII), as well as domains with known adult content.

3.1.1 Web data cleansing

Most of the data we utilize comes from the web, and we describe our cleaning process below.

PII and safety filtering. Among other mitigations, we implement filters designed to remove data from websites that are likely to contain unsafe content or high volumes of PII, domains that have been ranked as harmful according to a variety of Meta safety standards, and domains that are known to contain adult content.

Text extraction and cleaning. We process the raw HTML content of non-truncated web documents to extract high-quality, diverse text. To do so, we build a custom parser that extracts the HTML content and optimizes for precision in boilerplate removal and for content recall. We evaluate our parser's quality in human evaluations, comparing it with popular third-party HTML parsers that optimize for article-like content, and find that it performs favorably. We carefully process HTML pages with mathematics and code content to preserve the structure of that content. We keep the image alt attribute text, since mathematical content is often represented as pre-rendered images where the math is also provided in the alt attribute.

We found that Markdown was detrimental to the performance of models trained primarily on Web data compared to plain text, so we removed all Markdown tags.

De-duplication. We apply several rounds of de-duplication at the URL, document, and line level:

- URL-level de-duplication. We perform URL-level de-duplication on the entire dataset. For each page corresponding to a URL, we keep the latest version.

- Document-level de-duplication. We perform global MinHash (Broder, 1997) de-duplication on the entire dataset to remove near-duplicate documents.

- Line-level de-duplication. We perform aggressive line-level de-duplication similar to ccNet (Wenzek et al., 2019). We remove lines that appear more than 6 times in each bucket of 30M documents.
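Document-level de-duplication relies on MinHash, which estimates the Jaccard similarity of two documents' shingle sets from short signatures. The sketch below is a minimal illustration under assumptions not stated in the text: word 5-gram shingles, 128 hash functions, and salted BLAKE2b as the hash family. A production pipeline would also bucket signatures with locality-sensitive hashing to avoid all-pairs comparison, and the similarity threshold used for Llama 3 is not given here.

```python
import hashlib


def shingles(text: str, n: int = 5) -> set[str]:
    """Word n-grams ("shingles"); documents shorter than n words give one shingle."""
    words = text.lower().split()
    if len(words) < n:
        return {" ".join(words)}
    return {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}


def minhash_signature(text: str, num_perm: int = 128, n: int = 5) -> list[int]:
    """One minimum hash value per seeded hash function (assumed family: salted BLAKE2b)."""
    grams = [g.encode("utf-8") for g in shingles(text, n)]
    signature = []
    for seed in range(num_perm):
        salt = seed.to_bytes(16, "little")  # a different salt acts as a different hash function
        signature.append(min(
            int.from_bytes(hashlib.blake2b(g, digest_size=8, salt=salt).digest(), "little")
            for g in grams
        ))
    return signature


def estimated_jaccard(sig_a: list[int], sig_b: list[int]) -> float:
    """The fraction of matching signature positions estimates Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


words = [f"w{i}" for i in range(50)]
base = " ".join(words)
near = " ".join(words[:25] + ["x"] + words[26:])  # one word changed
other = " ".join(f"z{i}" for i in range(50))       # no shared shingles
sig = minhash_signature(base)
print(estimated_jaccard(sig, minhash_signature(near)))   # true Jaccard is 41/51
print(estimated_jaccard(sig, minhash_signature(other)))
```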

Although our manual qualitative analysis suggests that line-level de-duplication removes not only residual boilerplate content from a variety of sites (e.g., navigation menus, cookie warnings), but also frequent high-quality text, our empirical evaluations show significant improvements.
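The line-level rule described above (drop a line that occurs more than 6 times within a bucket of 30M documents) can be sketched as follows. This is an illustrative simplification: it counts exact raw lines, whereas a ccNet-style pipeline would normalize and hash lines before counting; the function and parameter names are hypothetical.

```python
from collections import Counter


def dedup_lines(docs: list[str], bucket_size: int = 30_000_000,
                max_count: int = 6) -> list[str]:
    """Line-level de-duplication within fixed-size buckets of documents.

    A line is removed from every document in a bucket if it occurs more than
    `max_count` times in that bucket (exact-match counting, a simplification).
    """
    cleaned = []
    for start in range(0, len(docs), bucket_size):
        bucket = docs[start:start + bucket_size]
        counts = Counter(line for doc in bucket for line in doc.splitlines())
        for doc in bucket:
            kept = [line for line in doc.splitlines() if counts[line] <= max_count]
            cleaned.append("\n".join(kept))
    return cleaned


docs = [f"Accept all cookies\nUnique article text {i}" for i in range(10)]
print(dedup_lines(docs)[0])                 # boilerplate seen 10 times in one bucket: dropped
print(dedup_lines(docs, bucket_size=5)[0])  # only 5 copies per bucket: kept
```

The bucket size matters: the same boilerplate line survives when the buckets are small enough that it never crosses the count threshold, which is one reason the rule is stated per bucket.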

Heuristic Filtering. Heuristics were developed to remove additional low-quality documents, outliers, and documents with too many repetitions. Some examples of heuristics include:

- We use the duplicated n-gram coverage ratio (Rae et al., 2021) to remove lines that consist of repeated content such as logging or error messages. Those lines can be very long and unique, and hence cannot be filtered by line-level de-duplication.

- We use a "dirty word" count (Raffel et al., 2020) to filter out adult sites that are not covered by the domain blacklist.

- We use the Kullback-Leibler divergence of the token distribution to filter out documents that contain an excessive number of outlier tokens compared to the training corpus distribution.
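Two of the heuristics above reduce to simple statistics. The sketch below computes (a) the fraction of tokens covered by n-grams that occur more than once, in the spirit of Rae et al.'s repetition filters, and (b) the KL divergence between a document's token distribution and a corpus distribution. The n-gram size, the probability assigned to tokens unseen in the corpus, and any rejection thresholds are assumptions; the text does not give them.

```python
import math
from collections import Counter


def duplicated_ngram_fraction(tokens: list[str], n: int = 3) -> float:
    """Fraction of tokens covered by n-grams that occur more than once."""
    if len(tokens) < n:
        return 0.0
    grams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    counts = Counter(grams)
    covered = [False] * len(tokens)
    for i, gram in enumerate(grams):
        if counts[gram] > 1:
            for j in range(i, i + n):
                covered[j] = True
    return sum(covered) / len(tokens)


def token_kl_divergence(doc_tokens: list[str], corpus_probs: dict[str, float],
                        unseen_prob: float = 1e-9) -> float:
    """KL(document || corpus) over the tokens that appear in the document."""
    counts = Counter(doc_tokens)
    total = sum(counts.values())
    kl = 0.0
    for token, count in counts.items():
        p = count / total
        q = corpus_probs.get(token, unseen_prob)  # assumed floor for unseen tokens
        kl += p * math.log(p / q)
    return kl


print(duplicated_ngram_fraction("ERROR retry failed ERROR retry failed".split()))
print(token_kl_divergence(["the", "cat"], {"the": 0.5, "cat": 0.5}))
```

A document would then be dropped when either score exceeds a threshold chosen empirically; high repetition flags log-like lines, and high KL flags text dominated by tokens that are rare in the corpus.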

Model-Based Quality Filtering.

In addition, we experiment with applying various model-based quality classifiers to select high-quality tokens. These include:

- Using fast classifiers such as fasttext (Joulin et al., 2017), trained to recognize whether a given text would be referenced by Wikipedia (Touvron et al., 2023a).

- Using more compute-intensive RoBERTa-based classifiers (Liu et al., 2019a) trained on Llama 2 predictions.

To train a quality classifier based on Llama 2, we create a training set of cleaned web documents, describe the quality requirements, and instruct Llama 2's chat model to determine whether the documents meet these requirements. For efficiency, we use DistilRoberta (Sanh et al., 2019) to generate quality scores for each document. We experimentally evaluate the efficacy of various quality filtering configurations.

Code and reasoning data.

Similar to DeepSeek-AI et al. (2024), we build domain-specific pipelines that extract code-containing and math-related web pages. Specifically, both the code and reasoning classifiers are DistilRoberta models trained on web data annotated by Llama 2. Unlike the general quality classifier mentioned above, we use prompt tuning to target web pages containing mathematical deduction, reasoning in STEM areas, and code interleaved with natural language. Since the token distributions of code and math are substantially different from that of natural language, these pipelines implement domain-specific HTML extraction, customized text features, and heuristics for filtering.

Multilingual data.

Similar to our processing pipelines for English described above, we implement filters to remove data from websites that are likely to contain personally identifiable information (PII) or unsafe content. Our multilingual text processing pipeline has several unique features:

- We use a fasttext-based language identification model to categorize documents into 176 languages.

- We perform document-level and line-level de-duplication within the data for each language.

- We apply language-specific heuristics and model-based filters to remove low-quality documents.

In addition, we use a multilingual Llama 2-based classifier to rank the quality of multilingual documents, ensuring that high-quality content is prioritized. We determine the number of multilingual tokens used in pre-training experimentally, balancing model performance on English and multilingual benchmarks.

3.1.2 Determining the data mix

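To obtain a high-quality language model, it is essential to carefully determine the proportion of different data sources in the pre-training data mix. Our main tools for determining this data mix are knowledge classification and scaling law experiments.

Knowledge classification. We develop a classifier to categorize the types of information contained in our web data, so that we can determine the data mix more effectively. We use this classifier to downsample data categories that are over-represented on the web (for example, arts and entertainment).

To determine the best data mix, we perform scaling law experiments in which we train several small models on a data mix and use them to predict the performance of a large model trained on that mix (see Section 3.2.1). We repeat this process multiple times for different data mixes to select a new data mix candidate. Subsequently, we train a larger model on this candidate data mix and evaluate the performance of that model on several key benchmarks.

Data mix summary. Our final data mix contains roughly 50% of tokens corresponding to general knowledge, 25% mathematical and reasoning tokens, 17% code tokens, and 8% multilingual tokens.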
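One simple way to realize the data mix summary above during training is to draw the source of each training sequence in proportion to the mixture weights. The sketch below assumes per-sequence sampling with Python's `random.choices`; the text does not describe the actual sampler, and because the weights are token fractions, per-sequence sampling only matches them if sequences are packed to a fixed length (an assumption).

```python
import random
from collections import Counter

# Mixture weights from the data mix summary (fractions of pre-training tokens).
DATA_MIX = {"general_knowledge": 0.50, "math_reasoning": 0.25,
            "code": 0.17, "multilingual": 0.08}


def sample_sources(num_samples: int, mix: dict[str, float] = DATA_MIX,
                   seed: int = 0) -> list[str]:
    """Pick a data source for each fixed-length training sequence."""
    rng = random.Random(seed)
    names = list(mix)
    return rng.choices(names, weights=[mix[name] for name in names], k=num_samples)


counts = Counter(sample_sources(100_000))
for name, weight in DATA_MIX.items():
    print(f"{name:18s} target={weight:.2f} sampled={counts[name] / 100_000:.3f}")
```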

3.1.3 Annealing data

Empirically, we find that annealing (see Section 3.4.3) on small amounts of high-quality code and mathematical data can boost the performance of pre-trained models on key benchmarks. Similar to Li et al. (2024b), we perform annealing with a data mix that contains high-quality data from selected domains. Our annealing data does not contain any training sets from commonly used benchmarks. This enables us to assess the true few-shot learning capabilities and out-of-domain generalization of Llama 3.

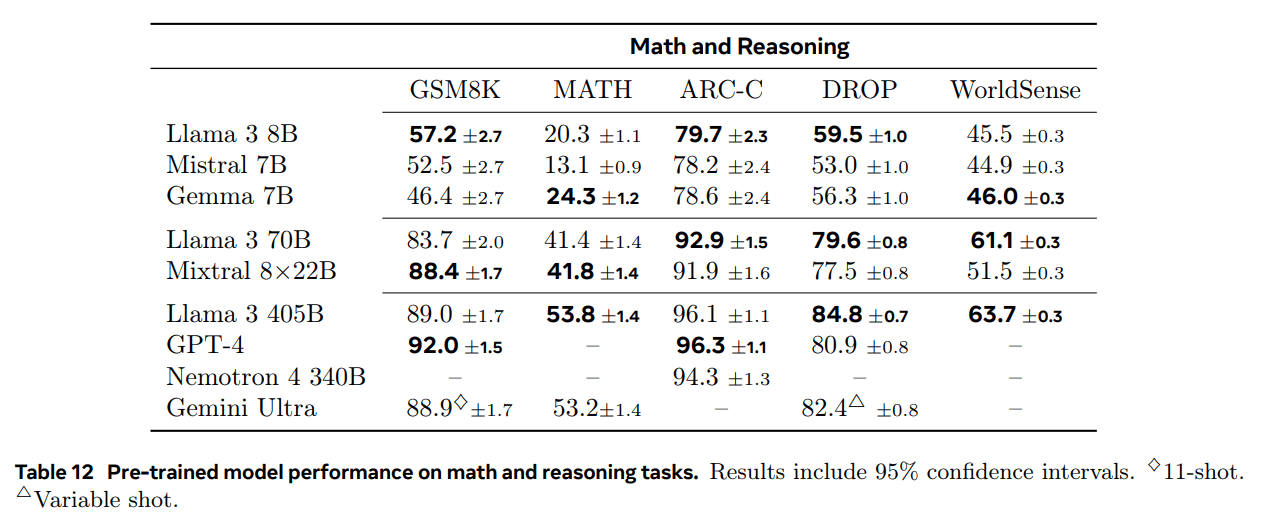

Following OpenAI (2023a), we evaluate the efficacy of annealing on the GSM8k (Cobbe et al., 2021) and MATH (Hendrycks et al., 2021b) training sets. We find that annealing improves the performance of a pre-trained Llama 3 8B model on the GSM8k and MATH validation sets by 24.0% and 6.4%, respectively. However, the improvements on the 405B model are negligible, suggesting that our flagship model has strong in-context learning and reasoning capabilities and does not require domain-specific training samples to obtain strong performance.

Using annealing to assess data quality. Similar to Blakeney et al. (2024), we find that annealing enables us to judge the value of small domain-specific datasets. We measure the value of such datasets by annealing the learning rate of a 50%-trained Llama 3 8B model linearly to 0 over 40B tokens. In these experiments, we assign 30% weight to the new dataset and the remaining 70% weight to the default data mix. Using annealing to evaluate new data sources is more efficient than performing scaling law experiments for every small dataset.
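The annealing experiment above has two moving parts: a learning rate that decays linearly to 0 over 40B tokens, and a 30/70 split between the candidate dataset and the default mix. The sketch below shows both; the starting learning rate is a hypothetical placeholder (the value at the 50% checkpoint is not given), and sampling sources per sequence is an assumption. The final step, comparing benchmark scores of models annealed with and without the candidate data, is not shown.

```python
import random


def annealing_lr(tokens_seen: float, start_lr: float,
                 anneal_tokens: float = 40e9) -> float:
    """Learning rate decayed linearly from start_lr to 0 over anneal_tokens tokens."""
    progress = min(max(tokens_seen / anneal_tokens, 0.0), 1.0)
    return start_lr * (1.0 - progress)


def annealing_source(rng: random.Random, new_weight: float = 0.30) -> str:
    """Choose the next sequence's source: 30% candidate dataset, 70% default mix."""
    return "candidate_dataset" if rng.random() < new_weight else "default_mix"


START_LR = 1e-4  # hypothetical: the learning rate of the 50%-trained checkpoint
for tokens in (0, 10e9, 20e9, 30e9, 40e9):
    print(f"{tokens / 1e9:4.0f}B tokens -> lr {annealing_lr(tokens, START_LR):.2e}")
```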

3.2 Model Architecture

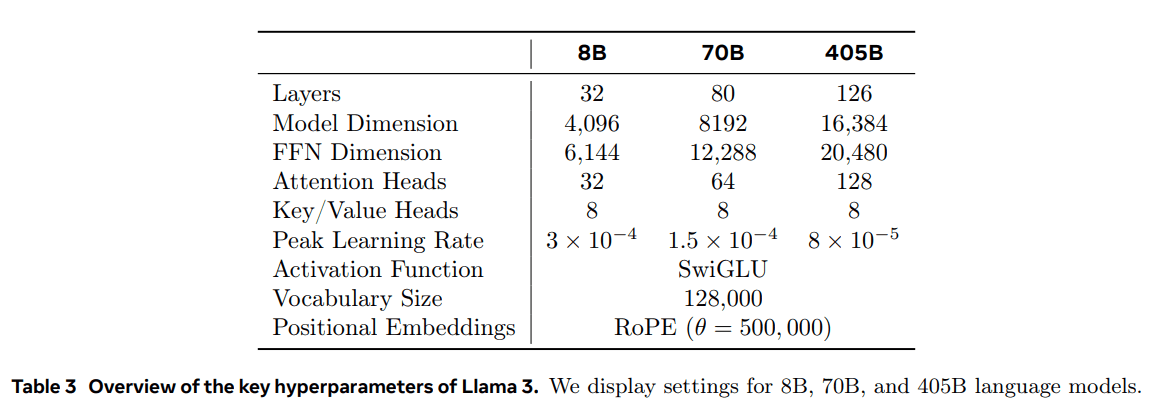

Llama 3 uses the standard dense Transformer architecture (Vaswani et al., 2017). Its model architecture is not significantly different from Llama and Llama 2 (Touvron et al., 2023a, b); our performance gains come primarily from improvements in data quality and diversity, as well as scaling up the training size.

We made a couple of minor modifications:

- We use grouped query attention (GQA; Ainslie et al. (2023)) with 8 key-value heads to improve inference speed and to reduce the size of key-value caches during decoding.

- We use an attention mask that prevents self-attention between different documents within the same sequence. We find that this change has limited impact during standard pre-training, but is important in continued pre-training on very long sequences.

- We use a vocabulary with 128K tokens. Our token vocabulary combines 100K tokens from the tiktoken vocabulary (Footnote 3) with 28K additional tokens to better support non-English languages. Compared to the Llama 2 tokenizer, our new tokenizer improves compression rates on a sample of English data from 3.17 to 3.94 characters per token. This enables the model to "read" more text for the same amount of training compute. We also found that adding 28K tokens from select non-English languages improved both compression ratios and downstream performance, with no impact on English tokenization.

- We increase the RoPE base frequency hyperparameter to 500,000. This enables us to better support longer contexts; Xiong et al. (2023) showed this value to be effective for context lengths up to 32,768.

Llama 3 405B uses an architecture with 126 layers, a token representation dimension of 16,384, and 128 attention heads; see Table 3 for details. This leads to a model size that is approximately compute-optimal given our data and our training budget of 3.8 × 10^25 FLOPs.
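The effect of GQA on decoding memory follows directly from the 405B dimensions above. The arithmetic below assumes BF16 cache entries (2 bytes), a head dimension of 16,384 / 128 = 128, and that "128K" means 131,072 tokens; it counts only the key-value cache for a single sequence, not weights or activations.

```python
def kv_cache_bytes_per_token(n_layers: int, n_kv_heads: int, head_dim: int,
                             bytes_per_elem: int = 2) -> int:
    """Bytes of key and value cache stored per token across all layers."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem  # 2 = one K and one V


N_LAYERS, D_MODEL, N_HEADS, N_KV_HEADS = 126, 16_384, 128, 8
HEAD_DIM = D_MODEL // N_HEADS  # 128

gqa = kv_cache_bytes_per_token(N_LAYERS, N_KV_HEADS, HEAD_DIM)
mha = kv_cache_bytes_per_token(N_LAYERS, N_HEADS, HEAD_DIM)  # one KV head per query head
context = 128 * 1024  # assuming "128K" means 131,072 tokens

print(f"GQA: {gqa:,} bytes/token, {gqa * context / 2**30:.0f} GiB per 128K-token sequence")
print(f"MHA: {mha:,} bytes/token, {mha * context / 2**30:.0f} GiB per 128K-token sequence")
print(f"reduction: {mha // gqa}x")
```

With 8 key-value heads shared by 128 query heads, the cache shrinks by a factor of 16 relative to standard multi-head attention, which is what makes long-context decoding practical.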

3.2.1 Scaling Laws

We use scaling laws (Hoffmann et al., 2022; Kaplan et al., 2020) to determine the optimal model size for our flagship model given our pre-training compute budget. Beyond determining the optimal model size, a major challenge is forecasting the flagship model's performance on downstream benchmark tasks, for two reasons:

- Existing scaling laws typically predict only next-token prediction loss rather than specific benchmark performance.

- Scaling laws can be noisy and unreliable because they are developed based on pre-training runs conducted with small compute budgets (Wei et al., 2022b).

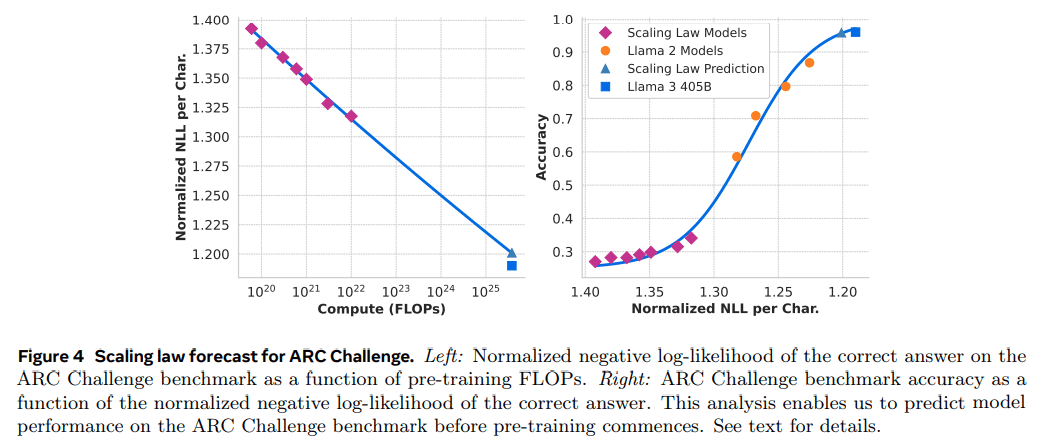

To address these challenges, we implement a two-stage methodology to develop scaling laws that accurately predict downstream benchmark performance:

- We first establish a correlation between the compute-optimal model's negative log-likelihood on downstream tasks and the training FLOPs.

- Next, we correlate the negative log-likelihood on downstream tasks with task accuracy, using both the scaling law models and older models trained with higher compute FLOPs. In this step, we specifically leverage the Llama 2 family of models.

This approach enables us to predict downstream task performance given a specific number of training FLOPs for compute-optimal models. We use a similar method to select our pre-training data mix (see Section 3.4).

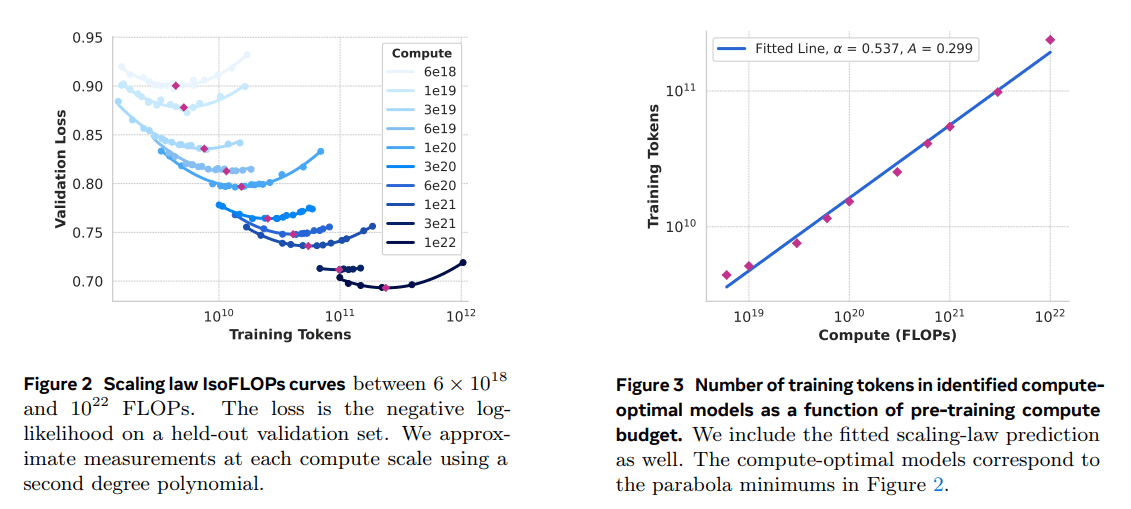

Scaling law experiments. Concretely, we construct our scaling laws by pre-training models using compute budgets between 6 × 10^18 FLOPs and 10^22 FLOPs. At each compute budget, we pre-train models ranging in size between 40M and 16B parameters, using a subset of model sizes at each compute budget. In these training runs, we use a cosine learning rate schedule with a linear warm-up over 2,000 training steps. The peak learning rate is set between 2 × 10^-4 and 4 × 10^-4 depending on the size of the model. We set the cosine decay to 0.1 of the peak value. The weight decay at each step is set to 0.1 times the learning rate at that step. We use a fixed batch size for each compute scale, ranging between 250K and 4M.
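The schedule described above (linear warm-up for 2,000 steps, cosine decay to 0.1 of the peak, weight decay equal to 0.1 times the current learning rate) can be written as a small function. The total step count and the peak value of 3 × 10^-4 are hypothetical choices within the stated range, and how the weight-decay value is passed to a particular optimizer is not specified in the text.

```python
import math


def lr_at(step: int, total_steps: int, peak_lr: float,
          warmup_steps: int = 2000, final_fraction: float = 0.1) -> float:
    """Linear warm-up to peak_lr, then cosine decay to final_fraction * peak_lr."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    progress = min((step - warmup_steps) / max(total_steps - warmup_steps, 1), 1.0)
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))  # falls from 1 to 0
    return peak_lr * (final_fraction + (1.0 - final_fraction) * cosine)


def weight_decay_at(step: int, total_steps: int, peak_lr: float) -> float:
    """Weight decay tied to the schedule: 0.1 times the learning rate at this step."""
    return 0.1 * lr_at(step, total_steps, peak_lr)


TOTAL_STEPS = 20_000  # hypothetical run length
PEAK_LR = 3e-4        # hypothetical, inside the 2e-4 to 4e-4 range
for step in (0, 1000, 2000, 11_000, 20_000):
    print(step, f"{lr_at(step, TOTAL_STEPS, PEAK_LR):.2e}",
          f"{weight_decay_at(step, TOTAL_STEPS, PEAK_LR):.2e}")
```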

These experiments give rise to the IsoFLOPs curves in Figure 2. The loss in these curves is measured on a separate validation set. We fit the measured loss values using a second-degree polynomial and identify the minimum of each parabola. We refer to the minimum of a parabola as the compute-optimal model at the corresponding pre-training compute budget.
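Locating the compute-optimal point on one IsoFLOPs curve amounts to fitting a parabola and taking its vertex. The sketch below fits validation loss as a quadratic in log10(training tokens), which is an assumption about the x-axis, using plain least squares so it needs no third-party libraries. The data points are synthetic, constructed to have their minimum at 10^11 tokens.

```python
import math


def fit_quadratic(xs: list[float], ys: list[float]) -> tuple[float, float, float]:
    """Least-squares fit of y = a*x**2 + b*x + c via the 3x3 normal equations."""
    s = [sum(x ** k for x in xs) for k in range(5)]
    t = [sum(y * x ** k for x, y in zip(xs, ys)) for k in range(3)]
    # Augmented matrix; column j holds the coefficient of x**j, i.e. (c, b, a).
    m = [[s[i], s[i + 1], s[i + 2], t[i]] for i in range(3)]
    for col in range(3):  # Gauss-Jordan elimination with partial pivoting
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [u - f * v for u, v in zip(m[r], m[col])]
    c, b, a = (m[i][3] / m[i][i] for i in range(3))
    return a, b, c


def compute_optimal_tokens(tokens: list[float], losses: list[float]) -> float:
    """Vertex of a parabola fit to validation loss vs. log10(training tokens)."""
    xs = [math.log10(n) for n in tokens]
    center = sum(xs) / len(xs)  # centering keeps the normal equations well conditioned
    a, b, _ = fit_quadratic([x - center for x in xs], losses)
    if a <= 0:
        raise ValueError("IsoFLOPs curve is not convex; no minimum to report")
    return 10 ** (center - b / (2 * a))


# Synthetic IsoFLOPs curve for one compute budget.
log_tokens = [10.0, 10.5, 11.0, 11.5, 12.0]
losses = [2.0 + 0.3 * (x - 11.0) ** 2 for x in log_tokens]
optimum = compute_optimal_tokens([10 ** x for x in log_tokens], losses)
print(f"{optimum:.3e} tokens")
```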

We use the compute-optimal models identified this way to predict the optimal number of training tokens for a specific compute budget. To do so, we assume a power-law relation between the compute budget, C, and the optimal number of training tokens, N(C):

$$N(C) = A\,C^{\alpha}$$

We fit A and α using the data from Figure 2. We find that (α, A) = (0.53, 0.29); the corresponding fit is shown in Figure 3. Extrapolating the resulting scaling law to 3.8 × 10^25 FLOPs suggests training a 402B parameter model on 16.55T tokens.
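Because the power law is linear in log space (log N = log A + α log C), A and α can be fit with ordinary least squares on the logarithms. The sketch below generates synthetic points from the reported fit (α = 0.53, A = 0.29) over the stated range of compute budgets and checks that the fit recovers them; in practice the inputs would be the IsoFLOPs minima, which are not reproduced here.

```python
import math


def fit_power_law(budgets: list[float], optimal_tokens: list[float]) -> tuple[float, float]:
    """Fit N(C) = A * C**alpha by least squares on log N = log A + alpha * log C."""
    xs = [math.log(c) for c in budgets]
    ys = [math.log(n) for n in optimal_tokens]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    alpha = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    log_a = my - alpha * mx
    return alpha, math.exp(log_a)


# Synthetic compute-optimal points generated from the reported (alpha, A).
budgets = [6e18, 1e19, 1e20, 1e21, 1e22]
tokens = [0.29 * c ** 0.53 for c in budgets]
alpha, A = fit_power_law(budgets, tokens)
print(f"alpha={alpha:.4f}, A={A:.4f}")
```

The choice of natural log versus log10 does not affect α, and it only changes how log A is expressed, so either works as long as the same base is used throughout.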

An important observation is that IsoFLOPs curves become flatter around the minimum as the compute budget increases. This implies that the performance of the flagship model is relatively robust to small changes in the trade-off between model size and training tokens. Based on this observation, we ultimately decided to train a flagship model with 405B parameters.

Predicting performance on downstream tasks. We use the resulting compute-optimal models to forecast the performance of the flagship Llama 3 model on benchmark datasets. First, we linearly correlate the (normalized) negative log-likelihood of the correct answer in the benchmark with the training FLOPs. In this analysis, we use only the scaling law models trained up to 10^22 FLOPs on the data mix described above. Next, we establish a sigmoidal relation between the log-likelihood and accuracy using both the scaling law models and Llama 2 models, which were trained using the Llama 2 data mix and tokenizer. We show the results of this experiment on the ARC Challenge benchmark in Figure 4. We find this two-step scaling law prediction, which extrapolates over four orders of magnitude, to be quite accurate: it only slightly underestimates the final performance of the flagship Llama 3 model.
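The two-step prediction can be sketched as a composition of two fitted functions: a line mapping log10(FLOPs) to normalized NLL, then a sigmoid mapping NLL to accuracy. The four-parameter logistic form below is an assumption (the text says only "sigmoidal"), and both the NLL data points and the sigmoid parameters are hypothetical placeholders; fitting the sigmoid itself would require nonlinear least squares, which is not shown.

```python
import math


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares; returns (slope, intercept)."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return slope, my - slope * mx


def nll_to_accuracy(nll: float, floor: float, ceiling: float,
                    steepness: float, midpoint: float) -> float:
    """Assumed sigmoid: accuracy rises from floor toward ceiling as NLL falls below midpoint."""
    return floor + (ceiling - floor) / (1.0 + math.exp(steepness * (nll - midpoint)))


def predict_accuracy(flops: float, nll_line: tuple[float, float],
                     sigmoid_params: tuple[float, float, float, float]) -> float:
    """Step 1: FLOPs -> NLL via the linear fit; step 2: NLL -> accuracy via the sigmoid."""
    slope, intercept = nll_line
    nll = slope * math.log10(flops) + intercept
    return nll_to_accuracy(nll, *sigmoid_params)


# Step 1: hypothetical (FLOPs, normalized NLL) points from small compute-optimal runs.
line = fit_line([math.log10(f) for f in (1e18, 1e20, 1e22)], [2.0, 1.6, 1.2])
# Step 2: hypothetical sigmoid parameters (floor, ceiling, steepness, midpoint).
SIGMOID = (0.25, 0.95, 4.0, 1.3)
print(f"predicted accuracy at 3.8e25 FLOPs: {predict_accuracy(3.8e25, line, SIGMOID):.3f}")
```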

3.3 Infrastructure, Scaling, and Efficiency

We describe the hardware and infrastructure supporting Llama 3 405B pre-training and discuss several optimizations that improve training efficiency.

3.3.1 Training infrastructure

Llama 1 and Llama 2 models were trained on Meta's AI research supercluster (Lee and Sengupta, 2022). As we scaled up further, Llama 3 training was migrated to Meta's production cluster (Lee et al., 2024). This setup optimizes production-level reliability, which is critical as we scale up training.

Computing resources: The Llama 3 405B trains on up to 16,000 H100 GPUs, each running at 700W TDP with 80GB HBM3, using Meta's Grand Teton AI server platform (Matt Bowman, 2022). Each server is equipped with eight GPUs and two CPUs; inside the server, the eight GPUs are connected via NVLink. Training jobs are scheduled using MAST (Choudhury et al., 2024), Meta's global-scale training scheduler.

Storage: Tectonic (Pan et al., 2021), Meta's general-purpose distributed file system, is used to build the storage fabric for Llama 3 pre-training (Battey and Gupta, 2024). It offers 240 PB of storage across 7,500 servers equipped with SSDs, and supports a sustained throughput of 2 TB/s and a peak throughput of 7 TB/s. A major challenge is supporting the highly bursty checkpoint writes that saturate the storage fabric for short durations. Checkpointing saves each GPU's model state, ranging from 1 MB to 4 GB per GPU, for recovery and debugging. We aim to minimize GPU pause time during checkpointing and to increase checkpoint frequency, reducing the amount of work lost after a recovery.

Networking: Llama 3 405B uses an RDMA over Converged Ethernet (RoCE) fabric based on the Arista 7800 and Minipack2 Open Compute Project (OCP) rack switches. Smaller models in the Llama 3 family were trained using an Nvidia Quantum2 Infiniband fabric. Both the RoCE and Infiniband clusters use 400 Gbps interconnects between GPUs. Despite the underlying differences in the network technology of these clusters, we tune both of them to provide equivalent performance for these large training workloads. We elaborate further on our RoCE network below, since we fully own its design.

- Network topology: Our RoCE-based AI cluster comprises 24,000 GPUs (Footnote 5) connected by a three-layer Clos network (Lee et al., 2024). At the bottom layer, each rack hosts 16 GPUs split between two servers and connected by a single Minipack2 top-of-rack (ToR) switch. In the middle layer, 192 such racks are connected by cluster switches to form a pod of 3,072 GPUs with full bisection bandwidth, ensuring no oversubscription. At the top layer, eight such pods within the same datacenter building are connected via aggregation switches to form a cluster of 24,000 GPUs. However, network connectivity at the aggregation layer does not maintain full bisection bandwidth and instead has an oversubscription ratio of 1:7. Both our model parallelism methods (see Section 3.3.2) and the training job scheduler (Choudhury et al., 2024) are optimized to be aware of the network topology, aiming to minimize network communication across pods.

- Load balancing: LLM training produces heavy network traffic that is hard to load balance across all available network paths using traditional methods such as Equal-Cost Multi-Path (ECMP) routing. To address this challenge, we employ two techniques. First, our collective library creates 16 network flows between two GPUs instead of just one, thereby reducing the traffic per flow and providing more flows for load balancing. Second, our Enhanced-ECMP (E-ECMP) protocol effectively balances these 16 flows across different network paths by hashing on additional fields in the RoCE header of packets.

- Congestion control: We use deep-buffer switches in the spine (Gangidi et al., 2024) to accommodate transient congestion and buffering caused by collective communication patterns. This helps limit the impact of persistent congestion and network back pressure caused by slow servers, which is common in training. Finally, better load balancing through E-ECMP significantly reduces the chance of congestion. With these optimizations, we successfully run a 24,000-GPU cluster without traditional congestion control methods such as Data Center Quantized Congestion Notification (DCQCN).
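The topology and load-balancing points above can be illustrated together. The first part is plain arithmetic from the stated rack, pod, and cluster sizes (note that 8 × 3,072 = 24,576, the "24K" cluster). The second part mimics ECMP-style path selection: a hash of header fields modulo the number of paths. The SHA-256 hash, the 8 equal-cost paths, and the `flow_id` field standing in for the extra RoCE header fields are all hypothetical; real switches use hardware hash functions. Reading "1:7" as uplink bandwidth equal to 1/7 of downlink bandwidth is also an assumption.

```python
import hashlib
from collections import Counter

# Topology figures from the text.
GPUS_PER_RACK, RACKS_PER_POD, PODS_PER_CLUSTER = 16, 192, 8
LINK_GBPS = 400
AGG_OVERSUBSCRIPTION = 7  # assumption: "1:7" read as uplink = 1/7 of downlink bandwidth

gpus_per_pod = GPUS_PER_RACK * RACKS_PER_POD          # 3,072
gpus_per_cluster = gpus_per_pod * PODS_PER_CLUSTER    # 24,576
cross_pod_gbps_per_gpu = LINK_GBPS / AGG_OVERSUBSCRIPTION


def pick_path(src_gpu: int, dst_gpu: int, flow_id: int, num_paths: int) -> int:
    """ECMP-style choice: hash the header fields, then take the result modulo the path count.

    flow_id is a hypothetical stand-in for the extra RoCE header fields hashed by E-ECMP.
    """
    key = f"{src_gpu}-{dst_gpu}-{flow_id}".encode()
    return int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % num_paths


def paths_used(src_gpu: int, dst_gpu: int, num_flows: int, num_paths: int = 8) -> Counter:
    """How many of a GPU pair's flows land on each path."""
    return Counter(pick_path(src_gpu, dst_gpu, f, num_paths) for f in range(num_flows))


print(gpus_per_pod, gpus_per_cluster, round(cross_pod_gbps_per_gpu, 1))
print("1 flow   ->", dict(paths_used(0, 4096, num_flows=1)))
print("16 flows ->", dict(paths_used(0, 4096, num_flows=16)))
```

With a single flow, all of a GPU pair's traffic is pinned to one path no matter how good the hash is; splitting it into 16 flows gives the hash something to spread.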

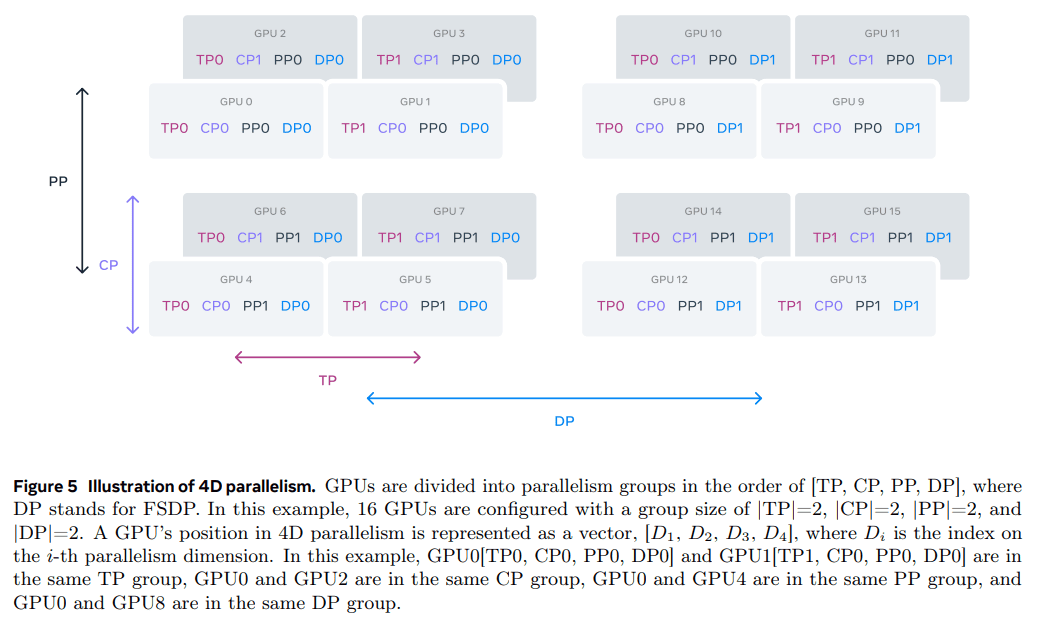

3.3.2 Parallelism for Model Scaling

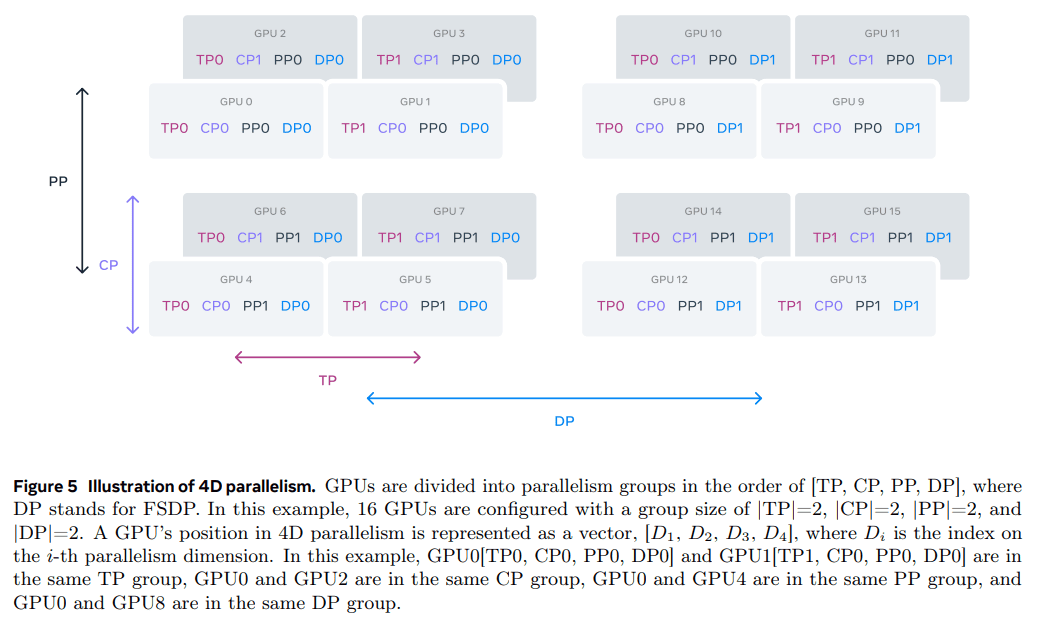

To scale training for our largest models, we shard the model using 4D parallelism, a scheme that combines four different types of parallelism. This approach efficiently distributes computation across many GPUs and ensures that each GPU's model parameters, optimizer states, gradients, and activations fit in its HBM. Our 4D parallelism implementation (as shown in … et al. (2020); Ren et al. (2021); Zhao et al. (2023b)), which shards the model, optimizer, and gradients, also implements data parallelism, which processes data in parallel on multiple GPUs and synchronizes after each training step. Our use of FSDP for Llama 3 shards the optimizer states and gradients, but for model shards we do not reshard after the forward computation, to avoid an extra all-gather communication during backward passes.
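The GPU-grouping example given later in this section (GPU0 and GPU1 share a TP group, GPU0 and GPU2 a CP group, GPU0 and GPU4 a PP group, GPU0 and GPU8 a DP group) is consistent with assigning ranks in the order [TP, CP, PP, DP] with TP varying fastest. The sketch below reproduces that mapping, assuming a group size of 2 on every dimension (16 GPUs); the function names are hypothetical and this is not the training framework's actual API.

```python
ORDER = ("tp", "cp", "pp", "dp")  # innermost (fastest-varying) dimension first


def rank_to_coords(rank: int, sizes: dict[str, int]) -> dict[str, int]:
    """Position of a global GPU rank along each parallelism dimension."""
    coords = {}
    for dim in ORDER:
        coords[dim] = rank % sizes[dim]
        rank //= sizes[dim]
    return coords


def same_group(rank_a: int, rank_b: int, dim: str, sizes: dict[str, int]) -> bool:
    """Two ranks share a `dim` group if they agree on every other dimension."""
    a, b = rank_to_coords(rank_a, sizes), rank_to_coords(rank_b, sizes)
    return all(a[d] == b[d] for d in ORDER if d != dim)


def group_members(rank: int, dim: str, sizes: dict[str, int]) -> list[int]:
    """All ranks in the same `dim` group as `rank`."""
    world = 1
    for d in ORDER:
        world *= sizes[d]
    return [r for r in range(world) if same_group(rank, r, dim, sizes)]


SIZES = {"tp": 2, "cp": 2, "pp": 2, "dp": 2}  # assumed: 16 GPUs, group size 2 everywhere
for dim in ORDER:
    print(dim, group_members(0, dim, SIZES))
```

Placing TP innermost keeps the most communication-heavy groups on neighboring ranks, which typically sit in the same NVLink-connected server.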

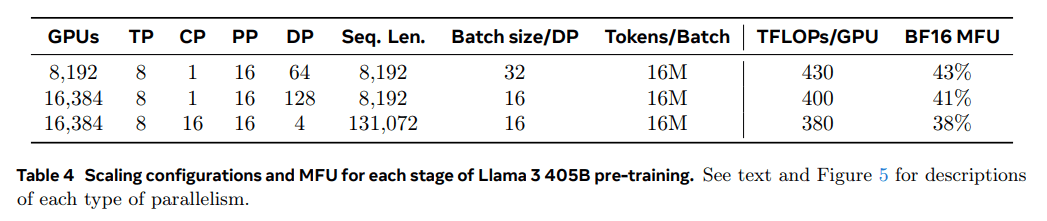

GPU utilization. Through careful tuning of the parallelism configuration, hardware, and software, we achieve an overall BF16 Model FLOPs Utilization (MFU; Chowdhery et al. (2023)) of 38-43% for the configurations shown in Table 4. The slight drop in MFU from 43% on 8K GPUs with DP=64 to 41% on 16K GPUs with DP=128 is due to the lower batch size per DP group needed to keep the number of global tokens per batch constant during training.

Pipeline parallelism improvements. We encountered several challenges with existing implementations:

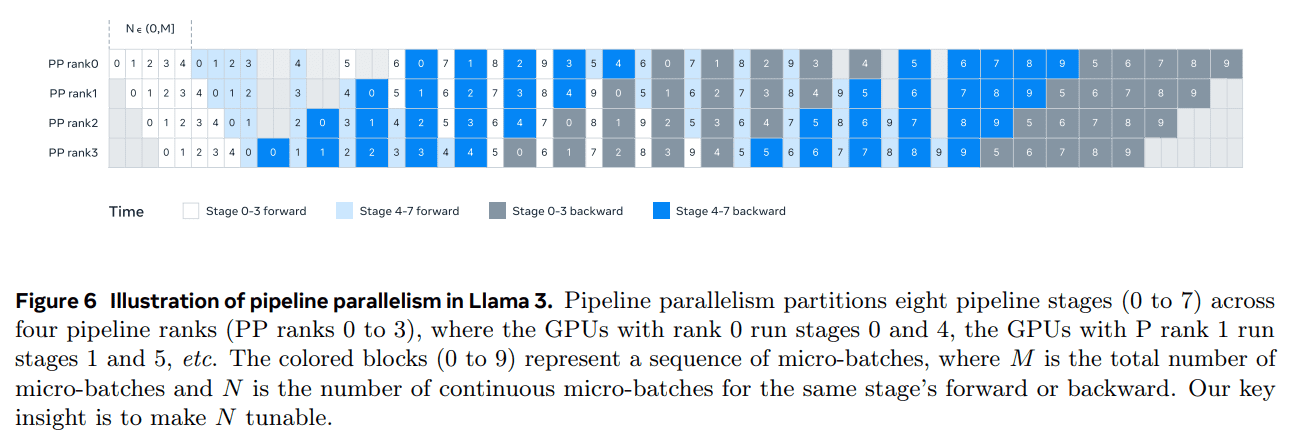

- Batch size limitations.Current implementations place a limit on the batch size supported per GPU, requiring it to be divisible by the number of pipeline stages. For the example in Fig. 6, pipeline parallelism for depth-first scheduling (DFS) (Narayanan et al. (2021)) requires N = PP = 4, while breadth-first scheduling (BFS; Lamy-Poirier (2023)) requires N = M, where M is the total number of microbatches and N is the number of consecutive microbatches in the same stage in the forward or reverse direction. However, pre-training usually requires flexibility in batch sizing.

- Memory imbalance.Existing pipeline parallel implementations lead to unbalanced resource consumption. The first stage consumes more memory due to embedding and warming up micro batches.

- Computation imbalance. After the last layer of the model, we need to compute the output and the loss, which makes this stage the execution-latency bottleneck.

Figure caption: GPUs are indexed by their position along each parallelism dimension, [TP, CP, PP, DP], where Di is the index of the i-th parallelism dimension. In this example, GPU0[TP0, CP0, PP0, DP0] and GPU1[TP1, CP0, PP0, DP0] are in the same TP group, GPU0 and GPU2 are in the same CP group, GPU0 and GPU4 are in the same PP group, and GPU0 and GPU8 are in the same DP group.

To address these issues, we modify our pipeline schedule, as shown in Figure 6, so that N can be set flexibly (in this case N = 5) and an arbitrary number of microbatches can be run in each batch. This allows us to:

(1) run fewer microbatches than the number of stages when the batch size is constrained; or

(2) run more microbatches to hide point-to-point communication, finding a sweet spot between the depth-first schedule (DFS) and the breadth-first schedule (BFS) for the best communication and memory efficiency.

To balance the pipeline, we remove one Transformer layer each from the first and the last stages. As a result, the first model chunk on the first stage contains only the embedding, and the last model chunk on the last stage contains only the output projection and loss computation.
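The balancing rule above can be sketched as a layer-to-chunk assignment. This is a minimal illustration under assumptions not stated in the text: an interleaved schedule with V model chunks per pipeline rank, exactly one Transformer layer per interior chunk, and round-robin placement of virtual stage s on rank s mod PP. The helper name and chunk labels are hypothetical.

```python
def assign_virtual_stages(num_layers: int, pp: int, v: int) -> dict[int, list[list[str]]]:
    """Map each pipeline rank to its V model chunks (hypothetical helper).

    Virtual stage 0 holds only the embedding and the last virtual stage holds
    only the output projection + loss. Every other virtual stage holds one
    Transformer layer, so this sketch requires num_layers == pp * v - 2.
    """
    num_chunks = pp * v
    if num_layers != num_chunks - 2:
        raise ValueError("this sketch assumes num_layers == pp * v - 2")
    chunks: list[list[str]] = []
    for s in range(num_chunks):
        if s == 0:
            chunks.append(["embedding"])
        elif s == num_chunks - 1:
            chunks.append(["output_projection", "loss"])
        else:
            chunks.append([f"layer_{s - 1}"])
    # Interleaved placement: virtual stage s runs on pipeline rank s % pp.
    return {rank: [chunks[s] for s in range(rank, num_chunks, pp)] for rank in range(pp)}


layout = assign_virtual_stages(num_layers=6, pp=4, v=2)
print(layout[0])  # [['embedding'], ['layer_3']]
print(layout[3])  # [['layer_2'], ['output_projection', 'loss']]
```

Every rank ends up with the same number of chunks; the first and last ranks each trade one Transformer layer for the embedding or the output and loss computation.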

To reduce pipeline bubbles, we use an interleaved schedule (Narayanan et al., 2021) with V pipeline stages on one pipeline rank. The overall pipeline bubble ratio is $\frac{PP-1}{V \cdot M}$. In addition, we adopt asynchronous point-to-point communication in PP, which considerably speeds up training, especially when the document mask introduces extra computation imbalance. We enable TORCH_NCCL_AVOID_RECORD_STREAMS to reduce memory usage from asynchronous point-to-point communication. Finally, to reduce memory cost, based on detailed memory allocation profiling, we proactively deallocate tensors that will not be used in future computation, including the input and output tensors of each pipeline stage. With these optimizations, we could pre-train Llama 3 on sequences of 8K tokens without activation checkpointing.
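The bubble ratio (PP − 1) / (V × M) from this paragraph, evaluated exactly for a few illustrative settings. The PP, V, and M values are hypothetical and not the Llama 3 configuration.

```python
from fractions import Fraction


def bubble_ratio(pp: int, v: int, m: int) -> Fraction:
    """Pipeline bubble ratio of an interleaved schedule: (PP - 1) / (V * M)."""
    return Fraction(pp - 1, v * m)


print(bubble_ratio(pp=4, v=1, m=8))   # 3/8   (no interleaving)
print(bubble_ratio(pp=4, v=2, m=8))   # 3/16  (two chunks per rank)
print(bubble_ratio(pp=4, v=2, m=16))  # 3/32  (twice as many microbatches)
```

Raising either V or M shrinks the bubble; raising V does so at the cost of more point-to-point messages, since activations cross rank boundaries more often.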

Context parallelism for long sequences. We use context parallelism (CP) to improve memory efficiency when scaling the context length of Llama 3 and to enable training on extremely long sequences of up to 128K tokens. In CP, we partition across the sequence dimension: we split the input sequence into 2 × CP chunks so that each CP rank receives two chunks for better load balancing. The i-th CP rank receives both the i-th and the (2 × CP − 1 − i)-th chunks.
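A minimal sketch of the chunk assignment described above, showing why pairing chunk i with chunk 2 × CP − 1 − i balances work under a causal mask. The cost model is a coarse assumption: queries in chunk c attend to chunks 0..c, so we count the work as c + 1 chunk-units. Function names are hypothetical.

```python
def cp_chunks_for_rank(rank: int, cp: int) -> tuple[int, int]:
    """CP rank i owns chunk i and its mirror chunk 2*CP - 1 - i."""
    return rank, 2 * cp - 1 - rank


def causal_work(chunk: int) -> int:
    """Coarse chunk-level cost: queries in chunk c attend to chunks 0..c."""
    return chunk + 1


def split_sequence(tokens: list, cp: int) -> dict[int, list]:
    """Split a sequence into 2*CP equal chunks and hand two to each rank."""
    n = 2 * cp
    if len(tokens) % n:
        raise ValueError("this sketch assumes the sequence length divides into 2*CP chunks")
    size = len(tokens) // n
    chunks = [tokens[j * size:(j + 1) * size] for j in range(n)]
    out = {}
    for r in range(cp):
        a, b = cp_chunks_for_rank(r, cp)
        out[r] = chunks[a] + chunks[b]
    return out


CP = 4
for r in range(CP):
    a, b = cp_chunks_for_rank(r, CP)
    print(r, (a, b), causal_work(a) + causal_work(b))
# 0 (0, 7) 9
# 1 (1, 6) 9
# 2 (2, 5) 9
# 3 (3, 4) 9
```

Every rank gets (i + 1) + (2 × CP − i) = 2 × CP + 1 units, whereas a naive contiguous split would give the last rank far more causal-attention work than the first.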

Unlike existing CP implementations that overlap communication and computation in a ring-like structure (Liu et al., 2023a), our CP implementation adopts an all-gather-based method: we first all-gather the key (K) and value (V) tensors, and then compute the attention output for the local query (Q) tensor chunk. Although the all-gather communication latency is exposed on the critical path, we still adopt this approach for two main reasons:

(1) it is easier and more flexible to support different types of attention masks, such as the document mask, in all-gather-based CP attention; and

(2) the exposed all-gather latency is small, because the communicated K and V tensors are much smaller than the Q tensor owing to the use of GQA (Ainslie et al., 2023). Hence, the time complexity of the attention computation is an order of magnitude larger than that of the all-gather (O(S²) versus O(S), where S denotes the sequence length in the full causal mask), which makes the all-gather overhead negligible.
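The all-gather scheme can be checked numerically in a single process. This is a minimal sketch, not the production kernel. It uses one attention head, does not model GQA, and simulates the all-gather by collecting every rank's K/V rows and restoring position order. Each rank keeps its own query rows and owns chunks i and 2 × CP − 1 − i. The result should match unsharded causal attention exactly, because each query runs the same arithmetic over the same keys.

```python
import math
import random


def causal_attention(queries, q_positions, keys, values):
    """Single-head softmax attention. keys/values cover the full sequence in
    position order; a query at absolute position p attends to positions 0..p."""
    d = len(queries[0])
    outputs = []
    for q, p in zip(queries, q_positions):
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys[: p + 1]]
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]
        z = sum(weights)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values[: p + 1])) / z
                        for j in range(len(values[0]))])
    return outputs


def shard_positions(rank, cp, chunk_len):
    """Absolute positions owned by a CP rank: chunk `rank` and chunk 2*CP-1-rank."""
    positions = []
    for c in (rank, 2 * cp - 1 - rank):
        positions.extend(range(c * chunk_len, (c + 1) * chunk_len))
    return positions


def all_gather_cp_attention(q, k, v, cp):
    """Simulate all-gather-based CP attention for every rank inside one process."""
    chunk_len = len(q) // (2 * cp)
    shards = {r: shard_positions(r, cp, chunk_len) for r in range(cp)}
    # Each rank starts with only its own K/V rows, tagged with absolute positions.
    local_kv = {r: [(p, k[p], v[p]) for p in shards[r]] for r in range(cp)}
    # All-gather: every rank receives all K/V shards and restores position order.
    gathered = sorted((row for r in range(cp) for row in local_kv[r]), key=lambda row: row[0])
    full_k = [row[1] for row in gathered]
    full_v = [row[2] for row in gathered]
    out = [None] * len(q)
    for r in range(cp):
        local_q = [q[p] for p in shards[r]]  # Q is never communicated
        for p, o in zip(shards[r], causal_attention(local_q, shards[r], full_k, full_v)):
            out[p] = o
    return out


rng = random.Random(0)
S, D, CP = 8, 4, 2
q, k, v = ([[rng.uniform(-1, 1) for _ in range(D)] for _ in range(S)] for _ in range(3))
reference = causal_attention(q, list(range(S)), k, v)
sharded = all_gather_cp_attention(q, k, v, CP)
max_err = max(abs(a - b) for ra, rb in zip(reference, sharded) for a, b in zip(ra, rb))
print(max_err)  # 0.0
```

Supporting a different mask, such as a document mask, only changes which gathered keys a local query may attend to. This illustrates the flexibility argument in point (1).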

Network-aware parallelism configuration. The order of parallelism dimensions, [TP, CP, PP, DP], is optimized for network communication. The innermost parallelism requires the highest network bandwidth and lowest latency, and is therefore usually constrained to within the same server. The outermost parallelism may span a multi-hop network and should tolerate higher network latency. Therefore, based on the requirements for network bandwidth and latency, we order the parallelism dimensions as [TP, CP, PP, DP]. DP (i.e., FSDP) is the outermost parallelism because it can tolerate longer network latency by asynchronously prefetching sharded model weights and reducing gradients. Identifying the optimal parallelism configuration with minimal communication overhead while avoiding GPU memory overflow is challenging. We developed a memory consumption estimator and a performance-projection tool, which helped us explore various parallelism configurations, project overall training performance, and identify memory gaps effectively.
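The [TP, CP, PP, DP] ordering can be expressed as a mixed-radix decomposition of the global rank, with TP varying fastest. This is a minimal sketch. The group sizes TP = CP = PP = DP = 2 (16 GPUs) are our inference, chosen because they reproduce the group memberships listed in the figure caption (GPU0 with GPU1 for TP, GPU2 for CP, GPU4 for PP, GPU8 for DP). They are not stated as the production configuration, and the helper names are hypothetical.

```python
ORDER = ("tp", "cp", "pp", "dp")  # innermost (fastest-varying) dimension first


def coords(rank: int, sizes: dict[str, int]) -> dict[str, int]:
    """Decompose a global rank into per-dimension indices, TP innermost."""
    out = {}
    for dim in ORDER:
        out[dim] = rank % sizes[dim]
        rank //= sizes[dim]
    return out


def group_of(rank: int, dim: str, sizes: dict[str, int]) -> list[int]:
    """Ranks that share every coordinate with `rank` except along `dim`."""
    world = 1
    for d in ORDER:
        world *= sizes[d]
    me = coords(rank, sizes)
    return [r for r in range(world)
            if all(coords(r, sizes)[d] == me[d] for d in ORDER if d != dim)]


SIZES = {"tp": 2, "cp": 2, "pp": 2, "dp": 2}  # assumed sizes: 16 GPUs
print(coords(1, SIZES))          # {'tp': 1, 'cp': 0, 'pp': 0, 'dp': 0}
print(group_of(0, "tp", SIZES))  # [0, 1]
print(group_of(0, "cp", SIZES))  # [0, 2]
print(group_of(0, "pp", SIZES))  # [0, 4]
print(group_of(0, "dp", SIZES))  # [0, 8]
```

Because TP peers are adjacent ranks, they land on the same server whenever consecutive ranks are packed onto one host. DP peers are the farthest apart and are the most likely to cross the multi-hop network.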

Numerical stability. By comparing training loss across different parallelism setups, we fixed several numerical issues that affect training stability. To ensure training convergence, we use FP32 gradient accumulation during the backward computation over multiple microbatches, and we also reduce-scatter gradients in FP32 across data-parallel workers in FSDP. For intermediate tensors that are used multiple times in the forward computation, such as the vision encoder outputs, the backward gradients are also accumulated in FP32.
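A minimal sketch of why low-precision gradient accumulation is risky. Python has no bfloat16 type, so BF16 is emulated here by rounding an FP32 bit pattern to its upper 16 bits with round-to-nearest-even; NaN and Inf are not handled. The scenario is illustrative: an accumulator already holding 1.0 receives 1,000 micro-batch contributions of 0.001.

```python
import struct


def to_fp32(x: float) -> float:
    """Round a Python float (FP64) to the nearest FP32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def to_bf16(x: float) -> float:
    """Emulate BF16: keep the top 16 bits of the FP32 pattern, round-to-nearest-even.
    NaN/Inf handling is omitted in this sketch."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits += 0x7FFF + ((bits >> 16) & 1)
    bits &= 0xFFFF0000
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def accumulate(start: float, grads: list[float], cast) -> float:
    """Accumulate micro-batch gradients, rounding to `cast` precision after each add."""
    acc = cast(start)
    for g in grads:
        acc = cast(acc + cast(g))
    return acc


micro_batch_grads = [1e-3] * 1000
print(accumulate(1.0, micro_batch_grads, to_bf16))  # 1.0: every small update is rounded away
print(accumulate(1.0, micro_batch_grads, to_fp32))  # close to 2.0
```

BF16 keeps only 8 significand bits, so near 1.0 its spacing is 2⁻⁷ ≈ 0.0078. An update of 0.001 falls below half a step and vanishes; FP32 accumulation keeps it.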

3.3.3 Collective communications

Our collective communication library for Llama 3 is based on a fork of Nvidia's NCCL library called NCCLX. NCCLX significantly improves the performance of NCCL, especially on higher-latency networks. Recall that the order of parallelism dimensions is [TP, CP, PP, DP], where DP corresponds to FSDP. The outermost parallelism dimensions, PP and DP, may communicate over a multi-hop network with latencies of up to tens of microseconds. The original NCCL collectives (all-gather and reduce-scatter in FSDP, and point-to-point in PP) require data chunking and staged data copies. This approach incurs several inefficiencies, including:

- exchanging a large number of small control messages over the network to facilitate data transfer;

- extra memory-copy operations; and

- extra GPU cycles spent on communication.

For Llama 3 training, we address a subset of these inefficiencies by tuning chunking and data transfer to fit our network latencies, which can be as high as tens of microseconds in a large cluster. We also allow small control messages to traverse our network at a higher priority, in particular to avoid head-of-line blocking in deep-buffer core switches.
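One standard way to reason about why chunking must adapt to latency is the bandwidth-delay product. To keep a link busy, roughly bandwidth × round-trip time bytes must be in flight, so a multi-hop path with tens of microseconds of latency needs much more outstanding data than a single hop. This is a generic illustration, not NCCLX's tuning logic. The 400 Gbit/s link speed, the latencies, and the 256 KiB chunk size are hypothetical.

```python
def bytes_in_flight(link_gbps: int, rtt_us: int) -> int:
    """Bandwidth-delay product in bytes. 1 Gbit/s carries 1,000 bits per microsecond."""
    return link_gbps * 1_000 * rtt_us // 8


def min_chunks_in_flight(chunk_bytes: int, link_gbps: int, rtt_us: int) -> int:
    """Chunks that must be pipelined concurrently to cover one round trip."""
    bdp = bytes_in_flight(link_gbps, rtt_us)
    return -(-bdp // chunk_bytes)  # ceiling division


print(bytes_in_flight(400, 2))                    # 100000 bytes on a short path
print(bytes_in_flight(400, 20))                   # 1000000 bytes on a multi-hop path
print(min_chunks_in_flight(256 * 1024, 400, 20))  # 4
```

If fewer bytes than this are outstanding, the link idles while waiting for acknowledgements or control messages. This is one reason small, latency-bound control traffic benefits from higher priority.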

Our ongoing work for future versions of Llama includes deeper changes to NCCLX to fully address all of the above issues.

3.3.4 Reliability and operational challenges

The complexity and potential failure scenarios of 16K-GPU training surpass those of much larger CPU clusters that we have operated. Moreover, the synchronous nature of training makes it less fault-tolerant: a single GPU failure may require restarting the entire job. Despite these challenges, for Llama 3 we achieved higher than 90% effective training time while supporting automated cluster maintenance, such as firmware and Linux kernel upgrades (Vigraham and Leonhardi, 2024), which led to at least one training interruption per day.

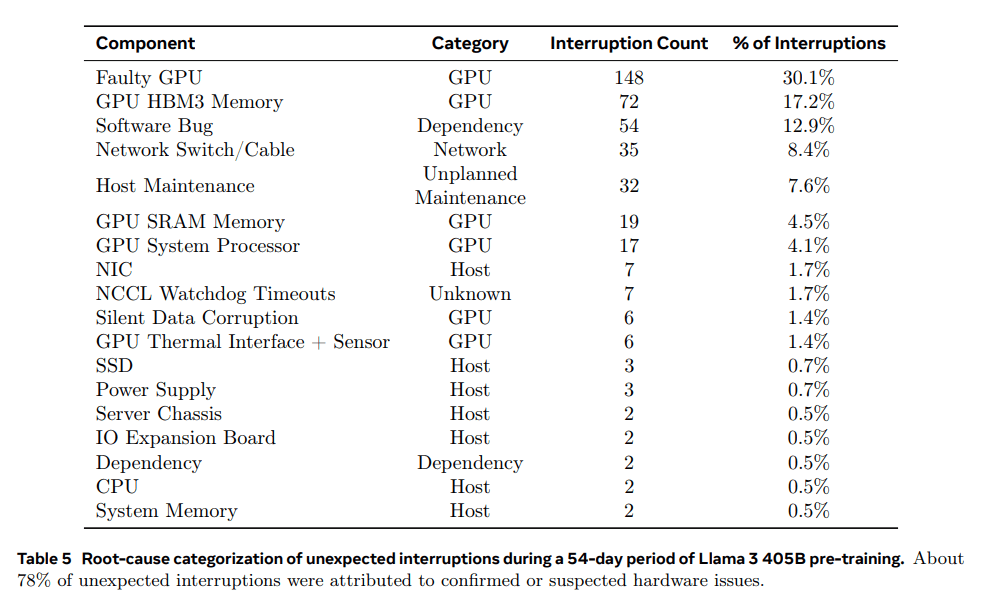

Effective training time is the fraction of elapsed time spent on useful training. During a 54-day snapshot period of pre-training, we experienced a total of 466 job interruptions. Of these, 47 were planned interruptions due to automated maintenance operations, such as firmware upgrades, or operator-initiated operations, such as configuration or dataset updates. The remaining 419 were unexpected interruptions, which are classified in Table 5. Approximately 78% of the unexpected interruptions were attributed to confirmed hardware issues, such as GPU or host component failures, or to suspected hardware-related issues, such as silent data corruption and unplanned individual host maintenance events. GPU issues were the largest category, accounting for 58.7% of all unexpected issues. Despite the large number of failures, significant manual intervention was required only three times during this period; the remaining issues were handled by automation.

To increase effective training time, we reduced job startup and checkpointing time and developed tools for fast diagnosis and problem resolution. We make extensive use of PyTorch's built-in NCCL flight recorder (Ansel et al., 2024), a feature that captures collective metadata and stack traces into a ring buffer and therefore lets us diagnose hangs and performance issues quickly at scale, particularly with regard to NCCLX. Using it, we efficiently record every communication event and the duration of each collective operation, and we automatically dump tracing data on an NCCLX watchdog or heartbeat timeout. Through online configuration changes (Tang et al., 2015), we selectively enable more computationally intensive tracing operations and metadata collection as needed, without a code release or job restart. Debugging issues in large-scale training is complicated by the mixed use of NVLink and RoCE in our network. Data transfer over NVLink typically occurs through load/store operations issued by CUDA kernels, and a failure of either the remote GPU or the NVLink connection often manifests as a stalled load/store operation within a CUDA kernel, without a clear error code being returned. NCCLX improves the speed and accuracy of failure detection and localization through a tight co-design with PyTorch, which allows PyTorch to access NCCLX's internal state and track relevant information. While stalls due to NVLink failures cannot be completely prevented, our system monitors the state of the communication library and automatically times out when such a stall is detected. Additionally, NCCLX traces the kernel and network activity of each NCCLX communication and provides a snapshot of the failing NCCLX collective's internal state, including finished and pending data transfers between all ranks. We analyze this data to debug NCCLX scaling issues.
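The ring-buffer-plus-timeout idea behind the flight recorder and watchdog can be sketched in a few lines. This is a hypothetical toy class, not PyTorch's flight recorder API or NCCLX's implementation. It records the most recent collectives in a bounded buffer and returns a dump of the buffer once any pending collective exceeds a timeout. Times are passed in explicitly so the behavior is deterministic.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class CollectiveEvent:
    seq: int
    op: str                       # e.g. "all_gather", "reduce_scatter"
    start_s: float
    end_s: Optional[float] = None  # None while the collective is still pending


class CollectiveRecorder:
    """Hypothetical bounded log of recent collectives with a stall check."""

    def __init__(self, capacity: int, timeout_s: float):
        self.events = deque(maxlen=capacity)  # oldest entries drop off when full
        self.timeout_s = timeout_s
        self._next_seq = 0

    def start(self, op: str, now: float) -> CollectiveEvent:
        ev = CollectiveEvent(self._next_seq, op, now)
        self._next_seq += 1
        self.events.append(ev)
        return ev

    def finish(self, ev: CollectiveEvent, now: float) -> None:
        ev.end_s = now

    def check(self, now: float) -> Optional[list]:
        """Return a dump of the buffer if any pending collective exceeded the timeout."""
        stalled = [e for e in self.events
                   if e.end_s is None and now - e.start_s > self.timeout_s]
        if not stalled:
            return None
        return [(e.seq, e.op, e.start_s, e.end_s) for e in self.events]


rec = CollectiveRecorder(capacity=4, timeout_s=10.0)
a = rec.start("all_gather", now=0.0)
rec.finish(a, now=0.5)
rec.start("reduce_scatter", now=1.0)  # never finishes: a simulated NVLink stall
print(rec.check(now=5.0))   # None: still within the timeout
print(rec.check(now=12.0))  # [(0, 'all_gather', 0.0, 0.5), (1, 'reduce_scatter', 1.0, None)]
```

The dump shows completed and pending operations side by side. In the real system, the equivalent per-rank snapshots are what make it possible to localize which rank or link stalled.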

Sometimes, hardware issues cause stragglers that still run but are slow, which makes them hard to detect. Even a single straggler can slow down thousands of other GPUs, often showing up as communication that functions normally but slowly. We developed tools to prioritize potentially problematic communications from selected process groups. By investigating just a few top suspects, we were usually able to identify the stragglers effectively.

One interesting observation is the impact of environmental factors on large-scale training performance. For Llama 3 405B, we noted a diurnal throughput variation of 1-2% depending on the time of day. This fluctuation results from higher mid-day temperatures affecting GPU dynamic voltage and frequency scaling. During training, tens of thousands of GPUs may increase or decrease power consumption at the same time, for example, because all GPUs are waiting for a checkpoint or a collective communication to finish, or because the entire training job is starting up or shutting down. When this happens, it can cause near-instantaneous fluctuations in data-center power consumption on the order of tens of megawatts, stretching the limits of the power grid. This is an ongoing challenge as we scale training for future, even larger Llama models.

3.4 Training recipe

The pre-training recipe for Llama 3 405B contains three main stages:

(1) initial pre-training, (2) long-context pre-training, and (3) annealing. Each of these stages is described below. We use similar recipes to pre-train the 8B and 70B models.

3.4.1 Initial pre-training

We pre-train Llama 3 405B using a cosine learning rate schedule with a peak learning rate of 8 × 10⁻⁵, a linear warm-up of 8,000 steps, and decay to 8 × 10⁻⁷ over 1,200,000 training steps. To improve training stability, we use a lower batch size early in training and increase it later to improve efficiency. Specifically, we start with a batch size of 4M tokens and a sequence length of 4,096 tokens. After pre-training on 252M tokens, we double these to a batch size of 8M tokens and a sequence length of 8,192 tokens. After pre-training on 2.87T tokens, we double the batch size again to 16M tokens. We found this training recipe to be very stable: we observed few loss spikes and did not need interventions to correct for model training divergence.
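A minimal sketch of the two schedules described above. Assumptions not fixed by the text: the 8,000 warm-up steps are counted within the 1,200,000 total steps; the sequence length stays at 8,192 after the second doubling (the text only says the batch size doubles); and batch sizes are expressed in millions of tokens, as written. The function names are hypothetical.

```python
import math

PEAK_LR, MIN_LR = 8e-5, 8e-7
WARMUP_STEPS, TOTAL_STEPS = 8_000, 1_200_000


def learning_rate(step: int) -> float:
    """Linear warm-up to PEAK_LR, then cosine decay to MIN_LR at TOTAL_STEPS."""
    if step < WARMUP_STEPS:
        return PEAK_LR * step / WARMUP_STEPS
    progress = min(1.0, (step - WARMUP_STEPS) / (TOTAL_STEPS - WARMUP_STEPS))
    return MIN_LR + 0.5 * (PEAK_LR - MIN_LR) * (1.0 + math.cos(math.pi * progress))


def batch_config(tokens_seen: float) -> tuple[int, int]:
    """(global batch size in millions of tokens, sequence length) by tokens consumed."""
    if tokens_seen < 252e6:
        return 4, 4_096
    if tokens_seen < 2.87e12:
        return 8, 8_192
    return 16, 8_192


print(batch_config(1e9))  # (8, 8192)
```

Tying the batch-size changes to tokens consumed rather than to step count keeps the schedule well defined even though the number of tokens per step changes.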

Adjusting the data mix. During training, we made several adjustments to the pre-training data mix to improve model performance on particular downstream tasks. In particular, we increased the proportion of non-English data to improve the multilingual performance of Llama 3. We also upsampled mathematical data to improve the model's mathematical reasoning, added more recent web data in the later stages of pre-training to advance the model's knowledge cutoff, and downsampled subsets of the pre-training data that were later identified as lower quality.

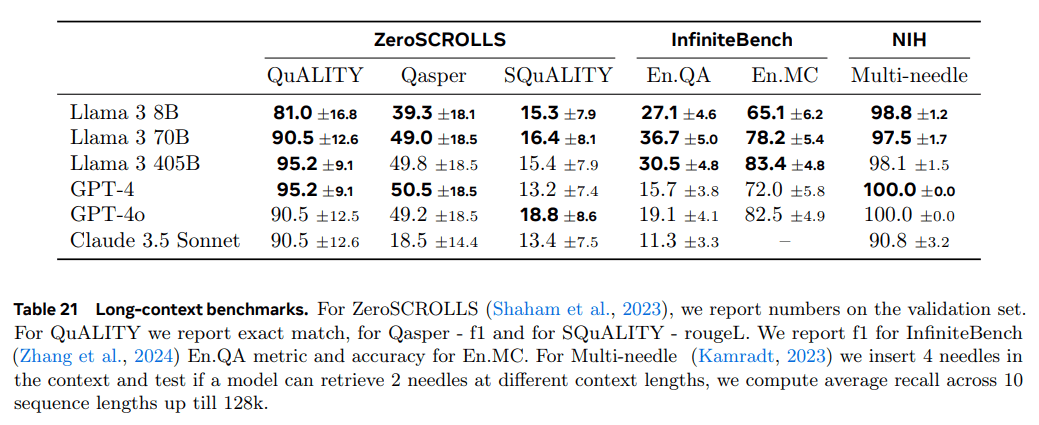

3.4.2 Long-context pre-training

In the final stages of pre-training, we train on long sequences to support context windows of up to 128K tokens. We do not train on long sequences earlier because the compute in self-attention layers grows quadratically with sequence length. We increase the supported context length in increments, continuing pre-training until the model has successfully adapted to each increased context length. We assess successful adaptation by measuring whether:

(1) model performance on short-context evaluations has fully recovered; and

(2) the model perfectly solves "needle in a haystack" tasks up to that length.

In Llama 3 405B pre-training, we increased the context length gradually in six stages, starting from the original 8K context window and ending with the final 128K context window. This long-context pre-training stage used approximately 800B training tokens.
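A quick calculation of the quadratic-growth argument above. It counts only the self-attention score and weighting term, which scales as S² per sequence; the MLP and projection FLOPs, which scale linearly, are ignored. Reading 8K and 128K as 8,192 and 131,072 tokens is an assumption.

```python
def attention_cost_ratio(seq_len: int, base_len: int) -> tuple[float, float]:
    """Self-attention compute relative to base_len under an O(S^2) model.
    Returns (per-sequence ratio, per-token ratio)."""
    length_ratio = seq_len / base_len
    per_sequence = length_ratio ** 2
    per_token = per_sequence / length_ratio  # each sequence holds S tokens
    return per_sequence, per_token


print(attention_cost_ratio(131_072, 8_192))  # (256.0, 16.0)
```

Each token costs about 16× more attention compute at 128K than at 8K. This is why long sequences are deferred to the end of pre-training and limited to a fraction of the token budget.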

3.4.3 Annealing

During pre-training on the final 40M tokens, we linearly annealed the learning rate to 0 while maintaining a context length of 128K tokens. During this annealing phase, we also adjusted the data mix to upsample data sources of very high quality; see Section 3.1.3. Finally, we compute the average of the model checkpoints (Polyak (1991) averaging) saved during annealing to produce the final pre-trained model.
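A minimal sketch of the two annealing ingredients: a linear decay to zero over a token budget, and a uniform average of checkpoints. Checkpoints are modeled as plain dicts of float lists; real ones would be tensors. The starting learning rate of the anneal is not given in the text, so it is a parameter here, and the helper names are hypothetical.

```python
def anneal_lr(tokens_into_anneal: float, anneal_tokens: float, start_lr: float) -> float:
    """Linear decay from start_lr to 0 over the annealing token budget."""
    frac = min(1.0, tokens_into_anneal / anneal_tokens)
    return start_lr * (1.0 - frac)


def average_checkpoints(checkpoints: list[dict[str, list[float]]]) -> dict[str, list[float]]:
    """Uniform (Polyak-style) average of checkpoints with identical parameter names/shapes."""
    n = len(checkpoints)
    return {name: [sum(ckpt[name][i] for ckpt in checkpoints) / n for i in range(len(values))]
            for name, values in checkpoints[0].items()}


ckpts = [{"w": [1.0, 2.0]}, {"w": [3.0, 4.0]}]
print(average_checkpoints(ckpts))  # {'w': [2.0, 3.0]}
```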

4 Post-training

We produce the aligned Llama 3 models by applying several rounds of post-training, i.e., aligning the model with human feedback (Ouyang et al., 2022; Rafailov et al., 2024), on top of a pre-trained checkpoint. Each round of post-training consists of supervised fine-tuning (SFT) followed by Direct Preference Optimization (DPO; Rafailov et al., 2024) on examples collected either through human annotation or synthetic generation. We describe our post-training modeling and data approaches in Sections 4.1 and 4.2, respectively. In Section 4.3, we further detail custom data curation strategies to improve reasoning, coding, factuality, multilingual support, tool use, long context, and precise instruction following.

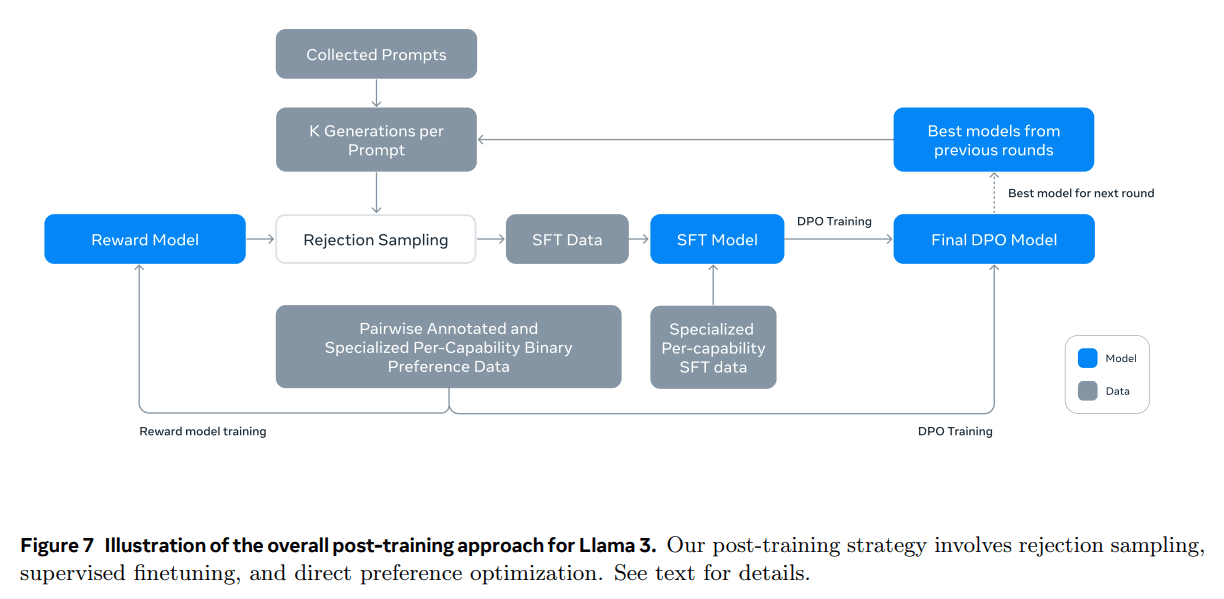

4.1 Modeling

The backbone of our post-training strategy is a reward model and a language model. We first train a reward model on top of the pre-trained checkpoint using human-annotated preference data (see Section 4.1.2). We then fine-tune pre-trained checkpoints with supervised fine-tuning (SFT; see Section 4.1.3) and further align the checkpoints with Direct Preference Optimization (DPO; see Section 4.1.4). This process is illustrated in Figure 7. Unless otherwise noted, our modeling procedure applies to Llama 3 405B, which we refer to as Llama 3 for simplicity.

4.1.1 Chat conversation format

To tune large language models (LLMs) for human-AI interaction, we need to define a chat dialog protocol that lets the model understand human instructions and perform conversational tasks. Compared with its predecessor, Llama 3 has new capabilities such as tool use (Section 4.3.5), which may require generating multiple messages within a single dialog turn and sending them to different locations (e.g., user, ipython). To support this, we design a new multi-message chat protocol that uses various special header and termination tokens. The header tokens indicate the source and destination of each message in a conversation. Similarly, the termination tokens indicate when it is time for the human and the AI to alternate turns.
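A minimal sketch of how a header/termination-token protocol serializes a dialog. The token spellings below are modeled on the publicly released Llama 3 tokenizer, but treat them as assumptions here, as is the `render_dialog` helper. The sketch uses a single termination token and does not model routing tool calls to `ipython`.

```python
from typing import Optional

BEGIN_OF_TEXT = "<|begin_of_text|>"  # assumed token spelling
HEADER_START = "<|start_header_id|>"  # assumed token spelling
HEADER_END = "<|end_header_id|>"      # assumed token spelling
END_OF_TURN = "<|eot_id|>"            # assumed termination token


def render_dialog(messages: list[dict[str, str]], next_role: Optional[str] = None) -> str:
    """Serialize messages; each header names the role/location of its message."""
    parts = [BEGIN_OF_TEXT]
    for msg in messages:
        parts.append(f"{HEADER_START}{msg['role']}{HEADER_END}\n\n{msg['content']}{END_OF_TURN}")
    if next_role is not None:
        # Open a header so the model generates the next message for `next_role`.
        parts.append(f"{HEADER_START}{next_role}{HEADER_END}\n\n")
    return "".join(parts)


dialog = [{"role": "user", "content": "What is 2 + 2?"}]
print(repr(render_dialog(dialog, next_role="assistant")))
```

Because these are special tokens rather than plain text, they can be masked out of the loss separately. The DPO modification in Section 4.1.4 relies on this.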

4.1.2 Reward models

We train a reward model (RM) covering different capabilities on top of the pre-trained checkpoint. The training objective is the same as in Llama 2, except that we remove the margin term from the loss, because we observe diminishing improvements as the data scales. As in Llama 2, we use all of our preference data for reward modeling after filtering out samples with similar responses.

In addition to the standard preference pair of (chosen, rejected) responses, annotators also create a third "edited response" for some prompts, in which the chosen response from the pair is further edited for improvement (see Section 4.2.1). Hence, each preference ranking sample has two or three responses with a clear ranking (edited > chosen > rejected). During training, we concatenate the prompt and multiple responses into a single row, with the responses randomly shuffled. This approximates the standard setup of placing the responses in separate rows and computing their scores, but in our ablations it improves training efficiency without a loss in accuracy.
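A minimal sketch of the reward-model objective. Llama 2's ranking loss is −log σ(r(x, y_c) − r(x, y_r) − m(r)), and the text says Llama 3 drops the margin m(r). How the loss combines the two or three ranked responses is not specified; summing the pairwise loss over every pair implied by the ranking is our assumption, as are the function names.

```python
import math
from itertools import combinations


def neg_log_sigmoid(x: float) -> float:
    """-log(sigmoid(x)), computed stably for large |x|."""
    if x >= 0:
        return math.log1p(math.exp(-x))
    return -x + math.log1p(math.exp(x))


def pairwise_rm_loss(r_better: float, r_worse: float) -> float:
    """Llama 2's reward-model loss with the margin term removed."""
    return neg_log_sigmoid(r_better - r_worse)


def ranking_rm_loss(scores_best_first: list[float]) -> float:
    """Sum of pairwise losses over every pair implied by a ranking such as
    edited > chosen > rejected (aggregation rule is an assumption)."""
    return sum(pairwise_rm_loss(a, b) for a, b in combinations(scores_best_first, 2))


print(ranking_rm_loss([0.0, 0.0]))        # about 0.693 (log 2): the RM cannot tell them apart
print(ranking_rm_loss([2.0, 1.0, -1.0]))  # sums the pairs (edited, chosen), (edited, rejected), (chosen, rejected)
```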

4.1.3 Supervised fine-tuning

The reward model is first used to perform rejection sampling on our human-annotated prompts; the details are described in Section 4.2. We combine this rejection-sampled data with other data sources (including synthetic data) and fine-tune the pre-trained language model using a standard cross-entropy loss on the target tokens, while masking the loss on prompt tokens. More details on the data mix are given in Section 4.2. We refer to this stage as supervised fine-tuning (SFT; Wei et al. 2022a; Sanh et al. 2022; Wang et al. 2022b), even though many of the training targets are model-generated.
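A minimal sketch of cross-entropy with the prompt tokens masked out. Assumptions: `logits_per_pos[t]` already predicts `target_ids[t]` (the usual one-token shift has been applied), and the loss is averaged over the unmasked tokens; the text does not specify the normalization.

```python
import math


def log_softmax(logits: list[float]) -> list[float]:
    """Numerically stable log-softmax over one position's vocabulary logits."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - lse for x in logits]


def sft_loss(logits_per_pos: list[list[float]], target_ids: list[int],
             is_prompt: list[bool]) -> float:
    """Mean cross-entropy over response tokens only; prompt positions are skipped."""
    total, count = 0.0, 0
    for logits, target, masked in zip(logits_per_pos, target_ids, is_prompt):
        if masked:
            continue  # prompt tokens contribute no loss
        total -= log_softmax(logits)[target]
        count += 1
    return total / max(count, 1)


# Toy 3-token vocabulary: position 0 is a prompt token and is ignored even
# though its target (id 1) would be badly predicted.
logits = [[5.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
print(sft_loss(logits, target_ids=[1, 2], is_prompt=[True, False]))  # about 1.0986 (log 3)
```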

Our largest models are fine-tuned with a learning rate of 1e-5 over 8.5K to 9K steps. We found these hyperparameter settings to work well across different rounds and data mixes.

4.1.4 Direct preference optimization

We further train our SFT models with Direct Preference Optimization (DPO; Rafailov et al., 2024) for human preference alignment. For training, we primarily use the most recent batches of preference data, collected using the best-performing models from the previous alignment rounds. As a result, our training data better matches the distribution of the policy model being optimized in each round. We also explored on-policy algorithms such as PPO (Schulman et al., 2017), but found that DPO required less compute for large-scale models and performed better, especially on instruction-following benchmarks such as IFEval (Zhou et al., 2023).

For Llama 3, we use a learning rate of 1e-5 and set the β hyperparameter to 0.1. In addition, we apply the following algorithmic modifications to DPO:

- Masking out formatting tokens in the DPO loss. We mask out special formatting tokens, including the header and termination tokens described in Section 4.1.1, from both the chosen and rejected responses in the loss to stabilize DPO training. We observe that letting these tokens contribute to the loss can lead to undesired model behaviors, such as tail repetition or abruptly generating termination tokens. We hypothesize that this is due to the contrastive nature of the DPO loss: common tokens present in both the chosen and rejected responses create a conflicting learning objective, because the model must increase and decrease the likelihood of these tokens simultaneously.

- Regularization with NLL loss. We add a negative log-likelihood (NLL) loss term on the chosen sequences with a scaling coefficient of 0.2, similar to Pang et al. (2024). This further stabilizes DPO training by maintaining the desired formatting for generation and by preventing the log probability of the chosen responses from decreasing (Pang et al., 2024; Pal et al., 2024).
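A minimal sketch of the per-example objective implied by the two modifications above. It assumes per-token log-probabilities are already available from the policy and from a frozen reference model. β = 0.1 and the 0.2 NLL coefficient come from the text. Normalizing the NLL term by the number of unmasked chosen tokens is our assumption, as are the function names.

```python
import math

BETA = 0.1      # DPO temperature, from the text
NLL_COEF = 0.2  # NLL regularization weight, from the text


def masked_sum(token_logps: list[float], is_format_token: list[bool]) -> float:
    """Sum log-probs over response tokens, skipping header/termination tokens."""
    return sum(lp for lp, fmt in zip(token_logps, is_format_token) if not fmt)


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    return -math.log1p(math.exp(-x)) if x >= 0 else x - math.log1p(math.exp(x))


def dpo_nll_loss(pol_c, ref_c, mask_c, pol_r, ref_r, mask_r) -> float:
    """pol_*/ref_*: per-token log-probs under the policy / reference model for the
    chosen (c) and rejected (r) responses; mask_* is True on formatting tokens."""
    chosen_ratio = masked_sum(pol_c, mask_c) - masked_sum(ref_c, mask_c)
    rejected_ratio = masked_sum(pol_r, mask_r) - masked_sum(ref_r, mask_r)
    dpo = -log_sigmoid(BETA * (chosen_ratio - rejected_ratio))
    # NLL on the chosen response, averaged over its unmasked tokens (assumption).
    n_chosen = max(1, sum(not m for m in mask_c))
    nll = -masked_sum(pol_c, mask_c) / n_chosen
    return dpo + NLL_COEF * nll


# Policy equals reference: the DPO term is log 2; the NLL term is 0.2 * 1.0.
# The -5.0 on the masked termination token does not affect the loss.
loss = dpo_nll_loss([-1.0, -1.0, -5.0], [-1.0, -1.0, -5.0], [False, False, True],
                    [-2.0], [-2.0], [False])
print(loss)  # about 0.893
```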

4.1.5 Model averaging

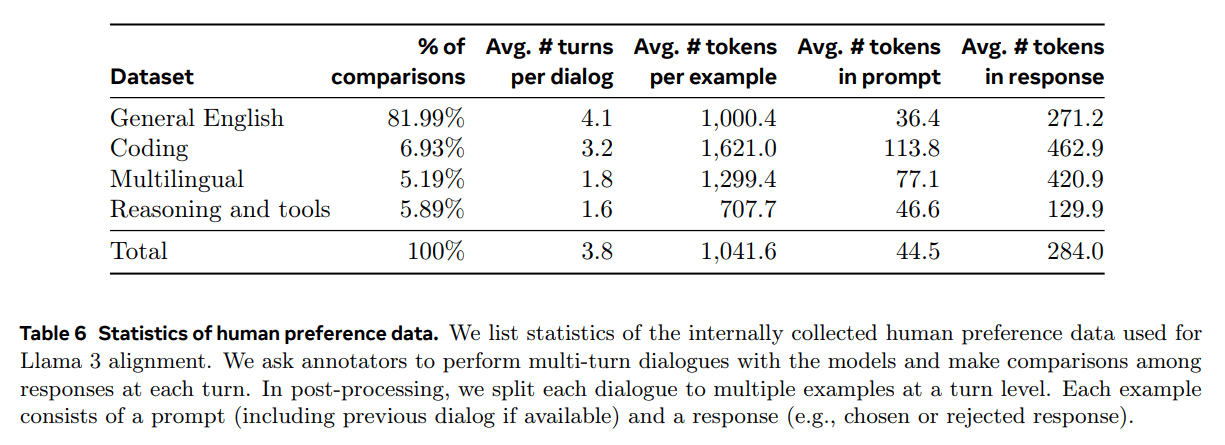

Table 6: Statistics of human preference data. We list statistics of the internally collected human preference data used for Llama 3 alignment. We asked annotators to hold multi-turn dialogs with the models and to compare the responses at each turn. In post-processing, we split each dialog into multiple examples at the turn level; each example consists of a prompt (including the previous dialog, if available) and a response (e.g., a chosen or rejected response).

Finally, we average models obtained from experiments using various data versions or hyperparameters at each RM, SFT, or DPO stage (Izmailov et al. 2019; Wortsman et al. 2022; Li et al. 2022).

4.1.6 Iteration rounds

Following Llama 2, we apply the above methods in six rounds. In each round, we collect new preference annotations and SFT data, sampling synthetic data from the latest models.

4.2 Post-training data

The composition of the post-training data plays a crucial role in the utility and behavior of the language model. In this section, we discuss our annotation procedure and preference data collection (Section 4.2.1), the composition of the SFT data (Section 4.2.2), and methods for data quality control and cleaning (Section 4.2.3).

4.2.1 Preference data

Our preference data annotation process is similar to that of Llama 2. After each round, we deploy multiple models for annotation and sample two responses from two different models for each user prompt. These models can be trained with different data mixes and alignment recipes, which yields different capability strengths (e.g., code expertise) and increases data diversity. We ask annotators to rate the strength of their preference by assigning it to one of four levels: significantly better, better, slightly better, or marginally better.

We also include an editing step after preference ranking to encourage annotators to further improve the preferred response. Annotators either edit the chosen response directly or prompt the model with feedback to refine its own response. Consequently, a portion of our preference data has three ranked responses (edited > chosen > rejected).

Table 6 reports the statistics of the preference annotations we use for Llama 3 training. General English covers multiple subcategories, such as knowledge-based question answering or precise instruction following, that fall outside the scope of specific capabilities. Compared with Llama 2, we observe an increase in the average length of prompts and responses, suggesting that we train Llama 3 on more complex tasks. In addition, we implement a quality analysis and human evaluation process to rigorously assess the collected data, which lets us refine our prompts and provide systematic, actionable feedback to annotators. For example, as Llama 3 improves after each round, we increase prompt complexity accordingly to target areas where the model lags.

In each round of post-training, we use all preference data available at the time for reward modeling, but only the latest batches from each capability for DPO training. For both reward modeling and DPO, we train on samples in which the chosen response is labeled significantly better or better than the rejected one, and we discard samples with similar responses.
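A minimal sketch of the selection rules above. The `collected_round` field and the function name are hypothetical. The sketch simplifies "the latest batches from each capability" to "samples from the most recent round".

```python
from dataclasses import dataclass

KEEP_LEVELS = {"significantly better", "better"}  # levels kept for RM and DPO training


@dataclass
class PreferenceSample:
    prompt: str
    chosen: str
    rejected: str
    level: str            # annotator's preference strength (one of four levels)
    collected_round: int  # hypothetical: post-training round that produced the sample


def build_training_sets(samples: list[PreferenceSample], latest_round: int):
    """Return (reward-model data, DPO data) following the rules described above."""
    usable = [s for s in samples if s.level in KEEP_LEVELS]                # drop near-ties
    rm_data = usable                                                      # all data so far
    dpo_data = [s for s in usable if s.collected_round == latest_round]  # latest batches only
    return rm_data, dpo_data


samples = [
    PreferenceSample("p1", "a", "b", "significantly better", 1),
    PreferenceSample("p2", "a", "b", "marginally better", 2),
    PreferenceSample("p3", "a", "b", "better", 2),
]
rm, dpo = build_training_sets(samples, latest_round=2)
print([s.prompt for s in rm])   # ['p1', 'p3']
print([s.prompt for s in dpo])  # ['p3']
```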

4.2.2 SFT data

Our fine-tuning data comes primarily from the following sources:

- Prompts from our human annotation collection, paired with rejection-sampled responses;

- Synthetic data targeting specific capabilities (see Section 4.3 for details);

- Small amounts of human-curated data (see Section 4.3 for details).

As our post-training rounds progressed, we developed stronger Llama 3 variants and used them to collect larger datasets covering a wide range of complex capabilities. In this section, we discuss the details of the rejection-sampling procedure and the overall composition of our final SFT data mix.

Rejection sampling. In rejection sampling (RS), for each prompt collected during human annotation (Section 4.2.1), we sample K outputs from the latest chat model policy (usually the best-performing checkpoint from the previous post-training iteration, or the best-performing checkpoint for a particular capability) and use our reward model to select the best candidate, consistent with Bai et al. (2022). In later rounds of post-training, we introduce system prompts to steer RS responses toward a desired tone, style, or format, which may differ across capabilities.
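A minimal best-of-K sketch of the rejection-sampling step. The `generate` and `reward` callables are hypothetical stand-ins for the chat policy and the reward model; the optional `system_prompt` mirrors the steering described above. The demo uses a deterministic "policy" that cycles through canned answers and a toy "reward model" that prefers longer answers.

```python
from itertools import cycle
from typing import Callable, Optional


def rejection_sample(prompt: str, k: int,
                     generate: Callable[[str, Optional[str]], str],
                     reward: Callable[[str, str], float],
                     system_prompt: Optional[str] = None) -> tuple[str, float]:
    """Draw K candidates from the current policy; keep the one the RM scores highest."""
    candidates = [generate(prompt, system_prompt) for _ in range(k)]
    best = max(candidates, key=lambda c: reward(prompt, c))
    return best, reward(prompt, best)


canned = cycle(["4", "2 + 2 = 4", "2 + 2 = 4, since adding two and two gives four."])
best, score = rejection_sample(
    "What is 2 + 2?", k=3,
    generate=lambda prompt, system: next(canned),
    reward=lambda prompt, response: float(len(response)))
print(best)  # 2 + 2 = 4, since adding two and two gives four.
```

In practice, K and the reward model's preferences determine how much the selected response improves on a single sample. A reward model that rewards length, as in this toy, is exactly the kind of bias the later quality filters need to catch.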

To improve the efficiency of rejection sampling, we adopt PagedAttention (Kwon et al., 2023). PagedAttention improves memory efficiency through dynamic key-value cache allocation. It supports arbitrary output lengths by dynamically scheduling requests based on the current cache capacity. Unfortunately, this carries the risk of swap-out when memory runs out. To eliminate such swap overhead, we define a maximum output length and perform a request only if sufficient memory is available to hold an output of that length. PagedAttention also lets us share the key-value cache pages of a prompt across all of its corresponding outputs. Together, these changes more than double throughput during rejection sampling.
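A toy model of the two memory rules above: admit a request only if its worst-case output fits, and share the prompt's KV pages across all samples. This is a hypothetical class for page accounting only, not vLLM's API. The page size, pool size, and request shapes are illustrative.

```python
import math


class PagedKVCache:
    """Toy page-level accounting for a paged KV cache (hypothetical, not vLLM's API)."""

    def __init__(self, num_pages: int, page_tokens: int):
        self.free_pages = num_pages
        self.page_tokens = page_tokens

    def pages_for(self, tokens: int) -> int:
        return math.ceil(tokens / self.page_tokens)

    def try_admit(self, prompt_tokens: int, num_outputs: int, max_output_tokens: int) -> bool:
        """Admit a prompt with num_outputs samples only if the worst case fits now.

        Prompt pages are allocated once and shared by all outputs (simplification:
        a partially filled last prompt page is also treated as shared). Each output
        reserves pages for max_output_tokens, so it can never need a swap-out.
        """
        needed = self.pages_for(prompt_tokens) + num_outputs * self.pages_for(max_output_tokens)
        if needed > self.free_pages:
            return False  # leave the request queued instead of risking swap-out
        self.free_pages -= needed
        return True


cache = PagedKVCache(num_pages=100, page_tokens=16)
print(cache.try_admit(prompt_tokens=160, num_outputs=10, max_output_tokens=128))  # True: 10 + 10 * 8 = 90 pages
print(cache.free_pages)  # 10
print(cache.try_admit(prompt_tokens=160, num_outputs=10, max_output_tokens=128))  # False: waits
```

Without prompt sharing, the same request would need 10 × (10 + 8) = 180 pages and would not fit at all. Because rejection sampling draws K outputs per prompt, sharing matters most in exactly this setting.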

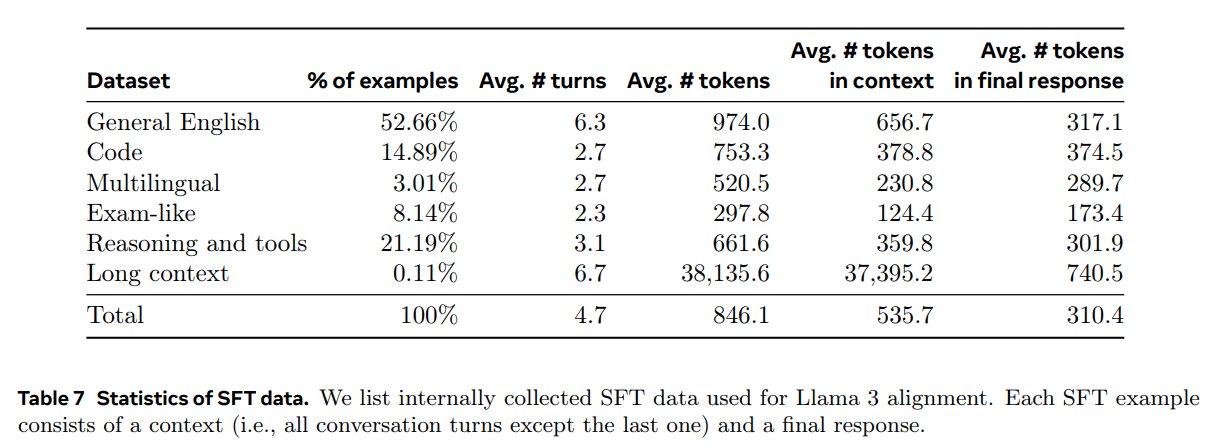

Overall data composition. Table 7 shows statistics for each broad category of data in our "helpfulness" mix. While the SFT and preference data cover overlapping domains, they are curated differently, which yields distinct count statistics. In Section 4.2.3, we describe the techniques used to categorize the topic, complexity, and quality of our data samples. In each round of post-training, we carefully adjust our overall data mix along these axes to tune performance across a wide range of benchmarks. Our final data mix epochs multiple times over some high-quality sources and downsamples others.

4.2.3 Data processing and quality control

Because most of our training data is model-generated, it requires careful cleaning and quality control.

Data cleaning: In the early rounds, we observed a number of undesirable patterns in our data, such as excessive use of emojis or exclamation points. We therefore implement a series of rule-based data removal and modification strategies to filter or clean up problematic data. For example, to mitigate an overly apologetic tone, we identify overused phrases (such as "I'm sorry" or "I apologize") and carefully balance the proportion of such samples in our dataset.
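A minimal sketch of one rule-based rebalancing step: downsample responses that contain apology phrases so they make up at most a chosen fraction of the data. The phrase list comes from the text. The target fraction, the random downsampling policy, and the `rebalance` helper are hypothetical; the text does not say how the proportion was chosen or enforced.

```python
import random
import re

APOLOGY = re.compile(r"\b(I'm sorry|I apologize)\b", re.IGNORECASE)


def rebalance(samples: list[str], max_fraction: float, seed: int = 0) -> list[str]:
    """Keep all non-apologetic samples; randomly keep apologetic ones until they
    make up at most max_fraction of the result."""
    if not 0.0 <= max_fraction < 1.0:
        raise ValueError("max_fraction must be in [0, 1)")
    flagged = [s for s in samples if APOLOGY.search(s)]
    clean = [s for s in samples if not APOLOGY.search(s)]
    # Largest k with k / (len(clean) + k) <= max_fraction.
    cap = int(max_fraction * len(clean) / (1.0 - max_fraction))
    kept = random.Random(seed).sample(flagged, min(cap, len(flagged)))
    return clean + kept


data = ["ok 1", "ok 2", "ok 3"] + [f"I'm sorry, case {i}" for i in range(5)]
out = rebalance(data, max_fraction=0.5)
print(len(out), sum(1 for s in out if APOLOGY.search(s)))  # 6 3
```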

Data pruning: We also apply a collection of model-based techniques to remove low-quality training samples and improve overall model performance:

- Topic classification: We first fine-tune Llama 3 8B into a topic classifier and run inference over all data to classify it into both coarse-grained buckets ("mathematical reasoning") and fine-grained buckets ("geometry and trigonometry").

- Quality scoring: We use both reward model and Llama-based signals to obtain a quality score for each sample. For the RM-based score, we consider data in the top quartile of RM scores to be high quality. For the Llama-based score, we prompt Llama 3 checkpoints to rate general English data on three criteria (accuracy, instruction following, and tone/presentation) and coding data on two criteria (bug identification and user intent), and we consider samples that receive the maximum score to be high quality. The RM-based and Llama-based scores disagree frequently, and we find that combining these signals yields the best recall on our internal test set. Ultimately, we select examples that are marked as high quality by either the RM-based or the Llama-based filter.

- Difficulty scoring: Because we are also interested in prioritizing examples that are more complex for the model, we score data with two difficulty measures: Instag (Lu et al., 2023) and Llama-based scoring. For Instag, we prompt Llama 3 70B to perform intent tagging of SFT prompts, where more intents imply higher complexity. We also prompt Llama 3 to rate the difficulty of dialogs on a three-point scale (Liu et al., 2024c).

- Semantic deduplication: Finally, we perform semantic deduplication (Abbas et al., 2023; Liu et al., 2024c). We first cluster complete dialogs using RoBERTa (Liu et al., 2019b) and, within each cluster, sort them by quality score × difficulty score. We then perform greedy selection by iterating over all sorted examples and keeping only those whose maximum cosine similarity to the examples seen so far in the cluster is below a threshold.
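A minimal sketch of two of the pruning rules above: the union of the two quality filters, and greedy semantic deduplication within one cluster. Embeddings are plain vectors standing in for RoBERTa dialog embeddings. Following the text literally, each candidate is compared with every example seen so far in the cluster, including discarded ones. The threshold, field names, and helper names are hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; assumes neither vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def is_high_quality(rm_score: float, rm_top_quartile_cutoff: float,
                    llama_score: int, llama_max_score: int) -> bool:
    """Union of the two filters: either signal marks the sample as high quality."""
    return rm_score >= rm_top_quartile_cutoff or llama_score == llama_max_score


def semantic_dedup(cluster: list[dict], threshold: float) -> list[dict]:
    """Greedy dedup within one cluster, best (quality x difficulty) first."""
    ranked = sorted(cluster, key=lambda ex: ex["quality"] * ex["difficulty"], reverse=True)
    kept, seen = [], []
    for ex in ranked:
        if all(cosine(ex["embedding"], prev) < threshold for prev in seen):
            kept.append(ex)
        seen.append(ex["embedding"])  # "seen so far" includes discarded examples
    return kept


cluster = [
    {"id": "a", "embedding": [1.0, 0.0], "quality": 1.0, "difficulty": 1.0},
    {"id": "b", "embedding": [0.99, 0.1], "quality": 0.5, "difficulty": 1.0},
    {"id": "c", "embedding": [0.0, 1.0], "quality": 0.9, "difficulty": 0.9},
]
print([ex["id"] for ex in semantic_dedup(cluster, threshold=0.9)])  # ['a', 'c']
```

Sorting by quality × difficulty before the greedy pass means that, among near-duplicates, the highest-value example is the one kept.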

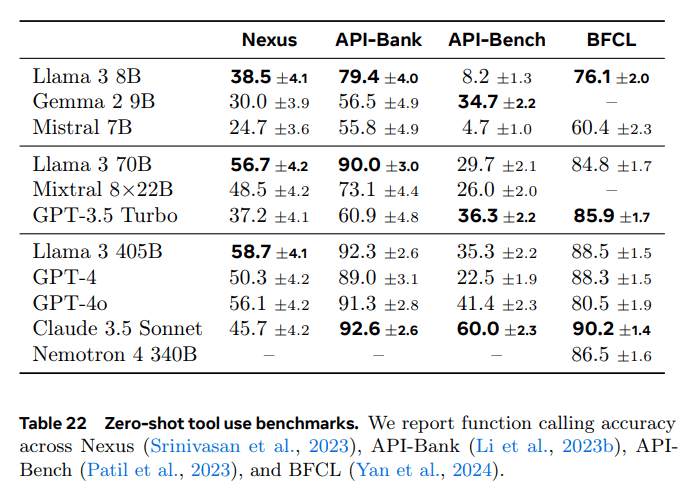

4.3 Capabilities

We highlight special efforts to improve performance on specific capabilities: code (Section 4.3.1), multilinguality (Section 4.3.2), math and reasoning (Section 4.3.3), long context (Section 4.3.4), tool use (Section 4.3.5), factuality (Section 4.3.6), and steerability (Section 4.3.7).

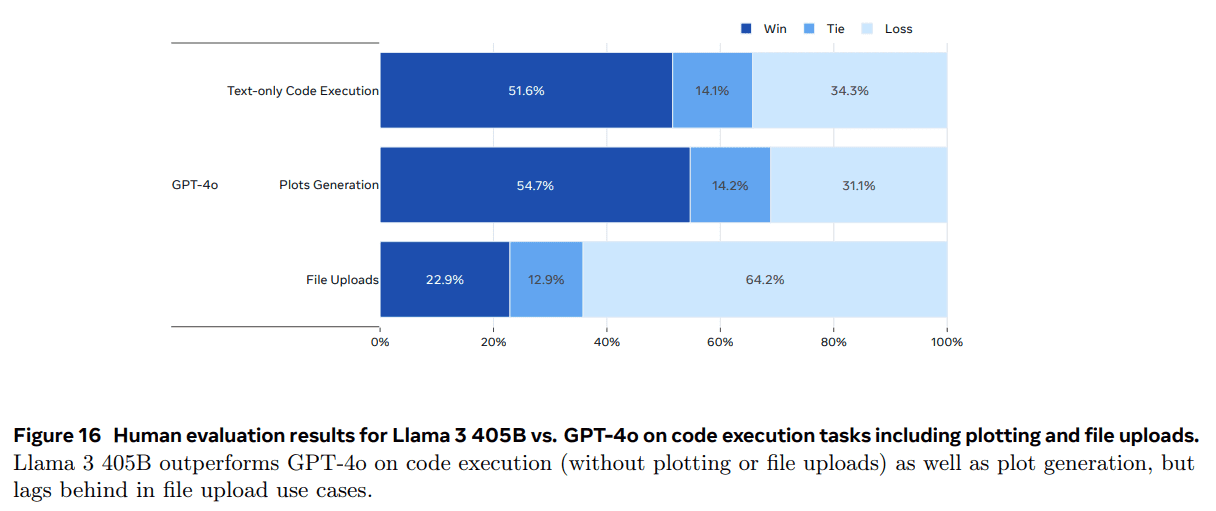

4.3.1 Code

LLMs for code have received significant attention since the release of Copilot and Codex (Chen et al., 2021). Developers now widely use these models to generate code snippets, debug, automate tasks, and improve code quality. For Llama 3, we aim to improve and evaluate code generation, documentation, debugging, and review capabilities for the following high-priority programming languages: Python, Java, JavaScript, C/C++, TypeScript, Rust, PHP, HTML/CSS, SQL, and bash/shell. Here, we present our work on improving these coding capabilities by training a code expert, generating synthetic data for SFT, improving formatting through system prompt steering, and creating quality filters to remove bad samples from our training data.

Expert training. We train a code expert, which we use to collect high-quality human annotations for code throughout subsequent rounds of post-training. We do this by branching off the main pre-training run and continuing pre-training on a 1T-token mix consisting mostly (>85%) of code data. Continued pre-training on domain-specific data has been shown to be effective for improving performance in a specific domain (Gururangan et al., 2020). We follow a recipe similar to that of CodeLlama (Rozière et al., 2023). For the last several thousand steps of training, we perform long-context fine-tuning (LCFT) on a high-quality mix of repository-level code data, extending the expert's context length to 16K tokens. Finally, we follow the post-training modeling recipe described in Section 4.1 to align the model, but with SFT and DPO data mixes that primarily target code. This model is also used for rejection sampling (Section 4.2.2) on coding prompts.

Synthetic data generation. During development, we identified key issues in code generation, including difficulty following instructions, code syntax errors, incorrect code generation, and difficulty fixing bugs. While intensive human annotation could in theory resolve these issues, synthetic data generation offers a complementary approach at lower cost and larger scale, unconstrained by the annotators' level of expertise.

We therefore use Llama 3 and the code expert to generate a large number of synthetic SFT dialogs. We describe three high-level approaches for generating synthetic code data. In total, we use over 2.7M synthetic examples during SFT.

1. Synthetic data generation: execution feedback. The 8B and 70B models show significant performance improvements when trained on data generated by a larger, more capable model. However, our initial experiments showed that training Llama 3 405B on its own generated data does not help (and can even degrade performance). To address this limitation, we introduced execution feedback as a source of truth, enabling the model to learn from its mistakes and stay on track. In particular, we generate a dataset of approximately one million synthetic coding dialogs using the following process:

- Problem description generation: First, we generate a large collection of programming problem descriptions that span a diverse range of topics, including those in the long tail. To achieve this diversity, we sample random code snippets from various sources and prompt the model to generate programming problems inspired by these examples. This allows us to tap into a wide range of topics and create a comprehensive set of problem descriptions (Wei et al., 2024).

- Solution generation: We then prompt Llama 3 to solve each problem in a given programming language. We observed that adding general rules of good programming to the prompt improves the quality of the generated solutions. We also found it helpful to ask the model to explain its thought process in comments.

- Correctness analysis: After generating a solution, it is crucial to recognize that its correctness is not guaranteed, and that including incorrect solutions in the fine-tuning dataset could harm the model's quality. While we cannot ensure complete correctness, we develop methods to approximate it. To this end, we extract the source code from the generated solution and apply a combination of static and dynamic analysis techniques to test its correctness, including:

- Static analysis: We run all generated code through a parser and a linter to ensure syntactic correctness, catching errors such as syntax errors, use of uninitialized variables or non-imported functions, code style issues, typing errors, and others.

- Unit test generation and execution: For each problem and solution, we prompt the model to generate unit tests and execute them with the solution in a containerized environment, catching runtime execution errors and some semantic errors.

- Error feedback and iterative self-correction: When a solution fails at any step, we prompt the model to revise it. The prompt includes the original problem description, the faulty solution, and feedback from the parser, linter, or tester (stdout, stderr, and return code). After a unit test execution failure, the model can either fix the code to pass the existing tests or modify its unit tests to accommodate the generated code. Only dialogs that pass all checks are included in the final dataset used for supervised fine-tuning (SFT). Notably, we observed that about 20% of solutions were initially incorrect but self-corrected, indicating that the model learned from the execution feedback and improved its performance.

- Fine-tuning and iterative improvement: The fine-tuning process is conducted over multiple rounds, with each round building on the previous one. After each round, the improved model generates higher-quality synthetic data for the next round. This iterative process allows for progressive refinement of the model's performance.
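The execution-feedback loop above can be sketched as follows. This is a minimal sketch under several assumptions: Python's `ast.parse` stands in for the parser and linter, a subprocess stands in for the containerized test environment, and `revise` is a hypothetical callable wrapping the model's self-correction prompt. Unlike the process described above, the sketch only revises the solution, never the unit tests.

```python
import ast
import subprocess
import sys
from typing import Callable, Optional

# Hypothetical reviser signature: (problem, solution, feedback) -> new solution.
Reviser = Callable[[str, str, str], str]


def check_solution(solution: str, tests: str, timeout: float = 10.0) -> Optional[str]:
    """Return None if the solution parses and passes its tests, else feedback text."""
    # Static analysis stand-in: only a syntax check via the `ast` parser.
    try:
        ast.parse(solution)
    except SyntaxError as exc:
        return f"SyntaxError: {exc}"
    # Dynamic analysis: run solution + unit tests in a fresh interpreter
    # (a subprocess here, standing in for a containerized sandbox).
    try:
        result = subprocess.run(
            [sys.executable, "-c", solution + "\n\n" + tests],
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return f"Timed out after {timeout} seconds"
    if result.returncode == 0:
        return None
    return (
        f"stdout:\n{result.stdout}\n"
        f"stderr:\n{result.stderr}\n"
        f"return code: {result.returncode}"
    )


def execution_feedback_loop(
    problem: str, solution: str, tests: str, revise: Reviser, max_revisions: int = 3
) -> Optional[str]:
    """Return a solution that passes all checks, or None to drop the dialog."""
    for attempt in range(max_revisions + 1):
        feedback = check_solution(solution, tests)
        if feedback is None:
            return solution
        if attempt < max_revisions:
            # Feed the problem, the failing code, and the tool output back to the model.
            solution = revise(problem, solution, feedback)
    return None
```

A dialog whose solution still fails after `max_revisions` attempts is dropped, mirroring the rule that only dialogs passing all checks enter the SFT set.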

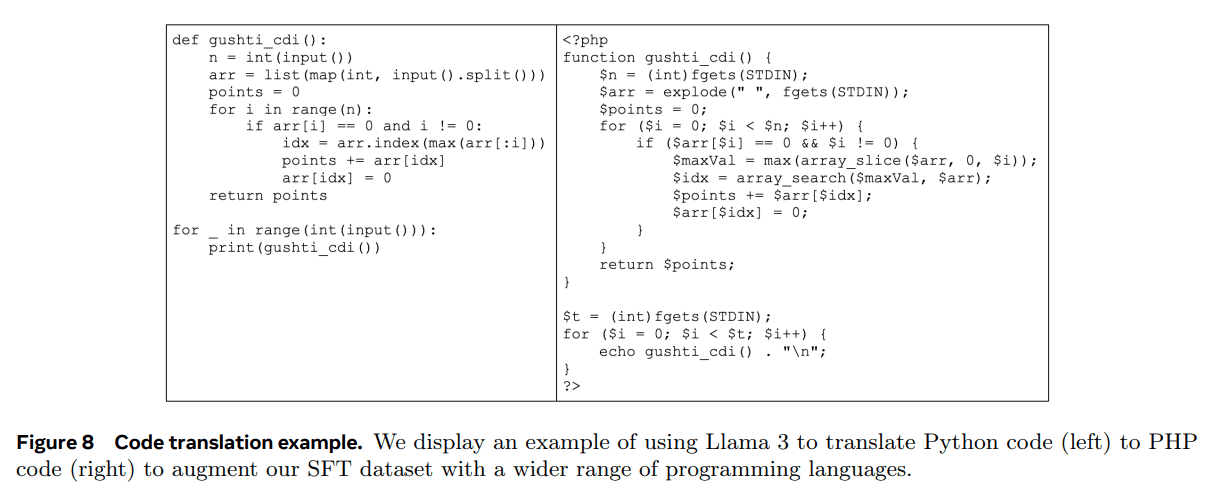

2. Synthetic data generation: programming language translation. We observe a performance gap between major programming languages (e.g., Python/C++) and less common ones (e.g., TypeScript/PHP). This is not surprising, since we have less training data for less common programming languages. To mitigate this, we supplement our existing data by translating data from common programming languages into less common ones (similar to Chen et al. (2023) in the context of reasoning). This is achieved by prompting Llama 3 and ensuring quality through syntax parsing, compilation, and execution. Figure 8 shows an example of synthetic PHP code translated from Python. This significantly improves performance on less common languages, as measured by the MultiPL-E (Cassano et al., 2023) benchmark.
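One way to check a translation by execution is differential testing: run the source program and its translation on the same inputs and keep the translation only if both exit cleanly with identical output. The paper states only that quality is ensured through parsing, compilation, and execution, so the output comparison, the `TOOLCHAINS` table, and the helper names below are assumptions. The PHP entry requires a `php` interpreter on `PATH`.

```python
import os
import subprocess
import sys
import tempfile
from typing import Dict, List, Optional, Sequence, Tuple

# Assumed interpreter commands and file suffixes; "php" must be on PATH to use it.
TOOLCHAINS: Dict[str, Tuple[List[str], str]] = {
    "python": ([sys.executable], ".py"),
    "php": (["php"], ".php"),
}


def run_program(
    language: str, code: str, stdin: str, timeout: float = 10.0
) -> Tuple[Optional[int], str]:
    """Write `code` to a temp file, run it with the language's interpreter, return (returncode, stdout)."""
    cmd, suffix = TOOLCHAINS[language]
    with tempfile.NamedTemporaryFile("w", suffix=suffix, delete=False) as handle:
        handle.write(code)
        path = handle.name
    try:
        proc = subprocess.run(
            cmd + [path], input=stdin, capture_output=True, text=True, timeout=timeout
        )
        return proc.returncode, proc.stdout
    except (subprocess.TimeoutExpired, FileNotFoundError):
        # Timeouts and missing interpreters both count as failures.
        return None, ""
    finally:
        os.remove(path)


def translation_agrees(
    src_lang: str, src_code: str, tgt_lang: str, tgt_code: str, inputs: Sequence[str]
) -> bool:
    """True if both programs exit with code 0 and print identical output on every input."""
    for stdin in inputs:
        src_rc, src_out = run_program(src_lang, src_code, stdin)
        tgt_rc, tgt_out = run_program(tgt_lang, tgt_code, stdin)
        if src_rc != 0 or tgt_rc != 0 or src_out != tgt_out:
            return False
    return True
```

For instance, `translation_agrees("python", py_code, "php", php_code, ["3\n", "7\n"])` would accept a PHP translation only if it prints the same output as the Python original for both inputs.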

3. Synthetic data generation: backtranslation. To improve certain coding capabilities (e.g., documentation and explanations) where execution feedback is less informative for determining quality, we employ an alternative multi-step approach. Using this procedure, we generated approximately 1.2 million synthetic dialogs related to code explanation, generation, documentation, and debugging. Beginning with code snippets in a variety of languages from our pre-training data:

- Generate: We prompt Llama 3 to generate data that represents our target capability (e.g., adding comments and docstrings to a code snippet, or asking the model to explain a piece of code).

- Backtranslate: We then prompt the model to "backtranslate" the synthetically generated data back to the original code (e.g., we prompt the model to generate code only from its documentation, or we ask the model to generate code only from its explanation).

- Filter: Using the original code as a reference, we prompt Llama 3 to determine the quality of the output (e.g., we ask the model how faithful the backtranslated code is to the original). We then use the generated examples with the highest self-verification scores in SFT.
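The generate, backtranslate, and filter steps can be sketched as a selection over candidate outputs. In the paper, Llama 3 itself judges how faithful the backtranslated code is; here `difflib.SequenceMatcher` similarity is swapped in as a cheap and clearly weaker stand-in, and `back_translate` is a hypothetical model call. Picking the single best candidate per snippet is also an assumption.

```python
import difflib
from typing import Callable, Iterable, Optional, Tuple


def faithfulness_score(original_code: str, back_translated_code: str) -> float:
    """Similarity in [0, 1]; a cheap stand-in for asking Llama 3 to judge faithfulness."""
    return difflib.SequenceMatcher(None, original_code, back_translated_code).ratio()


def select_by_back_translation(
    original_code: str,
    candidates: Iterable[str],
    back_translate: Callable[[str], str],
    min_score: float = 0.0,
) -> Optional[Tuple[str, float]]:
    """Return (candidate, score) for the candidate whose backtranslation best matches the original.

    `candidates` are generated artifacts (e.g. docstrings or explanations);
    `back_translate` is a hypothetical model call that regenerates code from one.
    Returns None if no candidate reaches `min_score`.
    """
    best: Optional[Tuple[str, float]] = None
    for candidate in candidates:
        score = faithfulness_score(original_code, back_translate(candidate))
        if best is None or score > best[1]:
            best = (candidate, score)
    if best is None or best[1] < min_score:
        return None
    return best
```

A string-similarity score rewards surface overlap rather than semantic equivalence, which is one reason a model-based judge is preferable in practice.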

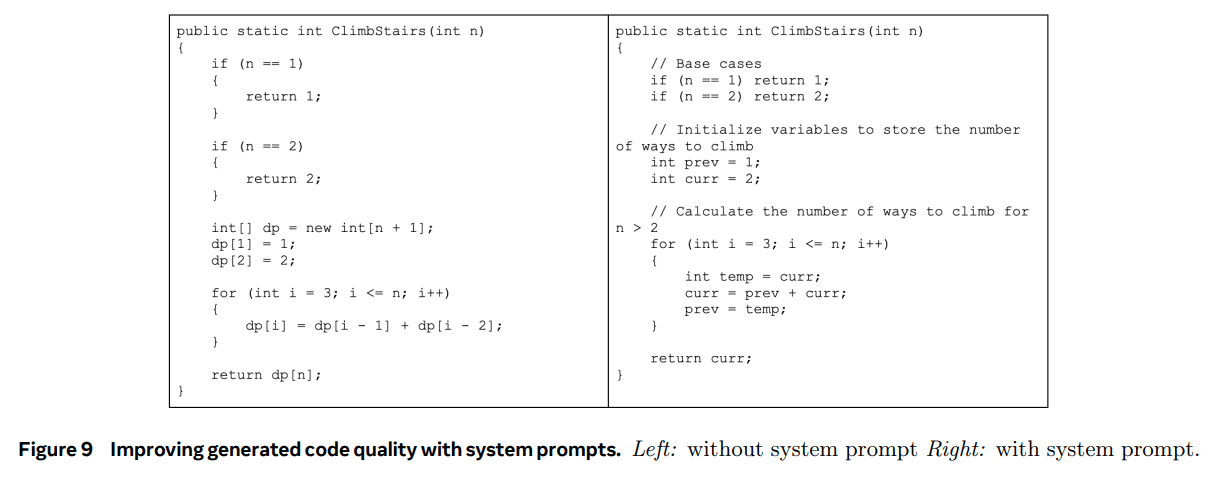

System prompt steering during rejection sampling. During rejection sampling, we use code-specific system prompts to improve the readability, documentation, thoroughness, and specificity of the code. Recall from Section 7 that this data is used to fine-tune the language model. Figure 9 shows an example of how the system prompt helps improve the quality of the generated code: it adds necessary comments, uses more informative variable names, saves memory, and so on.

Filtering training data with execution and model-as-judge signals. As described in Section 4.2.3, we occasionally encountered quality issues in our rejection-sampled data, such as code blocks containing bugs. Detecting these issues in rejection-sampled data is not as straightforward as it is for our synthetic code data, because rejection-sampled responses typically contain a mix of natural language and code, and the code may not always be executable. (For example, user prompts may explicitly ask for pseudocode or for edits to only a very small snippet of an executable program.) To address this, we use a "model-as-judge" approach, in which earlier versions of Llama 3 assess each sample and assign a binary (0/1) score on each of two criteria, code correctness and code style, for a maximum total of 2. We retain only samples that achieve a perfect score of 2. Initially, this stringent filtering led to a regression in downstream benchmark performance, primarily because it disproportionately removed samples with challenging prompts. To counteract this, we strategically revised the responses for some of the coding data categorized as most challenging until they met the Llama-based "model-as-judge" criteria. By refining these challenging problems, the coding data achieves a balance between quality and difficulty, resulting in optimal downstream performance.
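The model-as-judge filter reduces to summing two binary verdicts and keeping only perfect scores. The sketch below assumes a hypothetical `judge(sample, criterion)` callable that returns 0 or 1, and models the revision of challenging samples with a hypothetical `revise` callable and an illustrative cap on revision attempts.

```python
from typing import Callable, Dict, List

Sample = Dict[str, object]
# Hypothetical judge: (sample, criterion) -> 0 or 1.
Judge = Callable[[Sample, str], int]

CRITERIA = ("code_correctness", "code_style")


def rubric_score(sample: Sample, judge: Judge) -> int:
    """Sum of binary verdicts, so a perfect score equals len(CRITERIA) == 2."""
    total = 0
    for criterion in CRITERIA:
        verdict = judge(sample, criterion)
        if verdict not in (0, 1):
            raise ValueError(f"judge must return 0 or 1, got {verdict!r}")
        total += verdict
    return total


def filter_by_judge(samples: List[Sample], judge: Judge) -> List[Sample]:
    """Strict filter: keep only samples with a perfect rubric score."""
    return [s for s in samples if rubric_score(s, judge) == len(CRITERIA)]


def filter_with_revision(
    samples: List[Sample],
    judge: Judge,
    is_hard: Callable[[Sample], bool],
    revise: Callable[[Sample], Sample],
    max_revisions: int = 2,
) -> List[Sample]:
    """Keep perfect-score samples; give only hard samples a few revision attempts."""
    kept = []
    for sample in samples:
        candidate = sample
        attempts_left = max_revisions if is_hard(sample) else 0
        while rubric_score(candidate, judge) < len(CRITERIA) and attempts_left > 0:
            candidate = revise(candidate)
            attempts_left -= 1
        if rubric_score(candidate, judge) == len(CRITERIA):
            kept.append(candidate)
    return kept
```

Restricting revision to hard samples reflects the motivation above: the strict filter alone would skew the retained data toward easy prompts.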

4.3.2 Multilingualism

This section describes how we improved the multilingual capabilities of Llama 3, including: training an expert model specialized on substantially more multilingual data; sourcing and generating high-quality multilingual instruction-tuning data for German, French, Italian, Portuguese, Hindi, Spanish, and Thai; and tackling specific challenges of multilingual language steering to enhance the overall performance of our model.

Expert training. Our Llama 3 pre-training data mix contains significantly more English tokens than non-English tokens. To collect higher-quality human annotations in non-English languages, we train a multilingual expert by branching off the pre-training run and continuing pre-training on a data mix consisting of 90% multilingual tokens. We then post-train this expert following Section 4.1. This expert model is then used to collect higher-quality annotations in non-English languages until pre-training is fully complete.

Multilingual data collection. Our multilingual SFT data is derived primarily from the sources described below. The overall distribution is 2.4% human annotations, 44.2% data from other NLP tasks, 18.8% rejection-sampled data, and 34.6% translated reasoning data.

- Human annotations: We collect high-quality, manually annotated data from linguists and native speakers. These annotations mostly consist of open-ended prompts that represent real-world use cases.

- Data from other NLP tasks: To further augment the data, we use multilingual training data from other tasks and rewrite it into dialog format. For example, we use data from exams-qa (Hardalov et al., 2020) and Conic10k (Wu et al., 2023). To improve language alignment, we also use parallel texts from GlobalVoices (Prokopidis et al., 2016) and Wikimedia (Tiedemann, 2012). We use language identification (LID)-based filtering and Blaser 2.0 (Seamless Communication et al., 2023) to remove low-quality data. For parallel text data, instead of using the bitext pairs directly, we apply a multilingual template inspired by Wei et al. (2022a) to better simulate real-life conversations in translation and language learning scenarios.

- Rejection-sampled data: We apply rejection sampling to our human-annotated prompts to generate high-quality samples for fine-tuning, with few modifications compared to the process for English data:

- Generation: In the early rounds of post-training, we explored randomly choosing the temperature hyperparameter from the range 0.2 to 1 to diversify generations. With high temperatures, responses to multilingual prompts can become creative and inspiring, but they are also prone to unnecessary or unnatural code-switching. In the final stages of post-training, we use a constant value of 0.6 to balance this trade-off. Additionally, we use specialized system prompts to improve response format, structure, and general readability.

- Selection: Before reward-model-based selection, we implement multilingual-specific checks to ensure a high language-match rate between prompt and response (e.g., a romanized Hindi prompt should not be answered in the Hindi Devanagari script).

- Translated data: We try to avoid using machine-translated data to fine-tune the model in order to prevent translationese (Bizzoni et al., 2020; Muennighoff et al., 2023) as well as possible name bias (Wang et al., 2022a), gender bias (Savoldi et al., 2021), or cultural bias (Ji et al., 2023). Moreover, we aim to prevent the model from being exposed only to tasks rooted in English-speaking cultural contexts, which may not be representative of the linguistic and cultural diversity we aim to capture. We made one exception and translated our synthetic quantitative reasoning data (see Section 4.3.3 for details) into non-English languages to improve quantitative reasoning performance in those languages. Due to the simple nature of the language in these math problems, the translated samples were found to have few quality issues. We observed strong gains on MGSM (Shi et al., 2022) from adding this translated data.
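Two details of the rejection-sampling process lend themselves to a short sketch: the temperature schedule and the prompt/response language-match check. The sketch assumes the temperature is drawn uniformly from [0.2, 1.0] in early rounds, and approximates "language match" by comparing the dominant Unicode script of prompt and response, read from `unicodedata.name`. This catches a romanized Hindi prompt answered in Devanagari, but it would not distinguish two languages that share a script; the helper names are hypothetical.

```python
import random
import unicodedata
from collections import Counter
from typing import Optional


def dominant_script(text: str) -> Optional[str]:
    """Most frequent script among letters, read off the first word of each Unicode name.

    E.g. "LATIN SMALL LETTER A" -> "LATIN", "DEVANAGARI LETTER NA" -> "DEVANAGARI".
    Combining vowel signs are not letters by str.isalpha() and are skipped.
    """
    counts: Counter = Counter()
    for ch in text:
        if ch.isalpha():
            name = unicodedata.name(ch, "UNKNOWN")
            counts[name.split()[0]] += 1
    return counts.most_common(1)[0][0] if counts else None


def script_matches(prompt: str, response: str) -> bool:
    """Assumed proxy for a language-match check: same dominant script."""
    return dominant_script(prompt) == dominant_script(response)


def sampling_temperature(final_stage: bool, rng: random.Random) -> float:
    """Random temperature in [0.2, 1.0] early on; a constant 0.6 in the final stage."""
    return 0.6 if final_stage else rng.uniform(0.2, 1.0)
```

Responses that fail `script_matches` would be discarded before reward-model selection, in line with the Selection step above.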

4.3.3 Mathematics and reasoning

We define reasoning as the ability to perform a multi-step computation and arrive at the correct final answer.

Several challenges guided our approach to training models that excel at mathematical reasoning:

- Lack of prompts. As question complexity increases, the number of valid prompts or questions for supervised fine-tuning (SFT) decreases. This scarcity makes it difficult to create diverse and representative training datasets for teaching models various mathematical skills (Yu et al., 2023; Yue et al., 2023; Luo et al., 2023; Mitra et al., 2024; Shao et al., 2024; Yue et al., 2024b).

- Lack of ground-truth chains of thought. Effective reasoning requires step-by-step solutions to facilitate the reasoning process (Wei et al., 2022c). However, there is often a shortage of ground-truth chains of thought, which are essential for guiding the model on how to break a problem down step by step and reach the final answer (Zelikman et al., 2022).

- Incorrect intermediate steps. When using model-generated chains of thought, the intermediate steps may not always be correct (Cobbe et al., 2021; Uesato et al., 2022; Lightman et al., 2023; Wang et al., 2023a). This inaccuracy can lead to incorrect final answers and needs to be addressed.

- Teaching models to use external tools. Enabling models to use external tools, such as code interpreters, allows them to reason by interleaving code and text (Gao et al., 2023; Chen et al., 2022; Gou et al., 2023). This capability can significantly improve their problem-solving abilities.

- Discrepancy between training and inference. The way a model is fine-tuned during training often differs from the way it is used during inference. During inference, the fine-tuned model may interact with humans or other models, requiring it to improve its reasoning using feedback. Ensuring consistency between training and real-world usage is crucial for maintaining reasoning performance.

To address these challenges, we apply the following methodology:

- Addressing the lack of prompts. We source relevant pre-training data from mathematical contexts and convert it into a question-answer format, which can then be used for supervised fine-tuning. Additionally, we identify mathematical skills where the model underperforms and actively source prompts from humans to teach the model those skills. To facilitate this process, we create a taxonomy of mathematical skills (Didolkar et al., 2024) and ask humans to provide relevant prompts/questions accordingly.

- Augmenting training data with step-wise reasoning traces. We use Llama 3 to generate step-by-step solutions for a set of prompts. For each prompt, the model produces a variable number of generations. These generations are then filtered based on the correct answer (Li et al., 2024a). We also perform self-verification, where Llama 3 is used to verify whether a particular step-by-step solution is valid for a given question. This process improves the quality of the fine-tuning data by eliminating instances where the model does not produce valid reasoning traces.

- Filtering incorrect reasoning traces. We train outcome and step-wise reward models (Lightman et al., 2023; Wang et al., 2023a) to filter training data where the intermediate reasoning steps are incorrect. These reward models are used to eliminate data with invalid step-by-step reasoning, ensuring high-quality data for fine-tuning. For more challenging prompts, we use Monte Carlo Tree Search (MCTS) with learned step-wise reward models to generate valid reasoning traces, further enhancing the collection of high-quality reasoning data (Xie et al., 2024).

- Interleaving code and text reasoning. We prompt Llama 3 to solve reasoning problems through a combination of textual reasoning and associated Python code (Gou et al., 2023). Code execution is used as a feedback signal to eliminate cases where the reasoning chain is not valid, ensuring the correctness of the reasoning process.
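Two of the filters above, answer-based filtering and step-wise reward filtering, can be sketched as follows. The `Trace` structure, the exact-match answer comparison, the hypothetical `step_reward` callable, and the choice to reject a trace when its weakest step falls below a threshold are all assumptions made for illustration; MCTS and self-verification are not shown.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class Trace:
    steps: List[str]
    final_answer: str


# Hypothetical step-wise reward model: scores the latest step given all steps so far.
StepReward = Callable[[Sequence[str]], float]


def filter_by_answer(traces: List[Trace], reference_answer: str) -> List[Trace]:
    """Keep traces whose final answer matches the reference (exact string match here)."""
    return [t for t in traces if t.final_answer.strip() == reference_answer.strip()]


def filter_by_step_rewards(
    traces: List[Trace], step_reward: StepReward, threshold: float = 0.5
) -> List[Trace]:
    """Keep traces whose weakest step scores at least `threshold`."""
    kept = []
    for trace in traces:
        # Score each prefix so the reward model sees the step in context.
        scores = [step_reward(trace.steps[: i + 1]) for i in range(len(trace.steps))]
        if scores and min(scores) >= threshold:
            kept.append(trace)
    return kept
```

A trace that reaches the right answer through a wrong step passes the answer filter but is removed by the step-wise filter, which is the case the step-wise reward models are meant to catch.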