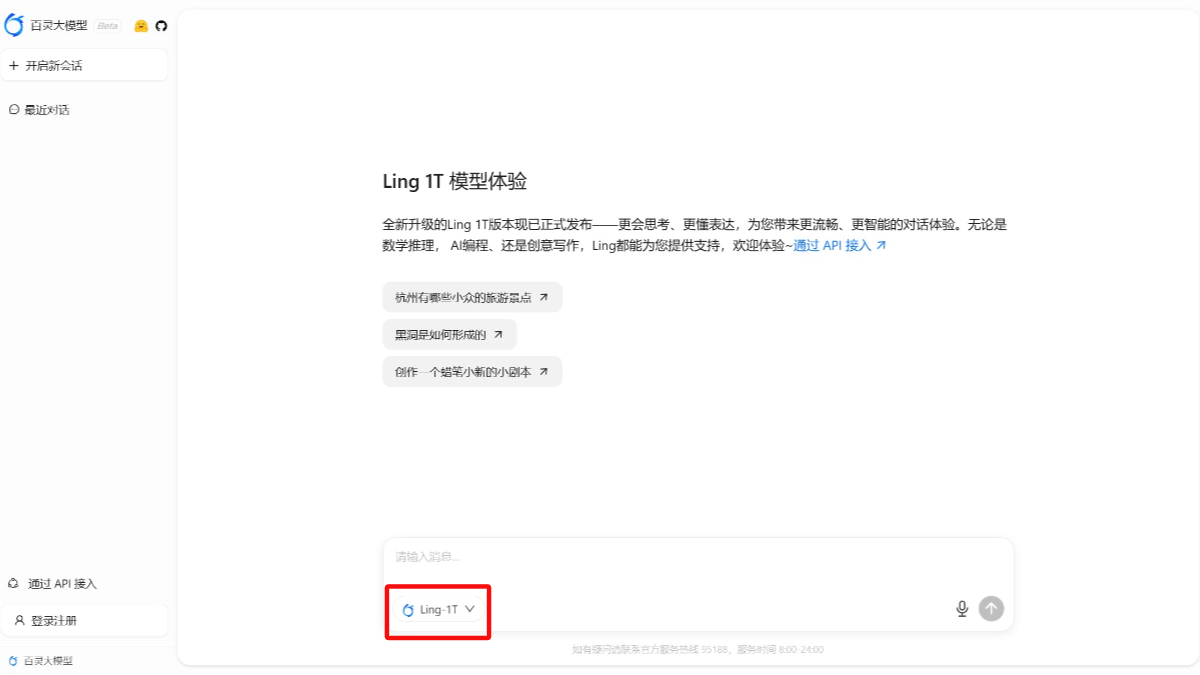

Ling-1T - Ant Group's open-source, trillion-parameter general-purpose language model

What is Ling-1T?

Ling-1T is a trillion-parameter general-purpose language model open-sourced by Ant Group and the flagship model of the Ling 2.0 series in Ant's Bailing large-model family. It uses an efficient Mixture-of-Experts (MoE) architecture, supports a 128K-token context window, and outperforms mainstream models such as GPT-5 on 7 benchmarks covering code generation, mathematical reasoning, and logic. Most notably, it reaches 70.42% accuracy on the AIME 25 competition-math benchmark, the top score among open-source models. Its main innovation is a Pareto-optimal balance between reasoning accuracy and efficiency, achieved through FP8 mixed-precision training and an evolutionary chain-of-thought strategy, which for the first time makes lightweight inference deployment of a trillion-parameter model practical.
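The article does not describe Ant Group's actual FP8 recipe. As a rough illustration only, the sketch below simulates the basic numeric step shared by FP8 mixed-precision schemes: a tensor is scaled so its largest magnitude fits the FP8 E4M3 range (4 exponent bits, 3 mantissa bits, largest finite value 448), rounded to the nearest representable value, and later rescaled, while the original values stay in higher precision. The function names `round_to_e4m3`, `quantize_per_tensor`, and `dequantize` are hypothetical, and per-tensor scaling is an assumption; real systems often use finer-grained scales.

```python
import math

# Largest finite magnitude in the OCP FP8 E4M3 format.
E4M3_MAX = 448.0
# Smallest normal (unbiased) exponent; smaller values are subnormal.
E4M3_MIN_EXP = -6
# Number of explicit mantissa bits in E4M3.
E4M3_MANTISSA_BITS = 3


def round_to_e4m3(x: float) -> float:
    """Round x to the nearest E4M3-representable value, saturating at +/-448."""
    if x == 0.0 or math.isnan(x):
        return x
    sign = -1.0 if x < 0 else 1.0
    mag = min(abs(x), E4M3_MAX)  # saturate instead of overflowing
    # frexp gives mag = m * 2**e with m in [0.5, 1), so floor(log2(mag)) == e - 1.
    _, e = math.frexp(mag)
    exp = max(e - 1, E4M3_MIN_EXP)  # subnormals share the smallest normal spacing
    step = 2.0 ** (exp - E4M3_MANTISSA_BITS)  # gap between adjacent E4M3 values
    q = round(mag / step) * step  # Python's round() is round-half-to-even
    return sign * min(q, E4M3_MAX)


def quantize_per_tensor(values: list[float]) -> tuple[list[float], float]:
    """Scale values so the largest magnitude maps to E4M3_MAX, then round to FP8."""
    amax = max((abs(v) for v in values), default=0.0)
    scale = E4M3_MAX / amax if amax > 0 else 1.0
    return [round_to_e4m3(v * scale) for v in values], scale


def dequantize(q: list[float], scale: float) -> list[float]:
    """Undo the per-tensor scale to recover approximate high-precision values."""
    return [v / scale for v in q]


if __name__ == "__main__":
    weights = [0.013, -0.27, 0.5, 1.9]
    q, s = quantize_per_tensor(weights)
    print("fp8 values:", q)
    print("recovered:", dequantize(q, s))
```

With 3 mantissa bits, each normal value is recovered to within about 6% relative error, which is why FP8 training schemes typically keep master weights and sensitive operations in higher precision.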

Key Features of Ling-1T

- Powerful reasoning: Achieves SOTA performance on a number of complex reasoning benchmarks. On the AIME 25 competition-math benchmark it reaches 70.42% accuracy while consuming fewer tokens than Gemini-2.5-Pro; it scores above 74 on both Omni-Math and the comprehensive UGMathBench, and reaches 87.45 on FinanceReasoning, demonstrating strong logical consistency and cross-domain reasoning ability.

- Excellent code generation and optimization: Achieves the highest score on LiveCodeBench, a competitive-programming benchmark, well ahead of DeepSeek; scores 94.69 on CodeForces, exceeding GPT-5; and can generate front-end code that stays compatible across multiple platforms and devices.

- Strong knowledge understanding: Leads or ties for the lead on several key datasets, including C-Eval, MMLU-Redux, MMLU-Pro, MMLU-Pro-STEM, and OlympiadBench. Overall it generally scores 1 to 3 percentage points higher than the flagship models of DeepSeek, Kimi, and GPT-5, with some metrics approaching the ceiling set by Gemini-2.5-Pro.

- Efficient multi-turn dialogue: Performs well in agent reasoning and multi-turn dialogue scenarios, especially on open-ended tasks such as BFCL-v3 and Creative-Writing, balancing natural-language expression with coherent reasoning.

- Efficient, low-cost inference: The model follows a "large parameter reserve + small parameter activation" paradigm: it holds a trillion parameters in total, but only a small subset, on the order of tens of billions, is activated for each token. This markedly improves energy efficiency, keeps end-to-end inference latency under 200 milliseconds, and cuts energy consumption to 38% of comparable closed-source models, substantially lowering enterprise deployment costs.

- Long-context understanding: Supports context windows of up to 128K tokens, giving a "long memory" experience. It can take in book-length content in one pass without losing track of details, which is critical for long-document work in law, finance, and scientific research.

- Open-source collaboration and community support: The code and weights are fully open-source and published on Hugging Face and other mainstream platforms, making it easy for the community to explore the model and give feedback, which accelerates its iterative improvement.
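The "large parameter reserve + small parameter activation" paradigm in the feature list is the general idea behind sparse Mixture-of-Experts models: a router scores every expert for each token, but only the top-k experts actually run. The sketch below is a generic top-k softmax router in plain Python. The expert count, `top_k` value, toy experts, and the function names `softmax` and `moe_forward` are hypothetical; the article does not describe Ling-1T's actual gating function, expert count, or load-balancing scheme.

```python
from __future__ import annotations

import math
import random
from typing import Callable

Expert = Callable[[list[float]], list[float]]


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax over a list of router logits."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: list[float],
    router_weights: list[list[float]],
    experts: list[Expert],
    top_k: int,
) -> tuple[list[float], list[int]]:
    """Route one token vector x to its top_k experts and mix their outputs."""
    if not 1 <= top_k <= len(experts):
        raise ValueError("top_k must be between 1 and the number of experts")
    # Router: one logit per expert (dot product of the token with that expert's row).
    logits = [sum(xi * wi for xi, wi in zip(x, row)) for row in router_weights]
    probs = softmax(logits)
    # Keep only the top_k experts; the others are never evaluated (sparse activation).
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    out = [0.0] * len(x)
    for i in chosen:
        gate = probs[i] / norm  # renormalize gate weights over the selected experts
        y = experts[i](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen


if __name__ == "__main__":
    random.seed(0)
    num_experts, dim = 8, 4
    # Toy experts: expert i simply scales its input by (i + 1).
    experts = [lambda v, s=i + 1: [s * t for t in v] for i in range(num_experts)]
    router = [[random.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_experts)]
    out, chosen = moe_forward([0.5, -1.0, 0.25, 2.0], router, experts, top_k=2)
    print("experts used:", chosen, "of", num_experts)
```

Here only 2 of the 8 expert functions are evaluated per token, so compute per token scales with `top_k` rather than with the total number of experts; the same principle lets a trillion-parameter model run with a much smaller active footprint.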

Core Benefits of Ling-1T

- High reasoning accuracy: Leads on a number of complex reasoning benchmarks in areas such as competition and professional mathematics, demonstrating strong logical reasoning.

- Strong generalization: In cross-domain tasks such as agent tool calling, it achieves high accuracy with only a small amount of instruction tuning, showing excellent reasoning transfer and generalization.

- Strong instruction execution: Accurately understands complex natural-language instructions and autonomously completes composite tasks such as code generation and copywriting, meeting diverse needs.

- Highly efficient inference: Follows the "large parameter reserve + small parameter activation" paradigm, keeping end-to-end inference latency under 200 milliseconds with low energy consumption, which significantly reduces enterprise deployment costs.

What is Ling-1T's official website?

- Bailing large model chat: https://ling.tbox.cn/chat

- Hugging Face model repository: https://huggingface.co/inclusionAI/Ling-1T

Who Ling-1T is for

- Software developers: Ling-1T's code generation and optimization capabilities can quickly produce high-quality code snippets, improving development efficiency and reducing repetitive work.

- Researchers: Ling-1T's long-context understanding and reasoning capabilities provide strong support for working with complex scientific data, writing academic papers, and conducting interdisciplinary research.

- Financial professionals: In financial data analysis, risk assessment, and investment decision-making, Ling-1T's efficient reasoning and knowledge comprehension help process large volumes of financial information quickly and provide accurate analysis and recommendations.

- Educators: Ling-1T can be used for content generation, curriculum design, and planning student learning paths, helping teachers teach more efficiently.

- Content creators: Ling-1T generates high-quality content on demand, including copywriting, creative writing, and video scripts, to spark creativity.

- Business decision-makers: When developing business strategy, conducting market analysis, and doing strategic planning, Ling-1T can provide data-driven insights and recommendations to support decision-making.

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
