LightLLM: An Efficient, Lightweight Framework for Large Language Model Inference and Serving

General Introduction

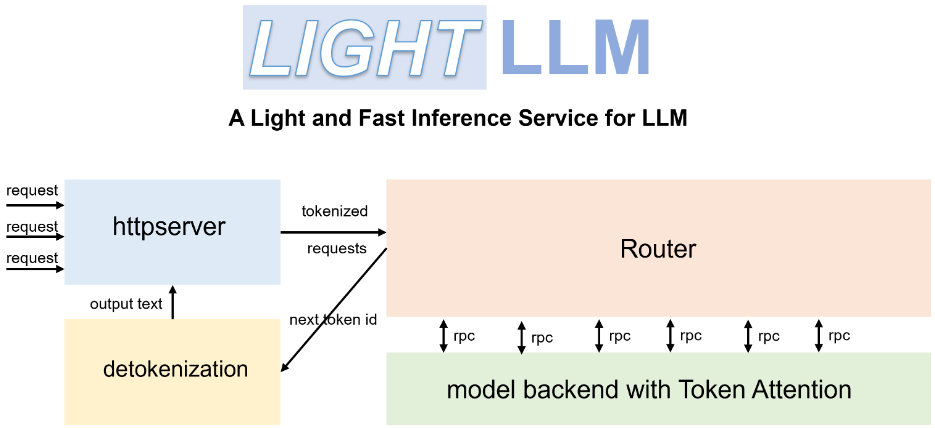

LightLLM is a Python-based framework for large language model (LLM) inference and serving, known for its lightweight design, easy scalability, and high performance. It builds on several well-known open-source implementations, including FasterTransformer, TGI, vLLM, and FlashAttention. Through techniques such as asynchronous collaboration, dynamic batching, and tensor parallelism, LightLLM substantially improves GPU utilization and inference speed across a wide range of models and application scenarios.

Function List

- Asynchronous collaboration: tokenization, model inference, and detokenization run asynchronously, improving GPU utilization.

- Nopad (padding-free) attention: supports attention without padding for multiple models, efficiently handling batches whose requests differ widely in length.

- Dynamic batching: requests are scheduled into batches dynamically.

- FlashAttention: uses FlashAttention to increase speed and reduce GPU memory footprint.

- Tensor parallelism: accelerates inference across multiple GPUs.

- Token Attention: a token-level KV-cache memory management mechanism with zero memory waste.

- High-performance router: works with Token Attention to optimize system throughput.

- Int8KV Cache: stores the KV cache in int8, nearly doubling token capacity.

- Broad model support: BLOOM, LLaMA, StarCoder, ChatGLM2, and more.
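Padding-free attention is easiest to understand as a change of data layout. Rather than padding every request to the length of the longest one, the sequences can be concatenated into one flat buffer plus cumulative offsets, in the style of the `cu_seqlens` argument used by FlashAttention's variable-length kernels. The plain-Python sketch below shows only that bookkeeping; `pack_sequences` and `padded_size` are hypothetical helpers, not LightLLM functions, and a real implementation works on GPU tensors.

```python
from typing import List, Tuple


def pack_sequences(seqs: List[List[int]]) -> Tuple[List[int], List[int]]:
    """Concatenate token-id sequences without padding.

    Returns the flat token buffer and cumulative offsets, so sequence i
    occupies flat[cu_seqlens[i]:cu_seqlens[i + 1]].
    """
    flat: List[int] = []
    cu_seqlens = [0]
    for seq in seqs:
        flat.extend(seq)
        cu_seqlens.append(cu_seqlens[-1] + len(seq))
    return flat, cu_seqlens


def padded_size(seqs: List[List[int]]) -> int:
    """Slots a padded batch would need: batch size times the longest length."""
    return len(seqs) * max(len(s) for s in seqs)


if __name__ == "__main__":
    batch = [[1, 2], [3, 4, 5, 6, 7, 8], [9]]
    flat, cu = pack_sequences(batch)
    print(flat)  # [1, 2, 3, 4, 5, 6, 7, 8, 9]
    print(cu)    # [0, 2, 8, 9]
    print(len(flat), "packed slots vs", padded_size(batch), "padded slots")
```

For this batch of lengths 2, 6 and 1, the packed buffer needs 9 slots, whereas a padded batch would need 3 * 6 = 18, and the gap grows as request lengths diverge.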
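Token Attention's "zero memory waste" comes from allocating KV-cache storage one token at a time from a shared pool, instead of reserving a maximum-length region for each request up front. The sketch below models the pool as a list of free slot indices; `TokenKVPool`, `alloc` and `release` are hypothetical names chosen for illustration, and a real implementation would index into preallocated GPU buffers rather than Python lists.

```python
from typing import Dict, List


class TokenKVPool:
    """Hypothetical token-granular KV-cache slot allocator (illustration only).

    Each slot index stands for the K/V storage of exactly one token, so a
    request holds only as many slots as it currently has tokens.
    """

    def __init__(self, capacity: int) -> None:
        self.free: List[int] = list(range(capacity))  # unused slot indices
        self.owned: Dict[str, List[int]] = {}         # request id -> its slots

    def alloc(self, req_id: str, num_tokens: int) -> List[int]:
        """Take one slot per new token; raise if the pool is exhausted."""
        if num_tokens > len(self.free):
            raise MemoryError("KV cache pool exhausted")
        slots = [self.free.pop() for _ in range(num_tokens)]
        self.owned.setdefault(req_id, []).extend(slots)
        return slots

    def release(self, req_id: str) -> None:
        """Return every slot held by a finished request to the pool."""
        self.free.extend(self.owned.pop(req_id, []))


if __name__ == "__main__":
    pool = TokenKVPool(capacity=8)
    pool.alloc("a", 3)     # prompt of 3 tokens
    pool.alloc("b", 4)     # prompt of 4 tokens
    pool.alloc("a", 1)     # one decoded token for request "a"
    print(len(pool.free))  # 0
    pool.release("a")
    print(len(pool.free))  # 4
```

Because a request grows by exactly one slot per decoded token, no memory is held for tokens it has not produced yet, and a finished request's slots are immediately reusable by others.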
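Tensor parallelism splits individual weight matrices across GPUs. In the common column-parallel scheme (popularized by Megatron-LM), each device holds a slice of a linear layer's output columns, computes its part of the product, and the partial outputs are then gathered. The sketch below simulates the devices with plain Python lists; `matmul`, `split_columns` and `column_parallel_linear` are hypothetical helpers, and the code is a generic illustration rather than LightLLM's implementation.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product: (n x k) @ (k x m) -> (n x m)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def split_columns(w: Matrix, parts: int) -> List[Matrix]:
    """Split a weight matrix column-wise into equal shards, one per device."""
    cols = len(w[0])
    assert cols % parts == 0, "columns must divide evenly across devices"
    step = cols // parts
    return [[row[p * step:(p + 1) * step] for row in w] for p in range(parts)]


def column_parallel_linear(x: Matrix, w: Matrix, tp: int) -> Matrix:
    """Each simulated device multiplies x by its shard; concatenating the
    partial outputs along the feature axis plays the role of an all-gather."""
    partials = [matmul(x, shard) for shard in split_columns(w, tp)]
    return [sum((p[i] for p in partials), []) for i in range(len(x))]


if __name__ == "__main__":
    x = [[1.0, 2.0]]
    w = [[1.0, 0.0, 2.0, 1.0],
         [0.0, 1.0, 1.0, 3.0]]
    print(column_parallel_linear(x, w, tp=2))  # [[1.0, 2.0, 4.0, 7.0]]
    print(matmul(x, w))                        # [[1.0, 2.0, 4.0, 7.0]]
```

Because each shard only touches its own output columns, the gathered result equals the unsplit product, while each device stores and computes only 1/tp of the layer.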

How to Use

Installation process

- Install LightLLM using Docker:

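```bash
docker pull modeltc/lightllm
# --gpus all exposes the host GPUs to the container (requires the NVIDIA Container Toolkit)
docker run -it --rm --gpus all modeltc/lightllm
```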

- Install the dependencies (for a source installation, run this from the root of the cloned repository):

```bash
pip install -r requirements.txt
```

Usage

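- Start the LightLLM service:

```bash
python -m lightllm.server
```

- Query the model (command-line example):

```bash
python -m lightllm.client --model llama --text "Hello, world!"
```

- Query the model (Python example):

```python
from lightllm import Client

# Create a client bound to the "llama" model served by LightLLM
client = Client(model="llama")

# Send a prompt and print the model's reply
response = client.query("Hello, world!")
print(response)
```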
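The server can also be queried over plain HTTP without any client library. The sketch below uses only the Python standard library and assumes a LightLLM API server listening on `localhost:8080` with a `/generate` route that accepts a JSON body of the form `{"inputs": ..., "parameters": {...}}`, as in the LightLLM README examples at the time of writing; the host, port, route and parameter names should be checked against the version you run. `build_request` and `generate` are hypothetical helper names.

```python
import json
import urllib.request
from typing import Any, Dict

# Assumption: host, port, route and payload fields follow LightLLM's README
# examples at the time of writing; verify them for your installed version.
SERVER_URL = "http://localhost:8080/generate"


def build_request(prompt: str, max_new_tokens: int = 64) -> urllib.request.Request:
    """Build a JSON POST request for one text-generation call."""
    payload: Dict[str, Any] = {
        "inputs": prompt,
        "parameters": {"max_new_tokens": max_new_tokens, "do_sample": False},
    }
    return urllib.request.Request(
        SERVER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, max_new_tokens: int = 64, timeout: float = 60.0) -> Any:
    """Send the request and decode the JSON reply (requires a running server)."""
    req = build_request(prompt, max_new_tokens)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With the server running, `generate("Hello, world!")` returns the server's decoded JSON response; keeping request construction separate from sending makes the payload easy to inspect or adapt.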

How the Main Features Work

- Asynchronous collaboration: LightLLM improves GPU utilization by running tokenization, model inference, and detokenization asynchronously. Users only need to start the service; the system handles these stages automatically.

- Nopad (padding-free) attention: when requests in a batch differ greatly in length, LightLLM computes attention without padding them to a common length, so no compute is spent on padding tokens. No extra configuration is required; the optimization is applied automatically.

- Dynamic batching: LightLLM schedules batches dynamically. Users can set batch parameters in the configuration file, and the system adapts its batching policy to the incoming requests.

- FlashAttention: by integrating FlashAttention, LightLLM speeds up inference and reduces GPU memory footprint. Users can enable this feature in the configuration file.

- Tensor parallelism: LightLLM supports tensor parallelism across multiple GPUs. Users set the number of GPUs and the related parallelism parameters in a configuration file, and the system distributes the work automatically.

- Token Attention: LightLLM manages KV-cache memory at the granularity of individual tokens, ensuring zero memory waste. No extra configuration is required; memory is managed automatically.

- High-performance router: LightLLM's router works together with Token Attention to optimize system throughput. Users can set routing parameters in the configuration file, and the system optimizes the routing policy automatically.

- Int8KV Cache: LightLLM can store the KV cache in int8, nearly doubling token capacity. Users can enable this feature in the configuration file, and the system adjusts the caching strategy accordingly.
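Asynchronous collaboration can be modeled as a three-stage pipeline connected by queues: while the inference stage works on one request, the tokenizer can already prepare the next one and the detokenizer can finish the previous one. The asyncio sketch below uses a toy character-level "tokenizer" and a fake "model" that shifts each token id by one; all names are hypothetical. It illustrates decoupling the stages through queues, not real parallel speedup; a production server would run the stages in separate processes or threads.

```python
import asyncio
from typing import List

STOP = None  # sentinel telling a stage that no more items will arrive


async def tokenize_stage(texts: List[str], out_q: asyncio.Queue) -> None:
    """CPU-side stage: turn text into token ids (toy: one id per character)."""
    for text in texts:
        await out_q.put([ord(c) for c in text])
    await out_q.put(STOP)


async def infer_stage(in_q: asyncio.Queue, out_q: asyncio.Queue) -> None:
    """Stand-in for the GPU stage: 'generates' by shifting each id by one."""
    while (ids := await in_q.get()) is not STOP:
        await asyncio.sleep(0)  # yield control, as waiting on a kernel would
        await out_q.put([i + 1 for i in ids])
    await out_q.put(STOP)


async def detokenize_stage(in_q: asyncio.Queue, results: List[str]) -> None:
    """CPU-side stage: turn generated ids back into text."""
    while (ids := await in_q.get()) is not STOP:
        results.append("".join(chr(i) for i in ids))


async def run_pipeline(texts: List[str]) -> List[str]:
    q1: asyncio.Queue = asyncio.Queue()
    q2: asyncio.Queue = asyncio.Queue()
    results: List[str] = []
    # The three stages run concurrently and communicate only through queues.
    await asyncio.gather(
        tokenize_stage(texts, q1),
        infer_stage(q1, q2),
        detokenize_stage(q2, results),
    )
    return results


if __name__ == "__main__":
    print(asyncio.run(run_pipeline(["abc", "HAL"])))  # ['bcd', 'IBM']
```

Because no stage calls another directly, a slow tokenizer or detokenizer only delays its own queue instead of stalling the inference loop.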
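Dynamic batching can be sketched as a scheduler loop: before every decode step, waiting requests are admitted into the running batch while their worst-case KV-cache needs fit a token budget, and finished requests leave the batch immediately so their space can be reused. In the sketch below, `Request`, `DynamicBatcher` and `max_total_tokens` are hypothetical names, and the FIFO, worst-case-reservation policy is a simplifying assumption; LightLLM's router uses its own admission logic, which may differ.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Request:
    req_id: str
    prompt_len: int
    max_new_tokens: int
    generated: int = 0

    def reserved_tokens(self) -> int:
        """Worst-case KV-cache tokens this request may need."""
        return self.prompt_len + self.max_new_tokens

    def finished(self) -> bool:
        return self.generated >= self.max_new_tokens


@dataclass
class DynamicBatcher:
    """Toy continuous-batching scheduler (illustrative, not LightLLM's router)."""
    max_total_tokens: int
    waiting: List[Request] = field(default_factory=list)
    running: List[Request] = field(default_factory=list)

    def used(self) -> int:
        return sum(r.reserved_tokens() for r in self.running)

    def admit(self) -> None:
        """Move waiting requests (FIFO) into the batch while the budget allows."""
        while (self.waiting and
               self.used() + self.waiting[0].reserved_tokens() <= self.max_total_tokens):
            self.running.append(self.waiting.pop(0))

    def step(self) -> List[str]:
        """One decode step: admit, generate one token each, evict finished requests."""
        self.admit()
        for r in self.running:
            r.generated += 1
        done = [r.req_id for r in self.running if r.finished()]
        self.running = [r for r in self.running if not r.finished()]
        return done


if __name__ == "__main__":
    b = DynamicBatcher(max_total_tokens=20)
    b.waiting = [Request("a", 5, 2), Request("b", 5, 5), Request("c", 8, 1)]
    for t in range(4):
        done = b.step()
        print(t, [r.req_id for r in b.running], done)
    # 0 ['a', 'b'] []
    # 1 ['b'] ['a']
    # 2 ['b'] ['c']
    # 3 ['b'] []
```

Request `c` cannot join at first because the 20-token budget is taken by `a` and `b`; it is admitted at step 2, as soon as `a` finishes and frees its reservation, without waiting for the whole batch to drain.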
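The "almost doubling" of token capacity follows from simple arithmetic: int8 uses 1 byte per element versus 2 bytes for fp16, and the scale factors needed for dequantization take back a little of the saved space. The sketch below uses symmetric absmax quantization with one scale per vector, a common scheme assumed here for illustration; LightLLM's exact quantization layout may differ. The model shape (32 layers, 32 heads, head dimension 128, matching LLaMA-7B) and the one-fp16-scale-per-head assumption are ours.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric absmax quantization of one K or V vector: int8 codes plus one scale."""
    amax = max((abs(v) for v in values), default=0.0)
    scale = amax / 127.0 if amax > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes: List[int], scale: float) -> List[float]:
    """Recover approximate floats before they are used in attention."""
    return [c * scale for c in codes]


def kv_bytes_per_token(layers: int, heads: int, head_dim: int, bytes_per_elem: int) -> int:
    """K and V for every layer and head: 2 * layers * heads * head_dim elements."""
    return 2 * layers * heads * head_dim * bytes_per_elem


if __name__ == "__main__":
    fp16 = kv_bytes_per_token(32, 32, 128, 2)
    int8 = kv_bytes_per_token(32, 32, 128, 1)
    scales = 2 * 32 * 32 * 2  # assumed: one fp16 scale per head, per layer, for K and V
    print(fp16, int8, round(fp16 / (int8 + scales), 2))  # 524288 262144 1.97
```

Under these assumptions each token needs 512 KiB of KV cache in fp16 but about 260 KiB in int8 including scales, so the same memory holds roughly 1.97 times as many tokens: close to, but just under, double.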

Supported Models

LightLLM supports a variety of models, including but not limited to:

- BLOOM

- LLaMA

- StarCoder

- ChatGLM2

- InternLM

- Qwen-VL

- LLaVA

- StableLM

- MiniCPM

- Phi-3

- CohereForAI

- DeepSeek-V2

Users can select the appropriate model according to their needs and set it accordingly in the configuration file.

© Copyright Notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
