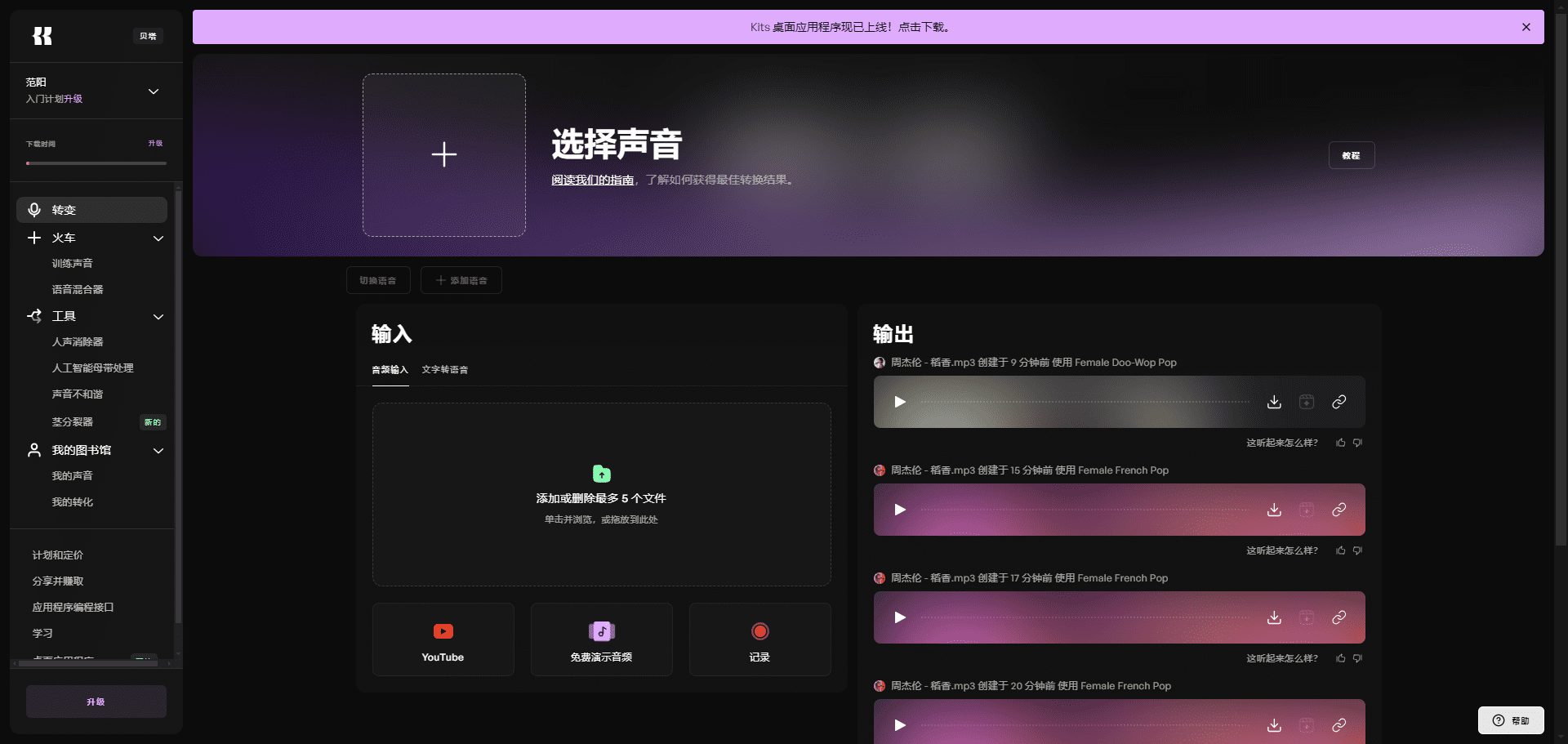

Lepton AI: Cloud-native AI platform offering free, rate-limited GPU deployment of AI models

General Introduction

Lepton AI is a leading cloud-native AI platform dedicated to providing developers and enterprises with efficient, reliable, and easy-to-use AI solutions. With its high-performance computing resources and user-friendly interface, Lepton AI helps users quickly deploy and scale complex AI projects.

Feature List

- Efficient computing: Provides high-performance computing resources to support training and inference of large-scale AI models.

- Cloud Native Experience: Seamlessly integrates with cloud computing technology to simplify the process of developing and deploying AI applications.

- GPU Infrastructure: Provides top-notch GPU hardware support to ensure efficient execution of AI tasks.

- Rapid deployment: Supports native Python development for rapid deployment of models without having to learn containers or Kubernetes.

- Flexible API: Provides a simple and flexible API that facilitates the calling of AI models in any application.

- Horizontal scaling: Scales out across additional instances to handle large-scale workloads.

Usage Guide

Installation and use

- Register for an account: Visit the Lepton AI website, click the "Register" button, and fill in the required information to complete the registration.

- Create a project: After logging in, go to "Control Panel", click "Create Project", and fill in the name and description of the project.

- Select computing resources: In "Project Settings", select the required computing resources, including the GPU type and the number of GPUs.

- Upload the model: In "Model Management", click "Upload Model" and select the local model file to upload.

- Configure the environment: In "Environment Configuration", select the required runtime environment and dependency packages.

- Deploy the model: Click "Deploy". The system automatically deploys the model and generates an API endpoint for it.

- Call the API: In "API Documentation", review the documentation for the generated API endpoint, then use that API to call the model for inference.
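The last step, calling the deployed model over HTTP, might look roughly like the sketch below. It uses only the Python standard library. The endpoint URL, the bearer-token header, and the `{"inputs": ...}` payload shape are all assumptions made for illustration; the real values come from the API documentation generated for your deployment.

```python
import json
import urllib.request


def build_inference_request(endpoint_url: str, api_token: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a deployed model.

    Assumption: the endpoint accepts a JSON body and a bearer token.
    Check the generated API documentation for the actual scheme.
    """
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_token}",
        },
        method="POST",
    )


def call_model(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Hypothetical placeholders: replace with the URL and token of your deployment.
request = build_inference_request(
    "https://example.invalid/run",
    "YOUR_API_TOKEN",
    {"inputs": "Hello"},
)
print(request.get_method(), request.full_url)
# result = call_model(request)  # uncomment once the URL and token are real
```

Separating request construction from sending keeps the request easy to inspect and test before any network traffic is generated.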

Workflow

- Model training: Train the model locally using Python and confirm that it performs as expected.

- Model testing: Test the model locally to verify its accuracy and stability.

- Model Upload: Upload the trained model to the Lepton AI platform for online deployment.

- Environment Configuration: Configure the runtime environment and dependency packages according to the model requirements to ensure that the model runs properly.

- API call: Use the generated API interface to call the model for inference in the application and get the results in real time.

- Monitoring and Maintenance: On the "Monitor" page, you can view the model's running status and performance indicators for timely maintenance and optimization.
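The local testing step in this workflow can be sketched as a small harness that checks accuracy on labeled samples and confirms that repeated calls return the same output. This is a minimal illustration, not part of the Lepton AI platform: `toy_model`, `evaluate`, and `is_stable` are hypothetical names, and the toy keyword classifier stands in for a real trained model.

```python
from typing import Any, Callable, Sequence, Tuple


def evaluate(predict: Callable[[Any], Any], samples: Sequence[Tuple[Any, Any]]) -> float:
    """Return the fraction of (input, expected) pairs the model gets right."""
    if not samples:
        raise ValueError("samples must not be empty")
    correct = sum(1 for x, expected in samples if predict(x) == expected)
    return correct / len(samples)


def is_stable(predict: Callable[[Any], Any], inputs: Sequence[Any], runs: int = 3) -> bool:
    """Return True if repeated calls give identical output for every input."""
    for x in inputs:
        first = predict(x)
        if any(predict(x) != first for _ in range(runs - 1)):
            return False
    return True


def toy_model(text: str) -> str:
    """Hypothetical stand-in for a trained model: a naive keyword classifier."""
    return "positive" if "good" in text.lower() else "negative"


samples = [
    ("Good service", "positive"),
    ("Bad latency", "negative"),
    ("Not good at all", "negative"),  # the keyword rule misclassifies this one
]

accuracy = evaluate(toy_model, samples)
stable = is_stable(toy_model, [x for x, _ in samples])
print(f"accuracy={accuracy:.2f} stable={stable}")
```

Running checks like these before uploading makes it easier to tell a model problem from a deployment or environment problem later on.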

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
