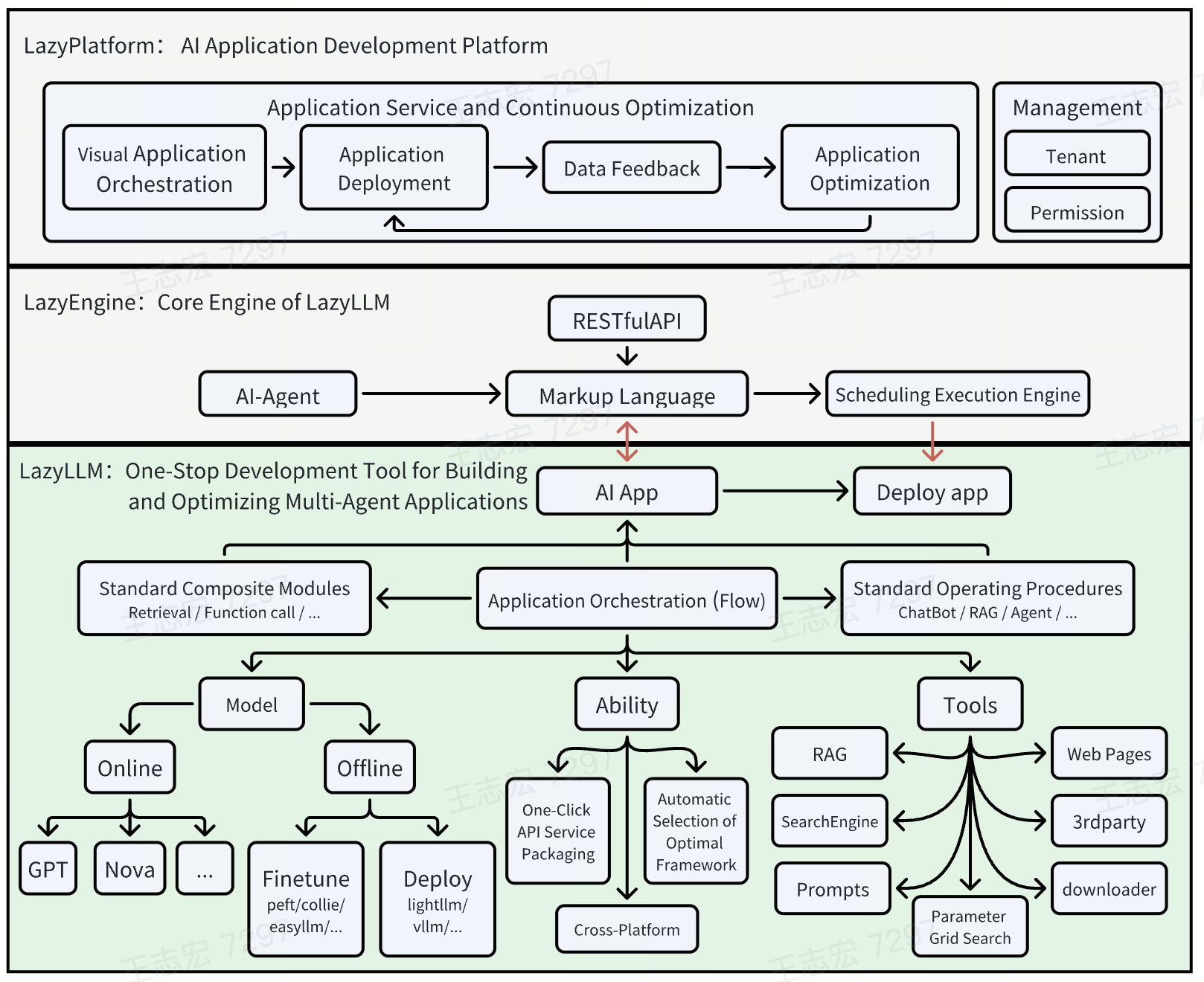

LazyLLM: SenseTime's open-source low-code development tool for building multi-agent applications

General Introduction

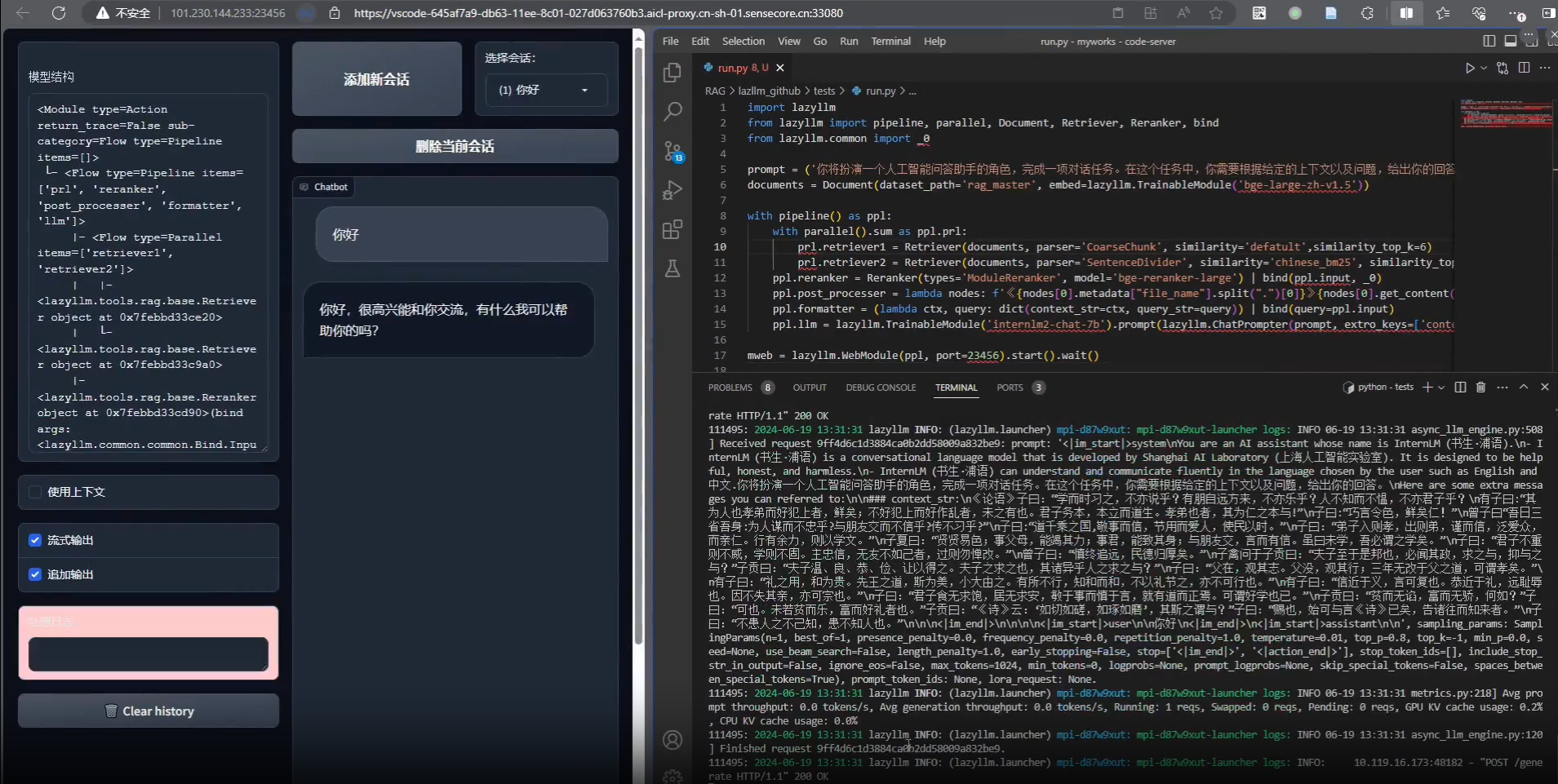

LazyLLM is an open-source tool developed by the LazyAGI team that focuses on simplifying the development of multi-agent large-model applications. Through one-click deployment and a lightweight gateway mechanism, it helps developers build complex AI applications quickly and spend less time on tedious engineering configuration. It supports both beginners and experienced developers: novices can get started with pre-built modules, while experts can use its flexible customization features for advanced development. The tool emphasizes efficiency and practicality, integrating a curated set of components so that production-ready applications can be built at the lowest possible cost. The project has over 1,100 stars on GitHub and an active community, and it is updated continuously.

Function List

- One-click deployment of complex applications: Supports the complete process from prototype validation to production release, with automated configuration of submodule services.

- Cross-platform compatibility: Runs on bare-metal servers, development machines, Slurm clusters, and public clouds with no code changes.

- Data flow management (Flow): Provides predefined flows such as Pipeline and Parallel to organize complex application logic.

- Modular components: Supports customization and extension, including integration of user algorithms or third-party tools.

- Lightweight gateway mechanism: Simplifies service startup and URL configuration for more efficient development.

- Multi-agent development: Rapidly build applications that contain multiple AI agents adapted to large-model tasks.

Usage Guide

Installation process

LazyLLM is a Python-based open-source project, and the installation process is simple and straightforward. Here are the detailed steps:

Environment preparation

- Check system requirements: Make sure Python 3.8 or above is installed on your device.

- Install Git: If you don't have Git installed, install it with a command-line tool (such as `apt-get install git` or `brew install git`).

- Create a virtual environment (optional but recommended):

      python -m venv lazyllm_env
      source lazyllm_env/bin/activate   # Linux/Mac
      lazyllm_env\Scripts\activate      # Windows
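The prerequisites listed above can also be checked from Python before installing anything. The following is a minimal sketch that uses only the standard library; the helper names `python_ok` and `git_available` are chosen for illustration and are not part of LazyLLM.

```python
import shutil
import sys

MIN_PYTHON = (3, 8)  # minimum version stated in the requirements above


def python_ok(version_info=sys.version_info, minimum=MIN_PYTHON):
    """Return True if the given interpreter version meets the minimum."""
    return tuple(version_info[:2]) >= minimum


def git_available():
    """Return True if a `git` executable can be found on PATH."""
    return shutil.which("git") is not None


if __name__ == "__main__":
    print("Python >= 3.8:", python_ok())
    print("git on PATH:  ", git_available())
```

If either check prints `False`, install or upgrade the missing tool before continuing.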

Download & Installation

- Clone the GitHub repository:

      git clone https://github.com/LazyAGI/LazyLLM.git
      cd LazyLLM

- Install dependencies:

  - Run the following command to install the required libraries:

        pip install -r requirements.txt

  - If you encounter a dependency conflict, try upgrading pip:

        pip install --upgrade pip

- Verify the installation:

  - Run the following command to confirm that the installation succeeded:

        python -m lazyllm --version

  - If a version number is returned (e.g. v0.5), the installation is complete.
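As a complementary check, you can ask Python whether the package can be found in the active environment, which helps catch the common mistake of installing into a different virtual environment. This is a small sketch using the standard library; `is_installed` is a helper name chosen for illustration, and `lazyllm` is assumed to be the import name, as in the examples in this article.

```python
import importlib.util


def is_installed(package_name):
    """Return True if the import system can locate the top-level package."""
    return importlib.util.find_spec(package_name) is not None


if __name__ == "__main__":
    # "lazyllm" is the import name used in this article's examples.
    print("lazyllm importable:", is_installed("lazyllm"))
```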

Optional: Docker Deployment

- LazyLLM supports one-click Docker image packaging:

  - Install Docker (refer to the official website: https://docs.docker.com/get-docker/).

  - Run the following in the project root directory:

        docker build -t lazyllm:latest .
        docker run -it lazyllm:latest

How to use

At the core of LazyLLM is the ability to quickly build AI applications through modularity and data flow management. Below is a detailed how-to guide for the main features:

Feature 1: Deploy complex applications with one click

- Procedure:

  - Prepare the application configuration file: Create `config.yaml` and define the modules and services. For example:

        modules:
          - name: llm
            type: language_model
            url: http://localhost:8000
          - name: embedding
            type: embedding_service
            url: http://localhost:8001

  - Start the services:

        python -m lazyllm deploy

  - Check status: Inspect the log output to confirm that all modules are functioning properly.

- Feature description: This feature automatically connects submodules through a lightweight gateway, eliminating the need to configure URLs manually and making it well suited to rapid prototyping.
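Before starting services, it can help to sanity-check the module list. The sketch below mirrors the `config.yaml` example above as a plain Python dict (so no YAML parser is needed) and checks that each module has the `name`, `type`, and `url` fields used in that example. The `validate_modules` helper and its rules are assumptions made for illustration; they are not LazyLLM's own validation.

```python
from urllib.parse import urlparse

# Same structure as the config.yaml example above, written as a Python dict.
CONFIG = {
    "modules": [
        {"name": "llm", "type": "language_model", "url": "http://localhost:8000"},
        {"name": "embedding", "type": "embedding_service", "url": "http://localhost:8001"},
    ]
}

REQUIRED_KEYS = ("name", "type", "url")  # fields used in the example config


def validate_modules(config):
    """Return a list of problems found; an empty list means no problems."""
    problems = []
    seen_names = set()
    for index, module in enumerate(config.get("modules", [])):
        missing = [key for key in REQUIRED_KEYS if key not in module]
        if missing:
            problems.append(f"module #{index}: missing {', '.join(missing)}")
            continue
        if module["name"] in seen_names:
            problems.append(f"module #{index}: duplicate name {module['name']!r}")
        seen_names.add(module["name"])
        parsed = urlparse(module["url"])
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            problems.append(f"module #{index}: invalid url {module['url']!r}")
    return problems


print(validate_modules(CONFIG))  # []
```

Catching a missing field or a malformed URL at this stage is cheaper than diagnosing it from service logs after deployment.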

Feature 2: Cross-platform compatibility

- Procedure:

  - Specify the platform: Add a parameter on the command line, for example:

        python -m lazyllm deploy --platform slurm

  - Switch environments: No code changes are needed; just change the value of the `--platform` parameter (e.g. `cloud` or `bare_metal`).

- Application scenario: Developers can migrate to the cloud after local testing without rewriting code, which reduces adaptation effort.
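The idea that application code stays the same while only a platform flag changes can be illustrated with a small dispatch table. The following is a generic sketch of that pattern using `argparse`, not LazyLLM's implementation: the platform names come from the examples above, while the launcher functions, `build_parser`, and `deploy` are hypothetical placeholders.

```python
import argparse


# Hypothetical launchers: each one stands in for a platform-specific backend.
def launch_slurm(app):
    return f"submit {app} as a Slurm job"


def launch_cloud(app):
    return f"deploy {app} to a public cloud"


def launch_bare_metal(app):
    return f"start {app} as a local process"


LAUNCHERS = {
    "slurm": launch_slurm,
    "cloud": launch_cloud,
    "bare_metal": launch_bare_metal,
}


def build_parser():
    """Build a parser whose --platform flag selects one of the launchers."""
    parser = argparse.ArgumentParser(description="Illustrative deploy command")
    parser.add_argument("--platform", choices=sorted(LAUNCHERS), default="bare_metal")
    return parser


def deploy(argv, app="my_app"):
    """Parse the arguments and hand the unchanged app to the chosen launcher."""
    args = build_parser().parse_args(argv)
    return LAUNCHERS[args.platform](app)


print(deploy(["--platform", "slurm"]))  # submit my_app as a Slurm job
print(deploy(["--platform", "cloud"]))  # deploy my_app to a public cloud
```

The application (`my_app` here) never refers to a platform; only the launcher selected by the flag differs.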

Feature 3: Data flow management (Flow)

- Procedure:

  - Define the data flow: Call a predefined Flow in a Python script, for example by building a Pipeline:

        from lazyllm import pipeline

        flow = pipeline(
            step1=lambda x: x.upper(),
            step2=lambda x: f"Result: {x}"
        )
        print(flow("hello"))  # Output: "Result: HELLO"

  - Run complex processes: Combine with Parallel or Diverter to run multiple tasks:

        from lazyllm import parallel

        par = parallel(
            task1=lambda x: x * 2,
            task2=lambda x: x + 3
        )
        print(par(5))  # Output: [10, 8]

- Feature description: Flow provides standardized interfaces that reduce repeated data conversion and support collaborative development across modules.
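To make the two flow shapes concrete, here is a plain-Python sketch of the semantics described above: a pipeline passes each step's output to the next step, while a parallel flow gives the same input to every task and collects the results. `make_pipeline` and `make_parallel` are illustrative names, not LazyLLM functions, and this sketch runs the tasks one after another rather than concurrently.

```python
def make_pipeline(*steps):
    """Chain steps so that each step receives the previous step's output."""
    def run(value):
        for step in steps:
            value = step(value)
        return value
    return run


def make_parallel(*tasks):
    """Give the same input to every task and collect the results in order."""
    def run(value):
        return [task(value) for task in tasks]
    return run


flow = make_pipeline(lambda x: x.upper(), lambda x: f"Result: {x}")
par = make_parallel(lambda x: x * 2, lambda x: x + 3)

print(flow("hello"))  # Result: HELLO
print(par(5))         # [10, 8]
```

Because both helpers return ordinary callables, a parallel flow can itself be used as a step inside a pipeline, which is what makes this style of composition convenient for larger applications.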

Feature 4: Modular component customization

- Procedure:

  - Register a custom function:

        from lazyllm import register

        @register
        def my_function(input_text):
            return f"Processed: {input_text}"

  - Integrate into an application: Call `my_function` in a Flow or in the deployment configuration.

- Advanced usage: Bash commands can also be registered, for hybrid script development.
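The registration pattern itself is easy to picture in plain Python: a decorator stores each function in a lookup table under its name, so other parts of an application can find it by name later. The sketch below illustrates that general pattern only; `REGISTRY`, this local `register`, and `call_registered` are defined here for illustration and do not reflect how LazyLLM implements registration.

```python
REGISTRY = {}  # maps a function's name to the function itself


def register(func):
    """Decorator that records `func` under its own name and returns it unchanged."""
    REGISTRY[func.__name__] = func
    return func


@register
def my_function(input_text):
    return f"Processed: {input_text}"


def call_registered(name, *args, **kwargs):
    """Look up a registered function by name and call it."""
    if name not in REGISTRY:
        raise KeyError(f"no function registered as {name!r}")
    return REGISTRY[name](*args, **kwargs)


print(call_registered("my_function", "hello"))  # Processed: hello
```

Looking functions up by name is what allows a configuration file or a flow definition to refer to user code without importing it directly.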

Tips for use

- Debugging: Add the `--verbose` parameter at run time to view detailed logs:

      python -m lazyllm deploy --verbose

- Community support: You can submit feedback through GitHub Issues, and the team will respond in a timely manner.

- Updates: Pull the latest code on a regular basis:

      git pull origin main

With these steps, you can quickly get started with LazyLLM and build large-model applications ranging from simple prototypes to production-grade systems.

© Copyright notes

The copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
