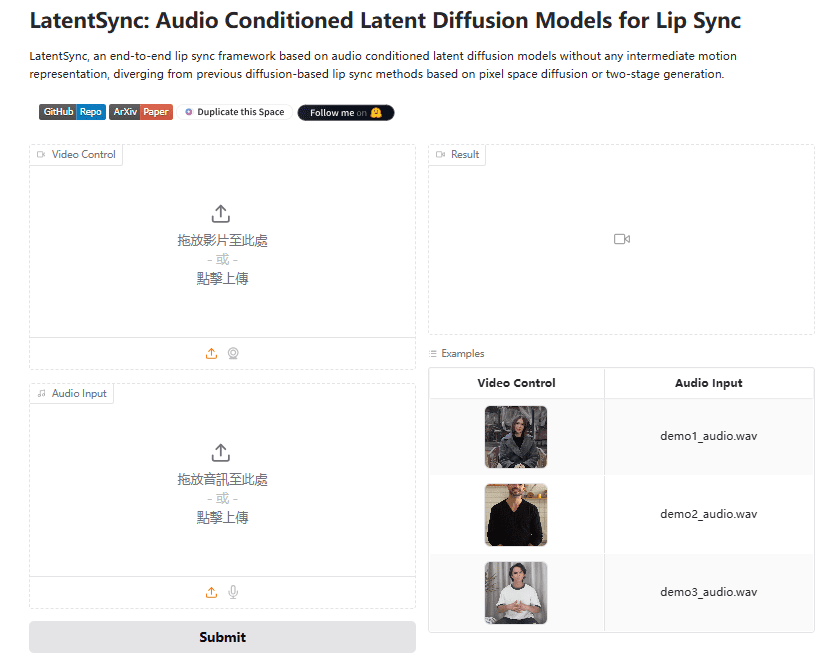

LatentSync: an open source tool for generating lip-synchronized video directly from audio

General Introduction

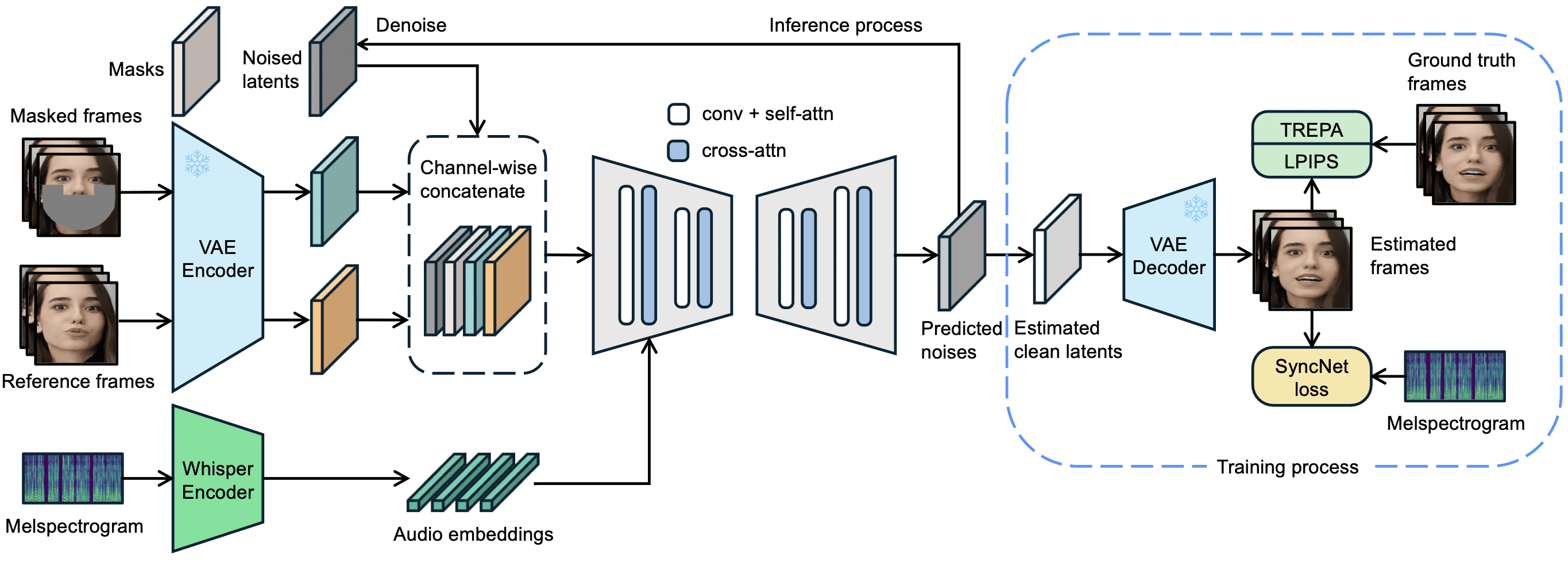

LatentSync is an open source tool developed by ByteDance and hosted on GitHub. It drives the lip movements of characters in a video directly from audio, so that the mouth shape matches the voice precisely. The project builds on Stable Diffusion's latent diffusion model: audio features are extracted with Whisper, and video frames are generated by a U-Net. Version 1.5 of LatentSync, released March 14, 2025, improves temporal consistency, adds support for Chinese video, and reduces the training video memory requirement to 20GB. To run the inference code and generate lip-synchronized video at 256x256 resolution, users only need a graphics card with 6.8GB of video memory. The tool is completely free and provides code and pre-trained models for tech enthusiasts and developers.

Online demo: https://huggingface.co/spaces/fffiloni/LatentSync

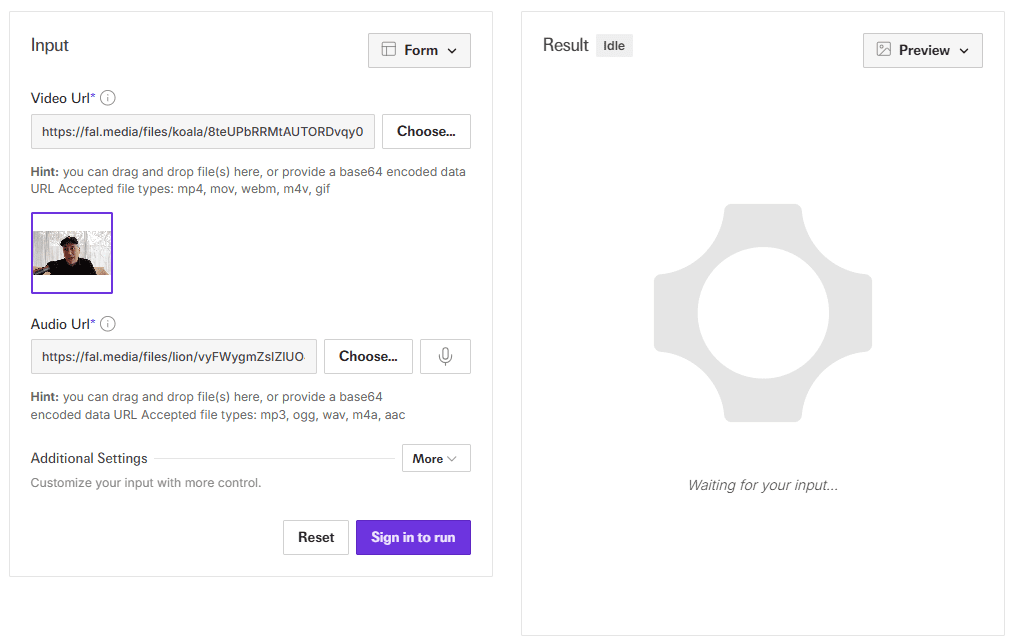

API demo address: https://fal.ai/models/fal-ai/latentsync

Function List

- Audio-driven lip synchronization: Input audio and video to automatically generate lip movements that match the sound.

- End-to-end video generation: No intermediate representation is required, and clear lip-synchronized video is output directly.

- Temporal consistency optimization: Reduces frame-to-frame jitter with TREPA and temporal layers.

- Chinese video support: Version 1.5 improves the handling of Chinese audio and video.

- Efficient training support: Multiple U-Net configurations are available, with memory requirements as low as 20GB.

- Data processing pipeline: Built-in tools clean the video data to ensure generation quality.

- Parameter tuning: The number of inference steps and the guidance scale can be adjusted to optimize the result.

Usage Guide

LatentSync runs locally and is aimed at users with a technical background. The installation, inference, and training processes are described in detail below.

Installation process

- Hardware and software requirements

- Requires an NVIDIA graphics card with at least 6.8GB of video memory for inference; 20GB or more is recommended for training (e.g. RTX 3090).

- Supports Linux or Windows (Windows requires manual script adjustment).

- Install Python 3.10, Git, and PyTorch with CUDA support.

- Download Code

Run in the terminal:

git clone https://github.com/bytedance/LatentSync.git

cd LatentSync

- Install dependencies

Execute the following command to install the required libraries:

pip install -r requirements.txt

Also install ffmpeg for audio and video processing:

sudo apt-get install ffmpeg # Linux

- Download the models

- Download latentsync_unet.pt and tiny.pt from Hugging Face.

- Put the files into the checkpoints/ directory with the following structure:

checkpoints/
├── latentsync_unet.pt
├── whisper/
│   └── tiny.pt

- If you train SyncNet, you will also need to download stable_syncnet.pt and other auxiliary models.

- Verify the environment

Run the test command:

python gradio_app.py --help

If no error is reported, the environment has been set up successfully.
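
Before launching the app, it can help to confirm that the files from the download step are where the tool expects them. The following is a minimal sketch, not part of LatentSync itself: it assumes the checkpoint layout described above and only checks that the two inference files exist and that an ffmpeg executable is on PATH. The function names are illustrative.

```python
import shutil
from pathlib import Path

# Files the article lists for inference, relative to the checkpoints/ directory.
REQUIRED_CHECKPOINTS = ("latentsync_unet.pt", "whisper/tiny.pt")


def missing_checkpoints(checkpoint_dir):
    """Return the required checkpoint files that are absent from checkpoint_dir."""
    root = Path(checkpoint_dir)
    return [name for name in REQUIRED_CHECKPOINTS if not (root / name).is_file()]


def ffmpeg_available():
    """Return True if an ffmpeg executable is found on PATH."""
    return shutil.which("ffmpeg") is not None


if __name__ == "__main__":
    # Run from the LatentSync repository root.
    missing = missing_checkpoints("checkpoints")
    print("missing checkpoints:", missing or "none")
    print("ffmpeg on PATH:", ffmpeg_available())
```

An empty list from missing_checkpoints means both inference checkpoints were found; it says nothing about whether the files are intact.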

Inference process

LatentSync offers two inference methods, both of which require 6.8GB of video memory.

Method 1: Gradio Interface

- Launch the Gradio application:

python gradio_app.py

- Open your browser and visit the prompted local address.

- Upload the video and audio files, click Run and wait for the results to be generated.

Method 2: Command Line

- Prepare the input files:

- Video (e.g. input.mp4): must contain clearly visible faces.

- Audio (e.g. audio.wav): 16000Hz recommended.

- Run the inference script:

python -m scripts.inference \
    --unet_config_path "configs/unet/stage2_efficient.yaml" \
    --inference_ckpt_path "checkpoints/latentsync_unet.pt" \
    --inference_steps 25 \
    --guidance_scale 2.0 \
    --video_path "input.mp4" \
    --audio_path "audio.wav" \
    --video_out_path "output.mp4"

- inference_steps: 20-50. Higher values give higher quality but slower generation.

- guidance_scale: 1.0-3.0. Higher values give more accurate lip shapes but may introduce slight distortion.

- Check output.mp4 to confirm the lip synchronization effect.
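
When inference is run repeatedly with different settings, the command above can be assembled in Python instead of being typed by hand. This is a minimal sketch: the helper name build_inference_command is hypothetical, the flags and paths are copied from the command above, and the range checks only enforce the article's recommended values (the script itself may accept others).

```python
import sys


def build_inference_command(video_path, audio_path, out_path,
                            inference_steps=25, guidance_scale=2.0):
    """Build the argument list for the command-line inference call shown above."""
    # These ranges are the article's recommendations, enforced here as a convenience.
    if not 20 <= inference_steps <= 50:
        raise ValueError("inference_steps should be between 20 and 50")
    if not 1.0 <= guidance_scale <= 3.0:
        raise ValueError("guidance_scale should be between 1.0 and 3.0")
    return [
        sys.executable, "-m", "scripts.inference",
        "--unet_config_path", "configs/unet/stage2_efficient.yaml",
        "--inference_ckpt_path", "checkpoints/latentsync_unet.pt",
        "--inference_steps", str(inference_steps),
        "--guidance_scale", str(guidance_scale),
        "--video_path", video_path,
        "--audio_path", audio_path,
        "--video_out_path", out_path,
    ]


if __name__ == "__main__":
    cmd = build_inference_command("input.mp4", "audio.wav", "output.mp4")
    # Print the command; pass the list to subprocess.run(cmd, check=True)
    # from the LatentSync repository root to actually start inference.
    print(" ".join(cmd))
```

Building the command as a list avoids shell quoting problems with file names that contain spaces.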

Input Preprocessing

- Adjusting the video frame rate to 25 FPS is recommended:

ffmpeg -i input.mp4 -r 25 resized.mp4

- The audio sample rate needs to be 16000Hz:

ffmpeg -i audio.mp3 -ar 16000 audio.wav
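
To find out whether an existing WAV file already meets the 16000Hz requirement, its header can be read with Python's standard wave module. This is a minimal sketch with illustrative function names. It handles only the uncompressed PCM WAV files that the wave module can open, and resample_command simply mirrors the ffmpeg audio command above.

```python
import wave


def wav_sample_rate(path):
    """Return the sample rate in Hz stored in a PCM WAV file header."""
    with wave.open(path, "rb") as wav_file:
        return wav_file.getframerate()


def needs_resampling(path, target_rate=16000):
    """Return True if the WAV file is not already at the target sample rate."""
    return wav_sample_rate(path) != target_rate


def resample_command(src, dst, sample_rate=16000):
    """Return an ffmpeg argument list equivalent to the audio command above."""
    return ["ffmpeg", "-i", src, "-ar", str(sample_rate), dst]
```

If needs_resampling returns True, the list from resample_command can be passed to subprocess.run to produce a converted copy.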

Data Processing Flow

To train the model, process the data first:

- Run the script:

./data_processing_pipeline.sh

- Change input_dir to your video directory.

- The process includes:

- Delete corrupted videos.

- Adjust video to 25 FPS and audio to 16000Hz.

- Split scenes using PySceneDetect.

- Cut the videos into 5-10 second segments.

- Detect faces with face-alignment and resize to 256x256.

- Filter out videos with a synchronization score below 3.

- Calculate the hyperIQA score and remove videos scoring below 40.

- The processed videos are saved in the high_visual_quality/ directory.
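
The filtering thresholds listed above can be summarized as a single keep-or-drop rule. The sketch below is only an illustration of those thresholds, not the pipeline's actual code: the ClipStats class and its field names are hypothetical, and it assumes the scores have already been computed by the respective tools.

```python
from dataclasses import dataclass


@dataclass
class ClipStats:
    """Hypothetical per-clip measurements; the field names are illustrative."""
    duration_s: float       # clip length in seconds
    sync_score: float       # synchronization score
    hyperiqa: float         # hyperIQA visual quality score


def keep_clip(clip, min_len=5.0, max_len=10.0, min_sync=3.0, min_quality=40.0):
    """Return True if the clip passes the length, sync, and quality thresholds."""
    return (min_len <= clip.duration_s <= max_len
            and clip.sync_score >= min_sync
            and clip.hyperiqa >= min_quality)


if __name__ == "__main__":
    clips = [ClipStats(7.0, 4.2, 55.0), ClipStats(7.0, 2.1, 70.0)]
    print([keep_clip(c) for c in clips])
```

Clips sitting exactly on a threshold (a score of 3 or 40) are kept here, which matches the wording "below" in the list above.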

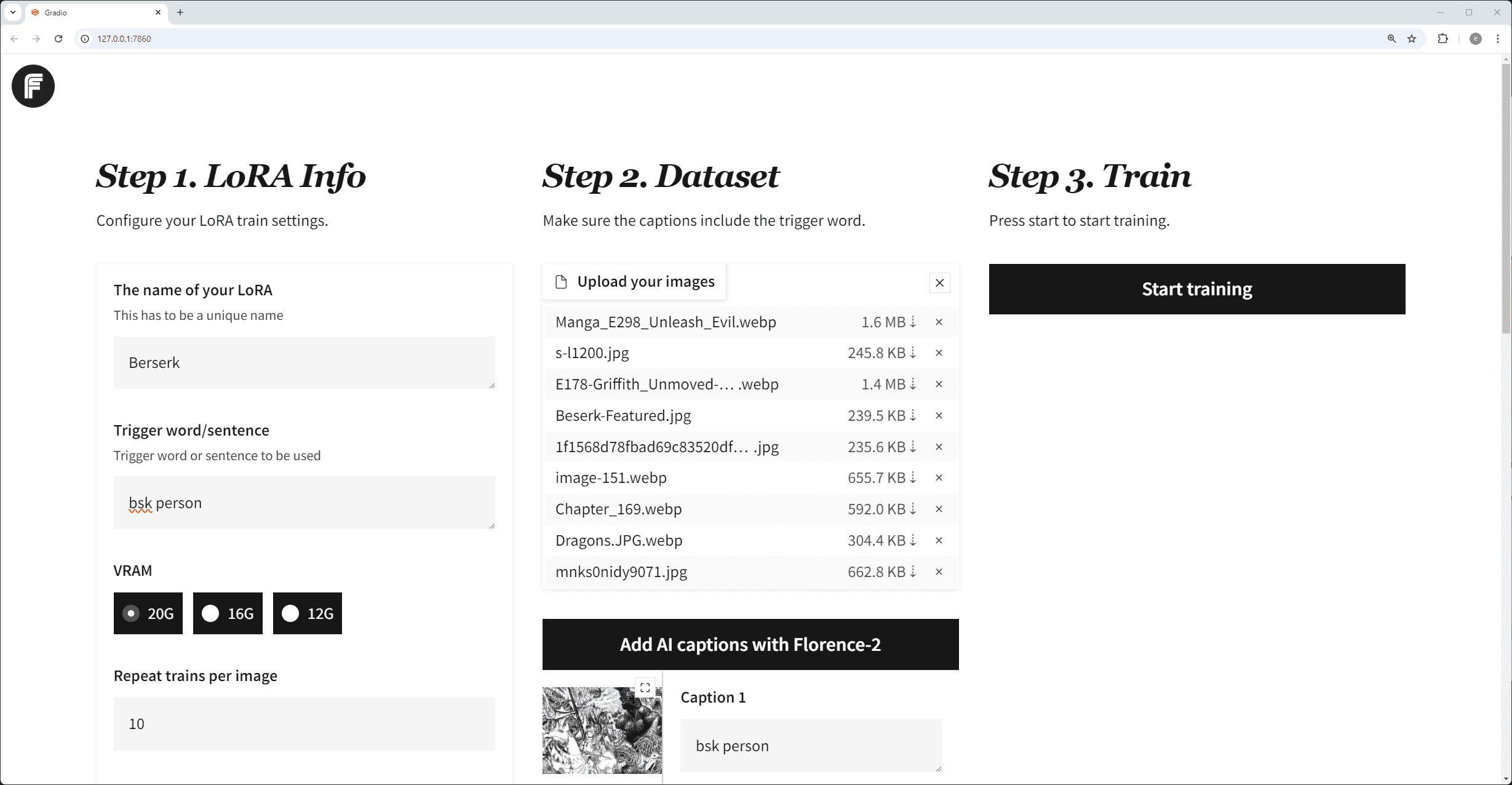

Training U-Net

- Prepare data and all checkpoints.

- Select a configuration file (e.g. stage2_efficient.yaml).

- Run training:

./train_unet.sh

- Modify the data path and save path in the configuration file.

- Video memory requirements:

- stage1.yaml: 23GB.

- stage2.yaml: 30GB.

- stage2_efficient.yaml: 20GB, suitable for regular graphics cards.
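
The memory figures above can be turned into a quick lookup for deciding which configurations are worth trying on a given card. This is a minimal sketch using only the numbers quoted in this article. The function name is illustrative, and actual memory use may differ with batch size and other settings.

```python
# Training memory figures quoted in the article, in GB.
TRAINING_VRAM_GB = {
    "stage1.yaml": 23,
    "stage2.yaml": 30,
    "stage2_efficient.yaml": 20,
}


def configs_that_fit(available_gb):
    """Return config names whose quoted requirement fits, lowest requirement first."""
    fitting = [(need, name) for name, need in TRAINING_VRAM_GB.items()
               if need <= available_gb]
    return [name for need, name in sorted(fitting)]


if __name__ == "__main__":
    # Example: a card with 24GB of video memory.
    print(configs_that_fit(24))
```

For a 24GB card this lists stage2_efficient.yaml and stage1.yaml, while stage2.yaml is excluded because its quoted requirement is 30GB.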

Caveats

- Windows users need to convert the .sh scripts into equivalent Python commands.

- If the picture jitters, increase inference_steps or adjust the video frame rate.

- Chinese audio support was optimized in version 1.5, so make sure you are using the latest model.

With these steps, users can easily install and use LatentSync to generate lip sync videos or further train the model.

Application scenarios

- Post-production: Replace the audio of an existing video and generate matching lip movements, useful for dubbing adjustments.

- Virtual avatars: Input audio to generate videos of avatars talking, for livestreams or short videos.

- Game production: Add dynamic dialogue animations to characters to enhance the gameplay experience.

- Multilingual content: Generate instructional videos with audio in different languages for a global audience.

QA

- Does it support real-time generation?

Not supported. The current version requires the complete audio and video as input and takes seconds to minutes to generate.

- What is the minimum video memory?

Inference requires 6.8GB; 20GB is recommended for training (after the version 1.5 optimizations).

- Can it handle anime videos?

Yes. The official examples include anime videos, and they work well.

- How can I improve Chinese language support?

Use LatentSync version 1.5, which has been optimized for Chinese audio processing.

LatentSync One-Click Installer

Quark: https://pan.quark.cn/s/c3b482dcca83

WIN/MAC standalone installer

Quark: https://pan.quark.cn/s/90d2784bc502

Baidu: https://pan.baidu.com/s/1HwN1k6v-975uLfI0d8N_zQ?pwd=gewd

LatentSync Enhanced: https://pan.quark.cn/s/f8d3d9872abb

LatentSync 1.5 Local Deployment 1: https://pan.baidu.com/s/1QLqoXHxGbAXV3dWOC5M5zg?pwd=ijtt

LatentSync 1.5 Local Deployment 2: https://pan.baidu.com/share/init?surl=zIUPiJc0qZaMp2NMYNoAug&pwd=8520

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
