LangManus: an open source AI automation framework supporting multi-agent collaboration

General Introduction

LangManus is an open source AI automation framework hosted on GitHub. Developed by a group of former colleagues in their spare time, it is an academically driven project that combines language models with specialized tools to accomplish tasks such as web search, data crawling, and code execution. The framework uses a multi-agent system, with roles such as coordinator, planner, and supervisor, that collaborate on complex tasks. LangManus embraces the open source spirit and builds on the excellent work of the wider community, and it welcomes code contributions and issue reports. It uses uv to manage dependencies and set up environments quickly. The project is still in development and is suited to developers interested in AI automation and multi-agent technologies.

Function List

- Multi-agent collaboration: The system contains a coordinator, planner, supervisor, and other agents that divide the work of task routing, strategy development, and execution management.

- Task automation: Combines language models with tools for web search, data crawling, Python code generation, and other operations.

- Language Model Integration: Supports open source models (e.g., Qwen) and OpenAI-compatible interfaces, with a multi-layer LLM system that assigns different models to different kinds of tasks.

- Search and retrieval: Uses the Tavily API for web search and Jina for neural search and content extraction.

- Development Support: Built-in Python REPL and code execution environment, with dependencies managed by uv.

- Workflow management: Provides task assignment, monitoring, and process visualization.

- Document management: Supports file operations, including generating formatted Markdown files.
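The multi-layer LLM system can be pictured as a lookup from task type to a configured model. The sketch below is a minimal illustration, assuming the REASONING_MODEL, BASIC_MODEL and VL_MODEL variables that LangManus's .env configuration uses for complex, simple and image tasks (described later in this article); the task-type labels and the model_for helper are hypothetical, not the project's own code.

```python
import os

# Variable names follow the article's .env description; the task-type keys are hypothetical labels.
MODEL_ENV_VARS = {
    "complex": "REASONING_MODEL",
    "simple": "BASIC_MODEL",
    "image": "VL_MODEL",
}


def model_for(task_type, env=None):
    """Return the model configured for a task type, reading os.environ by default."""
    env = os.environ if env is None else env
    if task_type not in MODEL_ENV_VARS:
        raise ValueError(f"unknown task type: {task_type!r}")
    var = MODEL_ENV_VARS[task_type]
    model = env.get(var)
    # Treat a missing or empty variable as "not configured".
    if not model:
        raise KeyError(f"{var} is not set; add it to .env")
    return model
```

Passing an explicit dict as env makes the lookup easy to test without touching the real environment.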

Usage Guide

LangManus is a locally run framework for users with programming experience. Detailed installation and usage instructions are provided below.

Installation process

To use LangManus locally, you need to install Python, uv, and other tools. The steps are as follows:

- Preparing the environment

- Make sure Python 3.12 is installed. Check the version:

python --version

If the version does not match, download and install it from https://www.python.org/downloads/.

- Install Git for cloning the repository. Download it from https://git-scm.com/.


- Installing uv

uv is a dependency management tool. Run:

pip install uv

Check the installation:

uv --version
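Before moving on, you can confirm the prerequisites from Python itself. This is a hypothetical helper (check_prerequisites is not part of LangManus) that checks for Python 3.12 or newer and looks for uv and git on PATH.

```python
import shutil
import sys


def check_prerequisites(min_python=(3, 12), tools=("uv", "git")):
    """Return a list of human-readable problems; an empty list means every check passed."""
    problems = []
    # sys.version_info compares like a tuple, so (3, 12, 1, "final", 0) >= (3, 12) is True.
    if sys.version_info < min_python:
        found = ".".join(map(str, sys.version_info[:3]))
        wanted = ".".join(map(str, min_python))
        problems.append(f"Python {wanted}+ required, found {found}")
    # shutil.which returns None when an executable is not on PATH.
    for tool in tools:
        if shutil.which(tool) is None:
            problems.append(f"'{tool}' not found on PATH")
    return problems
```

Calling check_prerequisites() returns an empty list when everything is in place; otherwise each entry names what is missing.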

- Cloning the repository

Run in the terminal:

git clone https://github.com/langmanus/langmanus.git

cd langmanus

- Setting up a virtual environment

Use uv to create the environment:

uv python install 3.12

uv venv --python 3.12

source .venv/bin/activate # Windows: .venv\Scripts\activate

- Installing dependencies

Run:

uv sync

This installs all of the project's dependencies.

- Installing Browser Support

LangManus uses Playwright to control the browser. Run:

uv run playwright install

- Configuring Environment Variables

- Copy the example file:

cp .env.example .env

- Edit .env and add your API keys. Example:

TAVILY_API_KEY=your_tavily_api_key
REASONING_MODEL=your_model
REASONING_API_KEY=your_api_key

- Tavily API keys are available from https://app.tavily.com/.
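To catch a missing key before starting the app, a small check can read .env and flag keys that are empty or still hold the your_... placeholders from the example. This is a sketch with a deliberately simple parser (no multi-line values, no inline comments); the project itself may load .env differently, for example with python-dotenv, and parse_env, missing_keys and check_env_file are hypothetical names.

```python
from pathlib import Path

# Per this article, only the Tavily key is strictly required; model keys are configured as needed.
REQUIRED_KEYS = ("TAVILY_API_KEY",)


def parse_env(text):
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip()
        # Drop one pair of matching surrounding quotes, as many .env tools do.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


def missing_keys(values, required=REQUIRED_KEYS):
    """Return required keys that are absent, empty, or still set to a your_... placeholder."""
    return [k for k in required if not values.get(k) or values[k].startswith("your_")]


def check_env_file(path=".env"):
    """Read a .env file from disk and report which required keys still need values."""
    return missing_keys(parse_env(Path(path).read_text(encoding="utf-8")))
```

Running check_env_file() from the project root right after copying .env.example should report TAVILY_API_KEY until you replace the placeholder.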

- Running the project

Run:

uv run main.py

Usage

Once installed, LangManus can be run from the command line or as an API service.

- Basic operation

- Run in the virtual environment:

uv run main.py

- The current version does not ship with default task examples; refer to README.md or wait for an official update.

- API Services

- Start the API server:

make serve

Or:

uv run server.py

- Call the API, for example:

curl -X POST "http://localhost:8000/api/chat/stream" -H "Content-Type: application/json" -d '{"messages":[{"role":"user","content":"search for the latest AI papers"}],"debug":false}'

- The server returns a real-time streaming response.

- Examples of tasks

- Suppose we want to calculate the influence index of DeepSeek R1 on HuggingFace:

- Enter the task (e.g., via the API or code).

- The system assigns the researcher agent to search for data and the coder agent to generate the computation code.

- The reporter agent outputs the results.
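The division of labor in this example can be sketched as a plain Python pipeline. This is an illustration only: LangManus drives its agents with language models, while here the planner returns a fixed two-step plan and the researcher and coder are stubs that return placeholder strings. All class and function names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    agent: str  # worker the supervisor hands this step to
    instruction: str


@dataclass
class State:
    task: str
    plan: list = field(default_factory=list)
    outputs: list = field(default_factory=list)  # (agent, result) pairs in execution order


def planner(state):
    # Hypothetical fixed plan; in LangManus a language model drafts the plan.
    state.plan = [
        Step("researcher", f"collect data for: {state.task}"),
        Step("coder", "compute the index from the collected data"),
    ]


def researcher(step, state):
    # Stub standing in for web search.
    return f"notes for '{step.instruction}'"


def coder(step, state):
    # Stub standing in for writing and running Python code.
    return f"result of '{step.instruction}'"


WORKERS = {"researcher": researcher, "coder": coder}


def supervisor(state):
    # Hand each planned step to the matching worker, in order.
    for step in state.plan:
        state.outputs.append((step.agent, WORKERS[step.agent](step, state)))


def reporter(state):
    lines = [f"Report on: {state.task}"]
    lines += [f"- {agent}: {result}" for agent, result in state.outputs]
    return "\n".join(lines)


def coordinator(task):
    # This sketch routes every task straight to the planner.
    state = State(task)
    planner(state)
    supervisor(state)
    return reporter(state)
```

Swapping a stub for a real model call changes one function without touching the routing, which is the point of splitting the roles.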

LangManus Default Web User Interface: https://github.com/langmanus/langmanus-web

Key Features in Practice

- Multi-agent collaboration

Once a task is entered, the coordinator analyzes it and routes it to the planner, which creates a strategy; the supervisor then assigns the work to the researcher or coder for execution. For example, if you type "search for the latest AI papers", the researcher calls the Tavily API to get the results.

- Language Model Integration

Multiple models are supported. Configure the model used for each type of task in .env:

- Complex tasks: REASONING_MODEL

- Simple tasks: BASIC_MODEL

- Image tasks: VL_MODEL

- Search and retrieval

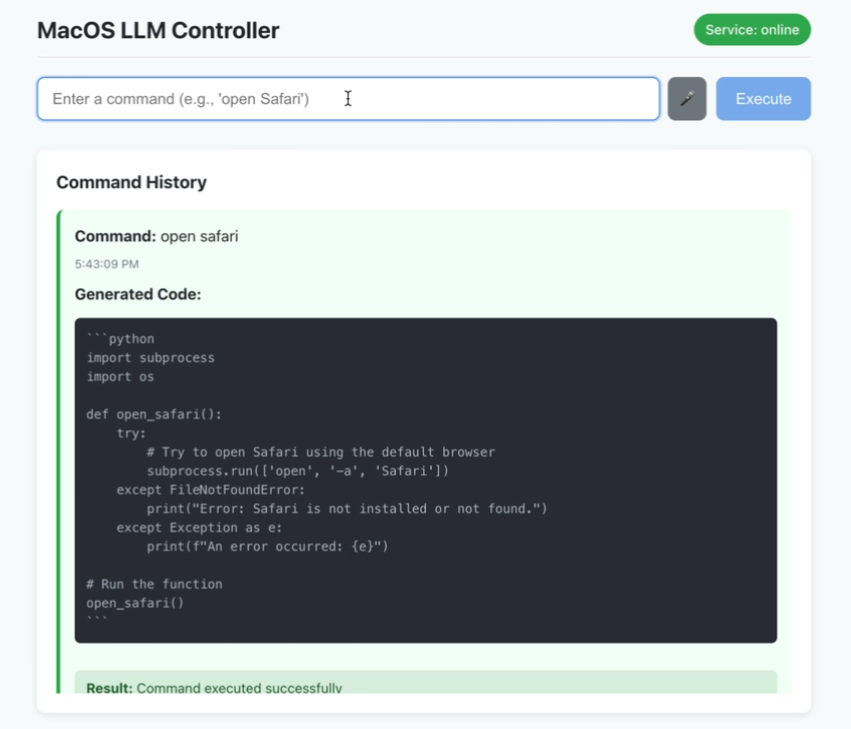

Web search uses the Tavily API (which returns 5 results by default), and Jina extracts page content. Once the API keys are configured, the browser agent can navigate and crawl pages.

- Code execution

The coder agent supports Python and Bash scripts. For example, it can generate code such as:

print("Hello, LangManus!")

The code runs directly in the built-in REPL.
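A built-in REPL essentially executes code and captures what it prints. The sketch below shows that idea with exec and contextlib.redirect_stdout, keeping one namespace across calls the way a REPL session does. It is an illustration under these assumptions, not LangManus's implementation, and it offers no sandboxing, so only run trusted code with it; run_python is a hypothetical name.

```python
import contextlib
import io
import traceback


def run_python(code, namespace=None):
    """Execute code and return everything it printed, plus a traceback if it raised.

    This runs in the current process with full privileges; it is not a sandbox.
    """
    namespace = {} if namespace is None else namespace
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        try:
            exec(code, namespace)
        except Exception:
            # Report the error as output instead of crashing the caller.
            traceback.print_exc(file=buffer)
    return buffer.getvalue()
```

Reusing the same namespace dict across calls lets later snippets see variables defined by earlier ones.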

Development and contribution

- Customizing agents

Modify the Markdown files under src/prompts/ to adjust agent behavior, for example to enhance the researcher's search capabilities.

- Submitting a contribution

- Fork the repository and change the code.

- Submit a pull request to GitHub.

Documentation is limited at this time, so we recommend following the official updates.
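Because agent behavior is driven by the Markdown files in src/prompts/, a quick way to see what you are customizing is to list and load them. This sketch assumes one file per agent named after the agent (for example researcher.md); check the actual file names in the repository. list_prompts and load_prompt are hypothetical helpers.

```python
from pathlib import Path

# Assumption: one Markdown prompt per agent, named after the agent (e.g. researcher.md).
PROMPT_DIR = Path("src/prompts")


def list_prompts(prompt_dir=PROMPT_DIR):
    """Map agent name -> prompt file for every .md file in the directory."""
    return {p.stem: p for p in sorted(Path(prompt_dir).glob("*.md"))}


def load_prompt(agent, prompt_dir=PROMPT_DIR):
    """Return one agent's prompt text; editing that file changes the agent's instructions."""
    path = Path(prompt_dir) / f"{agent}.md"
    if not path.is_file():
        raise FileNotFoundError(f"no prompt file for agent {agent!r} at {path}")
    return path.read_text(encoding="utf-8")
```

Running list_prompts() from the repository root shows which agents have editable prompts before you start changing them.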

Application Scenarios

- Academic research

Researchers use LangManus to collect data from papers, generate analyses, and take part in the GAIA benchmark rankings.

- Automation development

Developers enter requirements and the framework generates Python code to speed up project development.

- Technical learning

Students learn about multi-agent system design by modifying the agents' prompts.

QA

- Is LangManus a commercial product?

No. It is an academically driven open source project focused on research and community collaboration.

- What API keys are required?

At minimum, a Tavily API key is required for search; other model keys are configured as needed.

- How do I handle runtime errors?

Check that the .env configuration is correct, or submit an issue on GitHub.

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
