LangGraph CodeAct: Generating Code to Help Agents Solve Complex Tasks

General Introduction

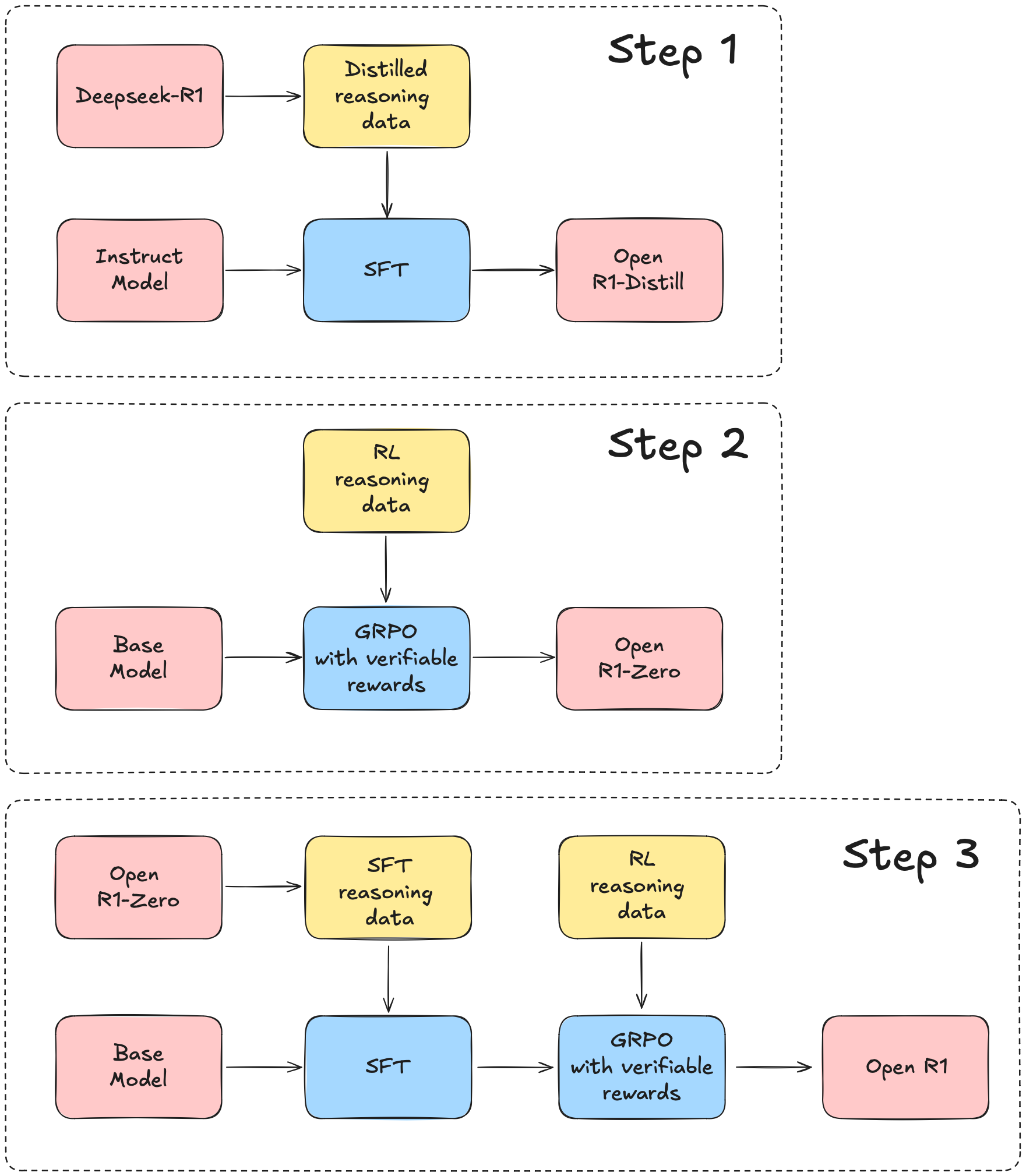

LangGraph CodeAct is a framework open-sourced on GitHub by the LangChain AI team, based on the CodeAct architecture (see paper arXiv:2402.01030 for details). It helps agents handle complex tasks efficiently by generating and executing Python code. The same architecture is used by Manus.im. Unlike traditional JSON function calling, it draws on the full programming power of Python to combine the output of multiple tools and reduce the number of steps. It supports message history and variable persistence for multi-turn conversations and more advanced task scenarios. As of March 29, 2025, the project is still actively maintained and open to AI developers.
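To make the contrast with JSON function calling concrete, here is a minimal sketch in plain Python. It does not use LangGraph CodeAct itself: the `add` and `sqrt` helpers and the `generated_code` string are hypothetical stand-ins for tools and for code a model might write. It only illustrates how one generated snippet can chain several tool results in a single step, where JSON function calling would typically need one round trip per call.

```python
import contextlib
import io
import math


def add(a: float, b: float) -> float:
    """Hypothetical tool: add two numbers."""
    return a + b


def sqrt(a: float) -> float:
    """Hypothetical tool: square root."""
    return math.sqrt(a)


# Hypothetical code an agent might emit: two tool calls chained in one action.
generated_code = "total = add(9, 16)\nprint(sqrt(total))"

# Run the snippet with the tools visible as ordinary Python callables,
# capturing whatever it prints.
namespace = {"add": add, "sqrt": sqrt}
buffer = io.StringIO()
with contextlib.redirect_stdout(buffer):
    exec(generated_code, namespace)

output = buffer.getvalue()
print(output, end="")  # prints 5.0
```

The intermediate value `total` never has to travel back through the model, which is where the reduction in steps comes from.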

Feature List

- Agents generate and execute Python code to accomplish tasks directly.

- Saves conversation history and supports follow-up questions.

- Preserves Python variables so that later tasks can reference them.

- Supports .invoke() to get the final result, or .stream() to get step-by-step output.

- Compatible with custom tools, LangChain tools, and MCP tools.

- Works with any LangChain-supported model (officially tested only with Claude 3.7).

- Provides a custom code sandbox interface for flexibility.

- The system prompt can be freely customized.

Usage Guide

LangGraph CodeAct is an open source framework for developers that runs mainly through the Python environment. The following is a detailed installation and usage guide to help you get started quickly.

Installation process

You will need Python 3.8 or higher before you can use it. The installation steps are as follows:

- Download Project

Open a terminal and clone the repository:

git clone https://github.com/langchain-ai/langgraph-codeact.git

Then go to the project directory:

cd langgraph-codeact

- Installing core dependencies

Run the following command to install the basic dependencies:

pip install langgraph-codeact

To run the examples or use a specific model (e.g. Claude 3.7), also install:

pip install langchain langchain-anthropic

- Configuring Environment Variables

When using the Claude model, you need to set the Anthropic API key. Enter it in the terminal:

export ANTHROPIC_API_KEY="your-api-key"

The key can be obtained from the Anthropic website. If you are using other models, refer to the corresponding documentation for configuration.

Basic usage

After installation, LangGraph CodeAct is invoked from a Python script. Here is how to do it:

- Initializing the Agent

Create a simple script, such as run_agent.py, and enter the following code:

from langchain.chat_models import init_chat_model

from langgraph_codeact import create_codeact

from langgraph.checkpoint.memory import MemorySaver

# Initialize the model

model = init_chat_model("claude-3-7-sonnet-latest", model_provider="anthropic")

# Create a CodeAct instance (example without tools)

code_act = create_codeact(model, tools=[], eval=None)

# Compile the agent with in-memory checkpointing

agent = code_act.compile(checkpointer=MemorySaver())

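- Running Tasks

Enter a question and let the agent generate and execute code. Because the agent was compiled with a checkpointer, LangGraph expects a thread_id in the config. Example:

messages = [{"role": "user", "content": "Calculate the square root of 5"}]

config = {"configurable": {"thread_id": "1"}}

result = agent.invoke({"messages": messages}, config=config)

print(result["messages"][-1].content)

The output is similar to:

sqrt(5) = 2.23606797749979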

Add Tools

The official example provides math tools that extend what the agent can do. Here is how to add them:

- Defining Tools

Add math functions to the script:

from langchain_core.tools import tool

import math

@tool
def add(a: float, b: float) -> float:
    """Addition tool"""
    return a + b

@tool
def sqrt(a: float) -> float:
    """Square root tool"""
    return math.sqrt(a)

tools = [add, sqrt]

- Integrating Tools

Modify the initialization code:

code_act = create_codeact(model, tools=tools, eval=None)

agent = code_act.compile(checkpointer=MemorySaver())

The agent can now use add(3, 4) or sqrt(16).

Customized Code Sandbox

By default, the generated code is executed with eval, but production environments need a secure sandbox. Sample code:

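import subprocess

def custom_sandbox(code: str, _locals: dict) -> tuple[str, dict]:
    # Write the generated code to a file and run it in a separate Python process
    try:
        with open("temp.py", "w") as f:
            f.write(code)
        result = subprocess.check_output(["python", "temp.py"], text=True)
        # A separate process cannot hand variables back, so none are reported
        return result, {}
    except Exception as e:
        return f"Error: {e}", {}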

Pass this function in:

code_act = create_codeact(model, tools=tools, eval=custom_sandbox)
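The sandbox interface shown above takes the generated code plus a dict of existing variables and returns the captured output together with a dict. The subprocess sample always returns an empty dict, so nothing it computes can be reported back. The following is a minimal sketch of an in-process alternative, assuming the second return value is meant to carry variables the code created. The name `simple_eval` is hypothetical, and running code in-process like this is not safe for untrusted input.

```python
import contextlib
import io
from typing import Any


def simple_eval(code: str, _locals: dict[str, Any]) -> tuple[str, dict[str, Any]]:
    """Run code in-process, capture stdout, and report newly created variables."""
    namespace = dict(_locals)  # copy so the caller's dict is not mutated
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, namespace)
        output = buffer.getvalue() or "<code ran, no output printed to stdout>"
    except Exception as e:
        output = f"Error during execution: {e!r}"
    # Only names that were not passed in are reported as new variables.
    new_vars = {
        key: value
        for key, value in namespace.items()
        if key not in _locals and key != "__builtins__"
    }
    return output, new_vars


out, new_vars = simple_eval("x = 2\nprint(x + 1)", {})
print(out, end="")  # prints 3
print(new_vars)     # {'x': 2}
```

Errors are returned as text rather than raised, so the agent can read the message and try again.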

Key Feature Walkthrough

- Generating Code

Enter "Calculate the sum of 3 and 5", and the agent will generate:

result = add(3, 5)

print(result)  # prints 8

- Conversation History

Then ask "Add 2 to result". The agent remembers result and generates:

new_result = result + 2

print(new_result)  # prints 10

- Streaming Output

Use .stream() to view step-by-step results. Passing a list of stream modes makes each item a (mode, chunk) pair:

for typ, chunk in agent.stream(
    {"messages": messages},
    stream_mode=["values", "messages"],
    config={"configurable": {"thread_id": "1"}},
):
    if typ == "messages":
        print(chunk[0].content, end="")
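The way result carries over from one question to the next can be pictured with a minimal sketch in plain Python. This is not the framework's implementation: `run_turn` and the shared `context` dict are hypothetical, standing in for whatever state is kept between turns, and `add` is a plain function rather than a LangChain tool.

```python
import contextlib
import io


def run_turn(code: str, context: dict) -> str:
    """Execute one turn's code against the shared context and return its stdout."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(code, context)  # names assigned by the code stay in context
    return buffer.getvalue()


def add(a: float, b: float) -> float:
    """Hypothetical tool: add two numbers."""
    return a + b


# The same dict is reused across turns, so later code can see earlier variables.
context = {"add": add}
first = run_turn("result = add(3, 5)\nprint(result)", context)
second = run_turn("new_result = result + 2\nprint(new_result)", context)

print(first, end="")   # prints 8
print(second, end="")  # prints 10
```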

Caveats

- In production, avoid using eval directly; run generated code in a sandbox instead.

- Claude 3.7 is the officially recommended model; other models may require prompt adjustments.

- Tool definitions must follow the LangChain specification, with clear parameter types.
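As a rough illustration of the last point, the sketch below checks whether a function declares a type for every parameter and for its return value. The helper `missing_annotations` is hypothetical and uses only the standard library. It is not part of LangChain, whose own validation of tool definitions may differ.

```python
import inspect


def missing_annotations(func) -> list[str]:
    """Return the names of parameters (and 'return') that lack a type annotation."""
    sig = inspect.signature(func)
    missing = [
        name
        for name, param in sig.parameters.items()
        if param.annotation is inspect.Parameter.empty
    ]
    if sig.return_annotation is inspect.Signature.empty:
        missing.append("return")
    return missing


def add(a: float, b: float) -> float:
    """Add two numbers."""
    return a + b


def loose_add(a, b: float):
    """Add two numbers, with incomplete type hints."""
    return a + b


print(missing_annotations(add))        # []
print(missing_annotations(loose_add))  # ['a', 'return']
```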

With these steps, you can build agents that solve a variety of tasks with LangGraph CodeAct.

Application Scenarios

- Math education

Students enter a problem, such as "Calculate the distance of a parabola," and the agent generates code and outputs the result to aid learning.

- Data processing

Developers use it to automatically generate scripts that process CSV files or compute statistics.

- AI experiments

Researchers test the code generation capabilities of agents to optimize complex task workflows.

Q&A

- What programming languages are supported?

Currently only Python is supported, as it is the primary execution language.

- How do I debug code errors?

Use .stream() to view the generated code, or check the sandbox output for error messages.

- Can it be used without tools?

Yes. Set tools=[] at initialization to rely on the model alone to generate code.
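For the debugging question above, error messages are only useful if the sandbox actually returns them. The following is a minimal sketch, assuming code is run in a separate Python process, of how standard error and a timeout could be surfaced. The name `run_isolated` and the 10-second default are hypothetical, and process isolation alone is not a complete security sandbox.

```python
import os
import subprocess
import sys
import tempfile


def run_isolated(code: str, timeout: float = 10.0) -> str:
    """Run code in a separate Python process; return stdout, or stderr on failure."""
    # Write the code to a temporary file so it can be passed to the interpreter.
    with tempfile.NamedTemporaryFile("w", suffix=".py", delete=False) as f:
        f.write(code)
        path = f.name
    try:
        proc = subprocess.run(
            [sys.executable, path],
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return f"Error: timed out after {timeout} seconds"
    finally:
        os.unlink(path)  # always clean up the temporary file
    if proc.returncode != 0:
        # Include the traceback so the failure can be diagnosed.
        return f"Error (exit code {proc.returncode}):\n{proc.stderr}"
    return proc.stdout


print(run_isolated("print(1 + 1)"), end="")  # prints 2
print(run_isolated("1 / 0"))
```

The second call returns the child process's traceback, including the ZeroDivisionError line, instead of only a non-zero exit status.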

© Copyright Notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
