LangChain vs. LangGraph: Official Guidance on Which to Choose

The field of generative AI is evolving rapidly, and new frameworks and technologies keep emerging. Readers should therefore be aware that the content of this article may not stay current. In this article, we take a close look at two mainstream frameworks for building LLM applications, LangChain and LangGraph, and analyze their strengths and weaknesses to help you choose the more suitable tool.

LangChain and LangGraph Foundation Components

Understanding the foundational elements of both frameworks can help developers gain a deeper understanding of the key differences in how they handle core functionality. The following descriptions do not list all of the components of each framework, but are intended to provide a clear understanding of their overall design.

LangChain

LangChain can be used in two main ways: as a sequential chain of predefined steps (a Chain) or through a LangChain Agent. The two differ in how tools are used and how the work is organized: a Chain follows a predefined linear workflow, while an Agent acts as a coordinator and can make more dynamic (non-linear) decisions.

- Chain: A combination of steps that can include calls to LLMs, Agents, tools, external data sources, and procedural code. Chains can split a single process into multiple paths based on logical conditional branching.

- Agent or LLM: The LLM itself generates natural language responses. The Agent combines the LLM with additional capabilities that allow it to reason, invoke tools, and retry a tool call if it fails.

- Tool: A code function that can be called in a chain or triggered by an Agent to interact with an external system.

- Prompt: Includes system prompts (to indicate how the model accomplishes a task and what tools are available), injected information from external data sources (to provide more context for the model), and user input tasks.
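To make the Chain idea concrete, here is a minimal, framework-free sketch in plain Python. It does not use LangChain's API: `make_chain`, `route`, `build_prompt`, and `fake_llm` are hypothetical names chosen for illustration, and the model call is replaced by a stub. It only shows the shape of a chain, where each step receives the previous step's output and branching is fixed at design time.

```python
from typing import Callable, List

Step = Callable[[dict], dict]

def make_chain(steps: List[Step]) -> Step:
    """Compose steps so each one receives the previous step's output."""
    def run(data: dict) -> dict:
        for step in steps:
            data = step(data)
        return data
    return run

def route(data: dict) -> dict:
    # Conditional branching that is decided when the chain is designed.
    branch = "long" if len(data["question"]) > 40 else "short"
    return {**data, "branch": branch}

def build_prompt(data: dict) -> dict:
    # Prompt = system instructions + injected context + user task.
    prompt = f"System: answer briefly.\nContext: {data['context']}\nUser: {data['question']}"
    return {**data, "prompt": prompt}

def fake_llm(data: dict) -> dict:
    # Stand-in for a real model call (assumption: no network access here).
    return {**data, "answer": f"[model reply to {len(data['prompt'])} chars of prompt]"}

chain = make_chain([route, build_prompt, fake_llm])
result = chain({"question": "What is a chain?", "context": "LangChain docs"})
print(result["branch"], "->", result["answer"])
```

In LangChain itself, composition of this kind is expressed with its own runnable abstractions rather than a hand-written loop.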

LangGraph

LangGraph takes a different approach to building AI workflows. As the name suggests, it orchestrates workflows as a graph. Because it can move flexibly between AI Agents, procedural code, and other tools, it is better suited to complex application scenarios where linear chains, branching chains, or simple Agent systems fall short. LangGraph is designed to handle more complex conditional logic and feedback loops than LangChain's chains.

- Graph: The workflow itself, expressed as nodes connected by edges. LangGraph also supports cyclical graphs, which create loops and feedback mechanisms that allow certain nodes to be visited multiple times.

- Node: Represents a step in a workflow, such as an LLM query, API call, or tool execution.

- Edge and Conditional Edge: Edges are used to connect nodes and define the flow of information so that the output of one node is used as input to the next. Conditional edges allow information to flow from one node to another when specific conditions are met. Developers can customize these conditions.

- State: The state is a developer-defined TypedDict object that contains all the relevant information needed for the current execution of the graph. LangGraph automatically updates the state at each node.

- Agent or LLM: An LLM in the graph is only responsible for generating textual responses to inputs. The Agent capability, on the other hand, allows the graph to contain multiple nodes representing different components of the Agent (e.g., reasoning, tool selection, and tool execution). The Agent can decide which paths to take in the graph, update the state of the graph, and perform more tasks than just text generation.
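The components above can be sketched without the library. The following is a plain-Python illustration, not LangGraph's API: `run_graph`, the `END` sentinel, and the node names are hypothetical. It shows a TypedDict state, two nodes, a normal edge, and a conditional edge that loops back to form a cycle.

```python
from typing import Callable, Dict, TypedDict

class State(TypedDict):
    draft: str
    revisions: int

END = "__end__"  # hypothetical sentinel meaning "stop here"

def write(state: State) -> dict:
    # Node: extend the draft; a node returns only the keys it changes.
    return {"draft": state["draft"] + "x", "revisions": state["revisions"] + 1}

def review(state: State) -> dict:
    # Node: a no-op check here; a real node might call an LLM.
    return {}

def after_review(state: State) -> str:
    # Conditional edge: loop back to "write" until the draft is long enough.
    return END if len(state["draft"]) >= 3 else "write"

nodes: Dict[str, Callable[[State], dict]] = {"write": write, "review": review}
edges: Dict[str, Callable[[State], str]] = {
    "write": lambda s: "review",  # normal edge
    "review": after_review,       # conditional edge (creates the cycle)
}

def run_graph(state: State, entry: str = "write", max_steps: int = 20) -> State:
    current = entry
    for _ in range(max_steps):  # guard against endless loops
        if current == END:
            break
        state = {**state, **nodes[current](state)}  # merge the node's update
        current = edges[current](state)
    return state

final = run_graph({"draft": "", "revisions": 0})
print(final)
```

The `max_steps` guard is a design choice of this sketch: any graph that allows cycles needs some bound so that a condition that never becomes true cannot loop forever.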

In short, LangChain is better suited to linear, tool-based workflows, while LangGraph is better suited to complex, multi-path AI workflows with feedback mechanisms.

Differences between LangChain and LangGraph in the way core functionality is handled

LangGraph and LangChain overlap in some of their capabilities, but they approach the problem differently; LangChain focuses on linear workflows (through chains) or different AI Agent patterns, while LangGraph focuses on creating more flexible, fine-grained, process-based workflows that can contain AI Agents, tool calls, procedural code, etc.

In general, LangChain has a gentler learning curve because it provides more abstractions and predefined configurations, which makes it easier to apply to simple use cases. LangGraph, on the other hand, allows finer-grained customization of the workflow design, which means it is less abstract and developers need to learn more to use it effectively.

Tool Calling

LangChain

In LangChain, how a tool is invoked depends on whether it runs as one of a series of sequential steps in a chain, or whether an Agent is used on its own (without being explicitly defined in a chain).

- In a chain: Tools are included as predefined steps, so they are not necessarily invoked dynamically by an Agent. Which tools are called is decided when the chain is designed.

- With a standalone Agent: When the Agent is not defined in a chain, it has more autonomy and can decide which tool to call and when, based on the list of tools it has access to.
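The difference between the two invocation styles can be sketched in plain Python. This is an illustration only, not LangChain code: the tools are stubs, and `choose_tool` is a hypothetical keyword match standing in for the LLM's reasoning.

```python
from typing import Callable, Dict

def get_weather(city: str) -> str:
    return f"Sunny in {city}"  # stub tool

def get_time(city: str) -> str:
    return f"12:00 in {city}"  # stub tool

TOOLS: Dict[str, Callable[[str], str]] = {"weather": get_weather, "time": get_time}

def weather_chain(city: str) -> str:
    # Chain approach: the tool is hard-wired as a step at design time.
    observation = get_weather(city)
    return f"Report: {observation}"

def choose_tool(task: str) -> str:
    # Stand-in for the LLM's reasoning (assumption: keyword match, not a model).
    return "time" if "time" in task.lower() else "weather"

def agent(task: str, city: str) -> str:
    # Agent approach: the tool is picked at run time from the available list.
    name = choose_tool(task)
    return f"Report: {TOOLS[name](city)}"

print(weather_chain("Paris"))
print(agent("What time is it?", "Paris"))
```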

Figure: example flow for the chain approach.

Figure: example flow for the Agent approach.

LangGraph

In LangGraph, a tool is usually represented as a node in the graph. If the graph contains an Agent, the Agent decides which tool to invoke, based on its reasoning ability. When the Agent selects a tool, the workflow moves to the corresponding tool node to execute the tool's operation. The edge between the Agent and the tool node can carry conditional logic that determines whether or not the tool is executed, which gives the developer more fine-grained control. If there is no Agent in the graph, the tool is invoked much as it would be in a LangChain chain: it is executed in the workflow based on predefined conditional logic.

Figure: example flow of a graph with an Agent.

Figure: example flow of a graph without an Agent.

Conversation History and Memory

LangChain

LangChain provides a built-in abstraction layer for handling conversation history and memory. It supports memory management at different levels of granularity, which helps control token usage, mainly in the following ways:

- Full conversation history for the session

- Summarized version of the conversation history

- Custom-defined memory

In addition, developers can customize the long-term memory system to store conversation history in an external database and retrieve relevant memories when needed.
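The effect of these memory strategies on token usage can be illustrated with a small plain-Python sketch. It does not use LangChain's memory classes: `count_tokens` is a crude word count, and the summary is a placeholder string where a real system would ask an LLM to summarize. All names here are hypothetical.

```python
from typing import List, Tuple

Message = Tuple[str, str]  # (role, text)

def count_tokens(text: str) -> int:
    # Crude approximation (assumption): one token per whitespace-separated word.
    return len(text.split())

def full_history(messages: List[Message]) -> List[Message]:
    # Strategy 1: send everything; the cost grows with every turn.
    return list(messages)

def summarized_history(messages: List[Message], keep_last: int = 2) -> List[Message]:
    # Strategy 2: replace older turns with a summary, keep recent ones verbatim.
    if len(messages) <= keep_last:
        return list(messages)
    older, recent = messages[:-keep_last], messages[-keep_last:]
    # A real system would generate this summary with an LLM; this is a placeholder.
    summary = ("system", f"Summary of {len(older)} earlier messages")
    return [summary] + recent

def total_tokens(messages: List[Message]) -> int:
    return sum(count_tokens(text) for _, text in messages)

history = [
    ("user", "Hi, I am planning a trip to Japan next spring"),
    ("assistant", "Great, which cities would you like to visit"),
    ("user", "Tokyo and Kyoto"),
    ("assistant", "Both are lovely in cherry blossom season"),
]
print(total_tokens(full_history(history)), total_tokens(summarized_history(history)))
```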

LangGraph

In LangGraph, State is responsible for managing memory: it keeps track of information by recording the values of the defined variables at each point in time. State can include:

- Conversation history

- Steps of the task being executed

- The last output of the language model

- Other important information

State is passed between nodes, so every node can read the current state of the system. State by itself, however, covers only the current graph run and does not give you long-term memory across sessions. To store memories persistently, developers can add dedicated nodes that write memories and variables to an external database for later retrieval.
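One way to picture a dedicated memory node is the sketch below, which persists the state to SQLite using only the Python standard library. It is an illustration under assumptions, not LangGraph code: `save_memory_node`, `load_memory`, the table layout, and the session ID are all hypothetical.

```python
import json
import sqlite3
from typing import List, TypedDict

class State(TypedDict):
    history: List[str]
    last_output: str

def save_memory_node(state: State, db: sqlite3.Connection, session_id: str) -> dict:
    # Dedicated node: write the current state to an external store.
    db.execute(
        "INSERT OR REPLACE INTO memory (session_id, state) VALUES (?, ?)",
        (session_id, json.dumps(state)),
    )
    db.commit()
    return {}  # this node makes no changes to the state

def load_memory(db: sqlite3.Connection, session_id: str) -> State:
    # Retrieve a saved state at the start of a later session.
    row = db.execute(
        "SELECT state FROM memory WHERE session_id = ?", (session_id,)
    ).fetchone()
    if row is None:
        return {"history": [], "last_output": ""}
    return json.loads(row[0])

db = sqlite3.connect(":memory:")  # use a file path instead to survive restarts
db.execute("CREATE TABLE memory (session_id TEXT PRIMARY KEY, state TEXT)")

state: State = {"history": ["user: hello"], "last_output": "Hi there"}
save_memory_node(state, db, "session-1")
restored = load_memory(db, "session-1")
print(restored)
```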

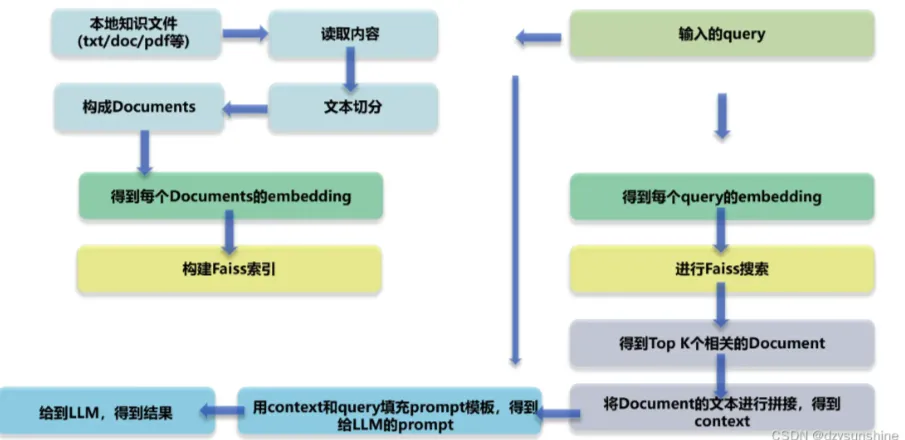

Out-of-the-Box RAG Capabilities

LangChain

LangChain natively supports complex RAG workflows, and provides a sophisticated set of tools that make it easy for developers to integrate RAG into their applications. For example, it provides:

- Document Loading

- Text Parsing

- Embedding Creation

- Vector Storage

- Retrieval Capabilities

Developers can directly use the APIs provided by LangChain (such as langchain.document_loaders, langchain.embeddings, and langchain.vectorstores) to implement RAG workflows.

LangGraph

In LangGraph, a RAG pipeline has to be designed by the developer and implemented as part of the graph structure. For example, developers can create a separate node for each of the following:

- Document Parsing

- Embedding Computation

- Semantic Search (Retrieval)

These nodes can be connected to each other by normal edges or conditional edges, and the graph state carries the data that each step of the RAG pipeline shares with the next.
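A hand-built RAG pipeline of this kind might look like the following sketch. It is plain Python rather than LangGraph code, and it rests on loud simplifications: the "embedding" is a bag-of-words count instead of a learned vector, chunks are single lines, and every function name is hypothetical. It only shows three nodes passing data through a shared state.

```python
import math
from collections import Counter
from typing import Dict, List, TypedDict

class RagState(TypedDict, total=False):
    raw_docs: List[str]
    question: str
    chunks: List[str]
    vectors: List[Dict[str, int]]
    retrieved: str

def embed(text: str) -> Dict[str, int]:
    # Toy embedding (assumption): word counts, not a learned vector.
    return Counter(text.lower().split())

def cosine(a: Dict[str, int], b: Dict[str, int]) -> float:
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def parse_node(state: RagState) -> RagState:
    # Document parsing: one chunk per non-empty line.
    chunks = [ln.strip() for doc in state["raw_docs"] for ln in doc.splitlines() if ln.strip()]
    return {"chunks": chunks}

def embed_node(state: RagState) -> RagState:
    # Embedding computation for every chunk.
    return {"vectors": [embed(c) for c in state["chunks"]]}

def retrieve_node(state: RagState) -> RagState:
    # Semantic search: pick the chunk most similar to the question.
    q = embed(state["question"])
    scores = [cosine(q, v) for v in state["vectors"]]
    best = max(range(len(scores)), key=scores.__getitem__)
    return {"retrieved": state["chunks"][best]}

state: RagState = {
    "raw_docs": ["Edges connect nodes in a graph\nChains run steps in a fixed order"],
    "question": "what do edges connect",
}
for node in (parse_node, embed_node, retrieve_node):  # normal edges: fixed order
    state = {**state, **node(state)}                  # the state carries the data
print(state["retrieved"])
```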

Parallelism

LangChain

LangChain supports running multiple chains or Agents in parallel. Basic parallel processing is available through the RunnableParallel class.

However, if more advanced parallel computation or asynchronous tool calls are needed, developers have to implement them on their own with Python libraries such as asyncio.
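As a rough illustration of the do-it-yourself route, the sketch below runs three stubbed tool calls concurrently with `asyncio.gather` from the standard library. The tool names and delays are made up, and `asyncio.sleep` stands in for real I/O such as an HTTP request.

```python
import asyncio
import time

async def call_tool(name: str, delay: float) -> str:
    # Stand-in for an I/O-bound tool call such as an HTTP request.
    await asyncio.sleep(delay)
    return f"{name} done"

async def run_tools() -> list:
    # gather() runs the coroutines concurrently and returns results in input order.
    return await asyncio.gather(
        call_tool("search", 0.2),
        call_tool("weather", 0.2),
        call_tool("calendar", 0.2),
    )

start = time.perf_counter()
results = asyncio.run(run_tools())
elapsed = time.perf_counter() - start
print(results, f"{elapsed:.2f}s")
```

Because the three calls wait at the same time, the total run time is close to the longest single delay rather than the sum of all three.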

LangGraph

LangGraph naturally supports parallel execution of nodes as long as there are no dependencies between them (that is, none of them needs another's output as its input). This means that multiple Agents can run simultaneously, provided their nodes do not depend on each other.

LangGraph also supports:

- Running multiple graphs in parallel with RunnableParallel

- Calling tools in parallel with Python's asyncio library

Retry logic and error handling

LangChain

LangChain's error handling needs to be explicitly defined by the developer, which can be done via:

- Introducing Retry Logic in the Chain

- Handling Tool Call Failures in the Agent

LangGraph

LangGraph can embed error handling logic directly in the workflow by making error handling a separate node.

- When a task fails, you can jump to another error handling node or retry at the current node.

- Failed nodes are retried individually, rather than the entire workflow being re-executed.

- In this way, the graph can continue execution from the point of failure without having to start from scratch.

If your task involves multiple steps and tool calls, this error handling mechanism can be very important.
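The node-level retry described above can be sketched in plain Python. This is not LangGraph's own retry mechanism: `run_node`, `handle_error`, and the deliberately flaky `fetch` node are hypothetical. The point is that only the failing node is retried, while results from earlier nodes stay in the state.

```python
from typing import Callable

attempts = {"fetch": 0}

def fetch(state: dict) -> dict:
    # Flaky node (assumption): fails twice, then succeeds.
    attempts["fetch"] += 1
    if attempts["fetch"] < 3:
        raise ConnectionError("temporary failure")
    return {"data": "payload"}

def handle_error(state: dict) -> dict:
    # Separate error-handling node: record the failure and supply a fallback.
    return {"data": None, "error": state["error"]}

def run_node(node: Callable[[dict], dict], state: dict, max_retries: int = 3) -> dict:
    # Retry only this node; results from earlier nodes stay in `state`.
    last_error = ""
    for _ in range(max_retries):
        try:
            return {**state, **node(state)}
        except Exception as exc:
            last_error = f"{type(exc).__name__}: {exc}"
    # Retries exhausted: jump to the error-handling node instead.
    return {**state, **handle_error({**state, "error": last_error})}

state = {"query": "langgraph"}  # produced by an earlier node
state = run_node(fetch, state)
print(state, "after", attempts["fetch"], "attempts")
```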

How to choose between LangChain and LangGraph?

Developers can:

- Use LangChain only

- Use LangGraph only

- Use LangChain and LangGraph together

It is also possible to combine LangGraph's graph-structuring capabilities with other Agent frameworks such as Microsoft's AutoGen, e.g., by using an AutoGen Agent as a node in a LangGraph graph.

LangChain and LangGraph each have their advantages, and choosing the right tool can be confusing.

When to choose LangChain?

If developers need to build AI workflows quickly, consider LangChain for the following scenarios:

- Linear tasks: predefined workflows for document retrieval, text generation, summarization, etc.

- Agent tasks: the AI Agent needs to make dynamic decisions, but fine-grained control over a complex process is not required.

When to choose LangGraph?

If the application scenario requires a non-linear workflow, consider LangGraph for the following situations:

- The task involves dynamic interaction of multiple components.

- The task requires conditional logic, complex branching, error handling, or parallel execution.

- Developers are willing to implement some of the features not provided by LangChain on their own.

When should I use LangChain and LangGraph together?

If you want to take advantage of both LangChain's ready-made abstractions (e.g., RAG components and conversation memory) and LangGraph's non-linear orchestration capabilities, consider using both.

Combined, the two can fully utilize their respective strengths to create more flexible and powerful AI workflows.

© Copyright AI Sharing Circle. Please do not reproduce without permission.