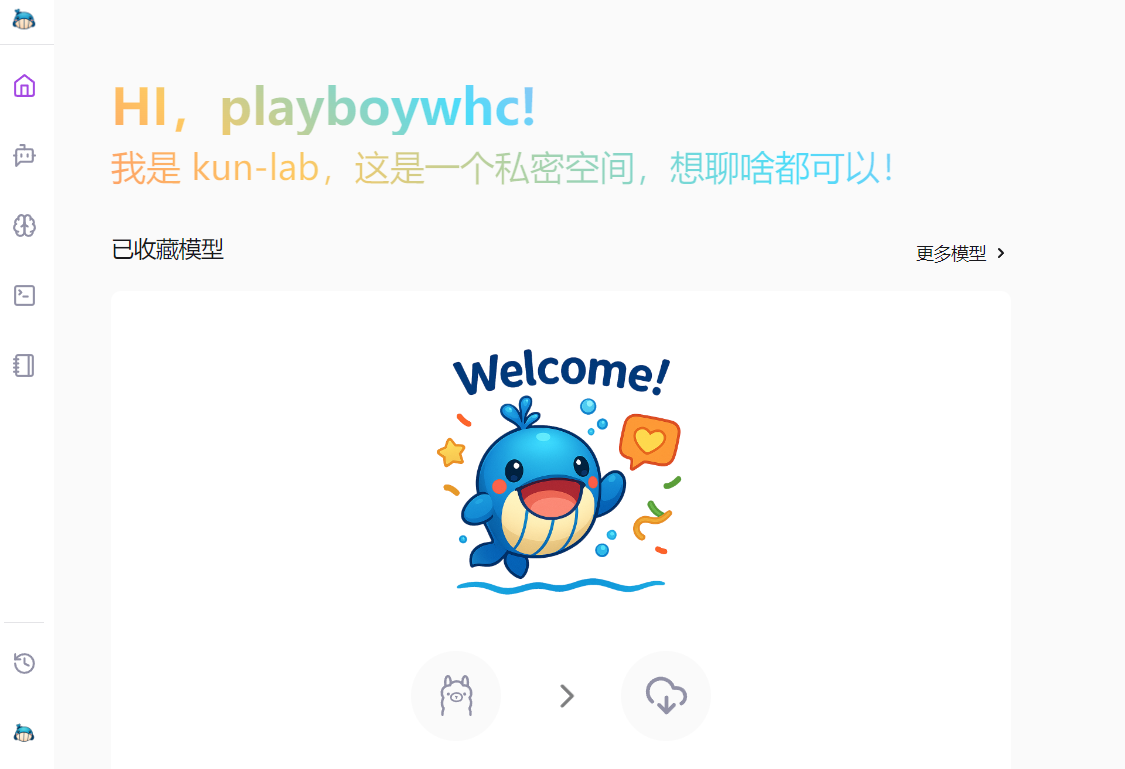

kun-lab: a lightweight native AI chat client built on Ollama

General Introduction

kun-lab is an open-source AI conversation app built on Ollama, focused on providing a lightweight, fast, local, and intelligent conversation experience. It supports Windows, macOS, and Linux (Windows is currently the main focus) and requires no complex configuration. Users can hold smooth multi-round conversations with AI, parse documents, recognize images, and even search the web for answers. All data is stored locally for privacy and security. kun-lab also offers code rendering, prompt templates, and a multilingual interface, making it suitable for developers, students, and anyone who needs an efficient AI tool.

Function List

- Intelligent dialog: Supports multi-round, real-time conversations with smooth AI responses, plus optional web search for more comprehensive answers.

- Document parsing: Upload PDF, DOC, PPT, or TXT files, and the AI understands the content and answers questions about it.

- Image recognition: Recognizes JPG and PNG images, extracts text or analyzes scenes, and supports multi-round dialog.

- Model management: Easily switch between Ollama and Hugging Face models, with support for the GGUF and safetensors formats.

- Prompt templates: Built-in templates, plus support for custom ones, make it easy to spark the AI's creativity.

- Code rendering: Automatically highlights code in many programming languages for clear presentation.

- Quick notes: Supports Markdown syntax with real-time preview and one-click export.

- Multi-user support: Multiple people can log in at the same time, each with their own conversation space.

- Multilingual interface: Supports Chinese, English, and other languages for a friendlier experience.

Usage Guide

kun-lab is a feature-rich AI dialog tool that runs on Ollama, is easy to operate, and is well suited to local use. Below is a detailed walkthrough of the installation process, the core functions, and the first steps, to help users get up to speed quickly.

Install kun-lab

kun-lab can be installed as a desktop application or deployed from source. Currently, the desktop application only supports Windows, while source deployment supports Windows, macOS, and Linux.

Method 1: Desktop application (recommended)

- Visit the GitHub release page (https://github.com/bahamutww/kun-lab/releases).

- Download the installation package for your system:

- Windows: `.exe` file.

- macOS: `.dmg` file (future support).

- Linux: `.AppImage` or `.deb` file (future support).

- Install on Windows:

- Double-click the installation package and follow the prompts to complete the installation.

- After installation, click the desktop icon to run kun-lab; no additional configuration is needed.

- After startup, select a language (Chinese is available by default) and enter the main interface.

Method 2: Source code deployment

If you want to customize or develop kun-lab, you need to set up the environment and run the code yourself. The detailed steps are below:

- Prepare the environment:

- Make sure the system is Windows, macOS, or Linux.

- Install Python 3.10 or later (download: https://www.python.org).

- Install Node.js 20.16.0 or later (download: https://nodejs.org).

- Install Ollama and start the service (reference: https://ollama.com).

- Clone the repository:

```
git clone https://github.com/bahamutww/kun-lab.git
cd kun-lab
```

- Create a virtual environment:

```
python -m venv venv
.\venv\Scripts\activate       # Windows
# source venv/bin/activate    # macOS/Linux
```

- Install back-end dependencies (from the repository root):

```
cd backend
pip install -r requirements.txt
```

- Install front-end dependencies (from the repository root):

```
cd frontend
npm install
```

- Configure environment variables:

```
cp .env.example .env
```

- Open the `.env` file in a text editor and modify the configuration (e.g., the port number) as needed.

- Launch the application:

```
python run_dev.py
```

- Open your browser and visit http://localhost:5173 to get started.
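Before running `run_dev.py`, it can help to confirm the prerequisites from the list above. The following is a minimal sketch of a standalone check, not part of kun-lab; the function names are hypothetical, and it only compares against the stated minimums (Python 3.10, Node.js 20.16.0) and looks for an `ollama` executable on the PATH.

```python
from __future__ import annotations

import re
import shutil
import subprocess
import sys

# Minimum versions stated in the source-deployment steps.
MIN_PYTHON = (3, 10)
MIN_NODE = (20, 16, 0)


def parse_version(text: str) -> tuple[int, ...]:
    """Extract a numeric version tuple from output such as 'v20.16.0'."""
    match = re.search(r"\d+(?:\.\d+)*", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(found: tuple[int, ...], minimum: tuple[int, ...]) -> bool:
    """Compare versions component by component, padding the shorter one with zeros."""
    width = max(len(found), len(minimum))
    found = tuple(found) + (0,) * (width - len(found))
    minimum = tuple(minimum) + (0,) * (width - len(minimum))
    return found >= minimum


def check_node() -> bool:
    """Run `node --version` if Node.js is on the PATH and compare it to MIN_NODE."""
    if shutil.which("node") is None:
        return False
    out = subprocess.run(["node", "--version"], capture_output=True, text=True).stdout
    return meets_minimum(parse_version(out), MIN_NODE)


if __name__ == "__main__":
    print("Python OK:", meets_minimum(tuple(sys.version_info[:3]), MIN_PYTHON))
    print("Node.js OK:", check_node())
    print("ollama on PATH:", shutil.which("ollama") is not None)
```

Padding with zeros means a short version string like `22` is treated as `22.0.0`, so it still passes the Node.js check.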

Core Function Operation

The following describes kun-lab's main functions and how to use each of them, so that users can get started easily.

1. Intelligent AI dialogues

- Start a conversation:

- Open kun-lab and click "Chat Conversation" or "New Conversation".

- Select a model from the model list (by default, the list contains models provided by Ollama).

- Enter a question, and the AI responds in real time.

- Web search:

- If the question requires up-to-date information, check the "Enable web search" box.

- The AI then combines web page data into a more comprehensive answer.

- Manage history:

- Conversations are saved automatically and can be viewed by clicking "History" in the sidebar.

- Individual conversations can be deleted or exported.

- Code support:

- Enter a code-related question, and the AI displays the code with syntax highlighting.

- Python, JavaScript, and many other languages are supported.
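The article does not show kun-lab's client code. As an illustration of how multi-round dialog typically works against Ollama, here is a minimal sketch using Ollama's REST chat endpoint (`POST /api/chat` on the default port 11434): the whole message history is resent on every turn, which is what lets the model see earlier questions. The `ChatSession` class and its method names are hypothetical.

```python
from __future__ import annotations

import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default address; adjust if changed


class ChatSession:
    """Keeps the message history so each request carries all earlier turns."""

    def __init__(self, model: str, system: str | None = None):
        self.model = model
        self.messages: list[dict] = []
        if system:
            self.messages.append({"role": "system", "content": system})

    def build_payload(self, user_text: str) -> dict:
        """Record the user's turn and return the JSON body for POST /api/chat."""
        self.messages.append({"role": "user", "content": user_text})
        return {"model": self.model, "messages": list(self.messages), "stream": False}

    def record_reply(self, response: dict) -> str:
        """Store the assistant message from a non-streaming response and return its text."""
        message = response["message"]
        self.messages.append({"role": message["role"], "content": message["content"]})
        return message["content"]

    def ask(self, user_text: str) -> str:
        """Send one turn to a running Ollama server and return the reply text."""
        body = json.dumps(self.build_payload(user_text)).encode("utf-8")
        request = urllib.request.Request(
            f"{OLLAMA_URL}/api/chat",
            data=body,
            headers={"Content-Type": "application/json"},
        )
        with urllib.request.urlopen(request) as resp:
            return self.record_reply(json.load(resp))
```

With Ollama running and a model pulled, `session = ChatSession("qwq:32b")` followed by repeated `session.ask(...)` calls would carry the conversation forward. Setting `"stream": False` keeps the sketch simple; a chat UI would more likely stream tokens as they arrive.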

2. Document parsing

- Upload a document:

- Open the "Document Dialog" page.

- Click the "Upload" button and select a PDF, DOC, PPT, or TXT file.

- After the document is parsed, the AI displays a summary.

- Ask questions:

- Enter a question about the document in the dialog box.

- The AI answers based on the document's content and keeps the conversation context.

- Search content:

- Enter keywords, and the AI quickly locates the relevant parts of the document.

- Click a result to jump to its location in the original document.
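The source does not say how kun-lab locates passages. Purely as an illustration, a naive keyword search over the extracted document text can be sketched as below; `Hit` and `search` are hypothetical names, and a real implementation may well use embeddings or a search index instead. The returned character offset is the piece of information a "jump to the original location" feature would need.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Hit:
    start: int    # character offset of the chunk in the extracted text
    score: int    # total keyword occurrences in the chunk
    snippet: str  # chunk text shown to the user


def search(text: str, keywords: list[str], size: int = 200) -> list[Hit]:
    """Score fixed-size chunks by case-insensitive keyword counts, best matches first."""
    lowered = [k.lower() for k in keywords if k]  # skip empty keywords
    hits: list[Hit] = []
    for start in range(0, len(text), size):
        chunk = text[start:start + size]
        score = sum(chunk.lower().count(k) for k in lowered)
        if score:
            hits.append(Hit(start=start, score=score, snippet=chunk.strip()))
    # Highest score first; ties keep document order.
    hits.sort(key=lambda h: (-h.score, h.start))
    return hits
```

Fixed-size chunks keep the sketch short, but a keyword that straddles a chunk boundary is missed; overlapping windows or paragraph-aligned chunks would avoid that.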

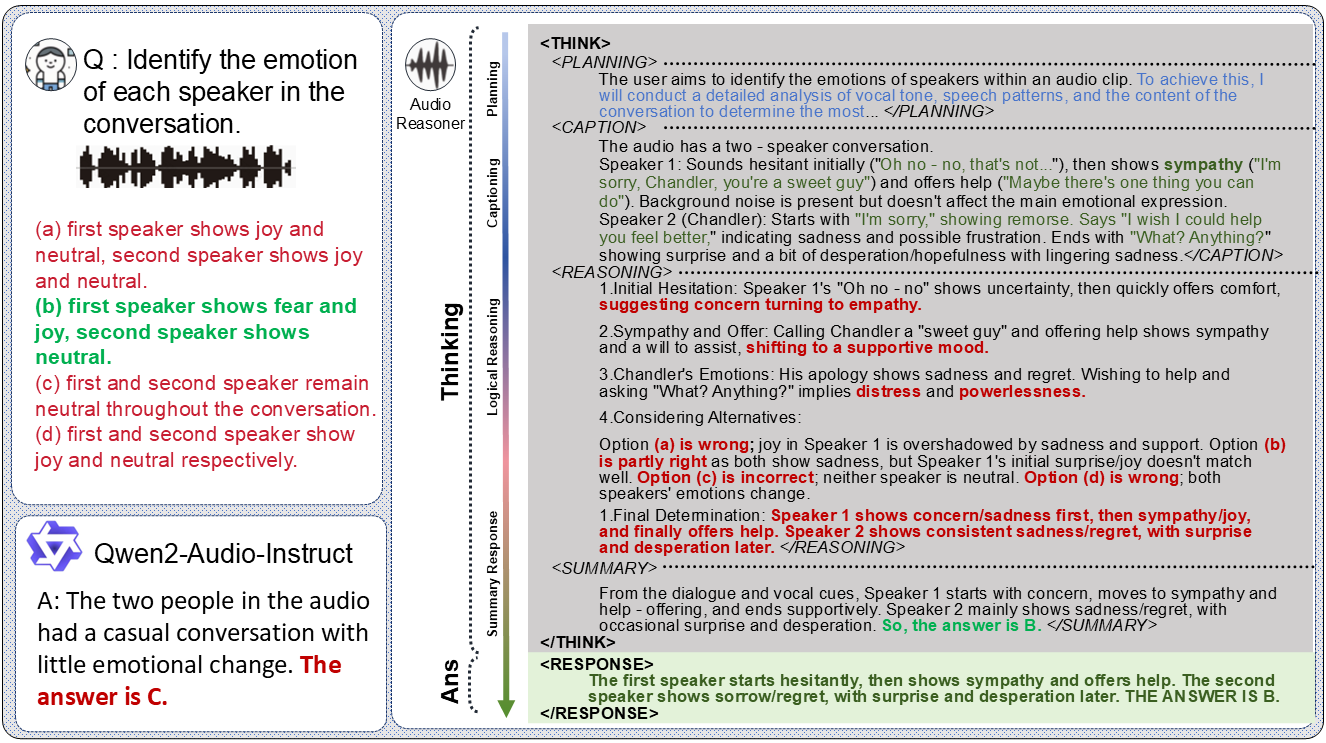

3. Image recognition

- Upload an image:

- Go to the "Picture dialog" page.

- Click "Upload Image" and select a JPG, JPEG, or PNG file.

- The AI automatically recognizes the scene or extracts text.

- Multi-round dialog:

- Ask questions about the image, such as "What's in this picture?"

- After the AI answers, you can keep asking deeper follow-up questions.

- OCR:

- If the image contains text, the AI extracts and displays it.

- You can copy the text or ask questions about it.
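How kun-lab passes images to the model isn't documented here. Ollama's chat API accepts images for vision-capable models as base64 strings in a message's `images` field, so the request behind an image question can be sketched as follows. The helper names are hypothetical, and the allowed suffixes simply mirror the formats listed above.

```python
from __future__ import annotations

import base64
from pathlib import Path

SUPPORTED_SUFFIXES = {".jpg", ".jpeg", ".png"}  # formats listed in the article


def build_image_message(data: bytes, filename: str, question: str) -> dict:
    """Build one chat message that attaches an image as a base64 string."""
    suffix = Path(filename).suffix.lower()
    if suffix not in SUPPORTED_SUFFIXES:
        raise ValueError(f"unsupported image type: {suffix or filename}")
    encoded = base64.b64encode(data).decode("ascii")
    return {"role": "user", "content": question, "images": [encoded]}


def load_image_message(path: str, question: str) -> dict:
    """Read an image file from disk and wrap it with build_image_message."""
    p = Path(path)
    return build_image_message(p.read_bytes(), p.name, question)


def vision_payload(model: str, history: list[dict]) -> dict:
    """JSON body for POST /api/chat; follow-up text turns are appended to history."""
    return {"model": model, "messages": history, "stream": False}
```

Multi-round image dialog then works like text chat: the first message carries the image, and follow-up questions are appended to the same `history` list so the model still has the picture in context.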

4. Model management

- Pull a model:

- Go to the "Model Library" page.

- Click "Pull Model".

- Enter a command such as `ollama run qwq:32b` or `ollama run hf.co/Qwen/QwQ-32B-GGUF:Q2_K`.

- Wait for the download to complete; the model is then ready to use.

- Switch models:

- On the dialog page, click the model drop-down menu.

- Select a downloaded model to switch to it immediately.

- Custom models:

- Click the "Customize" button.

- Enter a model name and a system prompt (e.g., "Act as a math teacher").

- Select the base model and click "Create".
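The article doesn't explain what the "Customize" button does internally. A comparable result can be reached with Ollama's own Modelfile, where `FROM` names the base model and `SYSTEM` sets the system prompt, followed by `ollama create`. The sketch below assumes the `ollama` CLI is installed; the helper names and the model name `math-teacher` are hypothetical.

```python
import subprocess
import tempfile
from pathlib import Path


def render_modelfile(base_model: str, system_prompt: str) -> str:
    """Modelfile text: FROM picks the base model, SYSTEM sets the system prompt."""
    if '"""' in system_prompt:
        raise ValueError('system prompt must not contain """')
    # Triple quotes allow the SYSTEM text to span several lines.
    return f'FROM {base_model}\nSYSTEM """{system_prompt}"""\n'


def create_custom_model(name: str, base_model: str, system_prompt: str) -> None:
    """Write the Modelfile to a temporary folder and register it via `ollama create`."""
    with tempfile.TemporaryDirectory() as tmp:
        modelfile = Path(tmp) / "Modelfile"
        modelfile.write_text(render_modelfile(base_model, system_prompt), encoding="utf-8")
        # check=True raises CalledProcessError if ollama reports a failure.
        subprocess.run(["ollama", "create", name, "-f", str(modelfile)], check=True)
```

For example, `create_custom_model("math-teacher", "qwq:32b", "Act as a math teacher.")` would register a model that can then be started with `ollama run math-teacher`, provided `qwq:32b` has already been pulled.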

5. Prompt management

- Use templates:

- Open the "Prompts" page.

- Browse the built-in templates, such as "Write Article Outline" or "Code Debugging".

- Click a template to apply it directly to the dialog.

- Custom prompts:

- Click "New Prompt".

- Enter a name and content and save it; you can then categorize and manage it.

- Quick apply:

- During a dialog, select a prompt, and the AI responds according to it.
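The source doesn't describe kun-lab's template format. A minimal sketch of reusable prompt templates with fill-in fields, using the placeholder syntax of Python's standard `string.Template` (`$name`), might look like this; the template texts, field names, and helper functions are hypothetical and only borrow the template titles mentioned above.

```python
from string import Template

# Hypothetical built-in templates, named after the examples in the article.
TEMPLATES = {
    "Write Article Outline": Template(
        "Write a detailed outline for an article about $topic, aimed at $audience."
    ),
    "Code Debugging": Template("Find and explain the bug in this $language code:\n$code"),
}


def add_template(name: str, text: str) -> None:
    """Register a custom template; $placeholders are filled in when it is applied."""
    TEMPLATES[name] = Template(text)


def apply_template(name: str, **fields: str) -> str:
    """Fill a template's placeholders; raises KeyError if a field is missing."""
    return TEMPLATES[name].substitute(**fields)
```

The filled-in text can then be sent as the user message or as a system message, depending on whether the template describes a single task or how the AI should behave for the whole conversation.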

6. Quick notes

- Create a note:

- Open the "Notes" page.

- Enter content in Markdown, such as headings, lists, or code.

- A real-time preview appears on the right.

- Export notes:

- Click the "Export" button to save the note as an `.md` file.

- Exported notes can be shared or imported into other tools.
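Exporting a note essentially means writing its Markdown source to a `.md` file. The sketch below is a hypothetical helper, not kun-lab's code; its main concern is turning an arbitrary note title into a safe file name while keeping letters, digits, and CJK characters.

```python
import re
from pathlib import Path


def note_filename(title: str) -> str:
    """Turn a note title into a safe .md file name; other characters become '-'."""
    # \w matches Unicode letters and digits (including CJK) plus underscore.
    stem = re.sub(r"[^\w\-]+", "-", title.strip()).strip("-")
    return f"{stem or 'untitled'}.md"


def export_note(title: str, markdown: str, folder: str = ".") -> Path:
    """Write the note's Markdown source unchanged to <folder>/<file name> and return the path."""
    path = Path(folder) / note_filename(title)
    path.write_text(markdown, encoding="utf-8")
    return path
```

Writing with an explicit UTF-8 encoding keeps Chinese notes intact regardless of the operating system's default encoding.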

Caveats

- Make sure the Ollama service is running; otherwise, AI features are unavailable.

- Pulling a model for the first time can take a while, so check your internet connection first.

- Locally stored data can take up a lot of space, so clean up your history regularly.

- If you run into problems, check the GitHub issues page or submit feedback.
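Since nothing works without the Ollama service, a quick reachability check is useful when troubleshooting. This sketch queries Ollama's `GET /api/version` endpoint on the default port 11434; the function name is hypothetical, and `ollama serve` is the standard command for starting the server manually.

```python
from __future__ import annotations

import json
import urllib.request


def ollama_version(base_url: str = "http://localhost:11434", timeout: float = 2.0) -> str | None:
    """Return the server version from GET /api/version, or None if Ollama isn't reachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/version", timeout=timeout) as resp:
            return json.load(resp).get("version")
    except (OSError, ValueError):
        # OSError covers URLError, refused connections, and timeouts;
        # ValueError covers a response that isn't valid JSON.
        return None


if __name__ == "__main__":
    version = ollama_version()
    if version:
        print(f"Ollama {version} is running")
    else:
        print("Ollama is not reachable; start it with `ollama serve`")
```

If kun-lab's `.env` points at a non-default Ollama address, pass that address as `base_url` instead.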

By following these steps, you can easily install kun-lab and use its features. Whether for dialog, document parsing, or image analysis, operation is intuitive and convenient.

Application Scenarios

- Personal learning assistant

Students can use kun-lab to parse courseware or textbooks and ask questions, and the AI answers in detail. For example, upload math handouts and the AI can explain formulas step by step.

- Developer tools

Programmers can use kun-lab to debug code or learn a new language. Enter a code snippet, and the AI suggests optimizations and displays the code with syntax highlighting.

- Document processing

Professionals can quickly summarize reports with the document parsing feature. Upload a long PPT, and the AI extracts the key points and generates concise notes.

- Creative exploration

Creators can generate stories or design ideas with prompt templates, or upload sketches for the AI to analyze and suggest improvements.

FAQ

- Does kun-lab require an internet connection?

Core functions run locally and do not require a network connection. Web search is optional and must be turned on manually.

- What document formats are supported?

PDF, DOC, PPT, and TXT files are supported; more formats may be added in the future.

- How do I add a new model?

On the "Model Library" page, enter an `ollama run` command to pull an Ollama or Hugging Face model.

- Is my data safe?

All data is stored locally and is not uploaded to the cloud, which protects privacy and security.

© Copyright notice

The copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
