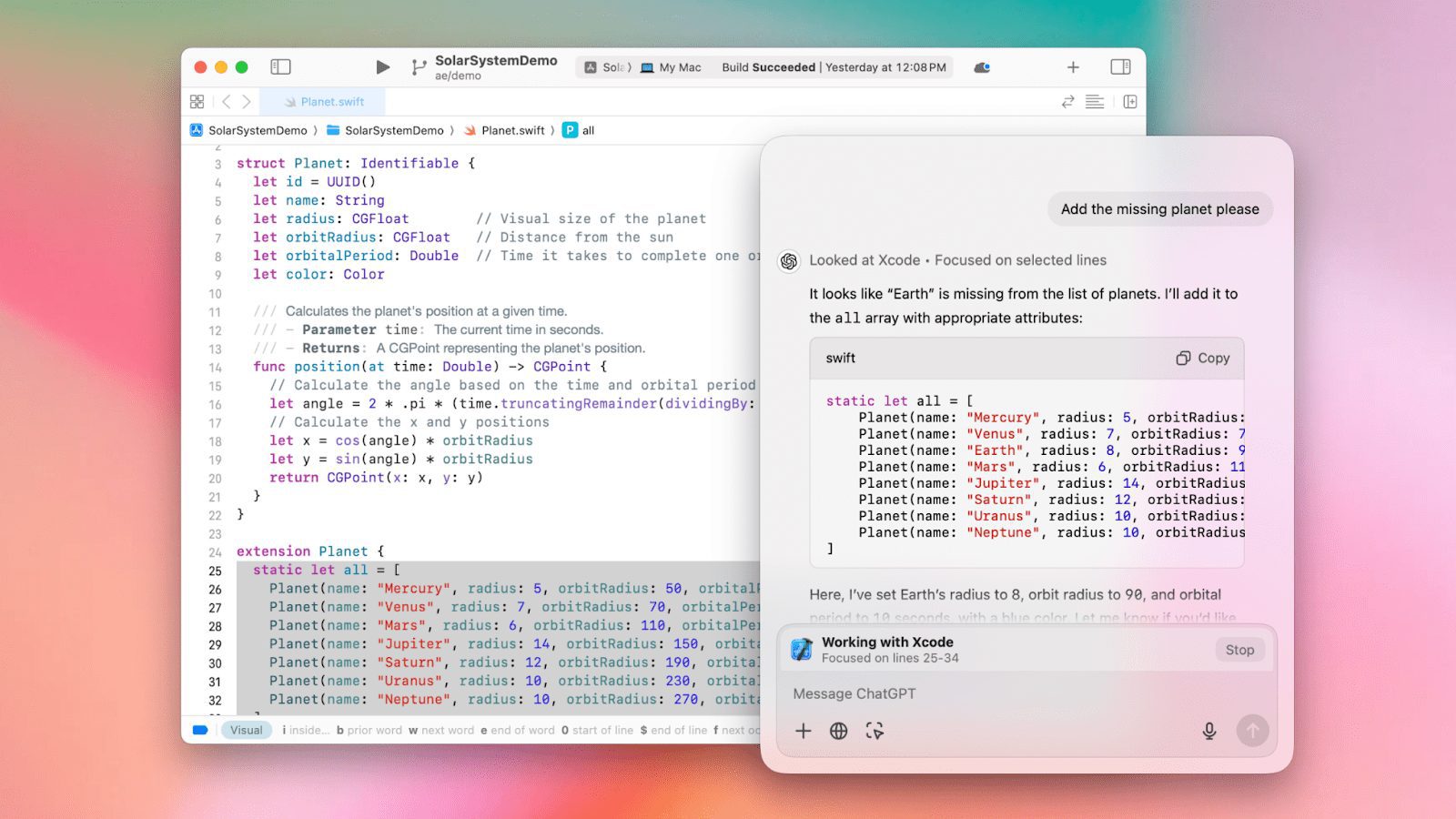

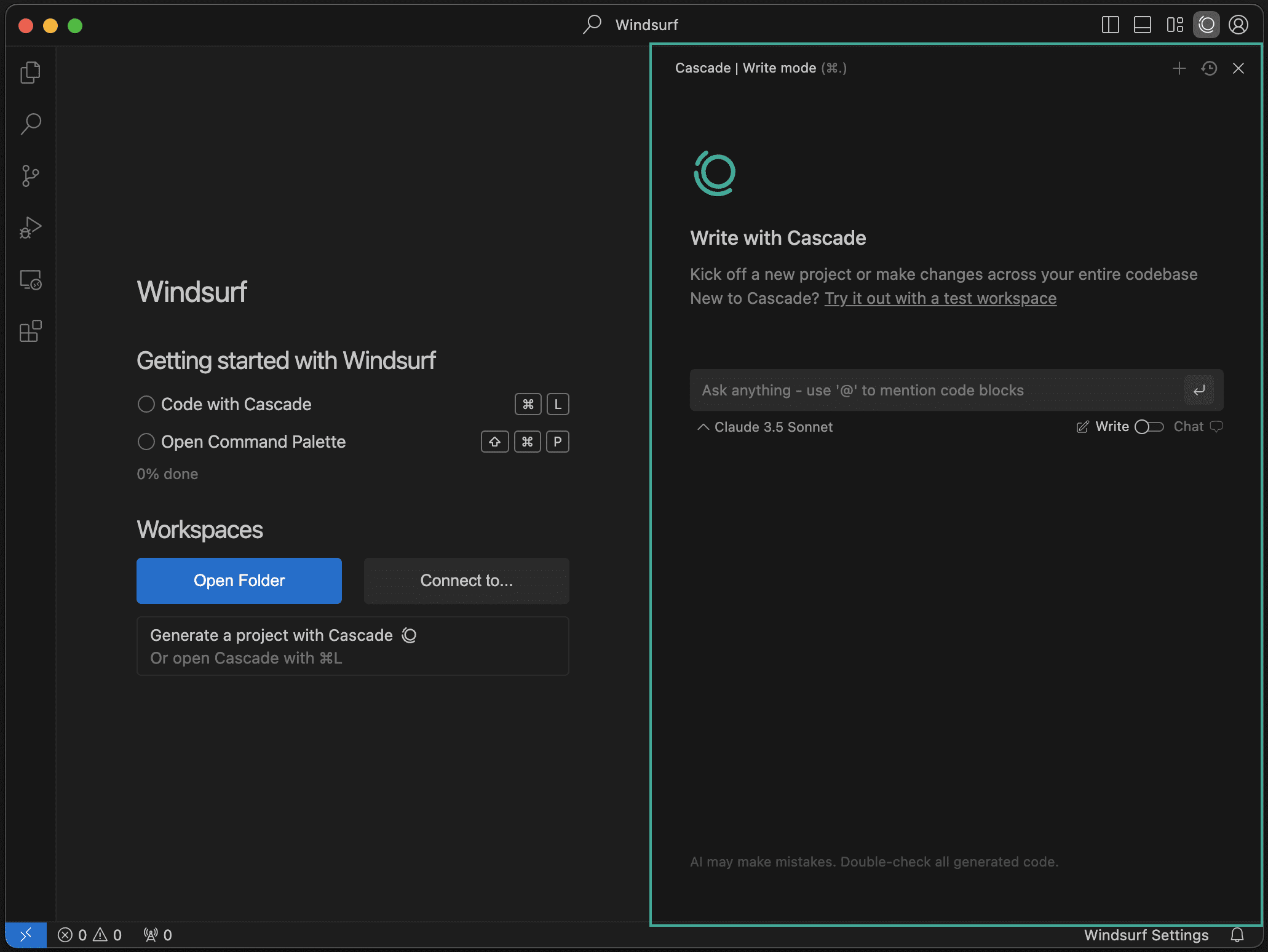

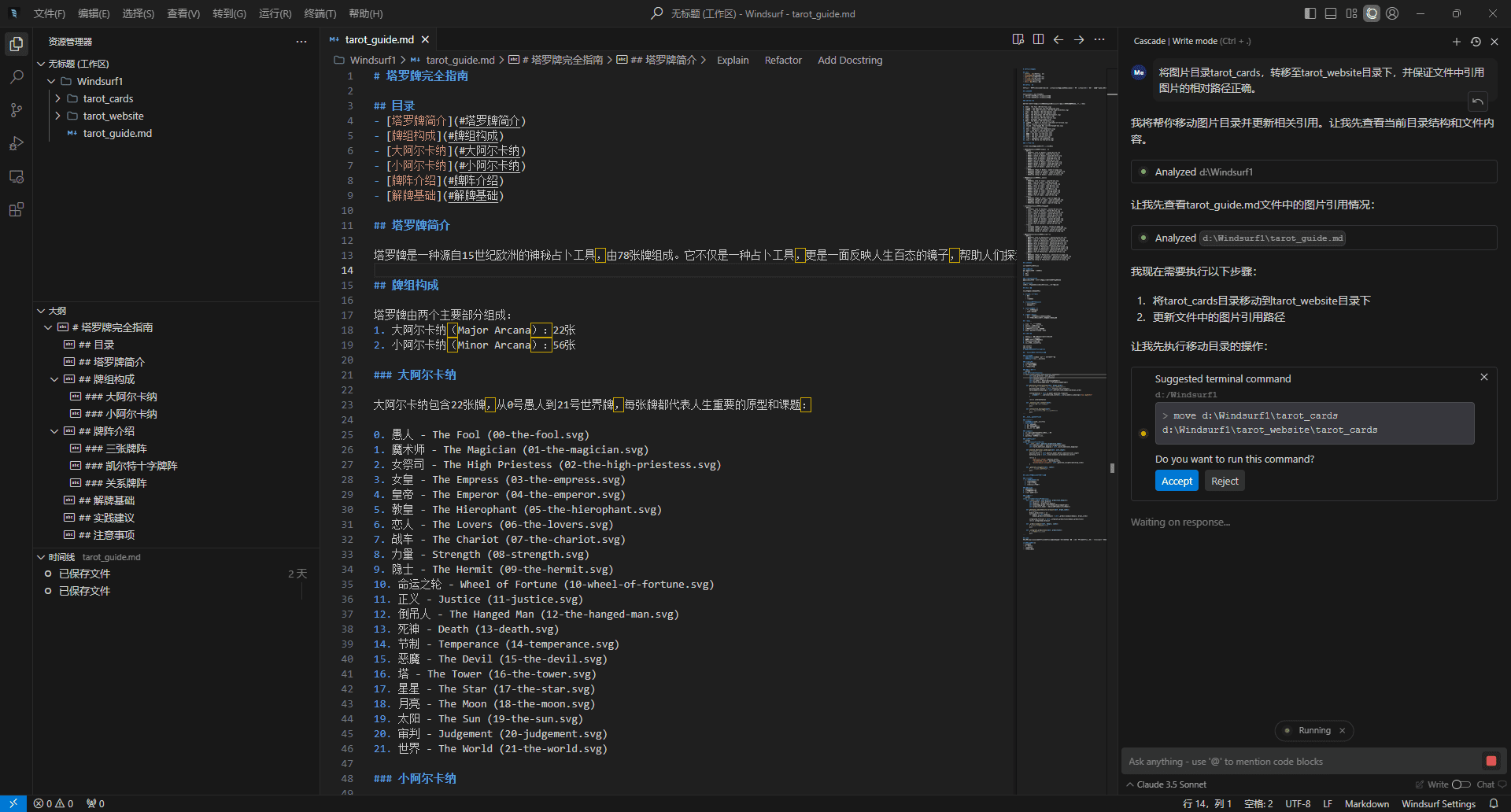

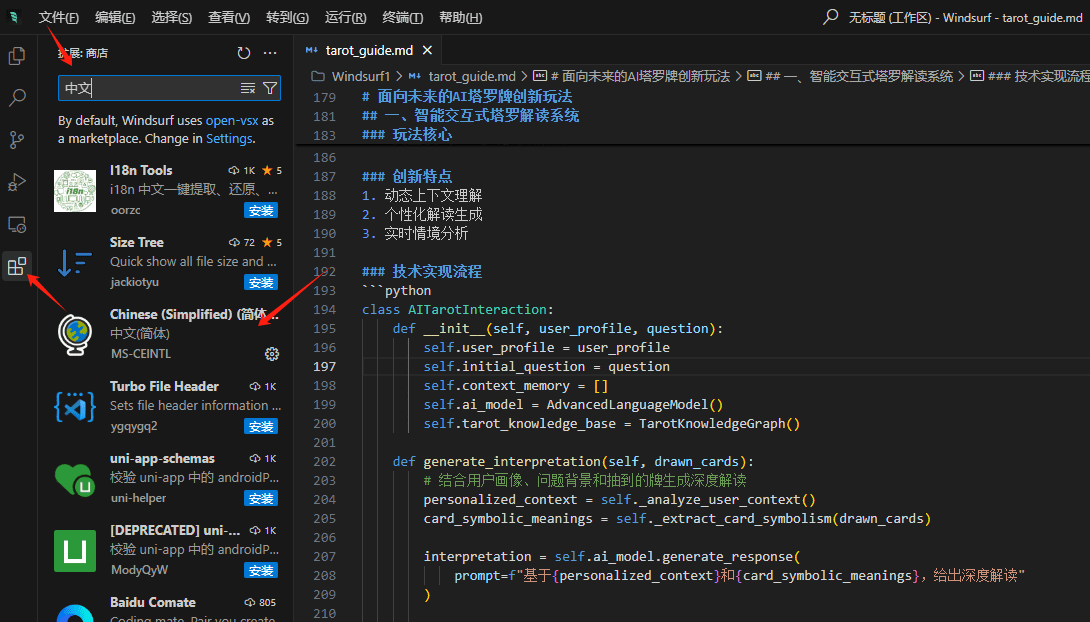

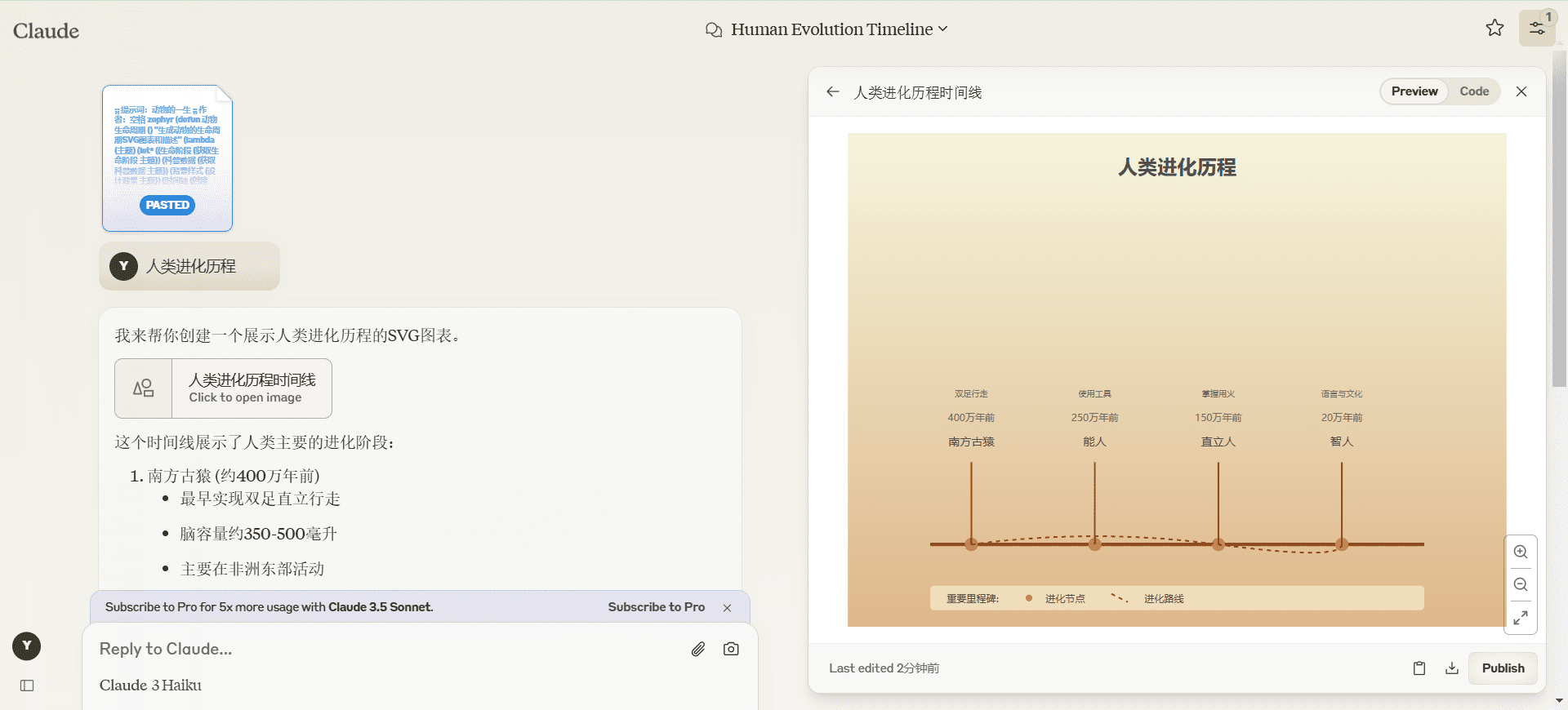

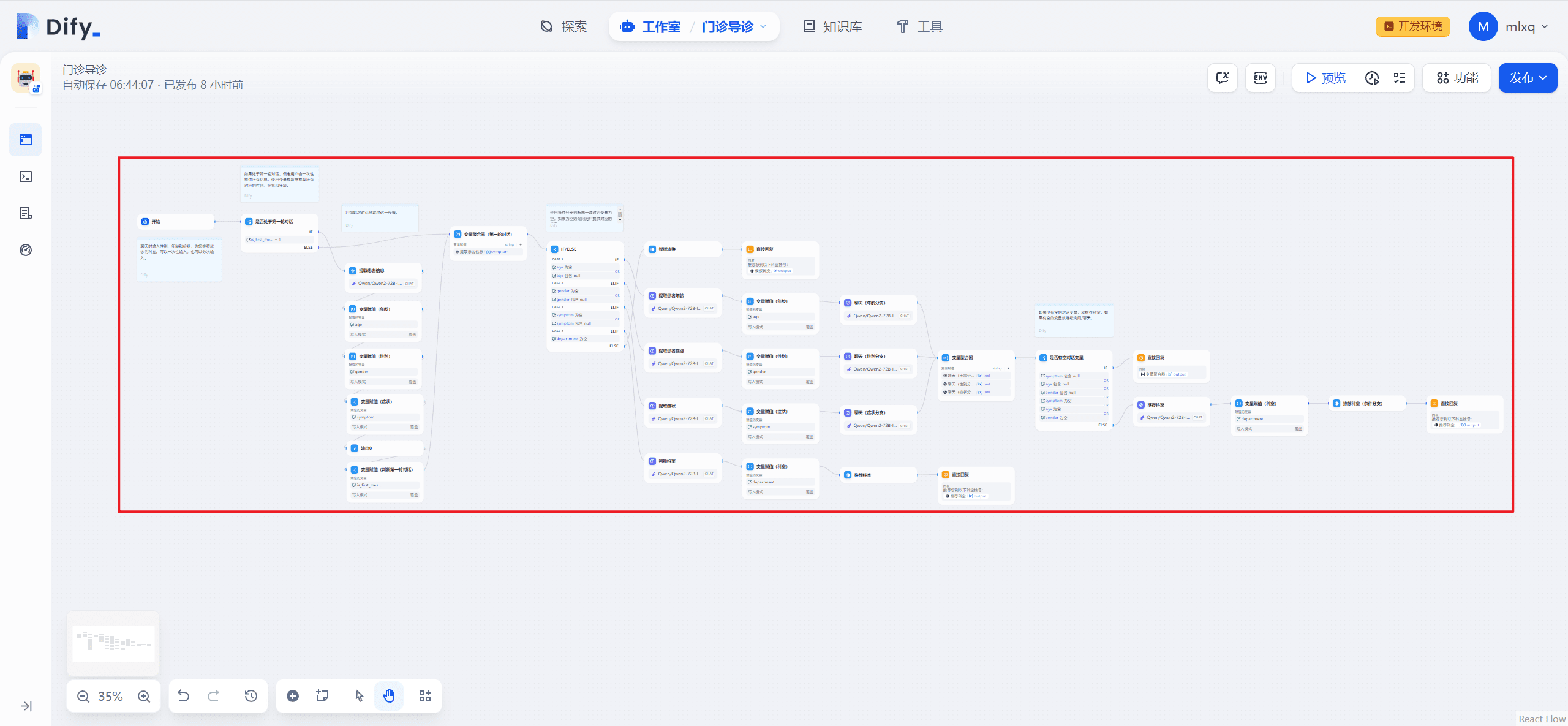

Upgrading Dify with Windsurf: a silky-smooth, fully automated experience!

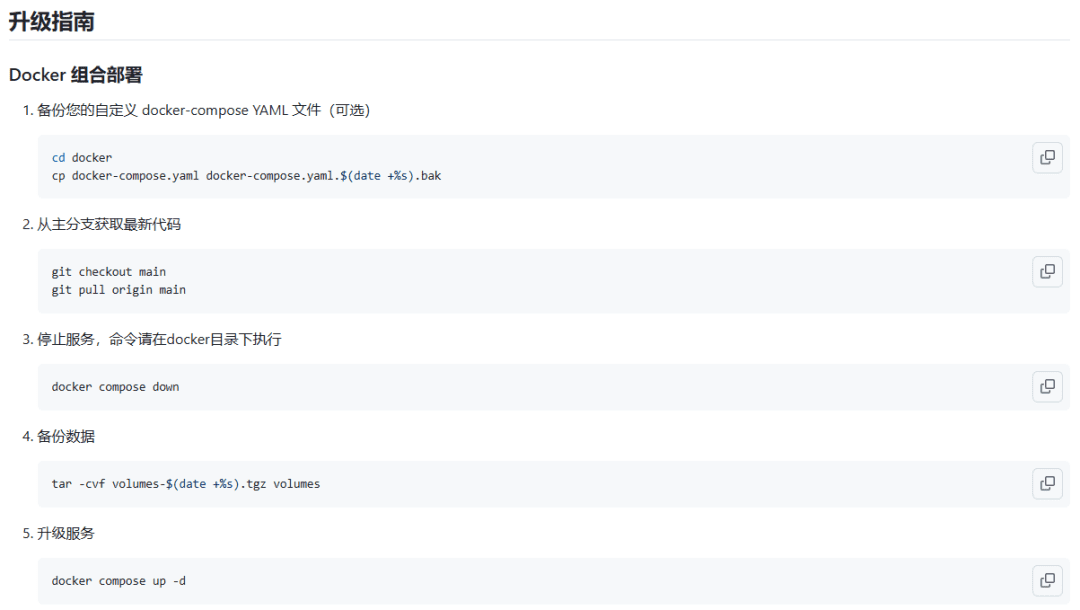

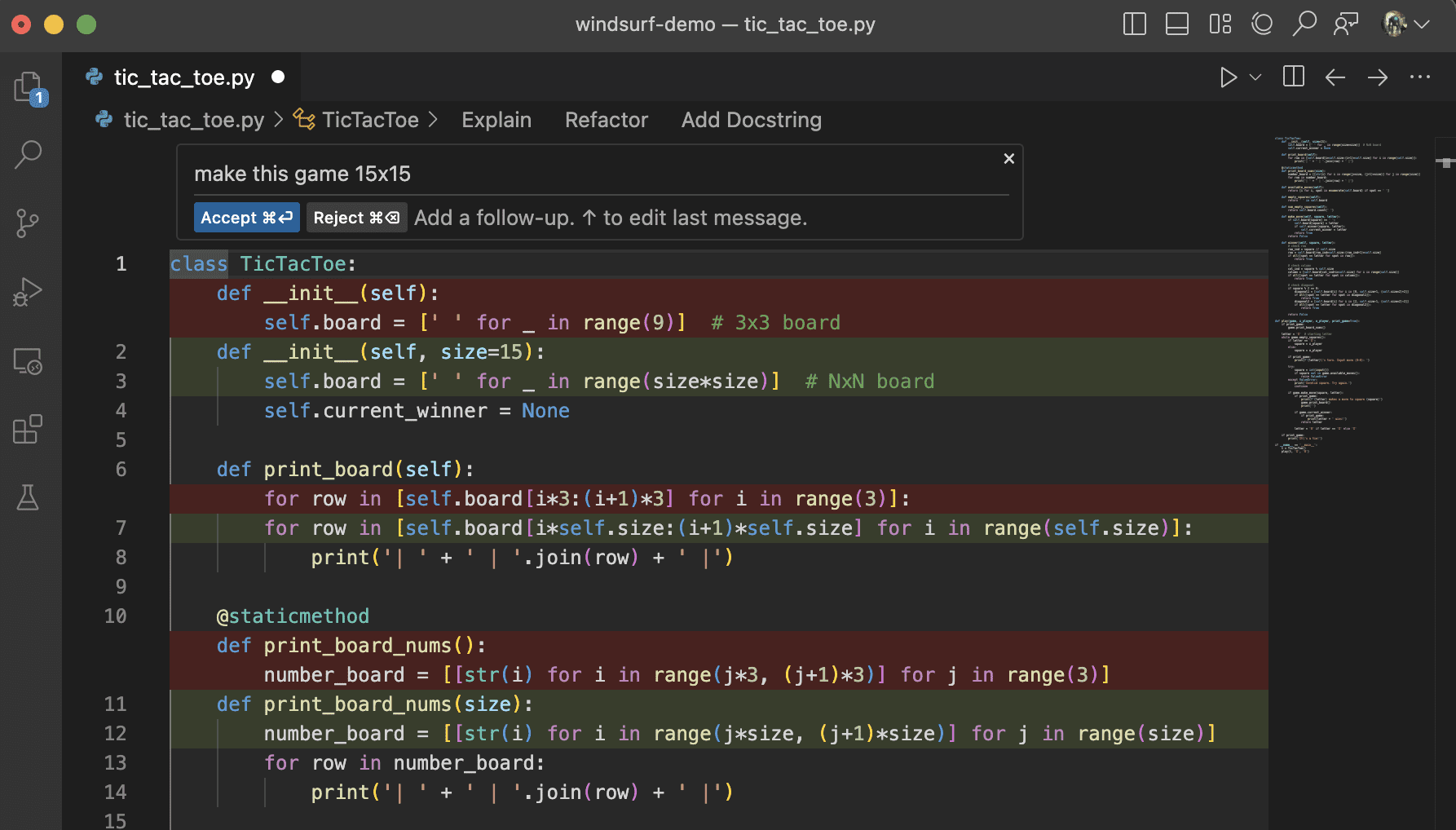

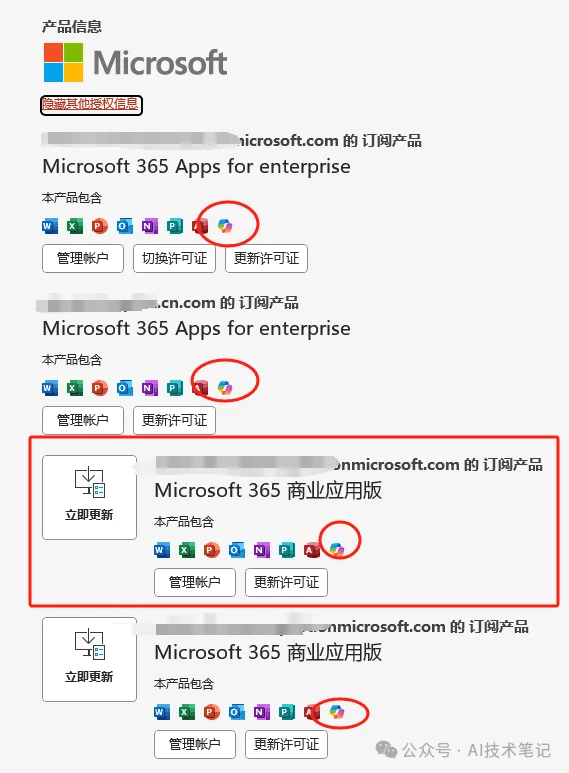

Introduction: Say goodbye to cumbersome manual updates

Dify released version 0.12.1 today, but the traditional upgrade process is daunting for me: backing up data, downloading the update, and overwriting the existing installation. Each step can take a lot of time and effort. In the face of these troubles, the emergence of Windsurf...