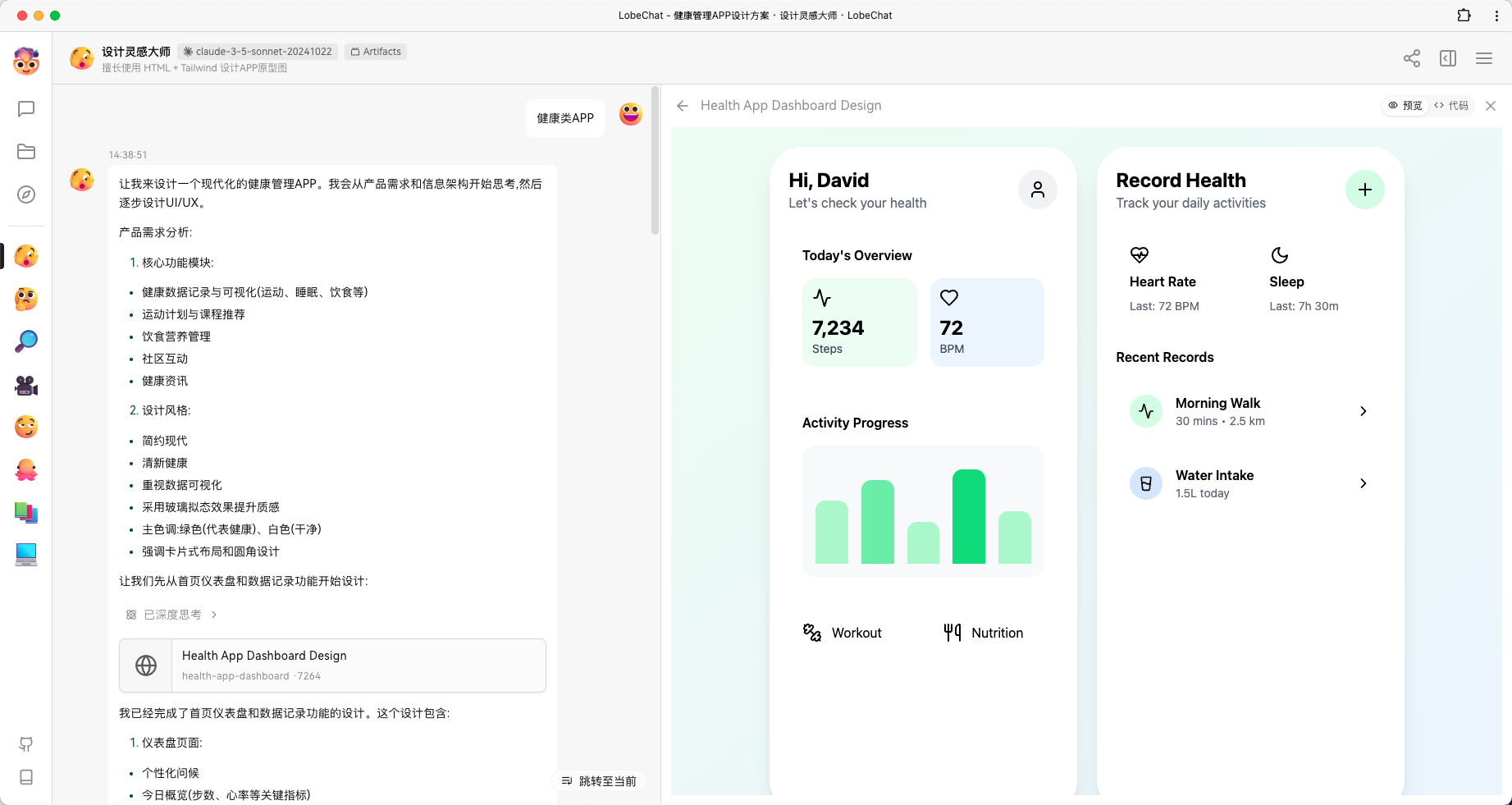

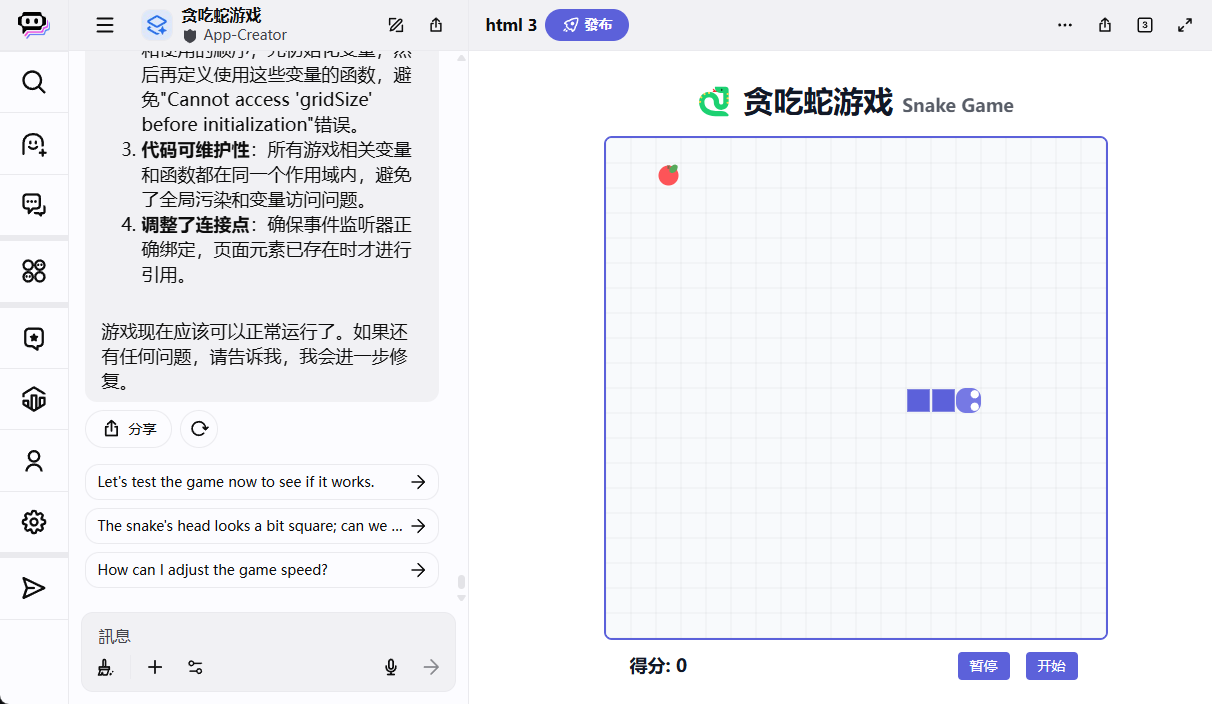

Poe AI Platform: Prompt for Generating "Canvas Apps"

Functionality

This prompt configures the AI as an "App-Creator", an expert who specializes in building Canvas Apps (web apps based on HTML/CSS/JS) for the Poe platform. It provides detailed instructions...
