Anthropic Prompt Engineering Course - Chapter 9 Exercise: Complex Prompts for Financial Services

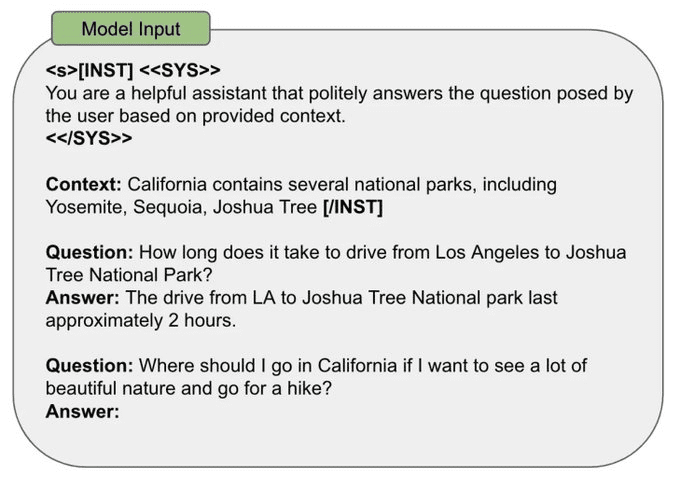

Prompts in finance can be quite complex, for reasons similar to those that apply to legal prompts. Below is an exercise for a financial use case in which Claude analyzes tax information and answers questions. As with the legal services example, we have reordered some of the elements...