Kimi Linear - A New Hybrid Linear Attention Architecture Open-Sourced by Moonshot AI

What's Kimi Linear?

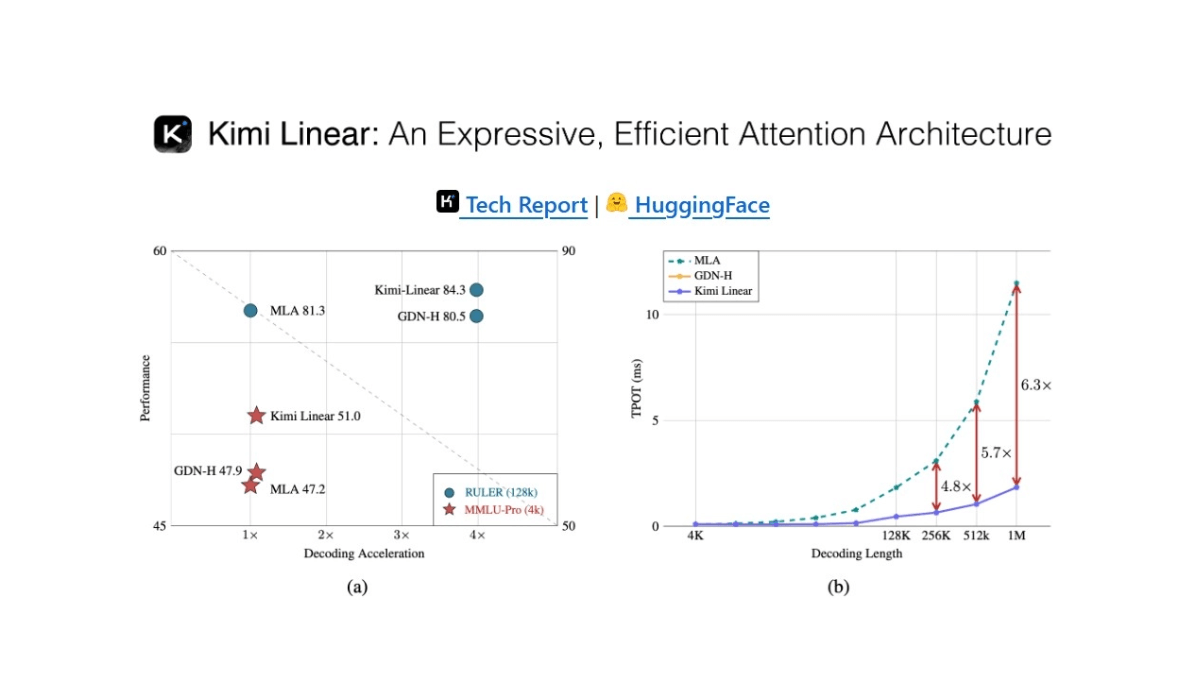

Kimi Linear is a new hybrid linear attention architecture open-sourced by Moonshot AI. Its core is Kimi Delta Attention (KDA), a linear attention module that uses a finer-grained gating mechanism than earlier designs to make better use of its limited recurrent memory and to run efficiently on hardware. The architecture uses a 3:1 hybrid layer structure: after every three KDA linear attention layers, one full attention layer (Multi-head Latent Attention, MLA) is inserted. The KDA layers process the sequence cheaply, while the periodic MLA layers capture global dependencies. Combined with a Mixture of Experts (MoE) design, Kimi Linear has 48 billion total parameters but activates only 3 billion per forward pass, which greatly reduces compute per token.
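The 3:1 layer pattern can be sketched in Python as follows. This is a minimal illustration, not code from the Kimi Linear repository: the function name `hybrid_layer_schedule`, the assumption that each group of four layers starts with the KDA layers and ends with the MLA layer, and the 8-layer example are hypothetical choices for demonstration, not the released model's actual depth or configuration.

```python
from typing import List


def hybrid_layer_schedule(num_layers: int, kda_per_mla: int = 3) -> List[str]:
    """Return the attention type of each layer in a KDA:MLA hybrid stack.

    Hypothetical sketch: every group of (kda_per_mla + 1) layers is assumed to be
    `kda_per_mla` KDA (linear attention) layers followed by one MLA (full attention) layer.
    """
    if num_layers <= 0 or kda_per_mla <= 0:
        raise ValueError("num_layers and kda_per_mla must be positive")
    period = kda_per_mla + 1
    # Layer i (0-based) is full attention when it occupies the last slot of its group.
    return ["MLA" if (i + 1) % period == 0 else "KDA" for i in range(num_layers)]


if __name__ == "__main__":
    print(hybrid_layer_schedule(8))
    # ['KDA', 'KDA', 'KDA', 'MLA', 'KDA', 'KDA', 'KDA', 'MLA']
```

Only one layer in four pays the quadratic cost of full attention, which is where the architecture's efficiency on long sequences comes from.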

Features of Kimi Linear

- Efficient Architecture Design: The 3:1 hybrid layer structure combines linear attention and full attention, balancing efficiency with global information capture.

- Innovative Attention Mechanism: Kimi Delta Attention (KDA), the core component, introduces channel-wise diagonal gating and a specialized matrix parameterization to improve memory control and hardware efficiency.

- Mixture of Experts: Kimi Linear incorporates a Mixture of Experts (MoE) design, activating only 3 billion of its 48 billion parameters per forward pass to improve computational efficiency.

- Significant Performance Improvements: KV cache usage is reduced by up to 75%, and decoding throughput on long-context tasks reaches up to 6x that of a model using full MLA attention.

- Open Source and Ease of Use: The KDA kernel and a vLLM implementation have been open-sourced, and both pre-trained and instruction-tuned model checkpoints are released for research and applications.
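A back-of-the-envelope estimate shows where a figure like 75% can come from: in a 3:1 stack only one layer in four is full attention and keeps a KV cache that grows with sequence length, while KDA layers keep a fixed-size recurrent state. The sketch below uses hypothetical values (48 layers, 1,024 cache bytes per token per layer, a 1M-token context) and counts only attention memory; the function `attention_cache_bytes` is illustrative and is not a measurement of the released model.

```python
def attention_cache_bytes(seq_len: int, num_layers: int, kv_bytes_per_token: int,
                          kda_per_mla: int = 3, kda_state_bytes: int = 0,
                          hybrid: bool = True) -> int:
    """Rough attention-memory estimate for a hypothetical model (not measured data).

    Full-attention layers store kv_bytes_per_token for every cached token.
    KDA layers store a fixed-size state of kda_state_bytes, independent of seq_len.
    """
    if not hybrid:
        # Every layer is full attention, so every layer grows with seq_len.
        return seq_len * num_layers * kv_bytes_per_token
    mla_layers = num_layers // (kda_per_mla + 1)
    kda_layers = num_layers - mla_layers
    return seq_len * mla_layers * kv_bytes_per_token + kda_layers * kda_state_bytes


if __name__ == "__main__":
    full = attention_cache_bytes(1_000_000, 48, 1024, hybrid=False)
    mixed = attention_cache_bytes(1_000_000, 48, 1024)
    print(f"reduction: {1 - mixed / full:.0%}")  # reduction: 75%
```

With a nonzero `kda_state_bytes`, the saving at short contexts is somewhat below 75% and approaches it as the context grows, since only the MLA term scales with sequence length.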

Kimi Linear's core strengths

- Architectural Advantages: Kimi Linear uses a 3:1 hybrid layer structure that combines linear attention and full attention, balancing efficiency with global information capture and enabling efficient processing of long sequences.

- Performance Advantages: KV cache usage is reduced by up to 75%, and long-context decoding throughput reaches up to 6 times that of a full MLA model, substantially speeding up long-sequence tasks.

- Efficiency Advantages: Combined with Mixture of Experts (MoE), only 3 billion of the 48 billion parameters are activated per forward pass, substantially reducing computational cost.

- Innovation Advantages: Kimi Delta Attention (KDA) introduces channel-wise diagonal gating and a specialized matrix parameterization to improve memory control, hardware efficiency, and model performance.
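The recurrence behind KDA can be written, following the gated delta rule formulation as we read the tech report, as `S_t = (I - β_t k_t k_tᵀ) Diag(α_t) S_{t-1} + β_t k_t v_tᵀ` with output `o_t = S_tᵀ q_t`, where `α_t` is the per-channel (diagonal) forget gate and `β_t` the write strength. The pure-Python sketch below implements that recurrence token by token for clarity. It is an illustrative assumption, not the open-sourced KDA kernel, which is optimized for hardware efficiency on GPUs; the names `kda_step` and `kda_scan` are hypothetical, and in the real model q, k, v, α and β come from learned projections rather than being passed in by hand.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def kda_step(S: Matrix, q: Sequence[float], k: Sequence[float], v: Sequence[float],
             alpha: Sequence[float], beta: float) -> Tuple[Matrix, List[float]]:
    """One token of an assumed KDA recurrence; S has shape d_k x d_v."""
    d_k, d_v = len(S), len(S[0])
    # 1) Channel-wise (diagonal) decay: key channel i forgets at its own rate alpha[i].
    decayed = [[alpha[i] * S[i][j] for j in range(d_v)] for i in range(d_k)]
    # 2) Delta rule: predict the value stored under k, then write beta * (v - prediction).
    pred = [sum(k[i] * decayed[i][j] for i in range(d_k)) for j in range(d_v)]
    new_S = [[decayed[i][j] + beta * k[i] * (v[j] - pred[j]) for j in range(d_v)]
             for i in range(d_k)]
    # 3) Read-out: o = S^T q.
    out = [sum(new_S[i][j] * q[i] for i in range(d_k)) for j in range(d_v)]
    return new_S, out


def kda_scan(qs, ks, vs, alphas, betas, d_k: int, d_v: int) -> Tuple[Matrix, List[List[float]]]:
    """Run kda_step over a sequence, starting from a zero state (hypothetical helper)."""
    S: Matrix = [[0.0] * d_v for _ in range(d_k)]
    outs: List[List[float]] = []
    for q, k, v, a, b in zip(qs, ks, vs, alphas, betas):
        S, o = kda_step(S, q, k, v, a, b)
        outs.append(o)
    return S, outs


if __name__ == "__main__":
    e1 = [1.0, 0.0]
    _, outs = kda_scan(qs=[e1, e1], ks=[e1, e1], vs=[[2.0, 3.0], [5.0, 7.0]],
                       alphas=[[1.0, 1.0], [1.0, 1.0]], betas=[1.0, 1.0], d_k=2, d_v=2)
    print(outs)  # [[2.0, 3.0], [5.0, 7.0]]
```

Writing the same key twice with β = 1 replaces the stored value instead of adding to it; that error-correcting overwrite is what distinguishes a delta rule from plain additive linear attention, and the per-channel α lets each key dimension forget at its own rate.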

What is Kimi Linear's official website?

- GitHub repository: https://github.com/MoonshotAI/Kimi-Linear

- Hugging Face model: https://huggingface.co/moonshotai/Kimi-Linear-48B-A3B-Instruct

- Technical report: https://github.com/MoonshotAI/Kimi-Linear/blob/master/tech_report.pdf

Who Kimi Linear is for

- Natural Language Processing (NLP) Researchers: Kimi Linear offers new research directions and tools for exploring more efficient language model architectures and attention mechanisms.

- Deep Learning Engineers: The open-source implementation and pre-trained checkpoints make it easy to integrate the model into projects to improve performance and efficiency.

- Developers Processing Large-Scale Data: Suited to scenarios that involve long texts or large datasets, such as text generation and machine translation.

- Users with High Efficiency Requirements: Kimi Linear's lower KV cache usage and higher decoding throughput suit applications that are sensitive to runtime efficiency and resource usage.

- Open Source Community Contributors: Its open-source nature invites community members to contribute improvements and extensions, making it a good fit for developers interested in open source work.

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
