Clearing up the confusion: are reasoning models like o1 and DeepSeek-R1 actually thinking?

I came across an interesting paper, "Thoughts Are All Over the Place: On the Underthinking of o1-Like LLMs". It analyzes how o1-like reasoning models frequently switch reasoning paths and fail to stay focused, a phenomenon the authors call "underthinking", and it also proposes a mitigation method. The paper speaks to the question of whether reasoning models are really thinking, and I hope readers find their own answers.

I. Background:

In recent years, large language models (LLMs), represented by OpenAI's o1 model, have demonstrated strong capabilities in complex reasoning tasks, mimicking the depth of human thinking by scaling up the amount of computation spent during reasoning. However, existing studies have questioned how deep this thinking really goes: are these models really thinking deeply?

To answer this question, the authors of the paper propose the concept of "underthinking" and analyze it systematically. They argue that underthinking occurs when o1-like LLMs, while solving complex problems, abandon promising reasoning paths too early, which leads to insufficient depth of thought and ultimately hurts model performance. This phenomenon is particularly prominent on hard math problems.

II. Approach and research methods:

To delve deeper into the phenomenon of underthinking, the authors conducted the following research:

1. Defining and observing underthinking

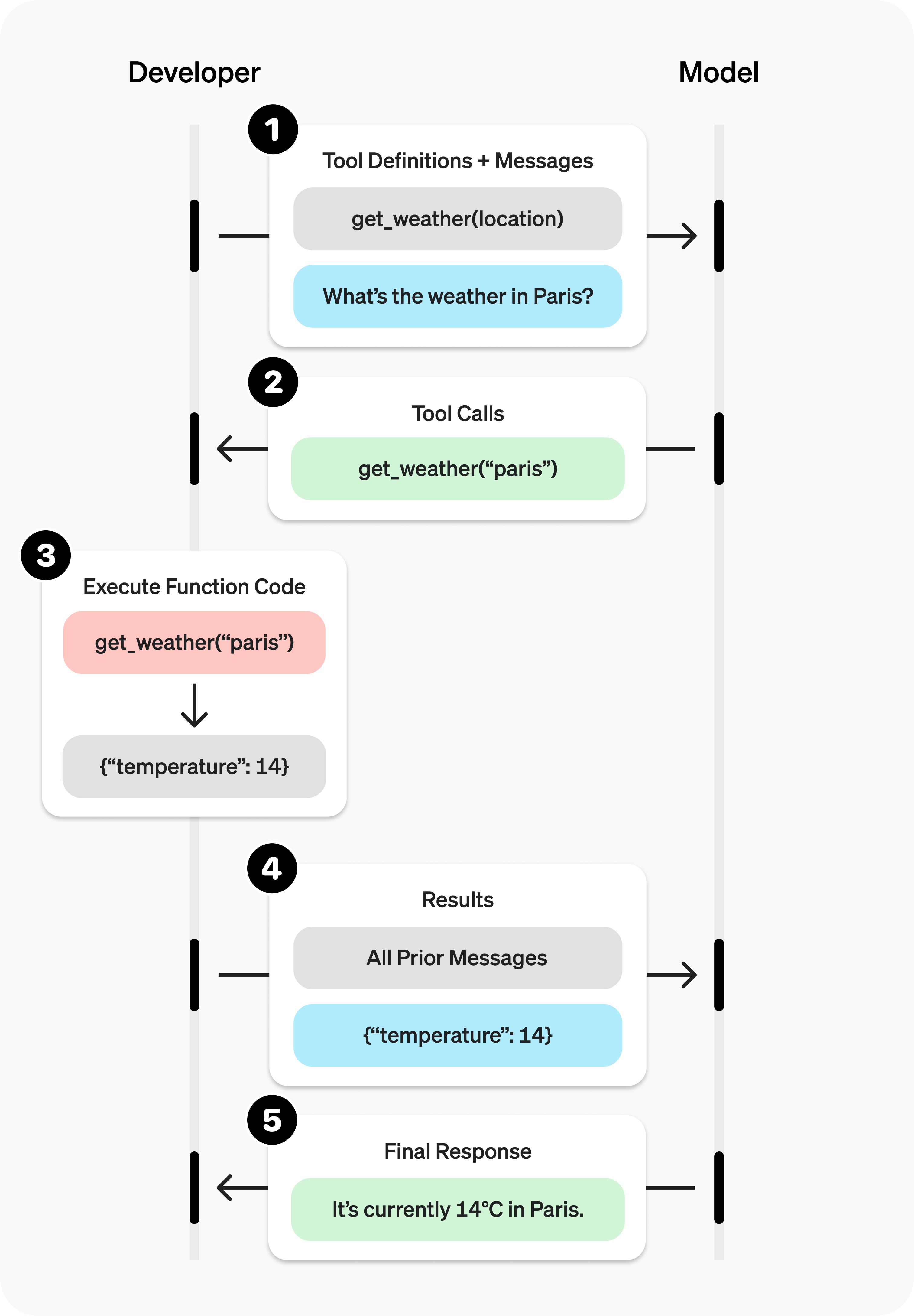

- Defining a thought: The authors define a "thought" as an intermediate cognitive step in the model's reasoning process, and use terms such as "alternatively" as markers of a switch from one thought to another.

- Example: In Figure 2, the authors show an example of a model output that contains 25 thoughts and contrast it with overthinking.

- Experimental design:

- Test Sets: The authors chose three challenging test sets:

- MATH500. Contains questions from high school math competitions ranging in difficulty from 1 to 5.

- GPQA Diamond. Contains graduate level multiple choice questions in physics, chemistry and biology.

- AIME2024. Problems from the American Invitational Mathematics Examination, covering a wide range of areas including algebra, counting, geometry, number theory, and probability.

- Model Selection: The authors chose two open-source o1-like models with visible long chains of thought, QwQ-32B-Preview and DeepSeek-R1-671B, and used DeepSeek-R1-Preview as a supplement to show the development of the R1 family of models.
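The marker-based definition of a thought described above can be sketched as a simple text split. This is only an illustration, not the authors' segmentation code: the function name `split_thoughts` and the marker list `SWITCH_MARKERS` are hypothetical, and the only marker taken from the article is "alternatively".

```python
import re

# Hypothetical marker list: the article names only "alternatively" as an example.
SWITCH_MARKERS = ("alternatively",)


def split_thoughts(response, markers=SWITCH_MARKERS):
    """Split a response into thoughts, starting a new thought at each marker."""
    alternatives = "|".join(re.escape(m) for m in markers)
    # Zero-width match right before a marker word, so the marker stays
    # at the start of the thought it introduces.
    pattern = r"\b(?=(?:" + alternatives + r")\b)"
    parts = re.split(pattern, response, flags=re.IGNORECASE)
    return [p.strip() for p in parts if p.strip()]


example = "Let x = 2. Alternatively, try y = 3. alternatively, use z."
thoughts = split_thoughts(example)
num_switches = len(thoughts) - 1  # one switch between each pair of thoughts
```

Counting thoughts this way depends entirely on the marker list, so any real analysis would need a list tuned to the model being studied.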


2. Analyzing how underthinking manifests

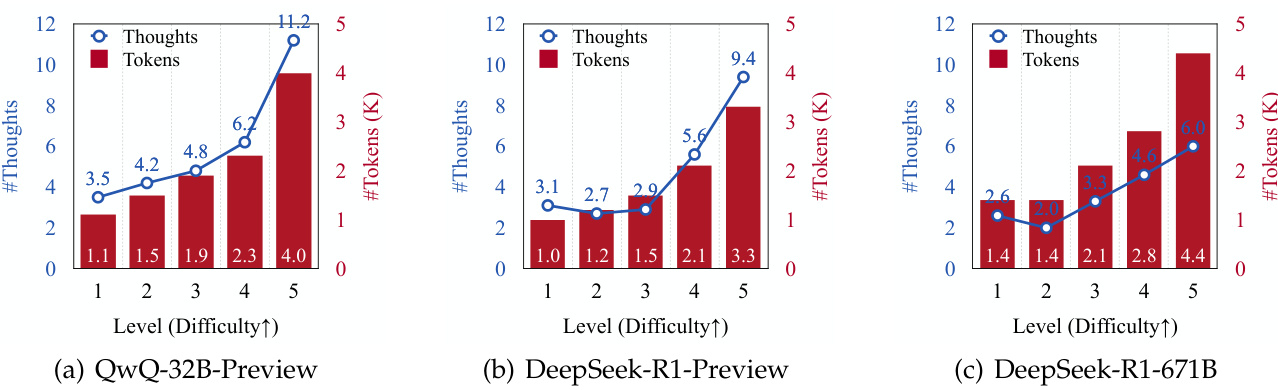

- Thought-switching frequency and problem difficulty:

- The authors found that, for all models, both the number of reasoning thoughts and the number of generated tokens increased as problem difficulty increased (see Figure 3).

- This suggests that o1-like LLMs are able to dynamically adapt their reasoning process to cope with more complex problems.

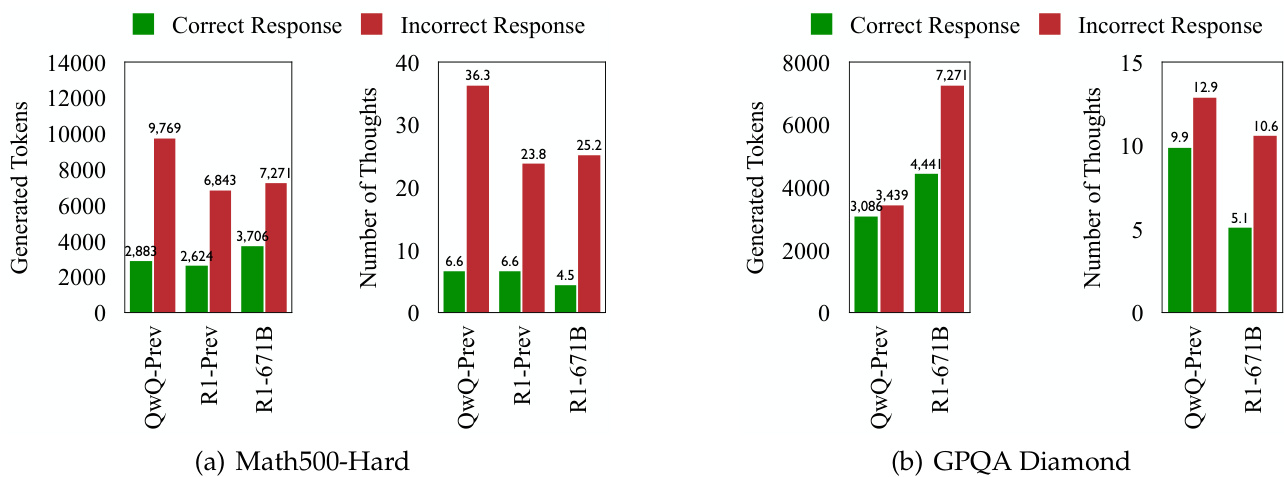

- Thought switching and incorrect responses:

- On all test sets, o1-like LLMs switched thoughts more frequently when generating incorrect answers (see Figures 1 and 4).

- This suggests that more frequent thought switching does not necessarily lead to higher accuracy, even though the model is designed to dynamically adjust its cognitive process to solve problems.
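The comparison between correct and incorrect answers can be reproduced in spirit with a small aggregation. The sketch below assumes each response has already been reduced to a pair of (number of thoughts, whether the final answer was correct). The function name and the toy records are made up for illustration and are not data from the paper.

```python
from statistics import mean


def mean_thoughts_by_correctness(records):
    """records: iterable of (num_thoughts, is_correct) pairs, one per response."""
    groups = {"correct": [], "incorrect": []}
    for num_thoughts, is_correct in records:
        key = "correct" if is_correct else "incorrect"
        groups[key].append(num_thoughts)
    # None marks a group with no responses, to avoid averaging an empty list.
    return {key: (mean(vals) if vals else None) for key, vals in groups.items()}


# Toy, made-up records purely to show the call shape.
toy_records = [(2, True), (4, True), (10, False), (14, False)]
summary = mean_thoughts_by_correctness(toy_records)
```

A gap between the two averages in real data would mirror the pattern the authors report, but it would not by itself show that switching causes the errors.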

3. Digging deeper into the nature of underthinking

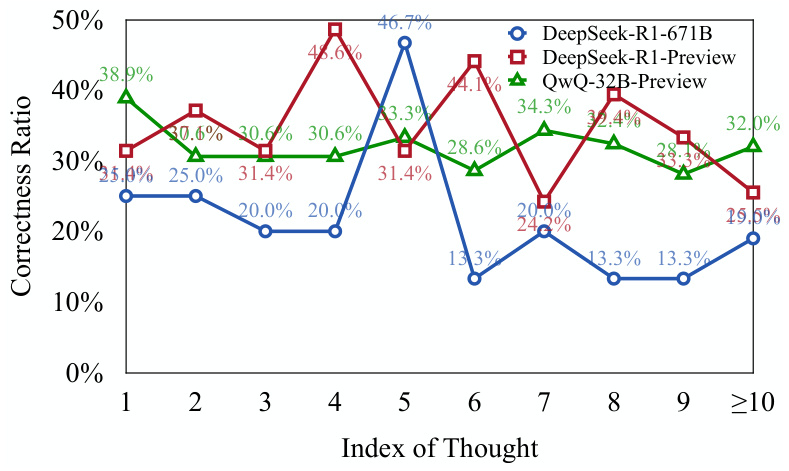

- Evaluating the correctness of thoughts:

- The authors used two models based on Llama and Qwen (DeepSeek-R1-Distill-Llama-70B and DeepSeek-R1-Distill-Qwen-32B) to assess whether each thought was correct.

- The results show that a significant portion of the early thoughts in incorrect responses are correct but under-explored (see Figure 5).

- This suggests that, when confronted with a complex problem, the model tends to abandon promising reasoning paths prematurely, leading to a lack of depth of thought.

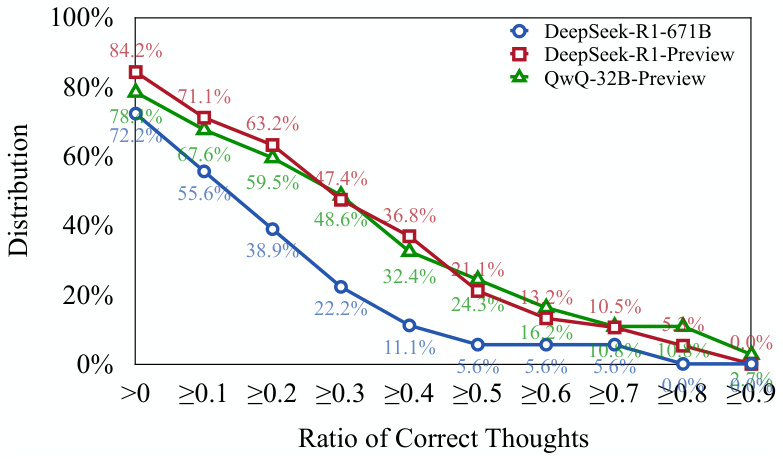

- Distribution of thought correctness:

- The authors found that more than 70% of incorrect responses contained at least one correct thought (see Figure 6).

- This further supports the above view: o1-like models are able to initiate correct reasoning paths, but may have difficulty following those paths through to the correct conclusion.
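The statistic above, the share of incorrect responses that contain at least one correct thought, is simple to compute once every thought carries a correctness label. The sketch below assumes those labels already exist as booleans (for example, produced by a judge model). The function name and the toy labels are hypothetical.

```python
def share_with_correct_thought(incorrect_responses):
    """incorrect_responses: list of per-thought correctness labels (lists of bools),
    one inner list per response whose final answer was wrong."""
    if not incorrect_responses:
        return 0.0
    hits = sum(1 for labels in incorrect_responses if any(labels))
    return hits / len(incorrect_responses)


# Toy, made-up labels: three of the four wrong answers contain a correct thought.
toy_labels = [[False, True], [False, False], [True], [False, True, False]]
share = share_with_correct_thought(toy_labels)
```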

4. Quantifying underthinking: a new evaluation metric

- Underthinking metric (UT):

- This metric quantifies the degree of underthinking by measuring token efficiency in incorrect responses.

- Specifically, for each incorrect response, the metric takes the number of tokens from the beginning up to the first correct thought as a fraction of the total number of tokens. UT is one minus that fraction, averaged over the incorrect responses.

- Higher UT values indicate more underthinking, i.e., a larger proportion of the tokens generated in incorrect responses fails to contribute effectively to reaching a correct answer.
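A minimal sketch of how such a score could be computed is given below. It reads the metric as one minus the fraction of tokens up to the first correct thought, averaged over incorrect responses, so that higher values mean more underthinking as described above. That reading, the choice to count the first correct thought's own tokens in the prefix, and the choice to score a response with no correct thought as 0 are assumptions of this sketch, and the function name is hypothetical.

```python
def underthinking_score(incorrect_responses):
    """incorrect_responses: list of responses, each a list of
    (num_tokens, is_correct) pairs, one pair per thought."""
    scores = []
    for thoughts in incorrect_responses:
        total = sum(n for n, _ in thoughts)
        if total == 0:
            continue  # skip empty responses
        # Assumption: with no correct thought, the prefix is the whole
        # response, so the response contributes a score of 0.
        tokens_to_first_correct = total
        prefix = 0
        for n, is_correct in thoughts:
            prefix += n
            if is_correct:
                tokens_to_first_correct = prefix
                break
        scores.append(1 - tokens_to_first_correct / total)
    return sum(scores) / len(scores) if scores else 0.0


# Toy, made-up example: the first response finds a correct thought after
# 100 of 1000 tokens; the second never produces a correct thought.
toy = [
    [(100, True), (300, False), (600, False)],
    [(500, False), (500, False)],
]
ut = underthinking_score(toy)  # (0.9 + 0.0) / 2
```

Under this reading, a response that reaches a correct thought early and then spends most of its tokens elsewhere scores close to 1.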

5. Impact of underthinking on model performance:

- The authors found that underthinking behaves differently across datasets and tasks:

- On the MATH500-Hard and GPQA Diamond datasets, the DeepSeek-R1-671B model, while more accurate, also had a higher UT score, indicating more underthinking in its incorrect responses.

- On the AIME2024 test set, the DeepSeek-R1-671B model has not only higher accuracy but also a lower UT score, indicating a more focused and efficient reasoning process.

III. Key conclusions:

- Underthinking is an important factor in the poor performance of o1-like LLMs on complex problems. Frequent thought switching prevents the model from exploring promising reasoning paths in depth, which ultimately hurts its accuracy.

- Underthinking is related to problem difficulty and model capability. Harder problems exacerbate underthinking, and more powerful models don't always reduce it.

- Underthinking is different from overthinking. Overthinking is when a model wastes computational resources on simple problems, while underthinking is when a model prematurely abandons promising reasoning paths on complex problems.

- The underthinking metric (UT) can effectively quantify the degree of underthinking. This metric provides a new perspective for assessing the reasoning efficiency of o1-like LLMs.

IV. Mitigation strategy:

To alleviate underthinking, the authors propose a decoding strategy with a thought switching penalty (TIP):

- Core idea: During decoding, a penalty is applied to tokens associated with thought switches, encouraging the model to explore the current thought more deeply before switching to a new one.

- Results: The TIP strategy improves the accuracy of the QwQ-32B-Preview model on all test sets, demonstrating its effectiveness in alleviating underthinking.
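The core idea can be illustrated with a toy logit adjustment. This is a sketch under stated assumptions, not the authors' implementation. The function names, the `penalty` and `window` parameters, and their default values are hypothetical. The sketch also assumes that the penalty applies only while the current thought is still short, and that the token ids of switch words are known in advance.

```python
import math


def apply_switch_penalty(logits, switch_token_ids, steps_in_thought,
                         penalty=3.0, window=600):
    """Return a copy of logits with switch tokens penalized.

    Assumption: the penalty is active only during the first `window`
    decoding steps of the current thought.
    """
    adjusted = list(logits)
    if steps_in_thought < window:
        for token_id in switch_token_ids:
            adjusted[token_id] -= penalty
    return adjusted


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


# Toy vocabulary of three tokens; token 1 stands in for a switch word.
logits = [1.0, 2.0, 0.5]
early = apply_switch_penalty(logits, {1}, steps_in_thought=10)
late = apply_switch_penalty(logits, {1}, steps_in_thought=1000)
p_before = softmax(logits)[1]
p_early = softmax(early)[1]  # lower than p_before while the thought is young
```

Subtracting from the logit rather than masking the token keeps switching possible, so the model can still change course when the evidence for doing so is strong.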

V. Future directions:

The future research directions the authors suggest include:

- Developing adaptive mechanisms that enable models to self-regulate thought switching.

- Further improving the reasoning efficiency of o1-like LLMs.
