HunyuanVideoGP: A Hunyuan Video Generation Model That Runs on Low-End GPUs

General Introduction

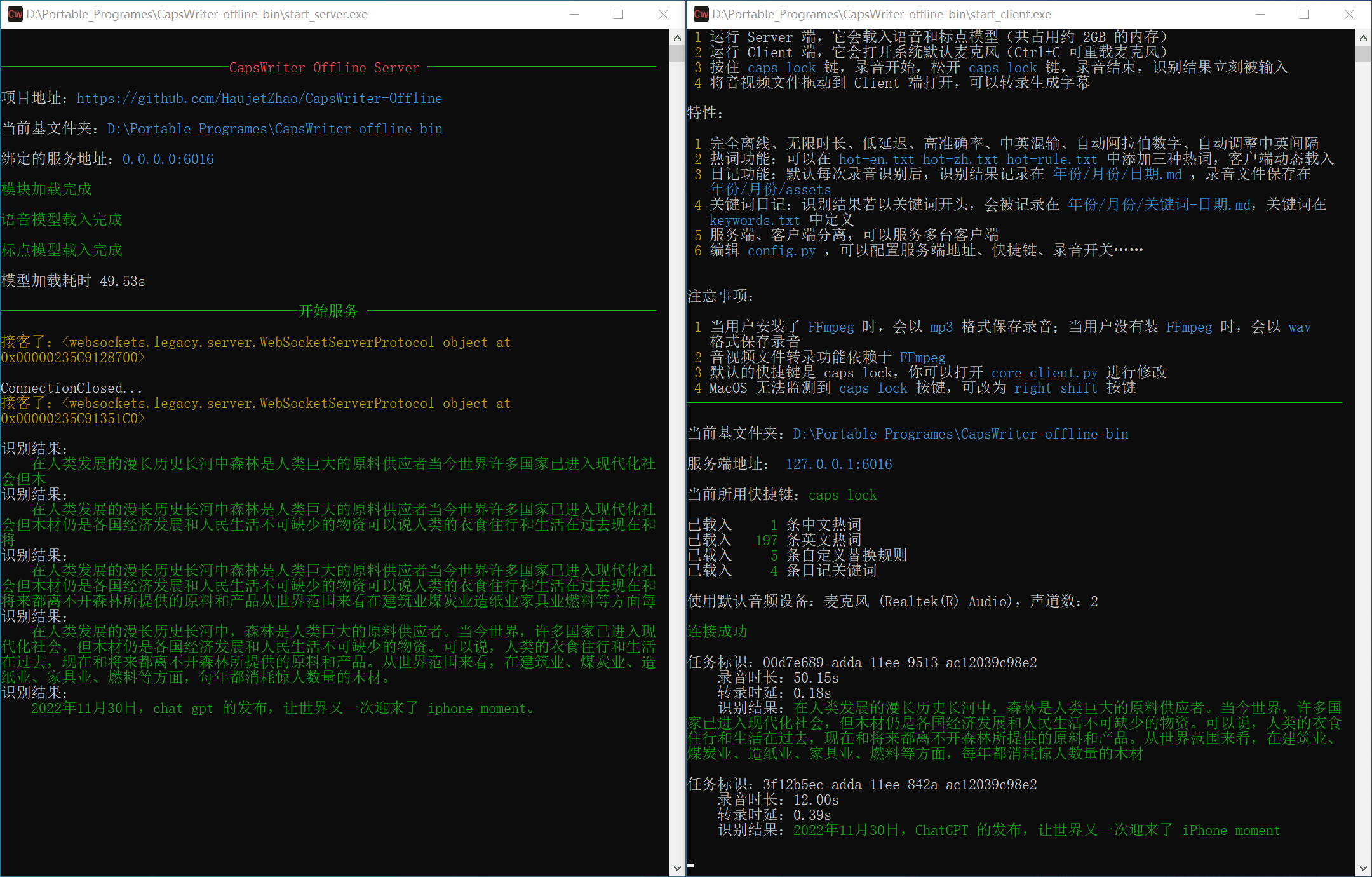

HunyuanVideoGP is a large-scale video generation model developed by DeepBeepMeep for users with low-end GPUs. It is an improved version of the original Hunyuan Video model with significantly reduced RAM and VRAM requirements, which lets it run on GPUs with 12GB to 24GB of VRAM. HunyuanVideoGP speeds up generation through compilation and fast load/unload techniques, and provides several profiles to suit different hardware configurations. It also integrates multiple pre-trained LoRAs, supports multiple prompts and multiple generations per prompt, and lets users switch easily between different models and between quantized and non-quantized variants. The model is also available through the Pinokio one-click installer.

Feature List

- Runs on low-end GPUs (12GB to 24GB of VRAM)

- Significantly reduced RAM and VRAM requirements

- Fast load/unload technology

- Multiple profiles for different hardware configurations

- Supports multiple prompts and multiple generations per prompt

- Integration of multiple pre-trained LoRAs

- Improved Gradio interface with progress bar and more options

- Automatic download of required model files

- Compilation support on Linux and WSL

Usage Guide

Installation Process

- Prepare the Conda environment:

conda env create -f environment.yml

- Activate the environment:

conda activate HunyuanVideo

- Install pip dependencies:

python -m pip install -r requirements.txt

- Optional: install Flash Attention support (straightforward on Linux, harder on Windows):

# See the official documentation for the detailed installation steps
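
The three required steps above can be chained into a single script. The following is a minimal sketch, not the project's own installer: `require_cmd` and `install_all` are hypothetical helper names, and the script assumes that `conda` is on the PATH and that `environment.yml` and `requirements.txt` are in the current directory. Because `conda activate` usually does not work in a non-interactive script until conda's shell hook has been sourced, the sketch sources `conda.sh` first.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the named command is on PATH.
require_cmd() {
    command -v "$1" >/dev/null 2>&1
}

# Hypothetical wrapper around the three installation steps from the article.
install_all() {
    require_cmd conda || { echo "conda not found on PATH" >&2; return 1; }

    # Make `conda activate` usable inside a non-interactive script.
    . "$(conda info --base)/etc/profile.d/conda.sh" || return 1

    conda env create -f environment.yml || return 1
    conda activate HunyuanVideo || return 1
    python -m pip install -r requirements.txt
}

# Run only when explicitly requested, e.g. `sh install.sh --run`.
if [ "${1:-}" = "--run" ]; then
    install_all
fi
```

Each step stops the script on failure, so a broken environment file does not lead to pip installing into the wrong environment.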

Usage Process

- Start the Gradio server:

bash launch.sh

- Open a browser and visit the local server address (usually http://localhost:7860) to access the Gradio interface.

- In the Gradio interface, select the desired profile and model, enter a prompt, and click the Generate button.

- The generated video will be displayed on the interface and can be downloaded or further edited by the user.
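
Loading the model can take a while, so the interface may not respond immediately after launch. As a rough sketch, the hypothetical `wait_for_url` helper below polls the address with `curl` until it answers. The default of 60 attempts with a 2-second delay is an arbitrary assumption, and the port is the "usual" one mentioned above, which may differ on your setup.

```shell
#!/bin/sh
# Hypothetical helper: poll a URL until it answers or the attempts run out.
# Usage: wait_for_url URL [ATTEMPTS] [DELAY_SECONDS]
wait_for_url() {
    url=$1
    attempts=${2:-60}
    delay=${3:-2}
    i=0
    while [ "$i" -lt "$attempts" ]; do
        # -s: silent, -f: treat HTTP errors as failure, -o /dev/null: drop the body
        if curl -sf --max-time 5 -o /dev/null "$url"; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}

# Example (commented out so the snippet has no side effects when sourced):
# bash launch.sh &
# wait_for_url http://localhost:7860 && echo "Gradio interface is up"
```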

Main Functions

- Selecting a profile: Choose the profile that matches your hardware configuration to balance generation speed and quality.

- Entering the prompt: Enter descriptive text in the prompt box, and the model generates video content that matches it.

- Multiple generations: Generate several videos from a single prompt and pick the most satisfactory result.

- Switching models: Switch between the different Hunyuan and Fast Hunyuan models to suit different needs.

- Quantized/non-quantized models: Choose a quantized or non-quantized model to balance generation speed and quality.
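
Before choosing a profile, it helps to know how much VRAM the GPU has. The sketch below is only an illustration: `vram_tier` is a hypothetical helper that compares the reported total against the 12GB to 24GB range mentioned in this article. It does not map to any specific profile, since the profiles themselves are not described here. The query uses `nvidia-smi`, so it applies to NVIDIA GPUs only.

```shell
#!/bin/sh
# Hypothetical helper: classify total VRAM (in MiB) against the 12GB-24GB
# range the article mentions. It does not choose a profile for you.
vram_tier() {
    mib=$1
    # Round to the nearest GiB; drivers often report slightly less than nominal.
    gib=$(( (mib + 512) / 1024 ))
    if [ "$gib" -lt 12 ]; then
        echo "below-range"
    elif [ "$gib" -le 24 ]; then
        echo "in-range"
    else
        echo "above-range"
    fi
}

# Example: query the first GPU if nvidia-smi is available and returns a value.
if command -v nvidia-smi >/dev/null 2>&1; then
    total=$(nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits | head -n 1)
    if [ -n "$total" ]; then
        echo "GPU 0: ${total} MiB -> $(vram_tier "$total")"
    fi
fi
```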

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
