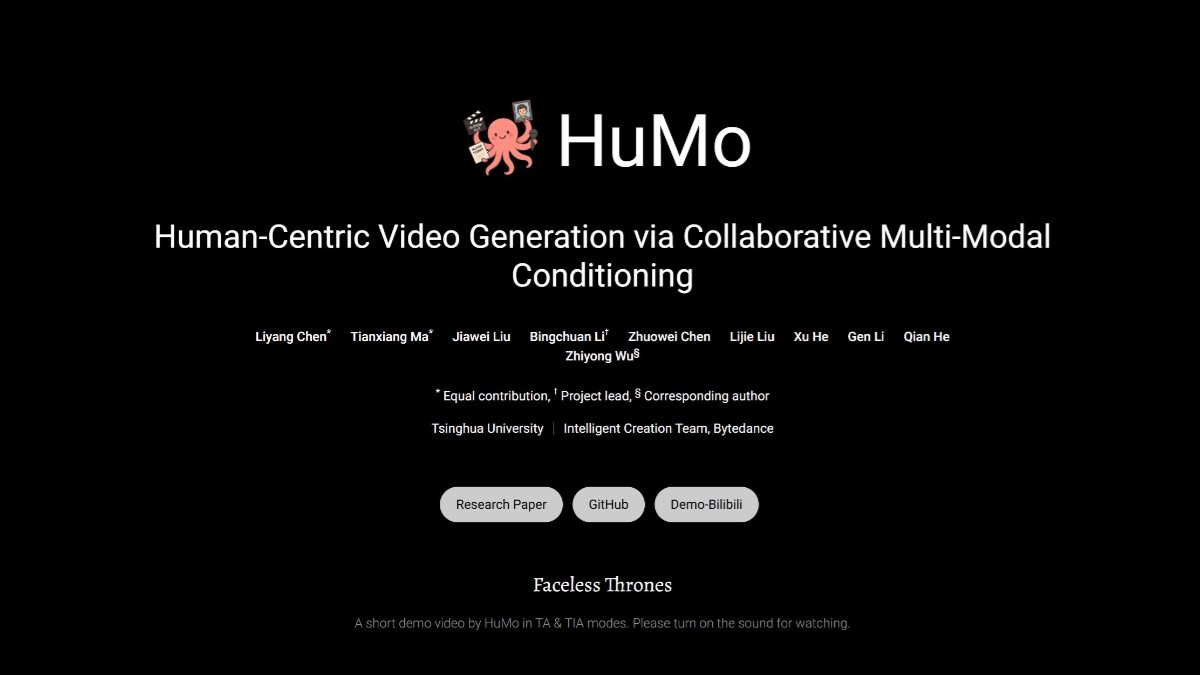

HuMo - An open-source multimodal video generation framework from Tsinghua University and ByteDance

What is HuMo?

HuMo is a multimodal video generation framework jointly open-sourced by Tsinghua University and ByteDance's Intelligent Creation Lab, focused on human-centric video generation. It generates high-quality, detailed, and controllable human videos from text, image, and audio inputs, with strong text prompt following, consistent subject preservation, and audio-driven motion synchronization. It supports generation from text-image, text-audio, and text-image-audio inputs, giving users more customization and control. Output is available at 480p and 720p, with 720p giving higher quality. HuMo also provides configuration files for customizing generation behavior and output, including generation length, video resolution, and the balance of text, image, and audio inputs.

Features of HuMo

- Multimodal input fusion: Processes text, image, and audio inputs together to generate high-quality video content.

- Precise text-driven control: Text prompts give fine-grained control over video content, making generation highly customizable.

- Audio-synchronized motion generation: Audio input drives character movements and expressions, making the video more vivid and natural.

- Subject consistency: Keeps a character's appearance and features consistent across video frames.

- High-resolution video output: Supports video generation at 480p and 720p to suit different scenarios.

- Customizable configuration: Adjust generation parameters such as frame count, resolution, and the weighting of each modal input via a configuration file.

- Efficient inference: Supports multi-GPU inference to speed up video generation.
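The input combinations and configurable parameters listed above can be pictured with a small sketch. This is not HuMo's actual configuration schema: every name here (`select_mode`, `GenerationConfig`, `text_weight`, `audio_weight`) is hypothetical and only illustrates the kinds of settings the article describes (frame count, resolution, and per-modality weighting). Consult the project's own configuration files for the real keys.

```python
from dataclasses import dataclass

# Hypothetical names throughout: an illustration of the settings the
# article describes, not HuMo's real configuration format.
SUPPORTED_HEIGHTS = {480, 720}  # the article lists 480p and 720p output


def select_mode(has_image: bool, has_audio: bool) -> str:
    """Pick an input combination; a text prompt is always assumed."""
    if has_image and has_audio:
        return "text-image-audio"
    if has_image:
        return "text-image"
    if has_audio:
        return "text-audio"
    raise ValueError("provide a reference image, an audio clip, or both")


@dataclass
class GenerationConfig:
    mode: str                  # one of the three combinations above
    frames: int                # generation length, in frames
    height: int = 720          # output resolution (480 or 720)
    text_weight: float = 1.0   # hypothetical weight for the text prompt
    audio_weight: float = 1.0  # hypothetical weight for the audio input

    def validate(self) -> None:
        """Reject settings outside what the article says is supported."""
        if self.height not in SUPPORTED_HEIGHTS:
            raise ValueError(f"unsupported resolution: {self.height}p")
        if self.frames <= 0:
            raise ValueError("frames must be positive")
        if self.text_weight < 0 or self.audio_weight < 0:
            raise ValueError("modality weights must be non-negative")


if __name__ == "__main__":
    cfg = GenerationConfig(mode=select_mode(True, True), frames=48)
    cfg.validate()
    print(cfg)
```

Validating the settings up front, as sketched here, is one way to catch an unsupported resolution before a long generation run starts.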

HuMo's core strengths

- Multimodal synergy: Processes text, image, and audio inputs simultaneously, so that multiple modalities jointly drive generation and produce richer, more detailed video content.

- High-quality results: Trained on high-quality datasets, HuMo produces high-definition, high-fidelity videos that meet professional demands.

- Powerful text following: Accurately turns text descriptions into video content, so that generated results closely match user intent.

- Subject consistency: Keeps a character's appearance and features consistent from frame to frame, improving the coherence and professional look of the video.

- Audio-driven motion synchronization: Audio input drives the character's movements and expressions, synchronizing them with the audio's tempo, tone, and other elements to make the video more realistic and engaging.

- Customizability and flexibility: Generation parameters such as frame count, resolution, and the weight of each modal input can be adjusted through the configuration file to suit different users and application scenarios.

- Efficient inference and scalability: Supports multi-GPU inference to speed up video generation, and scales well for future upgrades and optimization.

What is the official HuMo website?

- Project website: https://phantom-video.github.io/HuMo/

- HuggingFace model library: https://huggingface.co/bytedance-research/HuMo

- arXiv technical paper: https://arxiv.org/pdf/2509.08519

Who is HuMo for?

- Content creators: Video producers, animators, advertising creatives, and others can use HuMo to quickly generate high-quality video content, making production more efficient and turning ideas into results faster.

- Educators: Can generate educational videos that help students understand complex concepts through vivid animation and audio explanation, improving teaching effectiveness.

- Film and TV production teams: Can use HuMo to quickly generate character animation or preview videos, assisting with scriptwriting and set design and speeding up production and creative exploration.

- Game developers: Can use HuMo to generate character animations and virtual scenes, adding creativity and flexibility to game design and enriching the game experience.

- Social media operators: Can generate personalized, engaging video content for social media platforms to increase user engagement and content reach.

- Corporate marketers: Can create personalized advertising videos with content tailored to the preferences of the target audience, improving advertising effectiveness and brand impact.

© Copyright notes

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
