Hugging Face Launches Agent Leaderboard: Who Leads in Tool Calling?

NVIDIA CEO Jensen Huang hails AI agents as a "digital workforce," and he is not the only tech leader who holds this view. Microsoft CEO Satya Nadella also believes that agent technology will fundamentally change how businesses operate.

The ability of these agents to interact with external tools and APIs has greatly expanded where they can be applied. However, AI agents are far from perfect, and because the potential interactions are so complex, evaluating their performance in real-world applications has been a challenge.

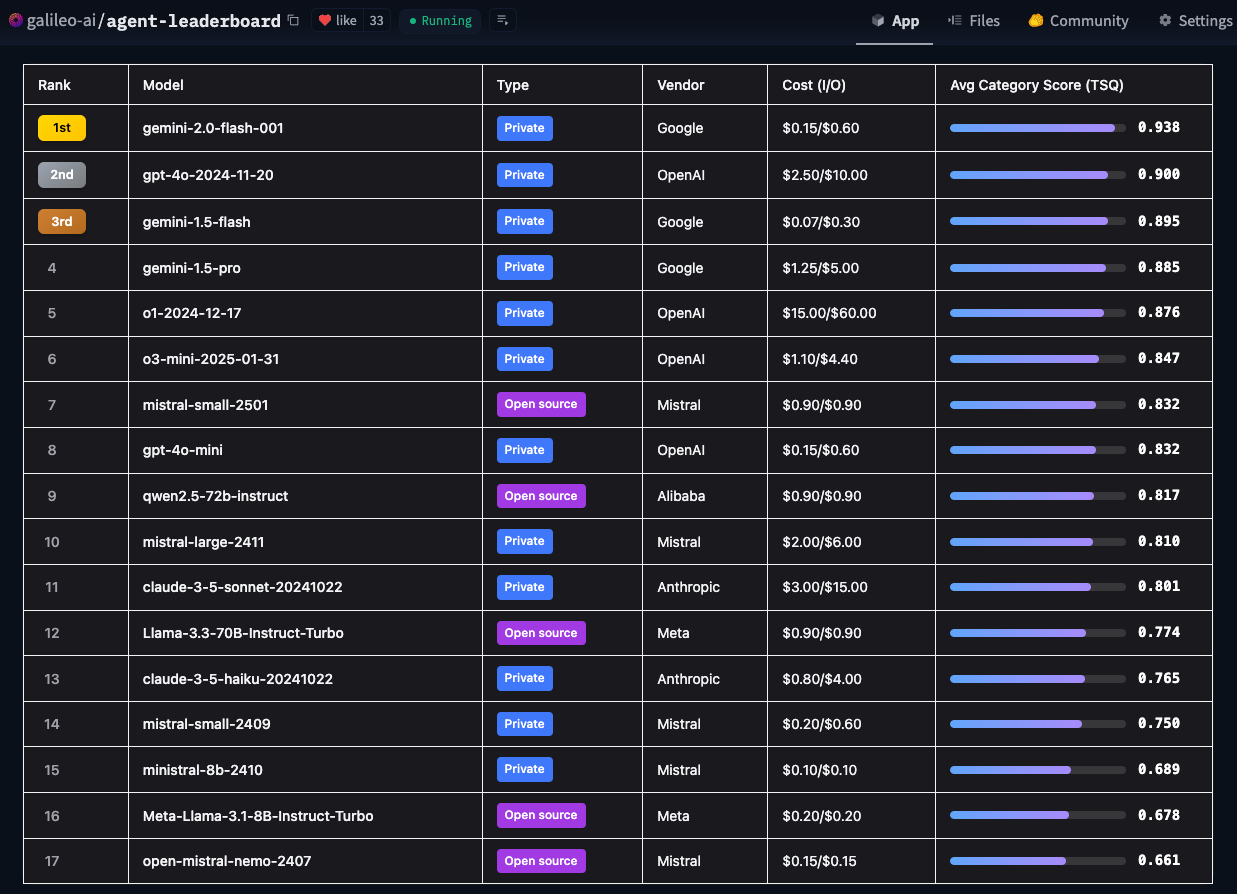

To address this challenge, Hugging Face has introduced the Agent Leaderboard. The leaderboard uses Galileo's Tool Selection Quality (TSQ) metric to evaluate how well different large language models (LLMs) handle tool-based interactions, giving developers a clear reference.

- Hugging Face Agent Leaderboard

Hugging Face built this leaderboard to answer a central question: "How well do AI agents actually perform in real business environments?" Academic benchmarks are important, but they focus on technical capabilities; Hugging Face focuses instead on which models actually work for a variety of real-world use cases.

What Makes the Hugging Face Agent Leaderboard Unique

Mainstream evaluation frameworks usually focus on specific domains. For example, BFCL is strong in academic domains such as math, entertainment, and education; τ-bench focuses on industry-specific scenarios such as retail and airlines; xLAM spans data generation across 21 domains; and ToolACE focuses on API interactions across 390 domains. Hugging Face's leaderboard stands out by integrating these datasets into one comprehensive evaluation framework that covers a wider range of domains and real-world use cases.

By integrating diverse benchmarks and test scenarios, Hugging Face's leaderboard not only assesses a model's technical capabilities but also provides actionable insight into how the model handles edge cases and safety considerations. Hugging Face also examines factors such as cost-effectiveness, implementation guidance, and business impact, all of which are critical for organizations deploying AI agents. With this leaderboard, Hugging Face hopes to help enterprise teams pinpoint the agent model that best fits their specific needs under real-world constraints.

Given how frequently new LLMs are released, Hugging Face plans to update the leaderboard monthly so that it keeps pace with rapidly evolving models.

Key findings

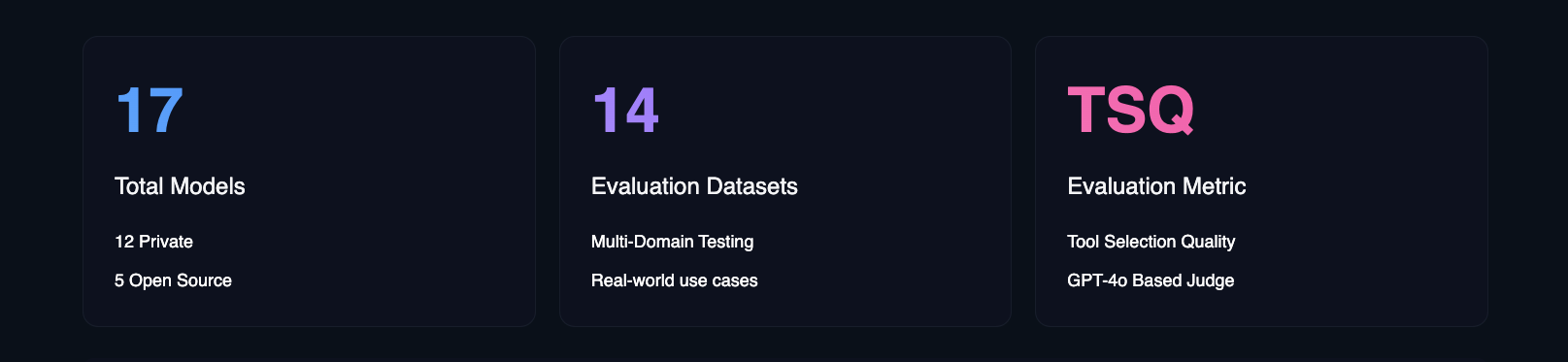

Hugging Face's in-depth analysis of 17 leading LLMs reveals interesting patterns in how AI agents approach real-world tasks. Hugging Face stress-tested both proprietary and open-source models across 14 different benchmarks, covering everything from simple API calls to complex multi-tool interactions.

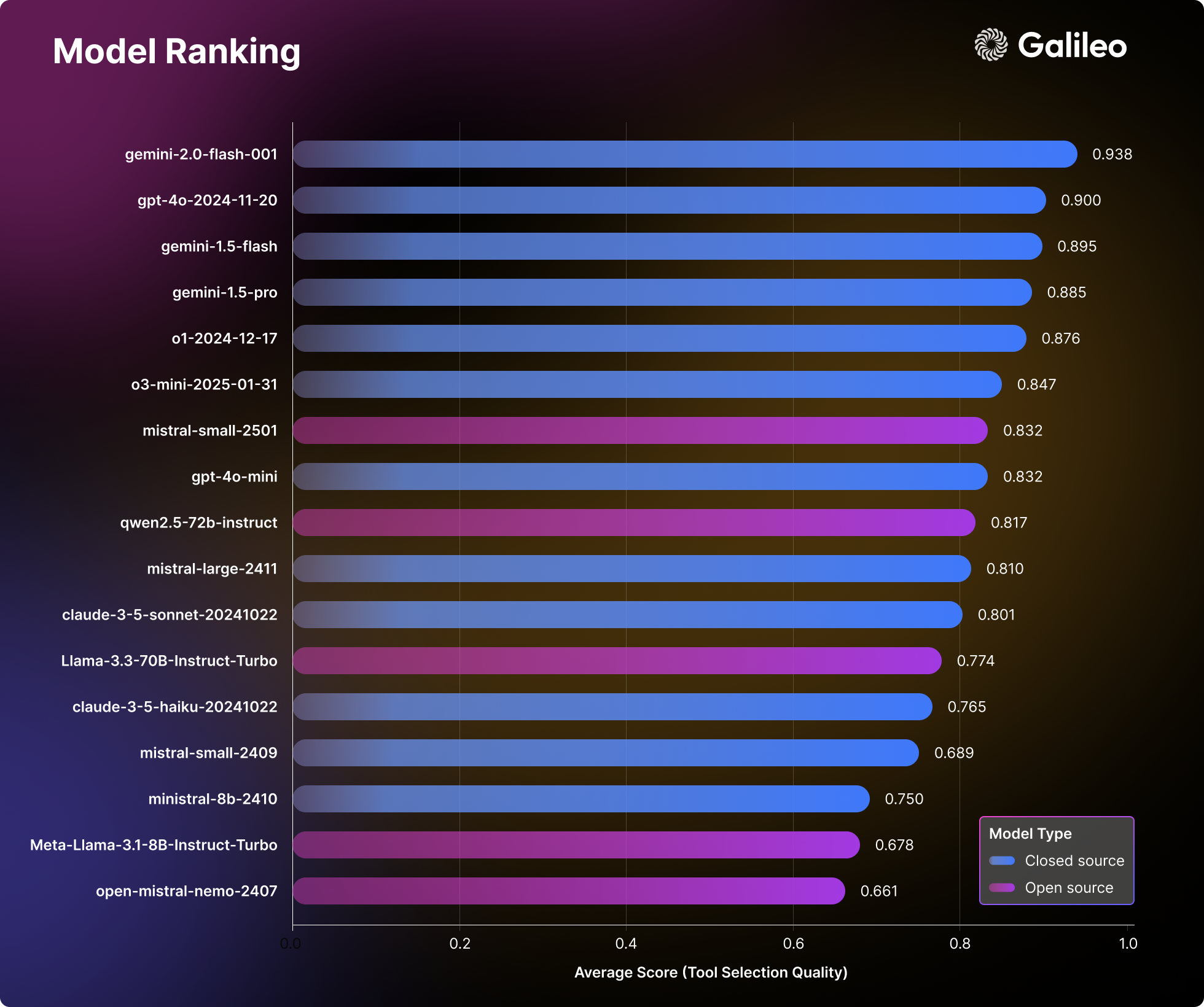

Large Language Model (LLM) Rankings

Hugging Face's results challenge common assumptions about model performance and offer a practical reference for teams building AI agents.

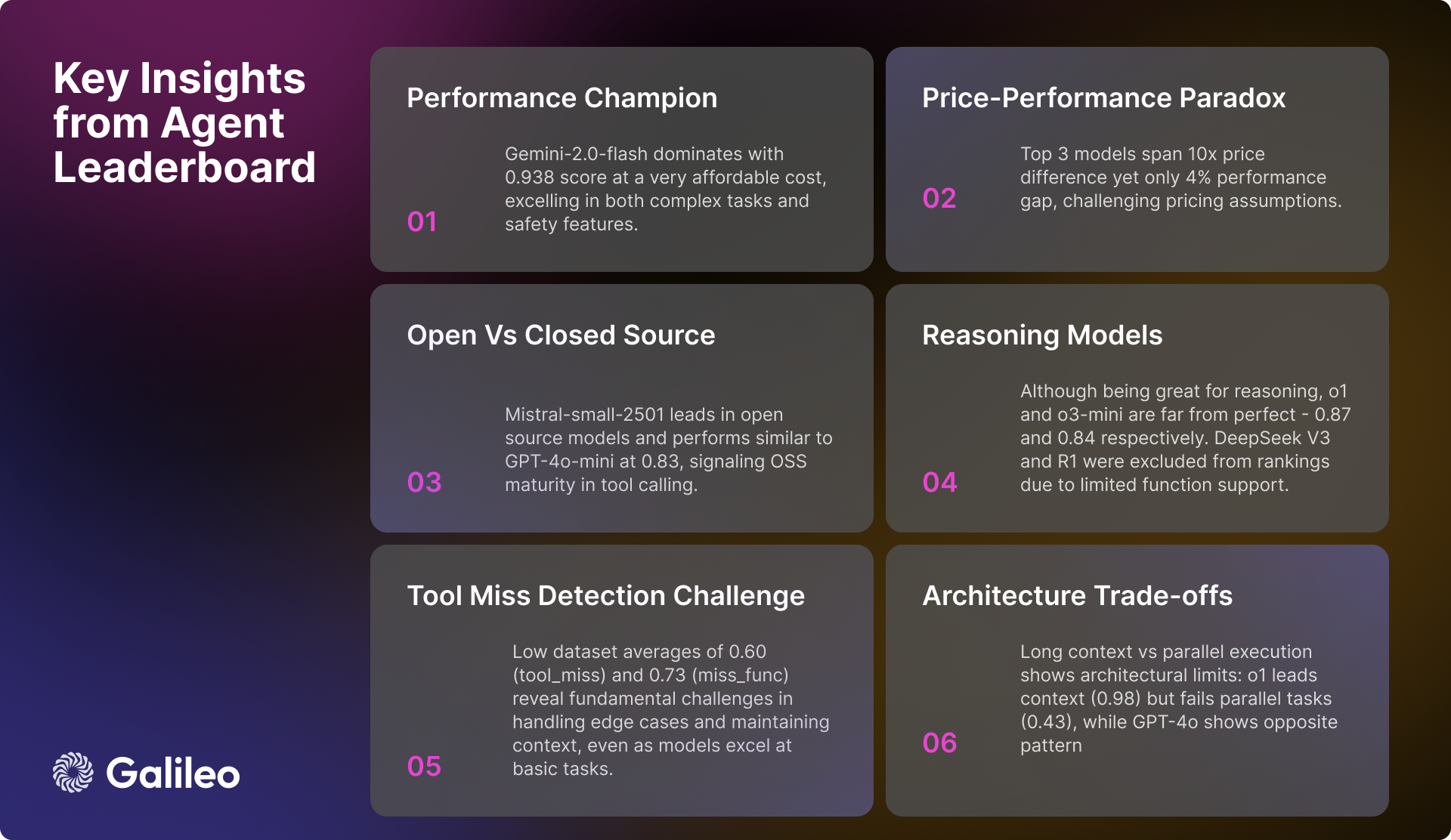

Key Findings from the Agent Leaderboard

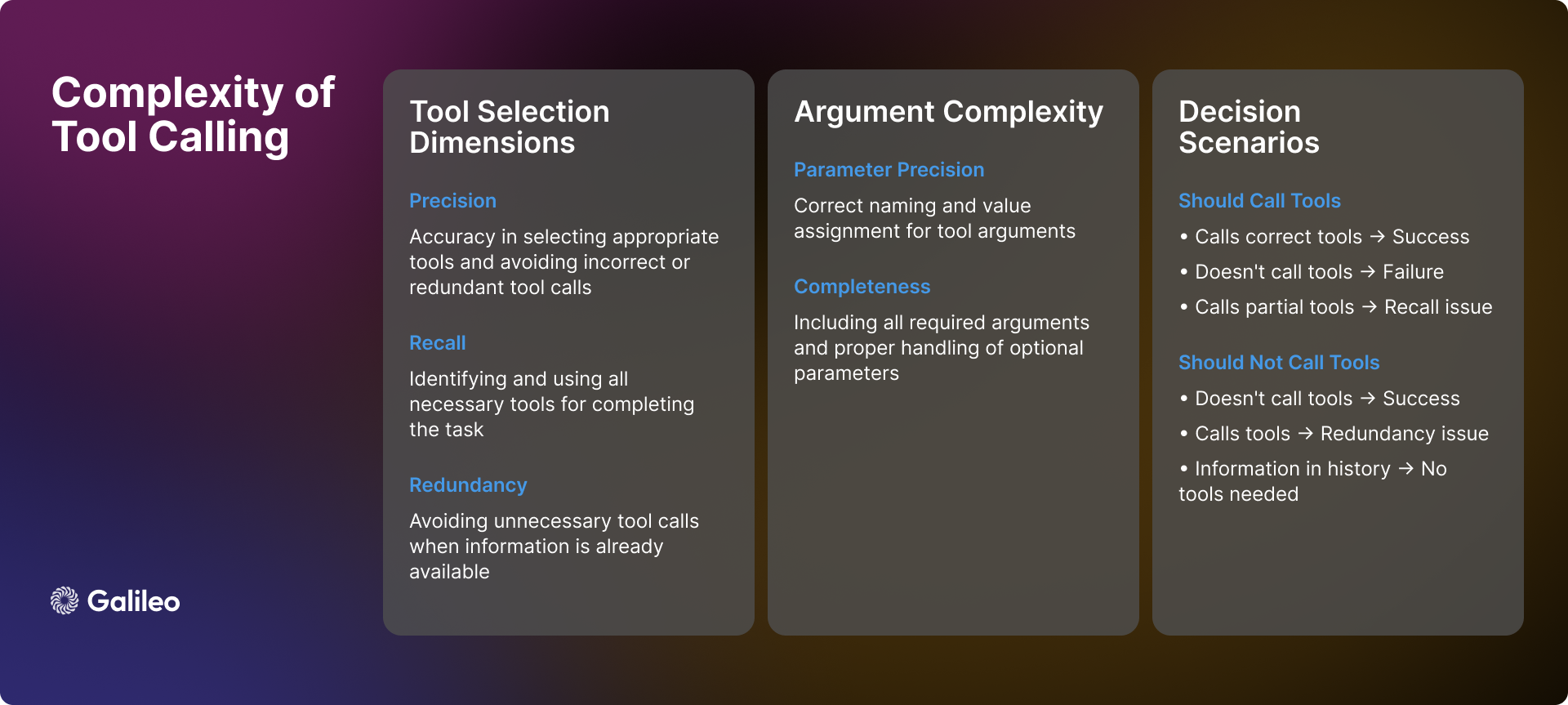

Complexity of Tool Calls

Tool calling is far more complex than making a simple API call. In practice, AI agents face many complex scenarios when using tools and must make precise decisions:

Scenario Recognition

When an agent receives a user query, its first task is to determine whether a tool call is needed at all. Sometimes the required information already exists in the dialog history, making a tool call redundant. In other cases, the available tools may be insufficient to solve the user's problem or irrelevant to the task. In these situations, the agent needs to recognize its limitations and tell the user honestly, rather than forcing an inappropriate tool.
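The decision described above can be illustrated with a toy sketch. It assumes, hypothetically, that each query has already been reduced to a single topic string and that each tool declares the topics it serves. In a real agent the LLM makes this judgment itself, so `Tool` and `triage` are illustrative names, not part of any library.

```python
from dataclasses import dataclass


@dataclass
class Tool:
    name: str
    topics: set[str]  # hypothetical: the kinds of requests this tool can serve


def triage(query_topic: str, known_facts: dict[str, str], tools: list[Tool]) -> str:
    """Toy pre-call decision: answer from history, call a tool, or decline honestly."""
    if query_topic in known_facts:
        return "answer_from_history"  # the dialog already contains the answer
    if any(query_topic in tool.topics for tool in tools):
        return "call_tool"
    return "tell_user_no_suitable_tool"  # don't force an irrelevant tool


tools = [Tool("get_weather", {"weather"})]
print(triage("weather", {}, tools))                    # call_tool
print(triage("weather", {"weather": "sunny"}, tools))  # answer_from_history
print(triage("stock_price", {}, tools))                # tell_user_no_suitable_tool
```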

Tool Selection Dynamics

Tool selection is not a simple yes-or-no decision; it involves both precision and recall. Ideally, an agent identifies every necessary tool and selects nothing irrelevant. In practice, things are often messier. An agent may correctly identify one necessary tool but omit others (insufficient recall), or select the appropriate tools plus some unnecessary ones (insufficient precision). Neither outcome is ideal, but they represent different degrees of partial success.
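As a rough illustration of the precision/recall framing, the sketch below scores a single decision by comparing the set of tools an agent called with the set the task required. The function and tool names are hypothetical, and this set-based calculation is not how TSQ itself is computed (TSQ relies on an LLM judge).

```python
def tool_selection_pr(selected: set[str], required: set[str]) -> tuple[float, float]:
    """Return (precision, recall) for one tool-selection decision.

    precision: share of selected tools that were actually required
    recall:    share of required tools that were actually selected
    """
    true_positives = len(selected & required)
    # Convention: selecting nothing has precision 1.0 (nothing wrong was chosen),
    # and requiring nothing has recall 1.0 (nothing was missed).
    precision = true_positives / len(selected) if selected else 1.0
    recall = true_positives / len(required) if required else 1.0
    return precision, recall


required = {"get_weather", "get_calendar"}

# Insufficient recall: one necessary tool is missing.
print(tool_selection_pr({"get_weather"}, required))  # (1.0, 0.5)

# Insufficient precision: all necessary tools plus an irrelevant one (precision 2/3).
print(tool_selection_pr({"get_weather", "get_calendar", "send_email"}, required))
```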

Parameter Handling

Even when an agent selects the right tool, parameter handling introduces new challenges. The agent must:

- Provide all required parameters and make sure they are named correctly.

- Handle optional parameters correctly.

- Ensure that parameter values are accurate.

- Format parameters according to the tool's specification.
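A minimal sketch of these checks, assuming a hypothetical tool specification in which each parameter records its Python type, whether it is required, and optionally a list of allowed values. Real function-calling APIs typically describe parameters with JSON Schema instead, but the checking logic carries over.

```python
from typing import Any

# Hypothetical tool specification: each parameter records its Python type, whether it
# is required, and (optionally) the values it may take.
WEATHER_TOOL = {
    "name": "get_weather",
    "parameters": {
        "city": {"type": str, "required": True},
        "unit": {"type": str, "required": False, "enum": ["celsius", "fahrenheit"]},
    },
}


def check_tool_call(spec: dict[str, Any], args: dict[str, Any]) -> list[str]:
    """Return the problems found in a proposed tool call (an empty list means valid)."""
    problems = []
    params = spec["parameters"]
    # Every required parameter must be present under its exact name;
    # optional parameters may be omitted.
    for name, rule in params.items():
        if rule["required"] and name not in args:
            problems.append(f"missing required parameter: {name}")
    for name, value in args.items():
        # Unknown names usually mean a misspelled or invented parameter.
        if name not in params:
            problems.append(f"unknown parameter: {name}")
            continue
        rule = params[name]
        # Values must have the expected type and respect any allowed-value list.
        if not isinstance(value, rule["type"]):
            problems.append(f"{name} should be {rule['type'].__name__}")
        elif "enum" in rule and value not in rule["enum"]:
            problems.append(f"{name} must be one of {rule['enum']}")
    return problems


print(check_tool_call(WEATHER_TOOL, {"city": "Paris"}))  # []
print(check_tool_call(WEATHER_TOOL, {"cty": "Paris", "unit": "kelvin"}))  # three problems
```

Type and format checks like these cannot confirm that a value is actually right (for example, the correct city); that still depends on the model understanding the request.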

Sequential Decision-Making

For multi-step tasks, agents need more advanced decision-making capabilities:

- Determine the optimal order of tool calls.

- Handle dependencies between tool calls.

- Maintain contextual coherence across multiple operations.

- Adapt flexibly to partial successes or failures.
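One way to find a valid call order is to treat the plan as a dependency graph and sort it topologically. The sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) on a hypothetical travel-booking plan; calls that become ready in the same step have no mutual dependencies and could be issued in parallel.

```python
from graphlib import TopologicalSorter

# Hypothetical multi-step plan: each tool call maps to the calls whose output it needs.
plan = {
    "search_flights": set(),
    "get_user_profile": set(),
    "book_flight": {"search_flights", "get_user_profile"},
    "send_confirmation": {"book_flight"},
}

sorter = TopologicalSorter(plan)
sorter.prepare()
steps = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())  # calls whose dependencies are all satisfied
    steps.append(ready)                 # calls in the same step are independent
    for call in ready:
        # A real agent would invoke the tool here; on failure it could retry or
        # re-plan instead of marking the call as done.
        sorter.done(call)

print(steps)
# [['get_user_profile', 'search_flights'], ['book_flight'], ['send_confirmation']]
```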

These complexities show that tool selection quality should not be treated as a simple metric. Instead, it is a comprehensive measure of an agent's ability to make complex decisions in real-world scenarios.

Methodology

Hugging Face's evaluation follows a systematic methodology intended to provide a comprehensive and fair assessment of AI agents:

- Model Selection: Hugging Face selected a diverse set of leading language models, including both proprietary and open-source ones, to give a comprehensive view of the current landscape.

- Agent Configuration: Hugging Face configures each model as an agent with a standardized system prompt and gives every agent access to the same set of tools. This standardization ensures that performance differences reflect the model's inherent capabilities rather than external factors such as prompt engineering.

- Metric Definition: Hugging Face uses Tool Selection Quality (TSQ) as the core evaluation metric, focusing on whether the right tools are selected and whether their parameters are used effectively. TSQ is designed with the performance requirements of real-world applications in mind.

- Dataset Curation: Hugging Face built a balanced, multi-domain dataset by strategically sampling from established benchmark datasets. It tests agent capabilities ranging from basic function calls to complex multi-turn interactions.

- Scoring System: The final score is the equally weighted average of the scores across all datasets. This keeps any single capability from dominating the overall result and gives a more balanced picture of an agent's overall performance.
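To see why equal weighting matters, the sketch below compares an equally weighted average with a sample-weighted one. The dataset names come from this article, but the scores and sample counts are made up for illustration.

```python
# Hypothetical per-dataset TSQ scores and sample counts for one model.
results = {
    "xlam_single_tool_single_call": {"score": 0.98, "n_samples": 100},
    "BFCL_v3_irrelevance": {"score": 0.90, "n_samples": 100},
    "tau_long_context": {"score": 0.60, "n_samples": 20},
}

# Equal weighting: every dataset counts the same, regardless of its size.
equal_weight = sum(r["score"] for r in results.values()) / len(results)

# For contrast: weighting by sample count lets the largest datasets dominate,
# hiding the weak long-context result.
total = sum(r["n_samples"] for r in results.values())
sample_weight = sum(r["score"] * r["n_samples"] for r in results.values()) / total

print(round(equal_weight, 3), round(sample_weight, 3))  # 0.827 0.909
```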

Through this structured assessment methodology, Hugging Face aims to provide insights that can directly guide real-world deployment decisions.

How Does Hugging Face Measure Agent Performance?

How the Evaluation Framework Works

As noted above, evaluating tool calls requires reliable measurement across many different scenarios. Hugging Face uses the Tool Selection Quality metric to assess agents' tool-calling performance, looking at both the accuracy of tool selection and the effectiveness of parameter usage. The framework determines whether an agent uses tools appropriately to accomplish a task and whether it recognizes situations where no tool call is needed.

During evaluation, Hugging Face used GPT-4o with ChainPoll to judge tool-selection decisions. For each interaction, multiple independent judgments are collected, and the final score is the percentage of positive judgments. Each judgment includes a detailed explanation, which keeps the evaluation transparent.
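The aggregation step can be sketched as follows, assuming a hypothetical `judge` callable that returns `True` when it deems a tool call correct. In the real setup each judgment comes from GPT-4o reasoning through the interaction; here a seeded random judge stands in for it, so the sketch shows only how the percentage score is formed, not Galileo's implementation.

```python
import random
from typing import Callable


def chainpoll_style_score(judge: Callable[[str], bool], interaction: str,
                          n_judgments: int = 5) -> float:
    """Collect n independent verdicts and return the fraction that are positive."""
    verdicts = [judge(interaction) for _ in range(n_judgments)]
    return sum(verdicts) / n_judgments


# Hypothetical stand-in judge. A real judge would prompt an LLM such as GPT-4o to
# reason step by step about the tool call and return a verdict plus an explanation.
rng = random.Random(0)


def noisy_judge(interaction: str) -> bool:
    return rng.random() < 0.8  # agrees with the tool call roughly 80% of the time


score = chainpoll_style_score(
    noisy_judge, "user: weather in Paris? -> get_weather(city='Paris')"
)
print(score)  # one of 0.0, 0.2, 0.4, 0.6, 0.8, 1.0
```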

The following example shows how to use the TSQ metric to evaluate an LLM's tool-calling performance on a dataset:

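```python
import pandas as pd
import promptquality as pq
from langchain_openai import ChatOpenAI

# Assumes you have already authenticated with Galileo (see the promptquality login docs).

file_path = "path/to/your/dataset.parquet"  # replace with the path to your dataset file
project_name = "agent-leaderboard-evaluation"  # replace with your project name
run_name = "tool-selection-quality-run"  # replace with your run name
model = "gpt-4o"  # name of the model you want to evaluate
tools = [...]  # your list of tools

df = pd.read_parquet(file_path, engine="fastparquet")

# TSQ scorer: ChainPoll with GPT-4o as the judge model
chainpoll_tool_selection_scorer = pq.CustomizedChainPollScorer(
    scorer_name=pq.CustomizedScorerName.tool_selection_quality,
    model_alias=pq.Models.gpt_4o,
)

# LangChain callback that logs each model call to Galileo and scores it with TSQ
evaluate_handler = pq.GalileoPromptCallback(
    project_name=project_name,
    run_name=run_name,
    scorers=[chainpoll_tool_selection_scorer],
)

# Assumption: any LangChain chat model that supports bind_tools works here;
# ChatOpenAI is used as an example (model loading is not part of promptquality).
llm = ChatOpenAI(model=model, temperature=0.0, max_tokens=4000)

system_msg = {
    "role": "system",
    "content": 'Your job is to use the given tools to answer the query of human. If there is no relevant tool then reply with "I cannot answer the question with given tools". If tool is available but sufficient information is not available, then ask human to get the same. You can call as many tools as you want. Use multiple tools if needed. If the tools need to be called in a sequence then just call the first tool.',
}

# Bind the tool schemas once; the bound model is reused for every row
chain = llm.bind_tools(tools)

outputs = []
for row in df.itertuples():
    # row.conversation holds the dialog history (a sequence of role/content messages)
    outputs.append(
        chain.invoke(
            [system_msg, *row.conversation],
            config=dict(callbacks=[evaluate_handler]),
        )
    )

# Upload the logged runs and their TSQ scores to Galileo
evaluate_handler.finish()
```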

Why Did Hugging Face Use an LLM to Evaluate Tool Calls?

An LLM-based evaluator can handle a wide range of complex scenarios. It can verify whether an agent correctly handled insufficient context, that is, whether it asked the user for more information before calling a tool. In multi-tool scenarios, it can check whether the agent identified all the necessary tools and called them in the correct order. In long conversations, it can confirm that the agent took relevant information from earlier in the history into account. And when tools are missing or inapplicable, it can determine whether the agent correctly refrained from calling a tool and thus avoided inappropriate actions.

To score well on TSQ, AI agents need sophisticated capabilities: selecting the right tool when needed, supplying accurate parameters, coordinating multiple tools efficiently, and recognizing when no tool is needed. For example, when all the necessary information is already in the dialog history, or when no suitable tool is available, not calling a tool is the better choice.

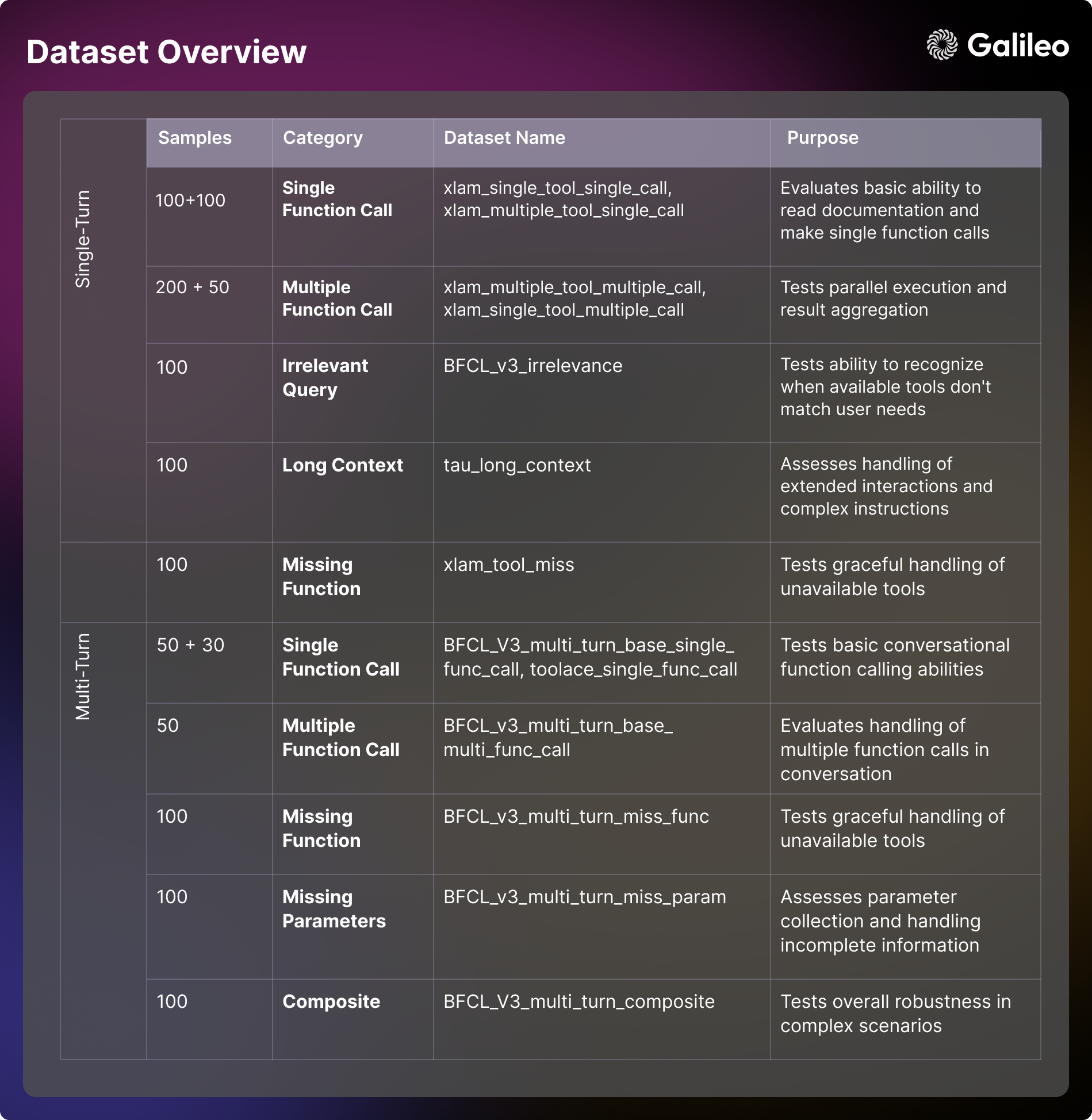

Composition of the Evaluation Dataset

Hugging Face's evaluation framework uses a curated set of benchmark datasets drawn from BFCL (Berkeley Function Calling Leaderboard), τ-bench (Tau benchmark), xLAM, and ToolACE. Each dataset targets a specific aspect of agent capability. Understanding these dimensions matters for both model evaluation and practical application development.

Single-Turn Capabilities

- Basic tool use: Assesses an agent's ability to understand tool documentation, process parameters, and make basic function calls, with a focus on response formatting and error handling in direct interactions. This ability is critical for simple automation tasks in real-world applications, such as setting reminders or fetching basic information [xlam_single_tool_single_call].

- Tool selection: Assesses the model's ability to choose the correct tool from several options, testing how well it understands tool documentation and judges whether a tool fits the task. This ability is critical for building general-purpose agents [xlam_multiple_tool_single_call].

- Parallel execution: Tests the model's ability to orchestrate multiple tool calls at the same time, which is critical for efficiency in real-world applications [xlam_multiple_tool_multiple_call].

- Tool reuse: Evaluates the agent's ability to handle batch operations and parameter variations efficiently, which is particularly important for batch processing in real-world applications [xlam_single_tool_multiple_call].

Error Handling and Edge Cases

- Irrelevance detection: Tests whether the model recognizes the limits of its tools and responds appropriately when the available tools do not match the user's needs. This ability is a cornerstone of a good user experience and system reliability [BFCL_v3_irrelevance].

- Missing tools: Tests how gracefully the model handles situations where the needed tool is unavailable, including telling the user about its limitations and offering alternatives [xlam_tool_miss, BFCL_v3_multi_turn_miss_func].

Context Management

- Long context: Evaluates the model's ability to maintain coherence and follow complex instructions over long conversations, which is critical for complex workflows and extended interactions [tau_long_context, BFCL_v3_multi_turn_long_context].

Multi-Turn Interaction

- Basic conversation: Tests the agent's ability to make function calls within a conversation and maintain context across a multi-turn dialog. This foundational ability is critical for interactive applications [BFCL_v3_multi_turn_base_single_func_call, toolace_single_func_call].

- Complex interaction: Combines multiple challenges to test the agent's overall robustness and problem-solving ability in complex scenarios [BFCL_v3_multi_turn_base_multi_func_call, BFCL_v3_multi_turn_composite].

Parameter Management

- Missing parameters: Tests how the model handles incomplete information and how effectively it asks the user for the parameters it needs [BFCL_v3_multi_turn_miss_param].
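The grouping above can be kept as a simple lookup table, which also makes it easy to report per-dimension averages alongside the overall score. The category keys and the `category_scores` helper are illustrative names; the 14 subset names are the ones listed in this section.

```python
from statistics import mean

# Evaluation dimensions mapped to the dataset subsets listed above.
CATEGORIES = {
    "single_turn": [
        "xlam_single_tool_single_call",
        "xlam_multiple_tool_single_call",
        "xlam_multiple_tool_multiple_call",
        "xlam_single_tool_multiple_call",
    ],
    "error_handling": [
        "BFCL_v3_irrelevance",
        "xlam_tool_miss",
        "BFCL_v3_multi_turn_miss_func",
    ],
    "context_management": ["tau_long_context", "BFCL_v3_multi_turn_long_context"],
    "multi_turn": [
        "BFCL_v3_multi_turn_base_single_func_call",
        "toolace_single_func_call",
        "BFCL_v3_multi_turn_base_multi_func_call",
        "BFCL_v3_multi_turn_composite",
    ],
    "parameter_management": ["BFCL_v3_multi_turn_miss_param"],
}


def category_scores(per_dataset: dict[str, float]) -> dict[str, float]:
    """Average per-dataset scores within each category, skipping subsets with no score."""
    result = {}
    for category, datasets in CATEGORIES.items():
        available = [per_dataset[d] for d in datasets if d in per_dataset]
        if available:
            result[category] = mean(available)
    return result


# Hypothetical scores for two subsets.
print(category_scores({"tau_long_context": 0.5, "BFCL_v3_multi_turn_long_context": 0.7}))
# {'context_management': 0.6}
```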

Hugging Face has open-sourced the dataset to support community research and application development: Hugging Face Agent Leaderboard Dataset.

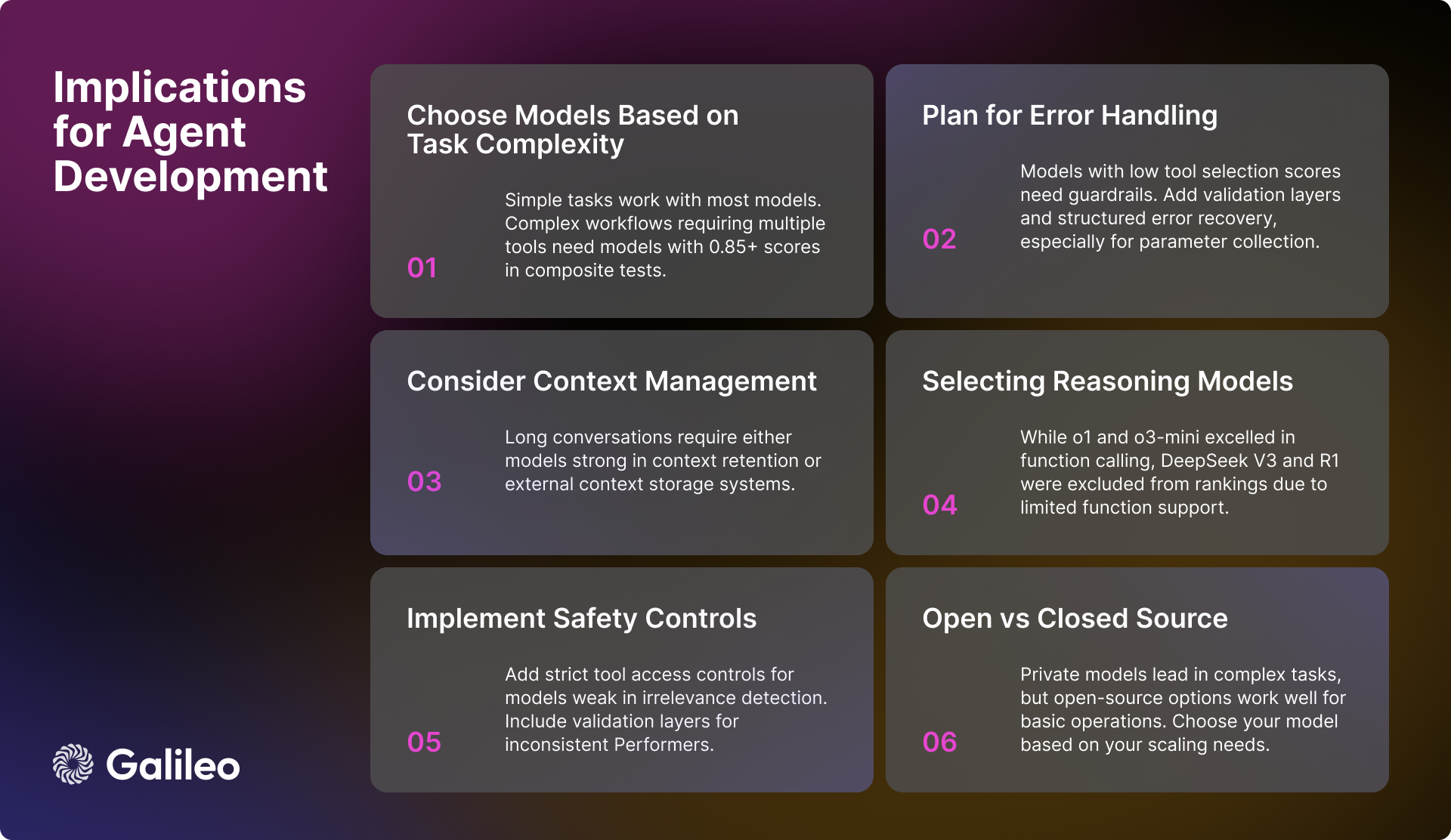

Practical Implications for AI Engineers

Hugging Face's results offer AI engineers many useful insights for building agents. Consider the following factors when building a robust, efficient agent system:

Model Selection and Performance

For applications with complex workflows, choose high-performing models that score above 0.85 on composite tasks. Most models handle basic tool calls well, but for parallel operations, look at the model's score on that specific task rather than relying solely on overall metrics.
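A minimal sketch of shortlisting models by task-specific thresholds instead of a single overall number. The scores below are per-category figures quoted later in this article; categories the article does not report for a model are simply absent and count as failing, which is a deliberately conservative (and hypothetical) choice.

```python
# Per-category scores as quoted in this article; unreported categories are omitted.
MODEL_SCORES = {
    "Gemini-2.0-flash": {"composite": 0.95},
    "Gemini-1.5-pro": {"composite": 0.93},
    "Mistral-large": {"composite": 0.76},
    "GPT-4o": {"parallel": 0.98},
    "GPT-4o-mini": {"parallel": 0.99},
}


def shortlist(scores: dict[str, dict[str, float]],
              requirements: dict[str, float]) -> list[str]:
    """Return models meeting every threshold; a missing score counts as a failure."""
    return sorted(
        model
        for model, categories in scores.items()
        if all(categories.get(cat, 0.0) >= threshold
               for cat, threshold in requirements.items())
    )


print(shortlist(MODEL_SCORES, {"composite": 0.85}))  # ['Gemini-1.5-pro', 'Gemini-2.0-flash']
print(shortlist(MODEL_SCORES, {"parallel": 0.95}))   # ['GPT-4o', 'GPT-4o-mini']
```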

Context and error management

For models that perform poorly in long-context scenarios, implement an effective context summarization strategy. If you choose a model that is weak at irrelevance detection or parameter handling, build robust error handling around it. For models that need extra help collecting parameters, consider a structured workflow that guides the user to supply the required information.
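One simple context-compaction strategy keeps the system prompt and the most recent turns verbatim and collapses everything older into a single summary message. The `summarize` argument is a hypothetical hook; in practice it would usually be another LLM call, while the usage below just joins the old messages. Messages are assumed to be role/content dictionaries like the `system_msg` in the evaluation code.

```python
from typing import Callable

Message = dict[str, str]


def compact_history(messages: list[Message], keep_last: int,
                    summarize: Callable[[list[Message]], str]) -> list[Message]:
    """Replace all but the most recent `keep_last` turns with one summary message.

    A leading system prompt is always kept verbatim.
    """
    if keep_last < 1:
        raise ValueError("keep_last must be at least 1")
    system = messages[:1] if messages and messages[0]["role"] == "system" else []
    rest = messages[len(system):]
    if len(rest) <= keep_last:
        return messages  # short enough already
    old, recent = rest[:-keep_last], rest[-keep_last:]
    # Assumption: the target API accepts an extra system message; some APIs allow
    # only one, in which case the summary could be merged into the first.
    summary = {"role": "system",
               "content": "Summary of earlier conversation: " + summarize(old)}
    return system + [summary] + recent


history = [
    {"role": "system", "content": "You are a helpful agent."},
    {"role": "user", "content": "Book a flight to Paris."},
    {"role": "assistant", "content": "Which date?"},
    {"role": "user", "content": "March 3."},
]
compacted = compact_history(
    history, keep_last=1, summarize=lambda ms: " | ".join(m["content"] for m in ms)
)
```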

Safety and reliability

For safety and reliability, enforce strict access control on tools, especially for models that score poorly on irrelevance detection. For models whose performance is less consistent, consider adding extra verification layers. A well-designed error recovery system is also crucial, especially for models that struggle with missing parameters.
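Strict access control can be as simple as an allowlist checked before every call, plus an extra confirmation step for high-risk tools. The registry, tool names, and `confirm` callback below are hypothetical; the confirmation hook is where a human-in-the-loop or rule-based verification layer would plug in.

```python
from typing import Any, Callable

# Hypothetical tool registry: name -> implementation.
TOOLS: dict[str, Callable[..., Any]] = {
    "get_weather": lambda city: f"Sunny in {city}",
    "delete_account": lambda user_id: f"deleted {user_id}",
    "run_shell": lambda cmd: f"ran {cmd}",
}

ALLOWED = {"get_weather", "delete_account"}  # tools this agent may call at all
NEEDS_CONFIRMATION = {"delete_account"}      # high-risk tools behind an extra check


def guarded_call(name: str, args: dict[str, Any],
                 confirm: Callable[[str, dict[str, Any]], bool]) -> Any:
    """Run a tool only if it is allowlisted and, when required, explicitly confirmed."""
    if name not in ALLOWED or name not in TOOLS:
        raise PermissionError(f"tool not permitted: {name}")
    if name in NEEDS_CONFIRMATION and not confirm(name, args):
        raise PermissionError(f"confirmation denied for: {name}")
    return TOOLS[name](**args)


print(guarded_call("get_weather", {"city": "Paris"}, confirm=lambda n, a: False))
# Sunny in Paris
```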

Optimize system performance

When designing the workflow architecture, account for how differently models handle parallel execution and long-context scenarios. When implementing batch processing, evaluate the model's tool reuse capability, since it directly affects overall system efficiency.
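When a model emits several independent tool calls at once, the application can execute them concurrently. This sketch uses the standard library's `ThreadPoolExecutor`, which suits I/O-bound tools such as HTTP APIs; `get_weather` is a hypothetical stand-in tool, and the batch of calls to it also illustrates tool reuse.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable


def run_parallel(calls: list[tuple[Callable[..., Any], dict[str, Any]]],
                 max_workers: int = 4) -> list[Any]:
    """Execute independent tool calls concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(fn, **kwargs) for fn, kwargs in calls]
        return [f.result() for f in futures]  # re-raises any exception from a tool


def get_weather(city: str) -> str:  # hypothetical tool
    return f"weather:{city}"


# Tool reuse: the same tool called with different parameters, as in a batch request.
results = run_parallel([(get_weather, {"city": c}) for c in ["Paris", "Tokyo", "Lima"]])
print(results)  # ['weather:Paris', 'weather:Tokyo', 'weather:Lima']
```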

Current State of AI Model Development

Proprietary models still lead in overall capability, but open-source models are improving rapidly. Models of all types are increasingly capable at simple tool interactions; complex multi-turn interactions and long-context scenarios, however, remain challenging.

Because performance varies so much across dimensions, models should be chosen based on the needs of the specific use case. Rather than focusing only on general-purpose metrics, developers should evaluate how a model actually performs in the target application scenario.

Hugging Face hopes this Agent Leaderboard will serve as a valuable reference for developers.

Model Performance Overview

Reasoning Models

An interesting finding in Hugging Face's analysis concerns reasoning models. While o1 and o3-mini integrated well with function calling, scoring 0.876 and 0.847 respectively, Hugging Face ran into difficulties with other reasoning models. Specifically, DeepSeek V3 and DeepSeek R1, despite their impressive general-purpose capabilities, were excluded from the leaderboard because their current versions offer limited function-calling support.

It is important to emphasize that excluding DeepSeek V3 and DeepSeek R1 from the leaderboard is not a judgment on these models' strong performance, but a deliberate decision based on their published limitations. In the official discussions of DeepSeek V3 and DeepSeek R1 on Hugging Face, the developers stated clearly that the current versions do not yet support function calling. Hugging Face chose to wait for a future release with native function-calling support rather than devise workarounds or publish potentially misleading metrics.

This case highlights that function calling is a specialized capability that not every high-performing language model has by default. Even models that excel at reasoning may not natively support structured function calls unless they were specifically designed and trained for it. A thorough evaluation against your specific use case is therefore the best way to make the right choice.

Elite Tier (>= 0.9)

Gemini-2.0-flash leads the leaderboard with an excellent average score of 0.938. It is stable and consistent across all evaluation categories, with particular strengths in composite scenarios (0.95) and irrelevance detection (0.98). At $0.15/$0.6 per million tokens, Gemini-2.0-flash strikes a compelling balance between performance and cost.

GPT-4o follows closely with a score of 0.900, performing well on complex tasks such as multi-tool handling (0.99) and parallel execution (0.98). Although GPT-4o is significantly more expensive at $2.5/$10 per million tokens, its strong performance still justifies the higher cost.
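The price gap is easier to judge with a concrete workload. The sketch below assumes the two quoted prices are per-million-token input and output rates respectively (the usual convention) and uses a made-up volume of 10M input and 2M output tokens; the scores are the averages reported in this article.

```python
# Per-million-token prices (input, output) in USD, as quoted in this article.
PRICES = {
    "Gemini-2.0-flash": (0.15, 0.6),
    "GPT-4o": (2.5, 10.0),
}
SCORES = {"Gemini-2.0-flash": 0.938, "GPT-4o": 0.900}


def workload_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of processing the given token volumes with `model`."""
    price_in, price_out = PRICES[model]
    return input_tokens / 1e6 * price_in + output_tokens / 1e6 * price_out


# Hypothetical workload: 10M input tokens and 2M output tokens.
for model in PRICES:
    cost = workload_cost(model, 10_000_000, 2_000_000)
    print(f"{model}: ${cost:.2f}, score {SCORES[model]:.3f}")
# Gemini-2.0-flash: $2.70, score 0.938
# GPT-4o: $45.00, score 0.900
```

Under these assumptions the same workload costs roughly 17 times more on GPT-4o.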

High-Performance Tier (0.85 to 0.9)

The high-performance tier includes several strong models. Gemini-1.5-flash scores an excellent 0.895, performing especially well on irrelevance detection (0.98) and single-function calls (0.99). Gemini-1.5-pro, despite higher pricing of $1.25/$5 per million tokens, scores 0.885, with notable strengths in composite tasks (0.93) and single-tool execution (0.99).

o1, although more expensive at $15/$60 per million tokens, earns its position with a score of 0.876 and industry-leading long-context handling (0.98). The newer o3-mini is competitive at 0.847, excelling in single-function calls (0.975) and irrelevance detection (0.97), and its pricing of $1.1/$4.4 per million tokens makes it a balanced choice.

Mid Tier (0.8 to 0.85)

GPT-4o-mini delivers a solid 0.832, performing particularly well in parallel tool use (0.99) and tool selection. However, it is relatively weak in long-context scenarios (0.51).

Among open-source models, mistral-small-2501 leads with a score of 0.832, showing significant improvements over the previous version in both long-context handling (0.92) and tool selection (0.99). Qwen-72b follows closely at 0.817, matching proprietary models in irrelevance detection (0.99) and showing strong long-context handling (0.92). Mistral-large performs well on tool selection (0.97) but still struggles with composite tasks (0.76).

Claude-sonnet scores 0.801, excelling in missing-tool detection (0.92) and single-function calls (0.955).

Base Tier (< 0.8)

The base tier consists mainly of models that do well in specific areas but have lower overall scores. Claude-haiku delivers balanced performance with a score of 0.765 and, at $0.8/$4 per million tokens, offers strong cost-effectiveness.

The open-source Llama-70B shows promise at 0.774, especially in multi-tool scenarios (0.99). Smaller variants such as Mistral-small (0.750), Mistral-8b (0.689), and Mistral-nemo (0.661) offer efficient options for basic tasks.
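The tier boundaries used in this section can be expressed as a small function. It treats each lower bound as inclusive, which is an assumption since the headings do not say how ties are handled; the scores are averages reported in this article.

```python
def tier(score: float) -> str:
    """Map an average TSQ score to the tiers used in this section."""
    if score >= 0.9:
        return "elite"
    if score >= 0.85:
        return "high"
    if score >= 0.8:
        return "mid"
    return "base"


# Average scores reported in this article.
scores = {
    "Gemini-2.0-flash": 0.938,
    "GPT-4o": 0.900,
    "Gemini-1.5-flash": 0.895,
    "Gemini-1.5-pro": 0.885,
    "Qwen-72b": 0.817,
    "Claude-sonnet": 0.801,
    "Llama-70B": 0.774,
    "Claude-haiku": 0.765,
}

for model, s in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{model:18} {s:.3f} {tier(s)}")
```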

These datasets are essential for building a framework that comprehensively assesses the tool-calling capabilities of language models.

Comments: Hugging Face's Agent Leaderboard targets a core pain point of today's large language model applications: how to use tools effectively. For a long time, the industry has focused on models' intrinsic capabilities, but for models to be deployed and solve real-world problems, tool use is the key. The leaderboard gives developers a valuable reference for choosing the model best suited to a specific scenario. From evaluation methodology to dataset construction to the final performance analysis, Hugging Face's report is rigorous and thorough, and it reflects an active effort to bring AI applications into practice. It is especially commendable that the leaderboard looks beyond absolute scores to analyze how models perform in specific scenarios, such as long-context handling and multi-tool calls, which matter greatly in real-world applications. In addition, Hugging Face has open-sourced the evaluation dataset, which should further advance community research on tool-calling capabilities. All in all, this is a timely and valuable effort.

© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
