From Neural Networks to Hugging Face - A Brief History of Neural Networks and Deep Learning

TL;DR: This article is 8,200+ words and takes about 15 minutes to read in full. It briefly reviews the history of neural networks, from the perceptron through deep learning to the latest large-model applications such as ChatGPT.

Original: https://hutusi.com/articles/the-history-of-neural-networks

Nothing in life is to be feared, it is only to be understood.

-- Marie Curie

I. Deep Belief Networks

In 2006, Geoffrey Hinton, a professor at the University of Toronto in Canada, was researching how to train multi-layer neural networks. By then he had been quietly working on neural networks for more than three decades. Although he is now regarded as a towering figure in the field, his results were long underappreciated, because the artificial intelligence community as a whole had little regard for neural networks.

Hinton was born in London, England, into a family that produced several well-known scholars: George Boole, the logician who founded Boolean algebra, was his great-great-grandfather, his grandfather was a science writer, and his father was an entomologist. Hinton was exceptionally bright, but his path through school was convoluted: at university he studied architecture, then physics, then philosophy, and finally graduated with a bachelor's degree in psychology. In 1972, Hinton entered the University of Edinburgh to pursue a doctorate on neural networks. At the time, neural networks were scorned by the field, and even Hinton's supervisor thought they were of little practical use and had no future. Hinton was undeterred. He believed in neural network research and was determined to prove its value, a conviction he held for more than thirty years.

As a young man, Hinton slipped a disk in his lower back while moving a heater, and he has suffered from back problems ever since. In recent years the problem has worsened: most of the time he has to lie flat to relieve the pain, which means he cannot drive or fly, and even when he meets students in the lab he has to lie on a folding bed in his office. Yet the physical pain hit Hinton far less hard than the academic world's indifference to his research. As early as 1969, Minsky's book Perceptrons had passed a damning verdict on multilayer perceptrons that stigmatized all subsequent neural network research: "There will be no future for multilayer perceptrons, because no one in the world can train a multilayer perceptron well enough even to make it learn the simplest functions." A single-layer perceptron is so limited that it cannot solve even a classification problem as basic as XOR (exclusive or), and multilayer perceptrons had no workable training method; together, these claims amounted to declaring neural network research a dead end. Neural networks were regarded as academic heresy and no one believed they could succeed, so students carefully avoided neural network researchers when choosing an advisor, and for a while Hinton could not even recruit enough graduate students.

In 1983, Hinton and Terry Sejnowski invented the Boltzmann machine; its simplified variant, the restricted Boltzmann machine, was later applied to machine learning and became a building block for the layered structure of deep neural networks. In 1986, Hinton, together with David Rumelhart and Ronald Williams, popularized the error backpropagation algorithm (BP) for training multilayer perceptrons, laying the foundation for what would become deep learning. Hinton produced new ideas at regular intervals and kept writing neural network papers, more than two hundred of them, even though they were poorly received. By 2006 he had built up a rich theoretical and practical foundation, and that year he published a paper that would change machine learning, and indeed the whole world.

Hinton found that neural networks with multiple hidden layers can learn to extract features automatically, which works better than traditional machine learning based on hand-crafted features. He also showed that the difficulty of training multi-layer networks can be reduced by pre-training them one layer at a time, which solved the long-standing problem of training multi-layer neural networks. Hinton published these results in two papers at a time when many academic journal editors rejected the very term "neural network"; some manuscripts were returned simply because their titles contained it. To avoid provoking these editors, Hinton gave the model a new name: the "Deep Belief Network".

II. Perceptrons

In fact, neural network research can be traced back to the 1940s. In 1940, 17-year-old Walter Pitts met 42-year-old professor Warren McCulloch at the University of Illinois at Chicago and joined McCulloch's research project: an attempt to use networks of neurons to build a mechanical model of the brain based on logical operations. They abstracted the brain's thinking process in terms of logical operations and proposed the concept of a "neural network", in which the neuron is the smallest unit of information processing. They simplified the working of a neuron into a very simple logical model, later named the "M-P neuron model" after the initials of their surnames.

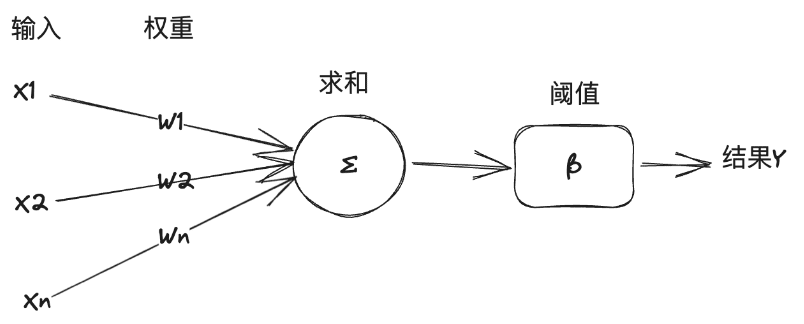

In this model, a neuron receives input signals from other neurons. Different inputs carry different importance, represented by the "weight" of each connection. The neuron sums all of its inputs according to their weights and compares the result with its "threshold" to decide whether or not to output a signal to the outside world.
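The weighted-sum-and-threshold rule can be sketched in a few lines of Python. This is a minimal illustration, not the original 1943 formulation (which used binary inputs and distinguished excitatory from inhibitory connections); the function name `mp_neuron` and the hand-picked weights and thresholds are hypothetical, and the neuron is assumed to fire when the sum reaches (>=) the threshold.

```python
def mp_neuron(inputs, weights, threshold):
    """Return 1 (fire) if the weighted sum of inputs reaches the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# Hand-picked (hypothetical) settings: with both weights 1, a threshold of 2
# makes the neuron behave like logical AND, and a threshold of 1 like logical OR.
for a in (0, 1):
    for b in (0, 1):
        and_out = mp_neuron([a, b], [1, 1], threshold=2)
        or_out = mp_neuron([a, b], [1, 1], threshold=1)
        print(f"inputs=({a}, {b})  AND={and_out}  OR={or_out}")
```

Changing only the threshold changes which logical function the same neuron computes, which is why the M-P model lends itself to analysis with symbolic logic.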

The M-P model is simple and direct enough to be expressed in symbolic logic, and AI researchers used it as the basis for building neural network models to solve machine learning tasks. A brief note on how artificial intelligence, machine learning and deep learning relate: artificial intelligence is the use of computer technology to achieve human-like intelligence, and textbooks generally define it as the study and construction of intelligent agents. An intelligent agent, or simply agent, solves specific or general tasks by imitating human thinking and cognition. An agent that solves specific tasks is called weak AI, or artificial narrow intelligence (ANI), while an agent that solves general tasks is called strong AI, or artificial general intelligence (AGI). Machine learning is a branch of artificial intelligence in which systems learn from data and improve. Deep learning, in turn, is a branch of machine learning that uses neural networks.

In 1957, Rosenblatt, a professor of psychology at Cornell University, simulated a neural network model on an IBM computer, which he called the Perceptron. His approach combined a set of M-P neurons that could be trained to perform some machine vision pattern recognition tasks. Broadly, machine learning tasks come in two kinds: classification and regression. Classification determines which class a piece of data belongs to, such as recognizing whether an image shows a cat or a dog; regression predicts a numerical value from other data, such as estimating a person's weight from their image. The perceptron solves linear classification problems. The following example, from the book "The Frontiers of Intelligence", explains how a perceptron works:

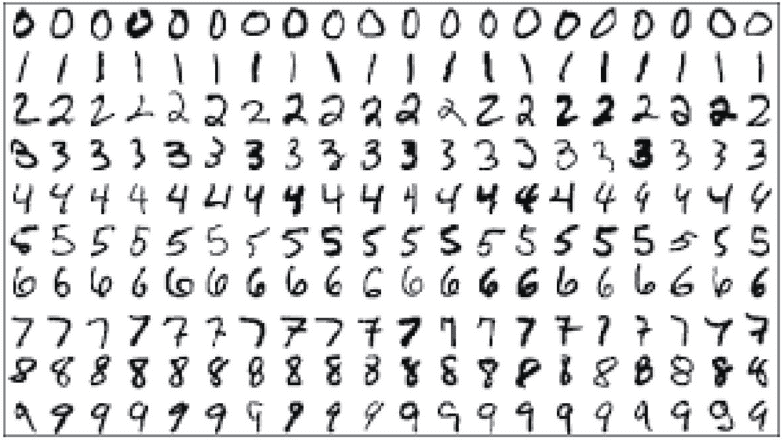

Suppose the task is to recognize Arabic numerals automatically. The digits to be recognized may be handwritten or printed in various styles; each one is scanned and stored as a 14×14-pixel image file. First, a training set like the one in the following figure is prepared for machine learning. A training set is data provided specifically for the computer to learn from: not just a collection of pictures, but pictures that have been manually labeled in advance to tell the machine which digit each one represents.

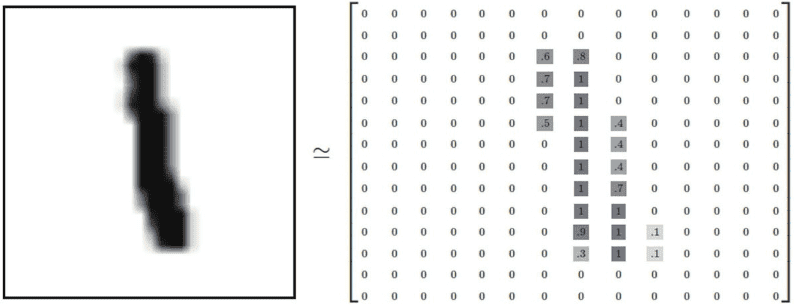

Next, we need a data structure that lets the machine store and process these pictures. For a 14×14 grayscale picture of a digit, black pixels can be represented by 1, white pixels by 0, and gray pixels by a floating-point number between 0 and 1 according to how dark they are. As shown in the following figure, the picture can thus be converted into a two-dimensional array, or tensor.
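A minimal sketch of this conversion in plain Python, assuming the scanner produces 8-bit gray levels where 0 is black and 255 is white (a common convention, but an assumption here). `to_tensor` and `flatten` are hypothetical helper names; flattening the 14×14 grid yields the 196 input values used in the rest of the example.

```python
SIZE = 14  # the example images are 14 x 14 pixels


def to_tensor(gray_levels):
    """Convert a 14x14 grid of 8-bit gray levels (assumed: 0 = black, 255 = white)
    into values where black -> 1.0, white -> 0.0 and gray -> somewhere in between."""
    if len(gray_levels) != SIZE or any(len(row) != SIZE for row in gray_levels):
        raise ValueError("expected a 14x14 image")
    return [[1.0 - p / 255.0 for p in row] for row in gray_levels]


def flatten(tensor):
    """Row-major flattening: 14 rows of 14 values -> one list of 196 inputs."""
    return [value for row in tensor for value in row]


# Hypothetical image: white background with a black vertical stroke in column 7.
image = [[0 if col == 7 else 255 for col in range(SIZE)] for _ in range(SIZE)]
t = to_tensor(image)
x = flatten(t)
print(len(x))  # 196
```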

The machine recognizes which digit a picture shows mainly by finding features of the picture that indicate a particular digit. Humans recognize handwritten digits easily, but find it hard to explain what those features are. The goal of machine learning is to extract, from the pictures in the training set, the features that represent each digit. Following the M-P model, features are extracted by taking a weighted sum of the values of all pixels, and the weight of each pixel for each digit is calculated from how the sample pictures in the training set correspond to their labels. If a pixel provides strong evidence that the picture is not a certain digit, its weight for that digit is set to a negative value; conversely, if a pixel provides strong evidence that the picture is that digit, its weight is set to a positive value. For example, a picture of "0" should not have a black (1) pixel at its center; if one appears, it is negative evidence that lowers the probability that the picture is a 0. In this way, after training and calibration on the dataset, we obtain for each digit 0-9 a distribution of 14×14 (= 196) pixel weights.

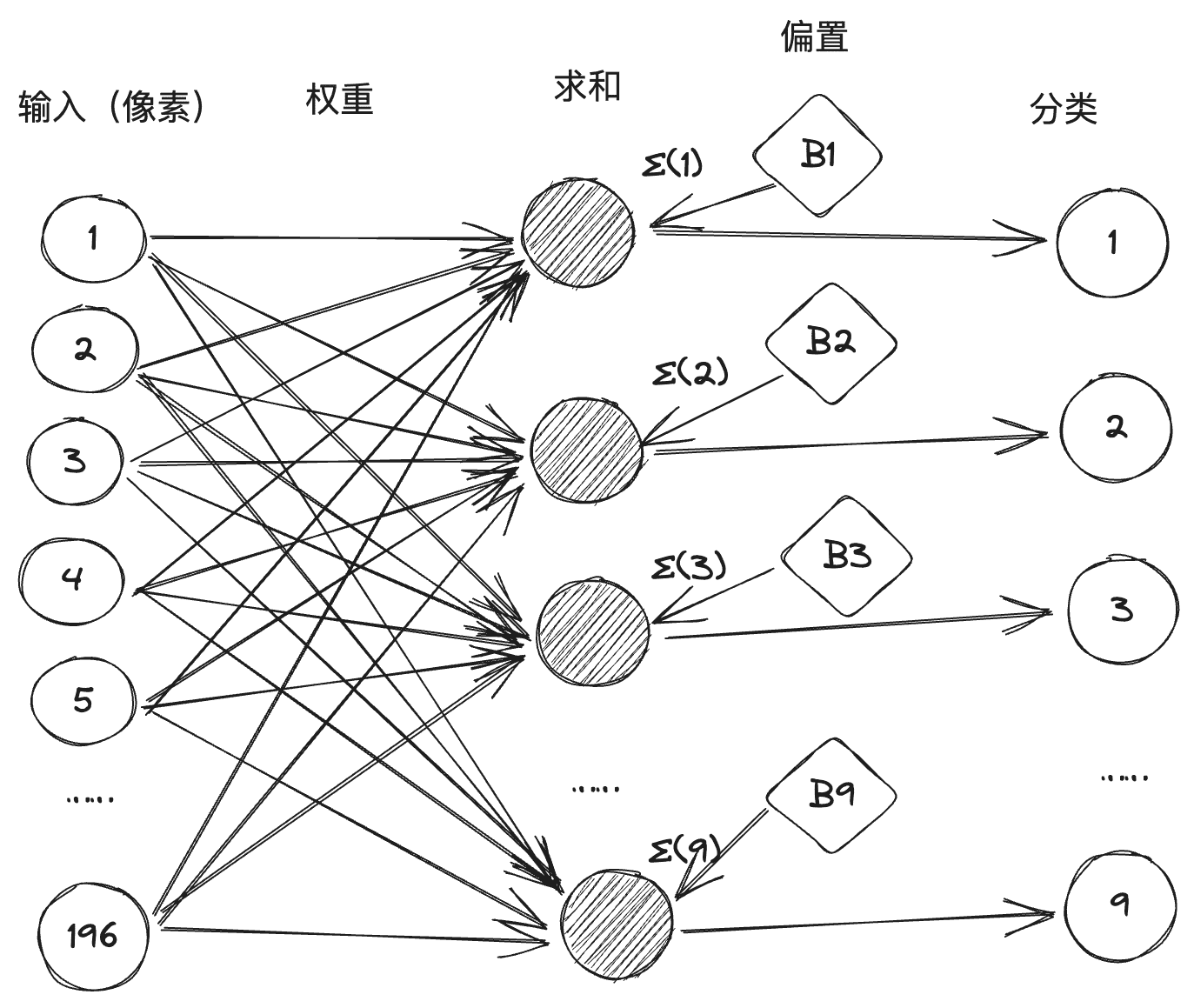

We then turn the classification of each digit into one M-P neuron. Each neuron has 196 pixel inputs, and the weight between each input and the neuron is obtained by training. This forms a neural network of 10 neurons, 196 inputs, and 1,960 weighted connections, as shown below. (In neural networks, the threshold is generally converted into a bias, which becomes one of the terms in the sum, to simplify computation.)

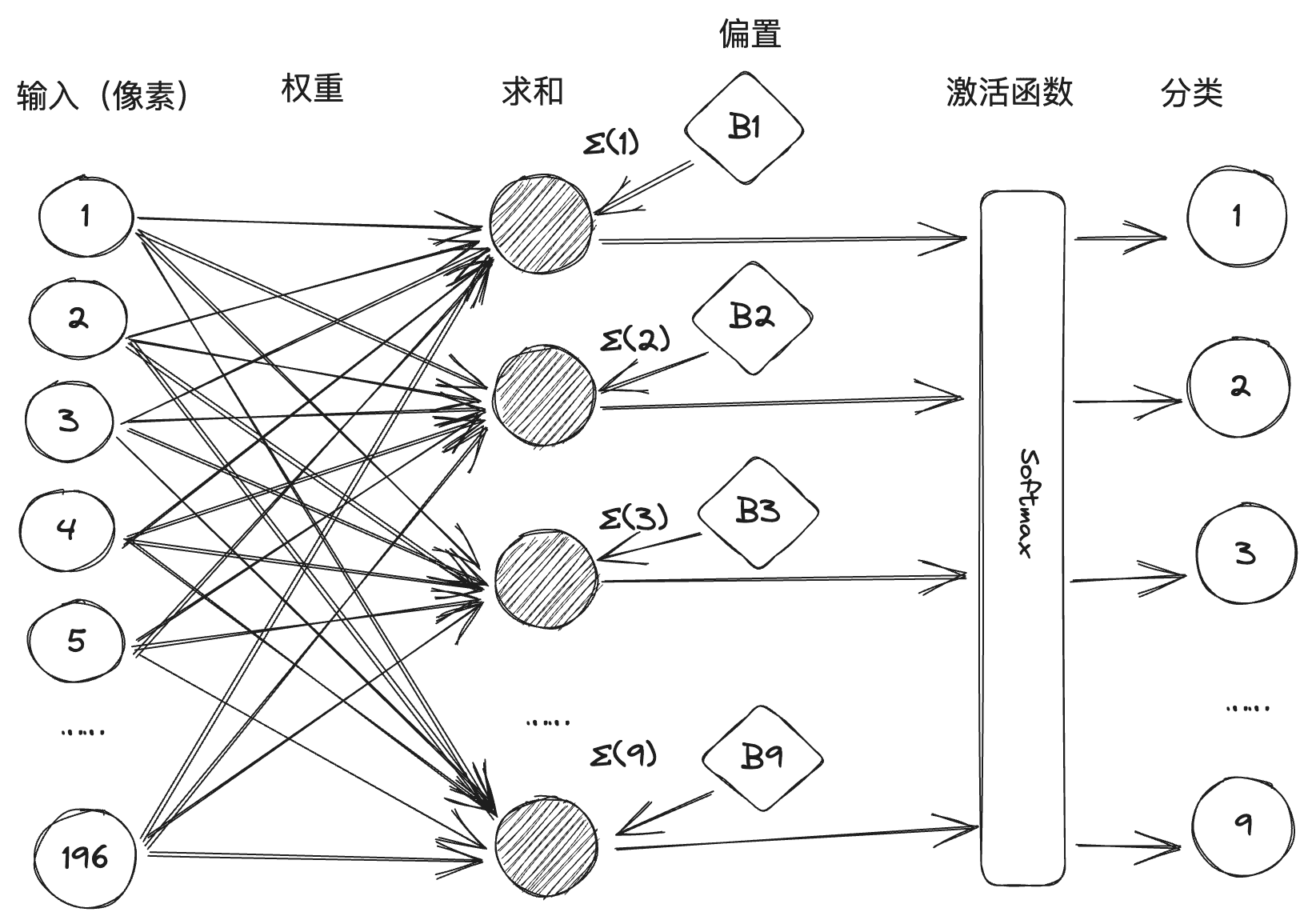
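The network in the figure above can be sketched as a 10×196 weight matrix plus one bias per neuron. This is a hypothetical illustration: the weights below are random rather than trained, so the predicted digit is meaningless, but the shapes match the text (10 neurons, 196 inputs, 1,960 weighted connections). Setting the bias b = -θ folds the threshold θ into the sum, since Σwx ≥ θ is the same test as Σwx + b ≥ 0.

```python
import random

NUM_PIXELS = 14 * 14  # 196 inputs, one per pixel
NUM_DIGITS = 10       # one neuron per digit 0-9

rng = random.Random(0)
# weights[d][i] connects pixel i to the neuron for digit d.
# Random placeholders: a real perceptron would learn these from the training set.
weights = [[rng.uniform(-0.1, 0.1) for _ in range(NUM_PIXELS)] for _ in range(NUM_DIGITS)]
# Each threshold folded into a bias term (bias = -threshold); zero here for simplicity.
biases = [0.0] * NUM_DIGITS


def digit_scores(pixels):
    """Weighted sum plus bias for each of the 10 neurons."""
    return [
        sum(w * p for w, p in zip(weights[d], pixels)) + biases[d]
        for d in range(NUM_DIGITS)
    ]


def classify(pixels):
    """Pick the digit whose neuron has the largest weighted sum."""
    scores = digit_scores(pixels)
    return max(range(NUM_DIGITS), key=lambda d: scores[d])


connections = sum(len(row) for row in weights)
print(connections)  # 1960
print(classify([0.5] * NUM_PIXELS))  # some digit 0-9 (arbitrary, since untrained)
```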

In practice, however, some handwriting is ambiguous, and two or more neurons may be activated after the weighted summation. An activation function is therefore introduced. As shown in the figure below, Softmax serves as the activation function: it processes the summed values so as to suppress classes with low probability and enhance classes with high probability.
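For reference, the standard softmax function turns the weighted sums into probabilities that add up to 1, amplifying the largest score relative to the others. The sketch below is a generic implementation (subtracting the maximum score first is a common numerical-stability trick that does not change the result); the example scores are made up.

```python
import math


def softmax(scores):
    """Map raw scores to probabilities: exp(s_i) / sum_j exp(s_j)."""
    m = max(scores)  # shift by the max so exp() cannot overflow
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up weighted sums for three candidate digits.
probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]
```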

Two years later, Rosenblatt built the world's first hardware perceptron, the "Mark-1", which could recognize letters of the English alphabet and caused a huge sensation at the time. The U.S. Department of Defense and the Navy took notice and provided substantial funding. Rosenblatt's confidence in the perceptron reached its peak: when a reporter asked whether there was anything the perceptron could not do, Rosenblatt answered "love, hope, despair". As Rosenblatt's fame grew, his flamboyant personality made him enemies everywhere, the most famous being another giant of artificial intelligence, Marvin Minsky. Minsky was an organizer of the Dartmouth Conference and one of the founders of AI. In 1969, he published the book Perceptrons, which clearly identified the perceptron's flaws. First, it proved mathematically that a perceptron cannot handle problems that are not linearly separable, such as XOR (exclusive or); second, it argued that the number of connections in a multilayer perceptron grows dramatically with its complexity, and that no suitable training method existed. Minsky won the fourth Turing Award in the year the book was published, and the immense prestige behind his verdict effectively sentenced neural network research to death. Connectionism suffered a heavy blow, and symbolism became the mainstream of AI research.
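Both of Minsky's points can be checked with a small experiment. The sketch below uses Rosenblatt's perceptron learning rule (w ← w + η(t − y)x) in a standard textbook form rather than code from the original sources; all names, and the hand-picked weights of the two-layer network, are hypothetical. The rule learns AND, which is linearly separable, but can never fit XOR, whereas a two-layer network with suitable weights computes XOR easily. What was missing in 1969 was a way to learn such weights.

```python
def step(s):
    return 1 if s > 0 else 0


def neuron(x, weights, bias):
    """Single perceptron unit: step(w . x + b)."""
    return step(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_perceptron(samples, epochs=50, lr=1.0):
    """Rosenblatt's rule: nudge the weights toward each misclassified sample."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            error = target - neuron(x, weights, bias)
            if error:
                mistakes += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # every sample classified correctly
            break
    return weights, bias


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

w, b = train_perceptron(AND_DATA)
and_preds = [neuron(x, w, b) for x, _ in AND_DATA]

w, b = train_perceptron(XOR_DATA)
xor_preds = [neuron(x, w, b) for x, _ in XOR_DATA]


def xor_two_layer(x):
    """Two-layer network with hand-picked weights: XOR = (OR) AND (NAND)."""
    h_or = neuron(x, [1, 1], -0.5)
    h_nand = neuron(x, [-1, -1], 1.5)
    return neuron([h_or, h_nand], [1, 1], -1.5)


print(and_preds)                                # [0, 0, 0, 1]
print(xor_preds == [t for _, t in XOR_DATA])    # False: one layer cannot fit XOR
print([xor_two_layer(x) for x, _ in XOR_DATA])  # [0, 1, 1, 0]
```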

There are two main schools of thought in artificial intelligence, connectionism and symbolism, somewhat like the rival Sword and Qi sects in martial arts novels, which have competed with each other for a long time. Connectionism develops AI by building neural networks modeled on the human brain, storing knowledge in a large number of connections and learning from data. Symbolism holds that knowledge and reasoning should be represented with symbols and rules, that is, with a large number of "if-then" rules from which decisions and inferences are generated, and develops AI based on rules and logic. The former is represented by neural networks, the latter by expert systems.

III. Deep Learning

After the perceptron's failure, government investment in artificial intelligence declined, and AI entered its first winter. By the 1980s, symbolism, represented by expert systems, had become the mainstream of AI and triggered the second wave of AI, while neural network research was left out in the cold. As mentioned earlier, only one person still persisted: Geoffrey Hinton.

Building on the work of his predecessors, Hinton went on to develop the Boltzmann machine and the error backpropagation algorithm. His pioneering contributions brought new life to the field of neural networks. Although the mainstream of AI from the 1980s to the beginning of this century remained knowledge bases and statistical analysis, neural network techniques began to make breakthroughs, with representative examples such as convolutional neural networks (CNNs) and long short-term memory networks (LSTMs). In 2006, Hinton proposed deep belief networks, opening the era of deep learning.

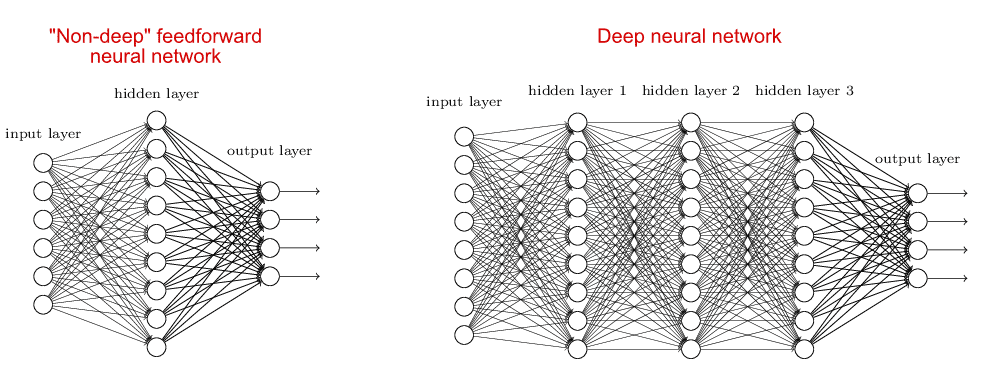

The neural network model used in deep learning is called a deep neural network, as opposed to a shallow neural network. A shallow neural network generally has only one hidden (or middle) layer plus the input and output layers, for a total of three layers. A deep neural network has more than one hidden layer. The two types compare as follows:

Before deep learning, people focused on shallow neural networks because adding layers makes a network harder to train: on the one hand there was not enough computing power, and on the other there were no good algorithms. Hinton's deep belief network solved this training problem by combining error backpropagation with layer-by-layer pre-training. After the deep belief network, deep neural networks became the mainstream model of machine learning; today's popular large models, such as GPT and Llama, are built from one or more deep neural networks.

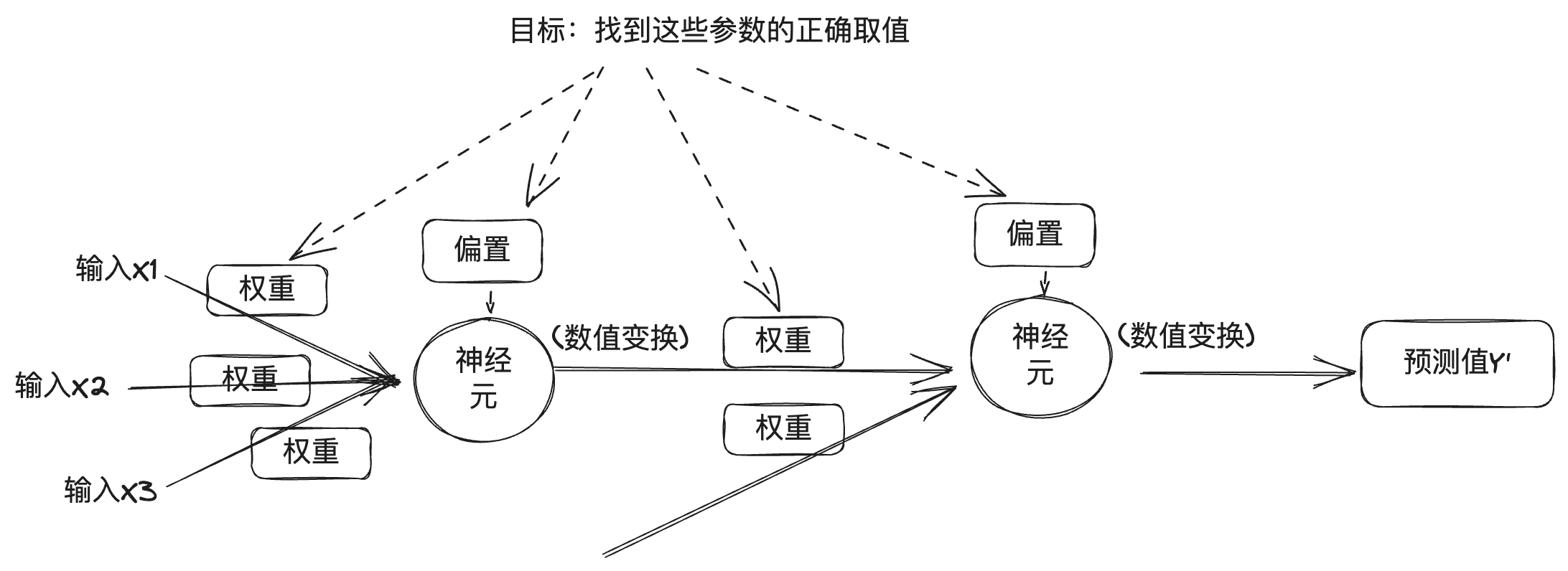

To understand deep neural networks, recall the perceptron principles introduced above and think of a deep neural network as many neurons combined across many layers. From the previous section, the output of each layer depends on its weights, biases, and activation function; the output of the whole network also depends on values such as the number of layers. In deep neural networks these values fall into two categories. The first, including the number of layers, the activation function, and the optimizer, are called hyperparameters and are set by the engineer. The second, the weights and biases, are called parameters and are obtained automatically while the network is trained. Finding the right parameters is the goal of deep learning.

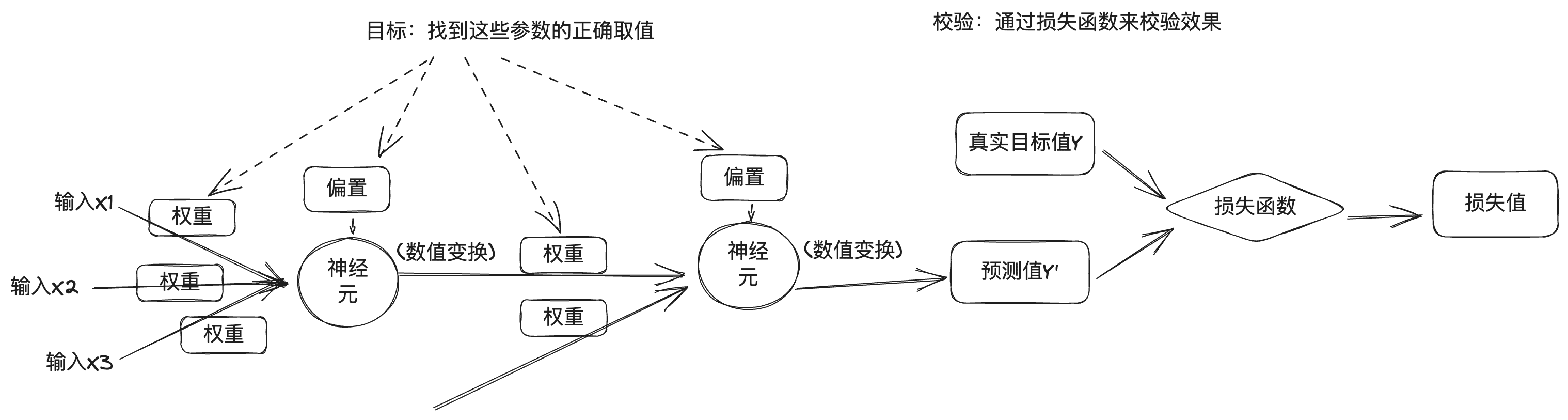

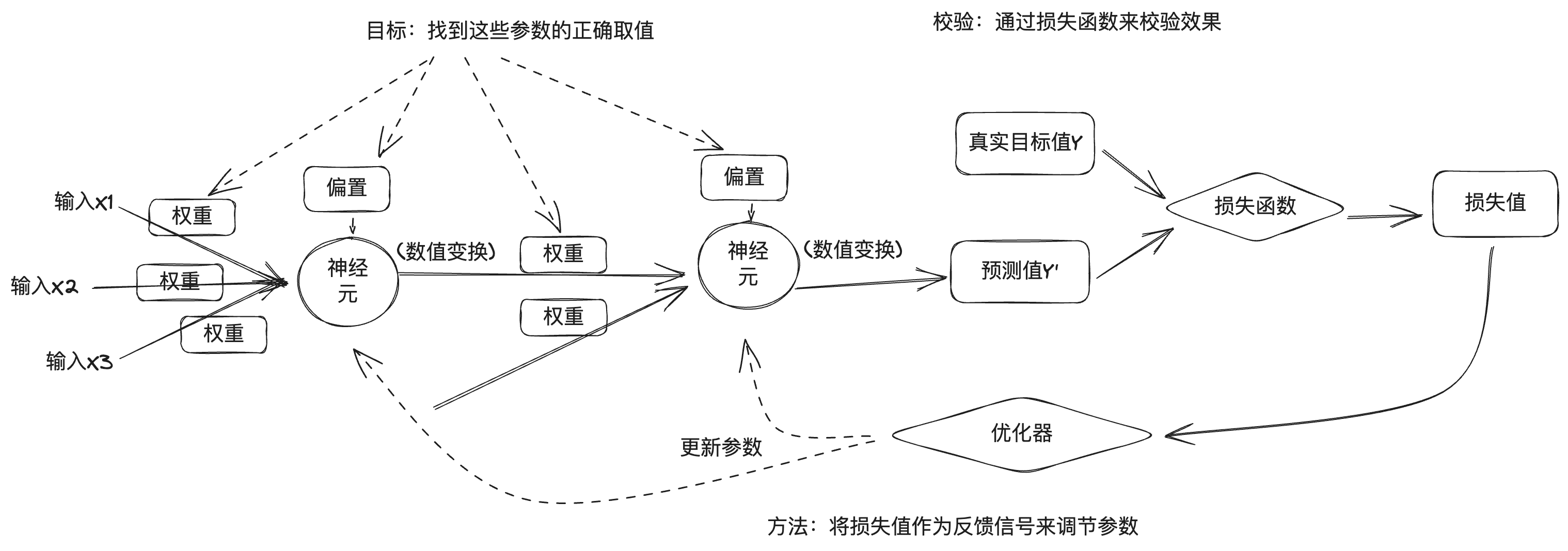
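To make the distinction between hyperparameters and parameters concrete, here is a minimal sketch of a fully connected network in plain Python. The layer sizes and the ReLU activation are illustrative hyperparameters (assumptions, not taken from the text); the weights and biases created by `init_parameters` are the parameters that training would adjust. Counting them shows how quickly the number of parameters grows as layers are added.

```python
import math
import random

# Hyperparameters: chosen by the engineer before training (illustrative values).
LAYER_SIZES = [196, 64, 32, 10]  # input, two hidden layers, output


def relu(v):
    return max(0.0, v)


def init_parameters(layer_sizes, seed=0):
    """Parameters: one weight matrix and one bias vector per layer, randomly initialized."""
    rng = random.Random(seed)
    params = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        params.append((weights, biases))
    return params


def forward(params, x):
    """Pass x through every layer: weighted sum + bias, then ReLU (except at the output)."""
    for i, (weights, biases) in enumerate(params):
        z = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
        x = z if i == len(params) - 1 else [relu(v) for v in z]
    return x


def count_parameters(layer_sizes):
    """Each fully connected layer has n_in * n_out weights plus n_out biases."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


params = init_parameters(LAYER_SIZES)
print(count_parameters([196, 32, 10]))    # 6634: shallow, one hidden layer
print(count_parameters(LAYER_SIZES))      # 15018: deep, two hidden layers
print(len(forward(params, [0.5] * 196)))  # 10 output scores
```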

The problem is that a deep neural network contains a huge number of parameters, and changing one parameter affects the behavior of the others, so finding their correct values is difficult. To find values that make the model's output accurate, we need a way to measure the gap between the model's output and the desired output. Deep learning training therefore uses a loss function, also called an objective function or cost function. The loss function compares the network's predicted value with the true target value to produce a loss value, which indicates how well or badly the model performs on that training sample.
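Two common loss functions are sketched below: cross-entropy, typically paired with softmax outputs for classification, and mean squared error, typically used for regression. The text does not name a specific loss, so these are illustrative choices, and the example probabilities are made up. Note how a confident wrong prediction is penalized far more heavily than a confident correct one.

```python
import math


def cross_entropy(probs, target_index):
    """Classification loss for one sample: -log(probability of the correct class)."""
    return -math.log(probs[target_index])


def mean_squared_error(predicted, actual):
    """Regression loss: average squared gap between predictions and targets."""
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(predicted)


# Hypothetical softmax outputs for a 3-class problem whose true class is 1.
confident_right = cross_entropy([0.05, 0.90, 0.05], target_index=1)
confident_wrong = cross_entropy([0.90, 0.05, 0.05], target_index=1)
print(round(confident_right, 3), round(confident_wrong, 3))  # 0.105 2.996
print(mean_squared_error([1.0, 2.0], [1.0, 4.0]))             # 2.0
```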

Deep learning uses the loss value as a feedback signal to fine-tune the parameters so as to reduce the loss on the current training samples. This tuning is done by an optimizer, which implements an optimization algorithm such as gradient descent and uses backpropagation to update the parameters of the neurons in each layer.
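The core update of gradient descent is w ← w − η · ∂L/∂w: move each parameter a small step (the learning rate η, a hyperparameter) against the gradient of the loss. A one-parameter sketch with a made-up loss L(w) = (w − 3)², whose gradient is 2(w − 3), shows the loss shrinking step by step:

```python
def loss(w):
    return (w - 3.0) ** 2  # made-up loss, minimized at w = 3


def grad(w):
    return 2.0 * (w - 3.0)  # its derivative dL/dw


w = 0.0              # initial parameter value
learning_rate = 0.1  # step size (a hyperparameter)
history = [loss(w)]
for _ in range(100):
    w -= learning_rate * grad(w)  # gradient-descent update
    history.append(loss(w))

print(round(w, 6))  # 3.0
```

In a real network the same update is applied to every weight and bias at once, with backpropagation supplying the gradients.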

At the start, the network's parameters are assigned randomly. A batch of training data is fed through the input layer and hidden layers to the output layer; once the network's predicted output is obtained, the loss value is computed with the loss function. This is forward propagation. Then, starting from the output layer and working back layer by layer to the input layer, the gradient of the loss with respect to each parameter is computed, and the optimization algorithm updates the parameters based on these gradients. This is backward propagation. With each batch of training samples the network processes, the parameters are nudged in the right direction and the loss decreases; this is the training cycle. After enough training cycles, the parameters bring the loss function close to its minimum, yielding a good neural network model.

Of course, real deep learning is much more complex than this; the above is only a brief overview of the general process.
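Putting the pieces together, the sketch below trains a tiny network on XOR, the problem a single-layer perceptron cannot solve. It is a hedged illustration rather than a production implementation: the architecture (2 inputs, 4 tanh hidden units, 1 sigmoid output), the binary cross-entropy loss, the learning rate, and the epoch count are all arbitrary choices. Each training cycle runs forward propagation over the whole batch, computes gradients from the output layer back toward the input (backpropagation), and then applies a gradient-descent update.

```python
import math
import random

XOR_DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]
HIDDEN = 4           # hyperparameter: number of hidden units
LEARNING_RATE = 0.5  # hyperparameter: gradient-descent step size
EPOCHS = 5000        # hyperparameter: number of training cycles

# Parameters, randomly initialized (seeded so runs are repeatable).
rng = random.Random(42)
w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(HIDDEN)]
b1 = [0.0] * HIDDEN
w2 = [rng.uniform(-1, 1) for _ in range(HIDDEN)]
b2 = 0.0


def forward(x):
    """Forward propagation: input -> tanh hidden layer -> sigmoid output."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
    z = sum(w * hj for w, hj in zip(w2, h)) + b2
    return h, 1.0 / (1.0 + math.exp(-z))


def batch_loss():
    """Binary cross-entropy averaged over the batch (clipped to avoid log(0))."""
    total = 0.0
    for x, y in XOR_DATA:
        p = min(max(forward(x)[1], 1e-12), 1 - 1e-12)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(XOR_DATA)


initial_loss = batch_loss()
for _ in range(EPOCHS):
    g_w1 = [[0.0, 0.0] for _ in range(HIDDEN)]
    g_b1 = [0.0] * HIDDEN
    g_w2 = [0.0] * HIDDEN
    g_b2 = 0.0
    for x, y in XOR_DATA:
        h, y_hat = forward(x)
        d_out = y_hat - y  # dLoss/dz for a sigmoid output with cross-entropy loss
        g_b2 += d_out
        for j in range(HIDDEN):
            g_w2[j] += d_out * h[j]
            d_hidden = d_out * w2[j] * (1 - h[j] ** 2)  # chain rule through tanh
            g_b1[j] += d_hidden
            for i in range(2):
                g_w1[j][i] += d_hidden * x[i]
    # Gradient-descent update using the batch-averaged gradients.
    n = len(XOR_DATA)
    b2 -= LEARNING_RATE * g_b2 / n
    for j in range(HIDDEN):
        w2[j] -= LEARNING_RATE * g_w2[j] / n
        b1[j] -= LEARNING_RATE * g_b1[j] / n
        for i in range(2):
            w1[j][i] -= LEARNING_RATE * g_w1[j][i] / n

final_loss = batch_loss()
print(f"loss: {initial_loss:.3f} -> {final_loss:.3f}")
print([round(forward(x)[1], 2) for x, _ in XOR_DATA])
```

With settings like these the loss typically falls close to zero and the outputs approach [0, 1, 1, 0], although an unlucky initialization can stall in a poor local minimum.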

In 2012, Hinton and two of his students, Alex Krizhevsky and Ilya Sutskever, developed the AlexNet neural network, which won the ImageNet image recognition competition with an accuracy far higher than the runner-up's. Hinton and his students then founded DNNResearch, Inc. to focus on deep neural networks. The company had no products or assets, but AlexNet's success attracted several Internet giants. In the winter of 2012, a secret auction took place at Lake Tahoe, on the California-Nevada border: the item for sale was the newly founded DNNResearch, and the bidders were Google, Microsoft, DeepMind, and Baidu. In the end, while Google and Baidu were still bidding up the price, Hinton stopped the auction and sold the company to Google for $44 million. In 2014, Google acquired DeepMind. In 2016, AlphaGo, which combines classical Monte Carlo tree search with deep neural networks, defeated Lee Sedol; the following year it defeated Ke Jie, the world's top-ranked Go player, pushing artificial intelligence and deep learning to new heights.

IV. Large Models

In 2015, Elon Musk, Stripe CTO Greg Brockman, Y Combinator president Sam Altman, Ilya Sutskever, and others met at the Rosewood Hotel in California to discuss creating an AI lab to counter large Internet companies' control over AI technology. Greg Brockman then recruited a group of researchers from Google, Microsoft, and other companies to form a new lab called OpenAI, with Greg Brockman, Sam Altman, and Ilya Sutskever serving as OpenAI's Chairman, CEO, and Chief Scientist, respectively.

Musk and Sam Altman originally envisioned OpenAI as a non-profit organization that would open up AI technology to everyone, as a way to counter the dangers of large Internet companies controlling it. Because deep learning was advancing explosively, no one could predict whether the technology might one day threaten humanity, and openness might be the best safeguard. Later, in 2019, OpenAI set up a for-profit subsidiary to finance its technical development and stopped open-sourcing its core technology, but that is getting ahead of the story.

In 2017, Google engineers published the paper "Attention Is All You Need", which proposed the Transformer neural network architecture, whose key feature is bringing a mechanism modeled on human attention into neural networks. The image recognition described earlier is one deep learning scenario, in which each image is an independent piece of data unrelated to the others. Real life involves another scenario as well: processing time-ordered data. In text, for example, meaning depends on the surrounding context, and speech and video are likewise time-ordered. Such ordered data is called a sequence, and practical tasks often turn one sequence into another: translation turns a paragraph of Chinese into a paragraph of English, and a chatbot turns a question into an intelligently generated answer. Hence the use of a converter, or "Transformer", which is where the name comes from. As mentioned earlier, whether a neuron fires is determined by the weighted sum of its inputs, where each weight represents the strength of a connection. In sequence data, each element likewise carries a different weight, which matches everyday experience. Consider the following passage:

Research has shown that the order of Chinese characters does not always affect reading. For example, only after finishing this sentence do you realize that its characters are all jumbled.

This holds not only for Chinese but also for other human languages such as English, because our brain automatically assigns weights to the words in a sentence and picks out the key points amid redundant information: this is attention. Google's engineers brought the attention mechanism into neural network models for natural language processing, so that machines can "understand" the intent of human language. Subsequently, OpenAI released GPT-1, based on the Transformer architecture, in 2018, GPT-2 in 2019, and GPT-3 in 2020. At the end of 2022 it released ChatGPT, an AI Q&A program based on GPT-3.5, which amazed people with its conversational ability and took AI a big step toward AGI.

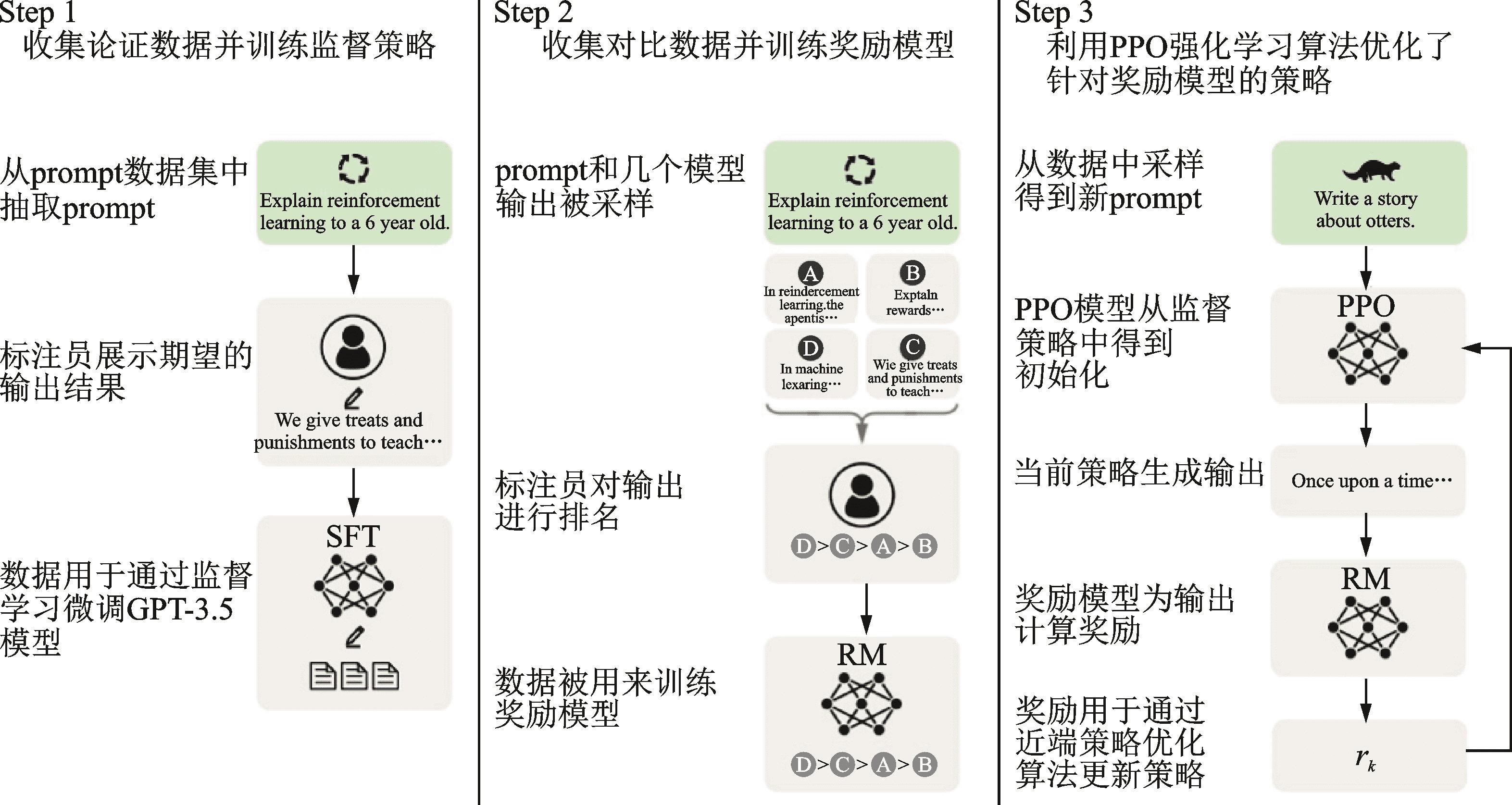
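The attention computation introduced by the Transformer paper, softmax(QKᵀ/√d)·V, can be sketched for a toy sequence in plain Python. This is a simplified illustration under stated assumptions: a real Transformer first derives the queries, keys, and values from token embeddings with learned projection matrices and runs several attention heads in parallel, both of which are omitted here, and the vectors below are made-up numbers.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attention(queries, keys, values):
    """Scaled dot-product attention: each output is a weighted average of the value
    vectors, with weights softmax(q . k / sqrt(d)) over all keys."""
    d = len(keys[0])
    outputs, weight_rows = [], []
    for q in queries:
        weights = softmax([dot(q, k) / math.sqrt(d) for k in keys])
        out = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
        outputs.append(out)
        weight_rows.append(weights)
    return outputs, weight_rows


# Made-up 2-D vectors for a 3-token sequence; the query points in the same
# direction as key 0, so token 0 should receive the most attention.
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
outputs, weight_rows = attention([[2.0, 0.0]], keys, values)
print([round(w, 3) for w in weight_rows[0]])
print([round(v, 2) for v in outputs[0]])
```

The attention weights play the role of the "importance" the brain assigns to each word: tokens whose keys match the query contribute most to the output.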

GPT stands for Generative Pre-trained Transformer: "Generative" indicates its ability to generate new content, "Transformer" is its underlying architecture, and "Pre-trained" in the middle refers to its training method. Why pre-trained? Starting with AlexNet, people used ever larger datasets and more parameters in neural network training to achieve better results, which made training increasingly resource-intensive and time-consuming. That cost is high for training a single specific task, and somewhat wasteful, since the result cannot be shared with other neural networks. The industry therefore adopted a pre-training + fine-tuning approach: first train a general-purpose large model on a large dataset, then fine-tune it with a smaller dataset for a specific task. ChatGPT is trained with reinforcement learning from human feedback (RLHF), which has three steps: first, pre-train a language model (LM); second, collect Q&A data and train a reward model (RM); third, fine-tune the language model with reinforcement learning (RL). Because the reward model captures human feedback, the process is called RLHF.

Besides ChatGPT's accuracy, users are also impressed by its ability to hold multi-turn conversations. As the discussion of how neural networks work shows, each inference passes from the input through the weighting and activation of every neuron to the output, with no memory in between. ChatGPT handles multi-turn conversations well because its conversation management uses prompt engineering techniques.

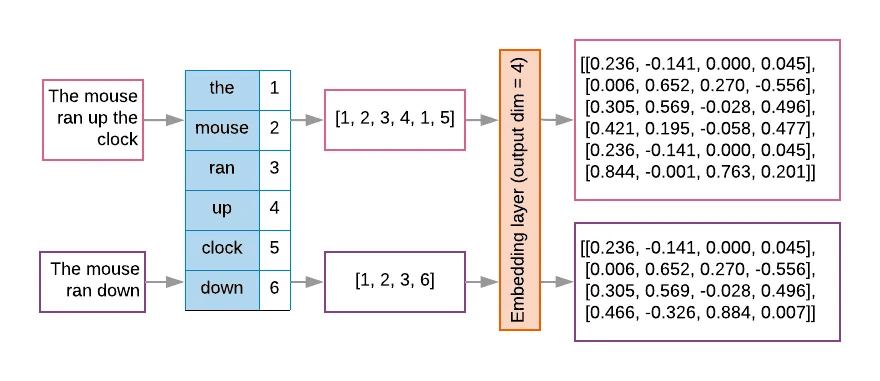

For a large language model such as ChatGPT, the input is a sequence of tokens converted from a string of text, and because of computational efficiency and memory constraints, large models generally have a fixed context window that limits the number of input tokens. The text is first split into tokens by the tokenizer, each token is mapped to an ID through a lookup table, and the IDs are then embedded as high-dimensional vectors via an embedding matrix. This is text vectorization, as shown in the following figure.
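A toy version of this pipeline is sketched below. It is deliberately simplified and hypothetical: real models such as GPT use learned subword tokenizers (for example, byte-pair encoding) rather than splitting on spaces, and their embedding matrices are learned during training instead of filled with random numbers. The steps, however, are the same: split the text into tokens, map each token to an ID through a lookup table, then use each ID to select a row of the embedding matrix.

```python
import random


def build_vocab(corpus):
    """Lookup table: give each distinct (lower-cased) word an integer ID; 0 is reserved for unknown words."""
    vocab = {"<unk>": 0}
    for text in corpus:
        for token in text.lower().split():
            vocab.setdefault(token, len(vocab))
    return vocab


def tokenize(text, vocab):
    """Split text into tokens and map each one to its ID."""
    return [vocab.get(token, vocab["<unk>"]) for token in text.lower().split()]


def embed(token_ids, table):
    """Embedding lookup: each ID selects one row (vector) of the embedding matrix."""
    return [table[i] for i in token_ids]


vocab = build_vocab(["attention is all you need"])
EMBED_DIM = 4  # real models use hundreds or thousands of dimensions
rng = random.Random(0)
embedding_table = [[rng.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in range(len(vocab))]

ids = tokenize("Attention is what you need", vocab)
vectors = embed(ids, embedding_table)
print(ids)  # [1, 2, 0, 4, 5]  ("what" is unknown, so it maps to <unk> = 0)
```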

Because the number of tokens is limited, prompt engineering techniques are needed to give the model more complete information within the limited context window. Prompt engineering uses a range of strategies to optimize the model's input so that its output better matches expectations.
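One simple conversation-management strategy consistent with this description is to resend the whole conversation history with every request and, when it no longer fits the context window, drop the oldest turns first. The sketch below is hypothetical: it is not ChatGPT's actual implementation, the `build_prompt` helper and the role labels are illustrative names, and token counts are approximated by counting words, whereas a real system would count tokens with the model's own tokenizer.

```python
def count_tokens(text):
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def build_prompt(system, history, user_message, max_tokens):
    """Concatenate the conversation into one prompt, dropping the oldest turns
    until it fits into a context window of max_tokens (hypothetical limit)."""
    turns = list(history) + [("user", user_message)]
    while True:
        lines = [f"system: {system}"] + [f"{role}: {text}" for role, text in turns]
        prompt = "\n".join(lines)
        # Stop when it fits, or when only the newest message is left
        # (a real system would then have to truncate or reject it).
        if count_tokens(prompt) <= max_tokens or len(turns) == 1:
            return prompt
        turns.pop(0)  # forget the oldest turn first


history = [
    ("user", "hi there"),
    ("assistant", "hello how can I help"),
    ("user", "what is a perceptron"),
    ("assistant", "a single layer linear classifier"),
]
print(build_prompt("be concise", history, "and an MLP?", max_tokens=20))
```

Because the model itself is stateless, this re-sent history is its only "memory"; the earliest turns are the first to be forgotten once the window fills up.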

Behind ChatGPT's success lies the technical evolution of large models, represented by GPT. OpenAI believes that brute force works wonders and has kept scaling up GPT's parameters: GPT-1 had 117 million parameters, GPT-2 1.5 billion, GPT-3 175 billion, and GPT-4 reportedly about 1.8 trillion. More parameters also require more computing power to train. OpenAI accordingly summarized the "scaling law": model performance depends on model size, data volume, and compute, so, put simply, the larger the model, the more data, and the more compute, the better the model performs. Rich Sutton, the father of reinforcement learning, made a similar point in his essay "The Bitter Lesson". Reviewing decades of AI development, he concluded that in the short term people keep trying to improve agents by building in human knowledge, but in the long term, powerful computation wins.
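The scaling-law paper OpenAI published in 2020 (Kaplan et al.) expresses this relationship as empirical power laws. In simplified form: when the other two factors are not the bottleneck, the test loss L falls as a power of the parameter count N, the dataset size D, or the training compute C. Here N_c, D_c, C_c and the exponents α are constants fitted to experiments (their values are not given in this article), and the last line shows what the power law implies when the model size is doubled.

```latex
L(N) \approx \left(\frac{N_c}{N}\right)^{\alpha_N}, \qquad
L(D) \approx \left(\frac{D_c}{D}\right)^{\alpha_D}, \qquad
L(C) \approx \left(\frac{C_c}{C}\right)^{\alpha_C}

\frac{L(2N)}{L(N)} = \left(\frac{N}{2N}\right)^{\alpha_N} = 2^{-\alpha_N} < 1
```

Since the fitted exponents are small, each doubling buys only a modest, constant-factor reduction in loss, which is why progress along this path demands ever larger jumps in scale.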

As large models grew, their abilities shifted from quantitative to qualitative change, which Google Chief Scientist Jeff Dean has called the "emergent abilities" of large models. The market has seized the opportunity: major vendors are locked in an arms race of investment in large models, while the open-source large-model ecosystem is also booming.

V. Hugging Face

In 2016, three Frenchmen, Clément Delangue, Julien Chaumond, and Thomas Wolf, founded a company called Hugging Face, with the hugging-face emoji as its logo. Hugging Face started out developing chatbots for young people. Along the way, it built and open-sourced some model training tools, and later it even shifted its focus to those tools. This seemingly "unfocused" move put the company on a new track and made it an indispensable player in deep learning.

Many Silicon Valley companies made their name through a side project. Slack, for example, originally developed games; its team was spread across many locations, and the communication tool it built for its own use unexpectedly took off, becoming Slack. Hugging Face's pivot was similar, also born of solving its own pain points. In 2018, Google released the large model BERT, and Hugging Face engineers implemented BERT in PyTorch, the framework they knew best, named the model pytorch-pretrained-bert, and open-sourced it on GitHub. With the community's help, models such as GPT, GPT-2, and Transformer-XL were added, and the project was renamed. The deep learning field has long been contested between two frameworks, PyTorch and TensorFlow, and researchers often switch between them to compare their strengths and weaknesses, so the project added support for switching between the two, and its name was changed once more, to Transformers. Transformers has become one of the fastest-growing projects on GitHub.

Hugging Face went on to develop and open-source a series of other machine learning tools: Datasets, Tokenizers, Diffusers, and more. These tools also standardized the AI development process. Before Hugging Face, AI development was arguably dominated by researchers, with no standardized engineering approach. Hugging Face provides a comprehensive AI toolset and has established a set of de facto standards, allowing more AI developers, and even non-AI practitioners, to get started quickly and train models.

Hugging Face then launched the Hugging Face Hub, built on Git and Git LFS, for hosting models, datasets, and AI applications; to date, 350,000 models, 75,000 datasets, and 150,000 sample AI applications are hosted on the platform. Hosting and open-sourcing models and datasets and building a global open-source repository is creative, far-reaching work. While the pre-training + fine-tuning approach mentioned above lets neural network training resources be shared, the Hugging Face Hub goes a step further, allowing AI developers to easily reuse the world's state-of-the-art results and build on them, democratizing the use and development of AI for everyone. Hugging Face has become the GitHub of AI, or, as its slogan puts it: "The AI community building the future." I have previously written two articles: "A Code Commit That Changed the World", which introduces Git, and "The Road from Zero to Tens of Billions of Dollars", which introduces GitHub. Git, GitHub, Hugging Face: I think there is a lineage connecting them, a hacker legacy of changing the world and building the future, and that is one of the things that prompted me to write this post.

VI. Postscript

As I was finishing this article, I watched a talk Hinton recently gave at the University of Oxford. In it, Hinton described two major schools of thought in artificial intelligence. He called the first the logical approach, or symbolism. He called the second the biological approach, or connectionism, which uses neural networks to simulate the human brain. In his view, the biological approach has proven a clear winner over the logical one. Neural networks are models designed to mimic how the human brain understands, and large models, he argues, work and understand much as the brain does. Hinton believes that artificial intelligence surpassing the human brain will emerge, and much sooner than we expect.

Appendix 1: Chronology of Events

In 1943, McCulloch and Pitts published the M-P neuron model. It used mathematical logic to describe and simulate the computational units of the human brain and introduced the concept of the neural network.

The term "artificial intelligence" was first coined at the Dartmouth Conference in 1956.

In 1957, Rosenblatt proposed the perceptron model. Two years later he built the Mark I, a hardware perceptron capable of recognizing letters of the English alphabet.

In 1969, Minsky published Perceptrons. The limitations of the perceptron identified in the book dealt a heavy blow to research on perceptrons and on neural networks in general.

In 1983, Hinton and Terry Sejnowski invented the Boltzmann machine.

In 1986, Rumelhart, Hinton, and Williams published the error backpropagation algorithm for training multilayer neural networks, bringing it into widespread use.

In 1989, Yann LeCun invented the Convolutional Neural Network (CNN).

In 2006, Hinton proposed the Deep Belief Network, ushering in the era of deep learning.

In 2012, deep learning began to be taken seriously by industry when AlexNet, designed by Hinton and two of his students, won the ImageNet competition by a wide margin.

In 2015, Google-owned DeepMind developed AlphaGo, which went on to defeat Lee Sedol in 2016 and Ke Jie in 2017. OpenAI was founded the same year.

Hugging Face was founded in 2016.

In 2017, Google published the Transformer model paper.

In 2018, OpenAI released GPT-1, based on the Transformer architecture. Hugging Face released the project that later became Transformers, initially named pytorch-pretrained-bert.

In 2019, OpenAI released GPT-2.

In 2020, OpenAI released GPT-3. Hugging Face launched the Hugging Face Hub.

In 2022, OpenAI released ChatGPT.

Appendix 2: References

Books:

The Frontiers of Intelligence: From Turing Machines to Artificial Intelligence, Zhiming Zhou (author), China Machine Press, October 2018

The Deep Learning Revolution, Cade Metz (author), Shuguang Du (translator), CITIC Press, January 2023

Deep Learning with Python (2nd Edition), François Chollet (author), Liang Zhang (translator), People's Posts and Telecommunications Press, August 2022

An Introduction to Deep Learning: Theory and Implementation Based on Python, Koki Saitoh (author), Yujie Lu (translator), People's Posts and Telecommunications Press, 2018

Advanced Deep Learning: Natural Language Processing, Koki Saitoh (author), Yujie Lu (translator), People's Posts and Telecommunications Press, October 2020

This Is ChatGPT, Stephen Wolfram (author), WOLFRAM Media Chinese Localization Team (translator), People's Posts and Telecommunications Press, July 2023

Generative Artificial Intelligence, Ding Lei (author), CITIC Press, May 2023

Hugging Face Natural Language Processing Explained, Li Fulin (author), Tsinghua University Press, April 2023

Articles:

"An Introduction to Neural Networks", Ruan Yifeng

"2012: The 180 Days That Changed the Fate of Humanity", Yuanchuan Research Institute

"A History of the Evolution of the GPT Family", MetaPost

"Transformer: Attention Is All You Need", Zhihu

"The Evolution of Pre-trained Language Models"

"Prompt Engineering Guide"

"RLHF Explained: The Hero Behind ChatGPT"

"Development and Application of ChatGPT Large Model Techniques"

"The Bitter Lesson", Rich Sutton

"An Interview with the Hugging Face CTO: The Rise of Open Source, Startup Stories, and the Democratization of AI"

© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
