HeyGem: Silicon Intelligence's Open-Source HeyGen Alternative for Digital Human Cloning

General Introduction

HeyGem is a fully offline video compositing tool for Windows, developed by the GuijiAI team and open-sourced on GitHub. It uses AI algorithms to clone a user's appearance and voice accurately, producing realistic avatars, and it generates personalized videos driven by text or voice. The tool does not need an Internet connection: all processing happens locally, which protects user privacy and security. HeyGem supports scripts in eight languages (including English, Japanese, Korean, and Chinese), has a simple and intuitive interface that users without a technical background can pick up quickly, and provides an open API so developers can extend its functionality. A few months earlier, Silicon Intelligence also open-sourced DUIX, a mobile digital human (see "DUIX: Real-time interactive intelligent digital humans with multi-platform one-click deployment support").

HeyGem official download address: https://heygem.ai/

Function List

- Precise appearance and voice cloning: AI technology captures facial features and vocal details to generate high-fidelity avatars and voices with support for parameter adjustment.

- Text-driven avatar: after you enter text, the tool automatically generates natural speech and drives the avatar with synchronized lip movements and expressions.

- Voice-driven video production: Generate dynamic videos by controlling the tone and rhythm of the avatar through user voice input.

- Fully offline operation: No network connection is required and all data is processed locally for privacy and security.

- Multi-language support: Eight language scripts are supported: English, Japanese, Korean, Chinese, French, German, Arabic and Spanish.

- Efficient video compositing: Intelligent optimization of audio and video synchronization ensures a natural match between lip shape and voice.

- Open API: provides APIs for model training and video compositing so that developers can customize and extend features.

How to Use

Installation process

The following installation process strictly follows the official instructions, retaining the original text and image addresses:

Prerequisites

- Must have a D drive.: Mainly for storing digital images and project data

- Free space requirement: more than 30GB

- C Disk: Used to store service image files

- Free space requirement: greater than 100GB

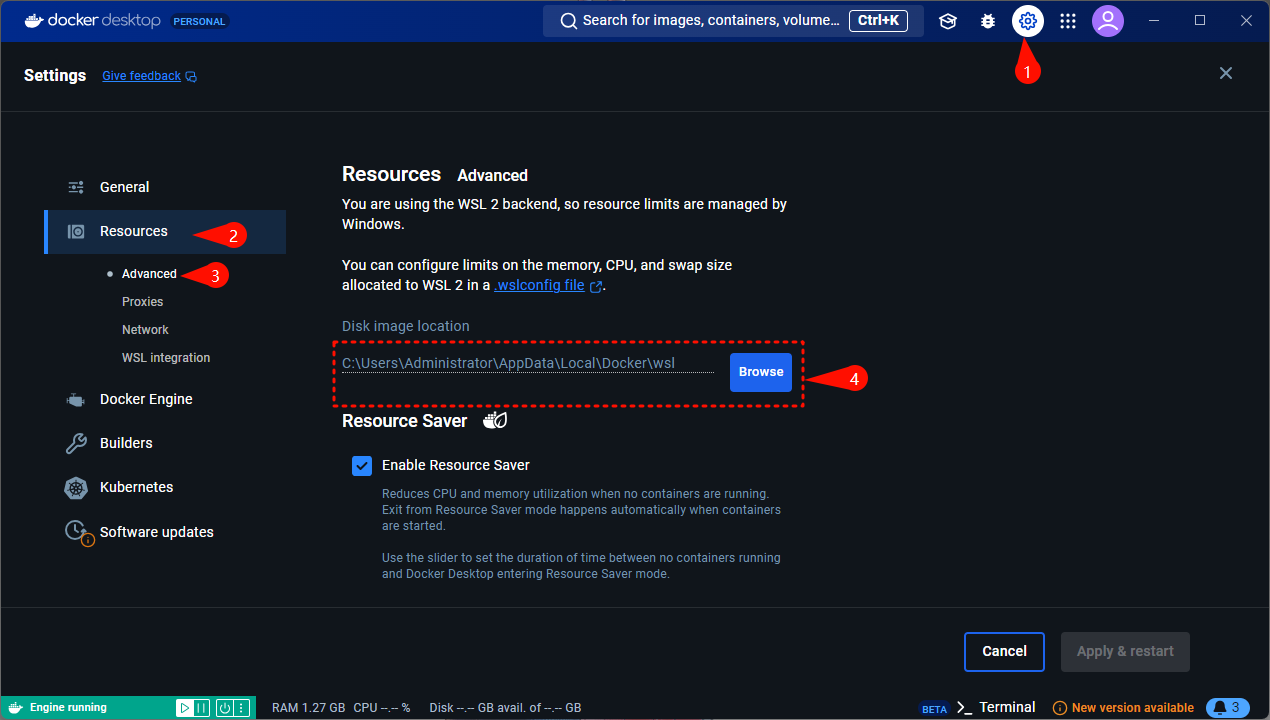

- If you have less than 100GB of free space, after installing Docker, you can select a disk folder with more than 100GB of free space in the location shown below:

- system requirements::

- Current support for Windows 10 19042.1526 or later

- Recommended Configurations::

- CPU: 13th Gen Intel Core i5-13400F

- Memory: 32GB

- Graphics card: RTX-4070

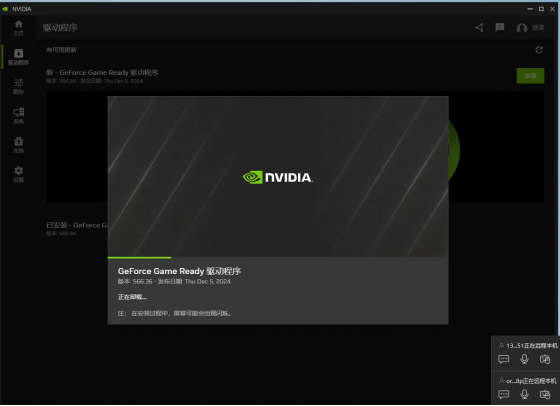

- Ensure that you have an NVIDIA graphics card and that the drivers are installed correctly.

- NVIDIA driver download link: https://www.nvidia.cn/drivers/lookup/

- NVIDIA driver download link: https://www.nvidia.cn/drivers/lookup/
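Before installing, you can check the free-space requirements above with a short script. This is a minimal sketch, not part of HeyGem: it assumes decimal gigabytes (10^9 bytes) and the drive roots `C:\` and `D:\`, and the helper names `free_gb` and `check_drives` are hypothetical.

```python
import shutil

# Minimum free space per drive, from the prerequisites (assumed to be decimal GB).
MIN_FREE_GB = {"C:\\": 100, "D:\\": 30}


def free_gb(drive: str) -> float:
    """Free space on `drive` in GB (10**9 bytes)."""
    return shutil.disk_usage(drive).free / 10**9


def check_drives(free_by_drive: dict, minimums: dict = MIN_FREE_GB) -> list:
    """Return human-readable problems; an empty list means every drive qualifies."""
    problems = []
    for drive, minimum in minimums.items():
        free = free_by_drive.get(drive)
        if free is None:
            problems.append(f"{drive} not found")
        elif free <= minimum:  # the requirement is strictly "more than"
            problems.append(f"{drive} has {free:.1f} GB free, needs more than {minimum} GB")
    return problems


if __name__ == "__main__":
    measured = {}
    for drive in MIN_FREE_GB:
        try:
            measured[drive] = free_gb(drive)
        except OSError:
            pass  # drive does not exist on this machine; reported as "not found"
    for problem in check_drives(measured):
        print("WARNING:", problem)
```

If only the C drive falls short, the Docker storage-location setting mentioned above is the intended workaround.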

Installing Windows Docker

- Using commands

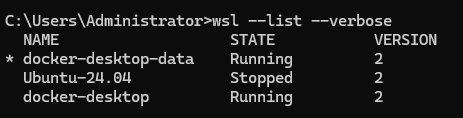

wsl --list --verboseCheck to see if WSL is already installed. the image below shows that it is installed and does not need to be reinstalled:

- WSL installation commands:

wsl --install - May fail due to network problems, please try several times

- Set up and memorize a new username and password during the installation process

- WSL installation commands:

- utilization

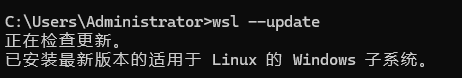

wsl --updateUpdate WSL:

- Download Docker for Windows and choose an installer that fits your CPU architecture.

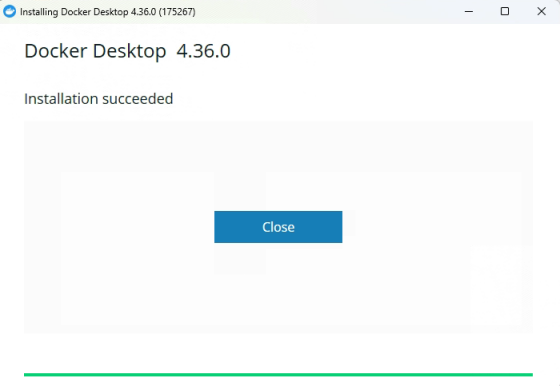

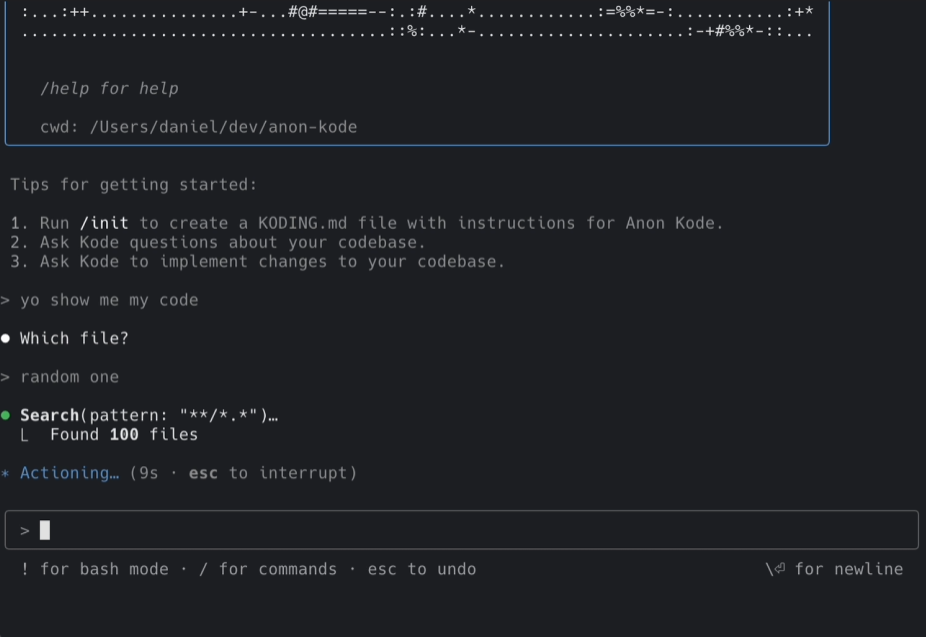

- This screen indicates successful installation:

- Run Docker:

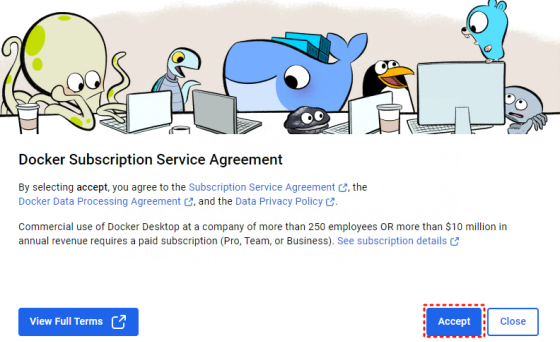

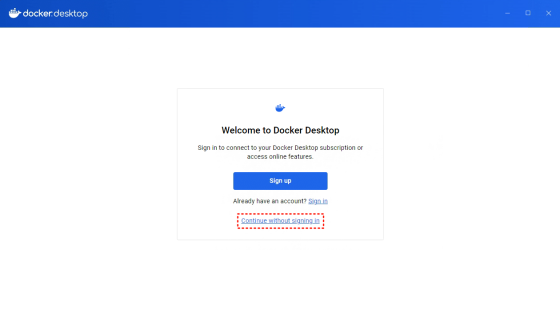

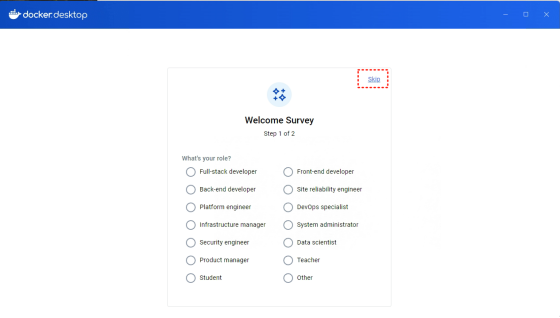

- Accepts protocol and skips login on first run:

Installing the Server

Install the following using Docker and docker-compose:

docker-compose.ymlThe file is located in the/deployCatalog.- exist

/deploydirectory to execute thedocker-compose up -dThe - Wait patiently (about half an hour, depending on the speed of the Internet), the download will consume about 70GB of traffic, please make sure to use WiFi.

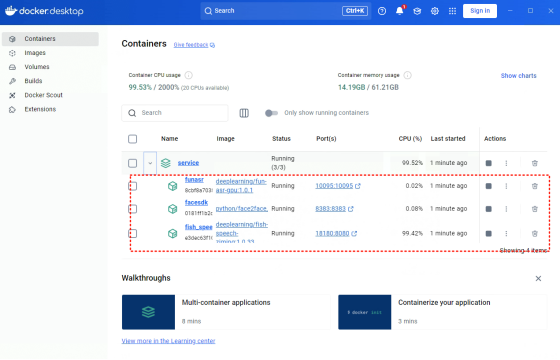

- Success is indicated when three services are seen in Docker:

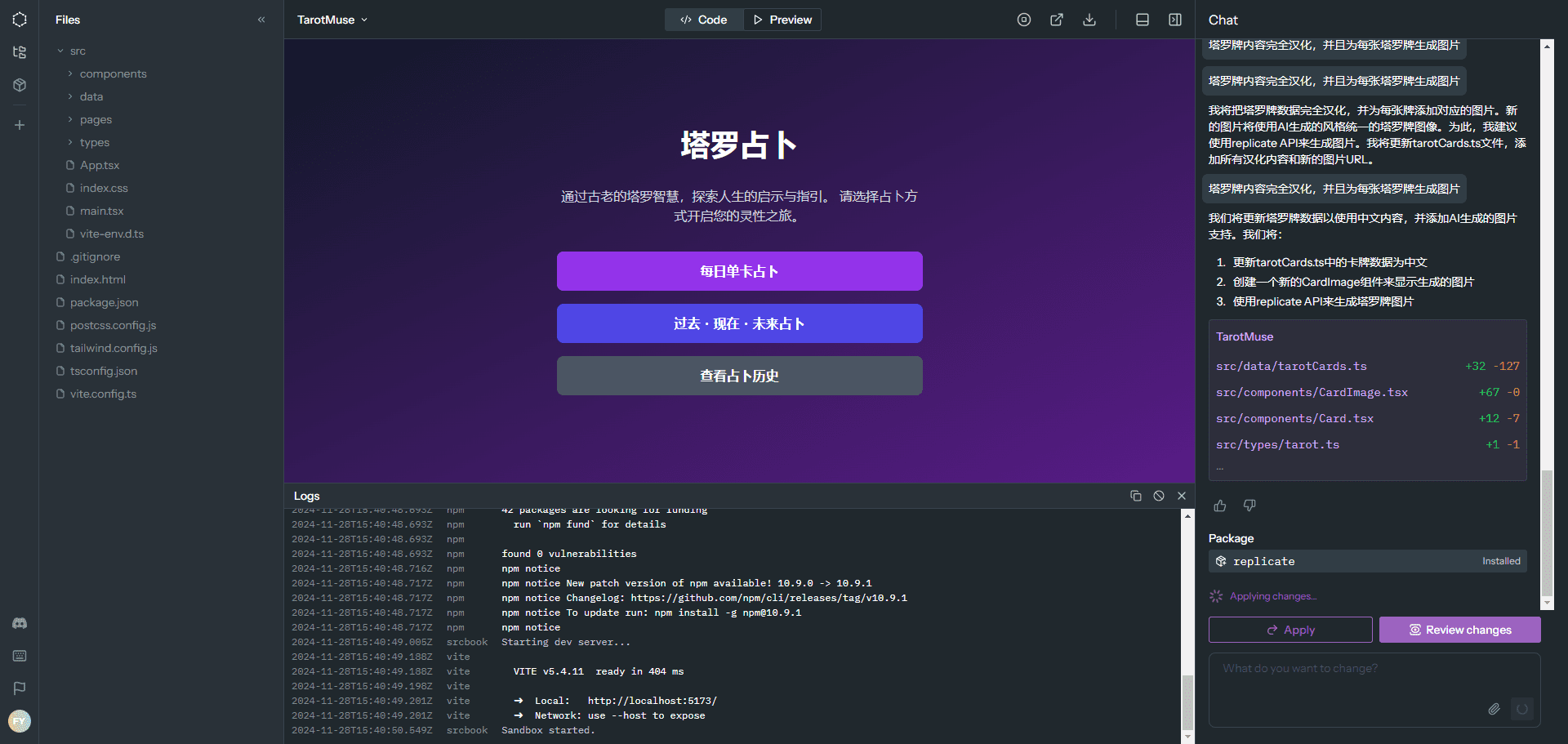
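To confirm the three services from the command line instead of the Docker Desktop window, a sketch like the following parses the output of `docker ps --format "{{.Image}}"`. It assumes (the article does not say so) that the three services run the three images listed under Dependencies; adjust `EXPECTED_IMAGES` if your `docker-compose.yml` uses different tags. The helper names are hypothetical.

```python
import subprocess

# Assumption: the three services use the images listed under "Dependencies".
EXPECTED_IMAGES = {
    "guiji2025/fun-asr:1.0.1",
    "guiji2025/fish-speech-ziming:1.0.39",
    "guiji2025/heygem.ai:0.0.7_sdk_slim",
}


def running_images(docker_ps_output: str) -> set:
    """Parse `docker ps --format {{.Image}}` output (one image reference per line)."""
    return {line.strip() for line in docker_ps_output.splitlines() if line.strip()}


def missing_services(docker_ps_output: str) -> set:
    """Expected images that do not appear among the running containers."""
    return EXPECTED_IMAGES - running_images(docker_ps_output)


if __name__ == "__main__":
    try:
        out = subprocess.run(
            ["docker", "ps", "--format", "{{.Image}}"],
            capture_output=True, text=True, check=True,
        ).stdout
    except (OSError, subprocess.CalledProcessError) as exc:
        print("Could not query Docker:", exc)
    else:
        missing = missing_services(out)
        print("All three services are running." if not missing else f"Not running: {sorted(missing)}")
```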

Client

- Build script: run `npm run build:win`. When it finishes, `HeyGem-1.0.0-setup.exe` is generated in the `dist` directory.

- Double-click `HeyGem-1.0.0-setup.exe` to install the client.

Dependencies

- Node.js 18

- Docker images:

- `docker pull guiji2025/fun-asr:1.0.1`

- `docker pull guiji2025/fish-speech-ziming:1.0.39`

- `docker pull guiji2025/heygem.ai:0.0.7_sdk_slim`
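Because the images are large and a pull can fail on an unstable connection, you may want to script the three `docker pull` commands. The sketch below retries each pull a few times; the retry count and delay are arbitrary choices rather than HeyGem settings, and `pull_commands` and `pull_all` are hypothetical helpers.

```python
import subprocess
import time

IMAGES = [
    "guiji2025/fun-asr:1.0.1",
    "guiji2025/fish-speech-ziming:1.0.39",
    "guiji2025/heygem.ai:0.0.7_sdk_slim",
]


def pull_commands(images: list) -> list:
    """One `docker pull` argument vector per image."""
    return [["docker", "pull", image] for image in images]


def pull_all(images: list = IMAGES, attempts: int = 3, wait_s: float = 10.0) -> None:
    """Pull every image, trying each pull up to `attempts` times in total."""
    for cmd in pull_commands(images):
        for attempt in range(1, attempts + 1):
            if subprocess.run(cmd).returncode == 0:
                break
            if attempt == attempts:
                raise RuntimeError("giving up on: " + " ".join(cmd))
            time.sleep(wait_s)  # pause before retrying a failed pull


# Usage (requires Docker to be running): pull_all()
```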

Main function operation flow

1. Appearance and voice cloning

- Prepare the material:

- Record a clear voice sample (10-30 seconds, WAV format) and put it in `D:\heygem_data\voice\data`.

- Take a high-resolution, front-facing photo and put it in `D:\heygem_data\face2face`. (These paths can be adjusted in `docker-compose.yml`.)

- Run the clone function:

- Launch the client, open the interface, and select "Model Training".

- Call the API `http://127.0.0.1:18180/v1/preprocess_and_tran` with input parameters such as `{ "format": ".wav", "reference_audio": "D:/heygem_data/voice/data/sample.wav", "lang": "zh" }`.

- Save the returned results (e.g., the audio path and text) for later use.
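Step 1 can be scripted roughly as follows. This is a sketch under stated assumptions: it checks the 10-30 second requirement with Python's `wave` module (which reads uncompressed PCM WAV only), and it assumes `/v1/preprocess_and_tran` accepts a JSON body via HTTP POST and replies with JSON; the article gives only the URL and example parameters. `post_json` and the other helper names are hypothetical.

```python
import json
import urllib.request
import wave

TTS_BASE = "http://127.0.0.1:18180"  # address from the article


def wav_duration_s(path: str) -> float:
    """Length of a PCM WAV file in seconds."""
    with wave.open(path, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def check_voice_sample(path: str, min_s: float = 10.0, max_s: float = 30.0) -> None:
    """Raise ValueError unless the sample is between min_s and max_s seconds long."""
    duration = wav_duration_s(path)
    if not min_s <= duration <= max_s:
        raise ValueError(f"{path}: {duration:.1f}s is outside {min_s:g}-{max_s:g}s")


def preprocess_payload(reference_audio: str, lang: str = "zh") -> dict:
    """Request body mirroring the article's example for /v1/preprocess_and_tran."""
    return {"format": ".wav", "reference_audio": reference_audio, "lang": lang}


def post_json(url: str, body: dict, timeout: float = 600.0) -> dict:
    """POST `body` as JSON and decode a JSON reply (method and reply format are assumed)."""
    request = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Usage (requires the HeyGem services to be running):
#   sample = "D:/heygem_data/voice/data/sample.wav"
#   check_voice_sample(sample)
#   result = post_json(f"{TTS_BASE}/v1/preprocess_and_tran", preprocess_payload(sample))
#   # keep the returned audio path and text for the /v1/invoke step
```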

2. Text-driven avatar

- Input text

- Select "Audio Synthesis" in the client interface and call the API `http://127.0.0.1:18180/v1/invoke` with input parameters such as `{ "speaker": "unique-uuid", "text": "Welcome to HeyGem.ai", "format": "wav", "topP": 0.7, "max_new_tokens": 1024, "chunk_length": 100, "repetition_penalty": 1.2, "temperature": 0.7, "need_asr": false, "streaming": false, "is_fixed_seed": 0, "is_norm": 0, "reference_audio": "returned audio path", "reference_text": "returned text" }`.

- Generate Video

- Use the synthesis interface `http://127.0.0.1:8383/easy/submit` with input parameters such as `{ "audio_url": "generated audio path", "video_url": "D:/heygem_data/face2face/sample.mp4", "code": "unique-uuid", "chaofen": 0, "watermark_switch": 0, "pn": 1 }`.

- Query progress with `http://127.0.0.1:8383/easy/query?code=unique-uuid`.

- Save results

- When finished, the video file is saved locally in the specified path.

3. Voice-driven video production

- Record voice

- Record your voice in the client, or upload a WAV file directly to `D:\heygem_data\voice\data`.

- Generate Video

- Call the audio and video compositing APIs described above to generate an avatar video with movements.

- Preview and Adjustment

- Preview the result in the client; if needed, adjust the parameters and regenerate.
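For voice-driven production, one possible flow is to copy the recording into the voice data folder and pass it straight to `/easy/submit` as `audio_url`. Using the recording as-is, without a `/v1/invoke` synthesis step, is an assumption about how step 3 works; the article only says to call the audio and video compositing APIs. The folder default comes from the article; `stage_recording` and `voice_driven_submit` are hypothetical helpers.

```python
import shutil
import uuid
from pathlib import Path
from typing import Optional

VOICE_DIR = Path("D:/heygem_data/voice/data")  # default location from the article


def stage_recording(wav_file: str, voice_dir: Path = VOICE_DIR) -> Path:
    """Copy a recorded WAV into the voice data folder and return the copy's path."""
    src = Path(wav_file)
    if src.suffix.lower() != ".wav":
        raise ValueError(f"expected a .wav file, got {src.name}")
    voice_dir.mkdir(parents=True, exist_ok=True)
    dest = voice_dir / src.name
    if src.resolve() != dest.resolve():  # skip the copy if the file is already in place
        shutil.copy2(src, dest)
    return dest


def voice_driven_submit(recording: Path, face_video: str, code: Optional[str] = None) -> dict:
    """/easy/submit body that uses the recording itself as the driving audio (assumption)."""
    return {
        "audio_url": recording.as_posix(),
        "video_url": face_video,
        "code": code or str(uuid.uuid4()),
        "chaofen": 0,
        "watermark_switch": 0,
        "pn": 1,
    }
```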

Tips for use

- Material requirements: photos should be evenly lit, and voice recordings should be free of noise.

- Multi-language support: set the `lang` API parameter to the corresponding language code (e.g., "zh" for Chinese).

- Developer support: refer to the code under `src/main/service` to customize functionality.

Caveats

- The system must meet the space requirements: more than 100GB free on the C drive and more than 30GB free on the D drive.

- Make sure WSL is enabled before installing Docker.

- Downloading the images consumes about 70GB of data, so a stable WiFi connection is recommended.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
