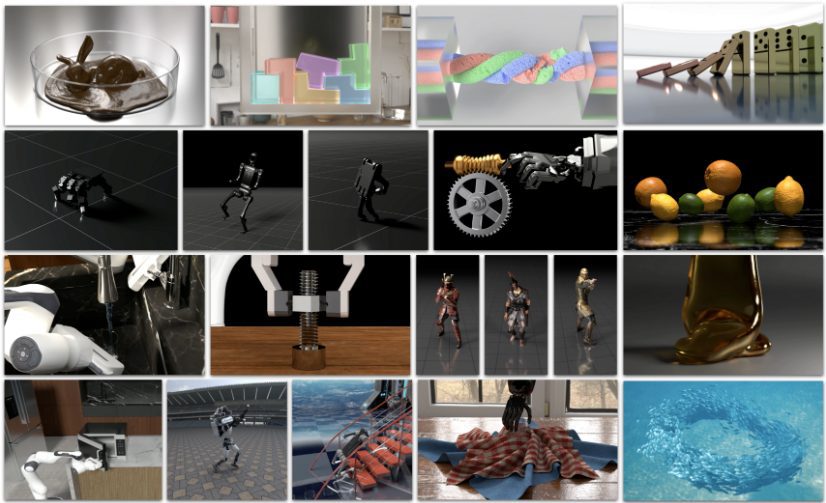

HelloMeme: Generate high-fidelity images and videos with consistent expressions and motion, a locally deployable open-source alternative to Runway Act-One

General Introduction

HelloMeme is an open-source project from HelloVision that generates high-quality images and videos by integrating Spatial Knitting Attentions to embed high-level, high-fidelity conditions into diffusion models. The project's code and models are hosted on GitHub and are free to download and use. HelloMeme provides modules for image generation and video generation, and integrates with Gradio and ComfyUI, which makes it convenient for a range of experiments and applications.

Related:

LivePortrait: Animation tool for generating dynamic portraits from still images and videos (open source)

ConsisID: Generates character-consistent video from a portrait reference image, with rapid multi-platform integration (open source)

Reface: face replacement and video generation using AI to create fun animated images (paid)

Function List

- Image Generation: Generates high-quality images from a reference image and a driving image.

- Video Generation: Generates high-fidelity videos from a reference video and a driving video.

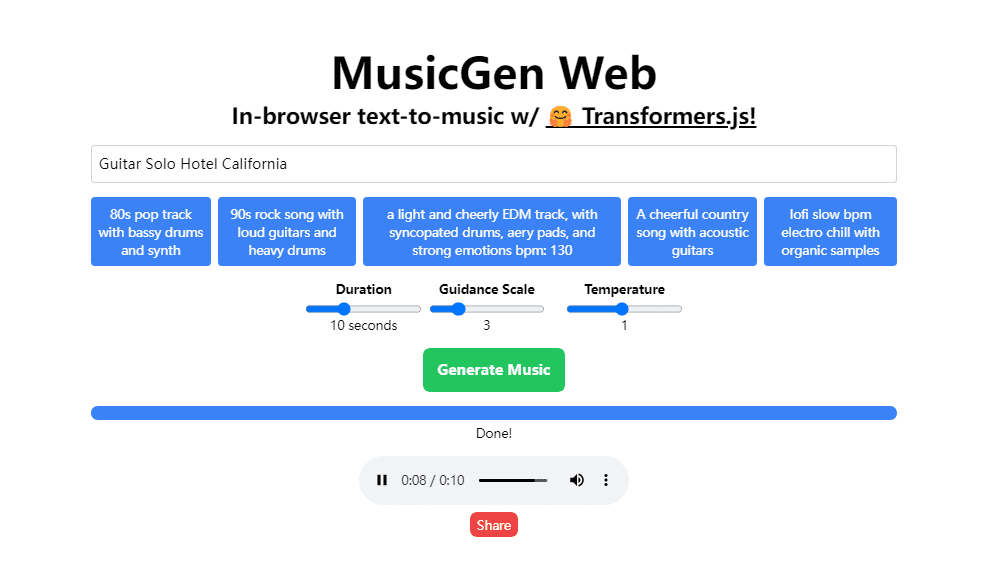

- Gradio Integration: Provides a Gradio interface for user interaction.

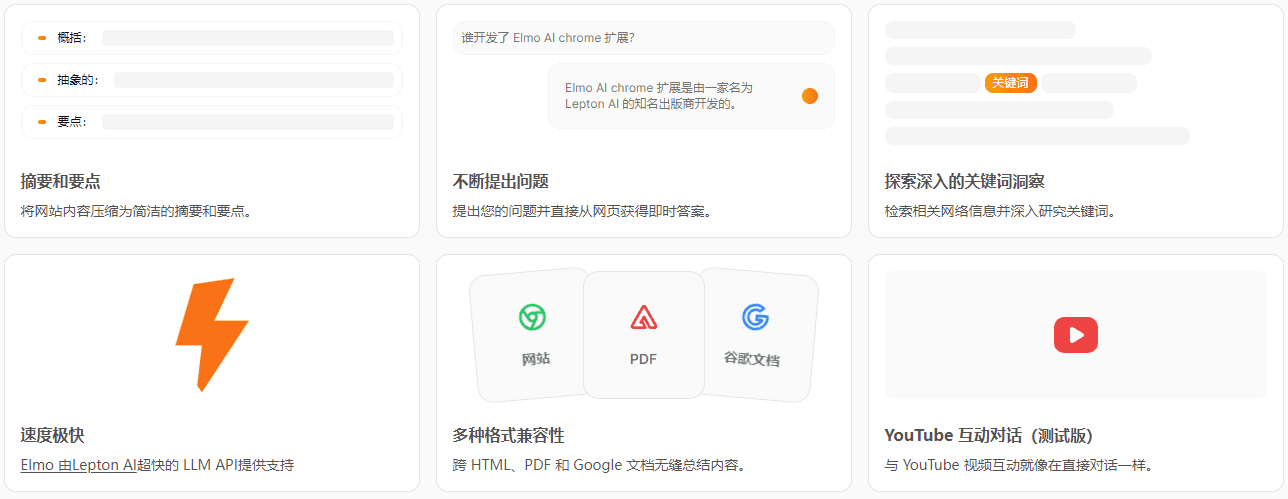

- ComfyUI Integration: Supports the ComfyUI interface to simplify the workflow.

- Experimental Modules: Includes experimental code so users can run their own experiments and tests.

- Efficient Algorithms: Optimized algorithms reduce VRAM usage and improve generation efficiency.

Usage Guide

Installation

- Create a Conda environment:

conda create -n hellomeme python=3.10.11

conda activate hellomeme

- Install PyTorch and FFmpeg: Refer to the official PyTorch and FFmpeg websites for installation instructions.

- Install the dependencies:

pip install diffusers transformers einops scipy opencv-python tqdm pillow onnxruntime onnx safetensors accelerate peft

- Clone the repository:

git clone https://github.com/HelloVision/HelloMeme

cd HelloMeme

- Run the code:

- Image Generation:

python inference_image.py

- Video Generation:

python inference_video.py

- Install and launch the Gradio application:

pip install gradio

pip install "imageio[ffmpeg]"

python app.py
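After installation, it can help to confirm that the packages from the pip command above are actually present in the active environment. The following is a minimal sketch, not part of HelloMeme: `check_environment` is a hypothetical helper, the package list is copied from the pip command, and the `diffusers` pin comes from the notes at the end of this article. PyTorch is installed separately and is not checked here, and a GPU build such as `onnxruntime-gpu` would be reported as missing because it uses a different distribution name.

```python
from importlib import metadata

# Distribution names copied from the pip command in this article.
REQUIRED = [
    "diffusers", "transformers", "einops", "scipy", "opencv-python",
    "tqdm", "pillow", "onnxruntime", "onnx", "safetensors",
    "accelerate", "peft",
]
# Version pin taken from the notes at the end of this article.
PINNED = {"diffusers": "0.31.0"}


def check_environment(required=REQUIRED, pinned=PINNED):
    """Return a list of human-readable problems; an empty list means none found."""
    problems = []
    for name in required:
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            problems.append(f"{name}: not installed")
            continue
        expected = pinned.get(name)
        if expected is not None and installed != expected:
            problems.append(f"{name}: found {installed}, expected {expected}")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    print("\n".join(issues) if issues else "All listed dependencies look fine.")
```

Run it inside the `hellomeme` Conda environment so that it inspects the same interpreter the inference scripts will use.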

Feature Walkthrough

Image Generation

- Prepare the input images: Prepare a reference image and a driving image.

- Run the image generation script:

python inference_image.py --reference_image path/to/reference.jpg --drive_image path/to/drive.jpg

- View Generated Results: The generated image will be saved in the specified directory.

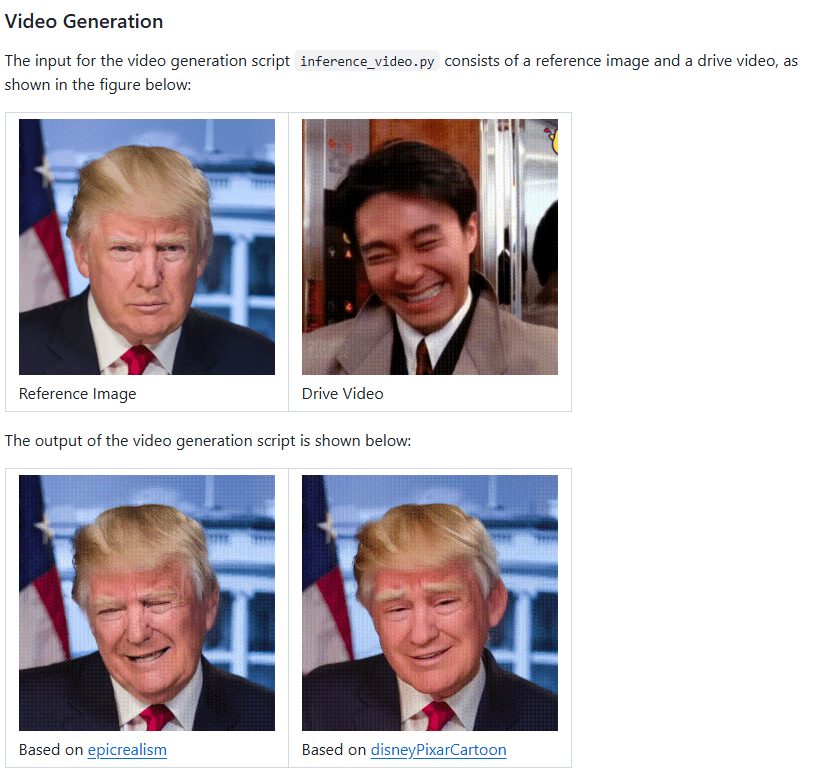

Video Generation

- Prepare the input videos: Prepare a reference video and a driving video.

- Run the video generation script:

python inference_video.py --reference_video path/to/reference.mp4 --drive_video path/to/drive.mp4

- View Generated Results: The generated video will be saved in the specified directory.
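The image and video commands above share the same shape, so they can be wrapped in a small Python helper that checks the input files before launching a run. This is only a sketch under assumptions: `build_command` and `run_inference` are hypothetical names, and the script and flag names are copied from the commands in this article, so verify them against the scripts in your checkout.

```python
import subprocess
import sys
from pathlib import Path

# Script and flag names are copied from the commands in this article;
# check them against the scripts in your checkout before relying on them.
MODES = {
    "image": ("inference_image.py", "--reference_image", "--drive_image"),
    "video": ("inference_video.py", "--reference_video", "--drive_video"),
}


def build_command(mode, reference, drive):
    """Build the argument list for one inference run without executing it."""
    if mode not in MODES:
        raise ValueError(f"mode must be one of {sorted(MODES)}, got {mode!r}")
    script, ref_flag, drive_flag = MODES[mode]
    return [sys.executable, script, ref_flag, str(reference), drive_flag, str(drive)]


def run_inference(mode, reference, drive, repo_dir="."):
    """Validate both inputs, then run the script from the repository root."""
    # Resolve to absolute paths so they stay valid after changing directory.
    ref, drv = (Path(p).resolve() for p in (reference, drive))
    for path in (ref, drv):
        if not path.is_file():
            raise FileNotFoundError(f"input file not found: {path}")
    subprocess.run(build_command(mode, ref, drv), cwd=repo_dir, check=True)
```

For example, `run_inference("video", "path/to/reference.mp4", "path/to/drive.mp4", repo_dir="HelloMeme")` fails early with a clear error if either file is missing, instead of failing partway through model loading.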

Using the Gradio Interface

- Launch the Gradio application:

python app.py

- Access via browser: Open your browser and visit http://localhost:7860. The Gradio interface can be used for image and video generation.

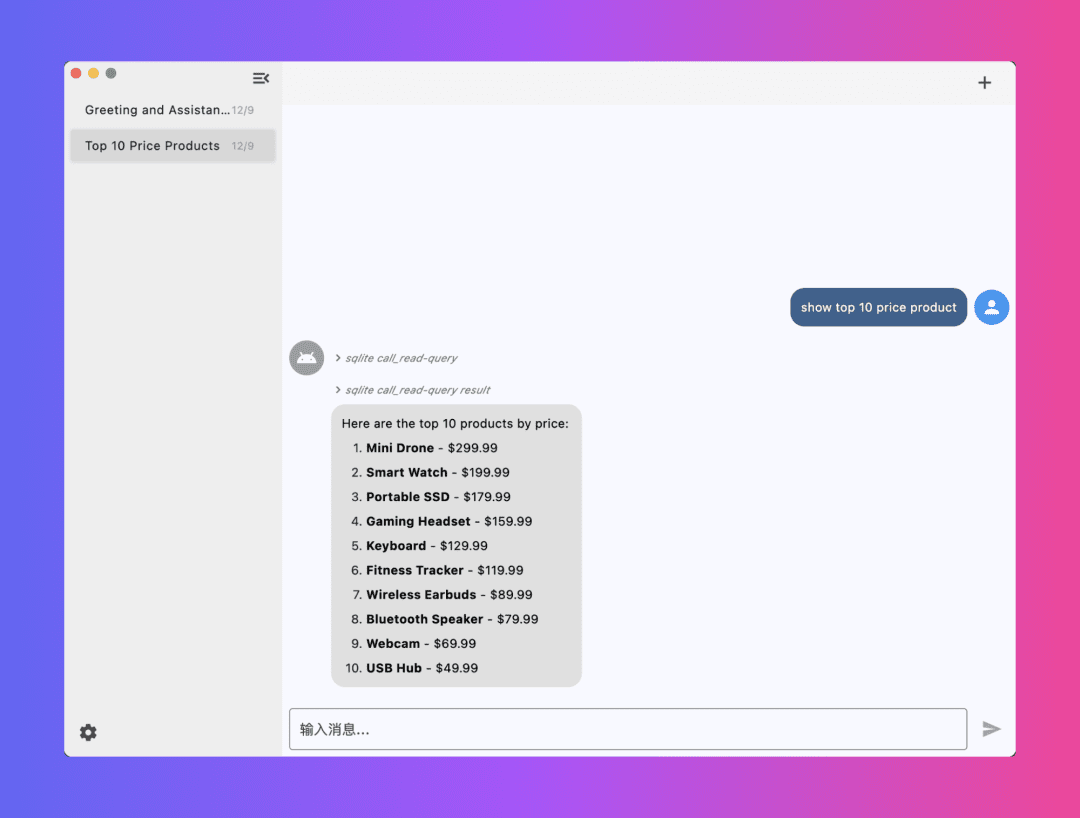
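If the page does not load, a quick check is whether anything is listening on the port yet, since the first launch may still be downloading models. The sketch below uses only the Python standard library. `is_listening` is a hypothetical helper, and port 7860 is assumed because it is Gradio's default and the address given above.

```python
import socket


def is_listening(host="localhost", port=7860, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution errors.
        return False


if __name__ == "__main__":
    if is_listening():
        print("Gradio appears to be up at http://localhost:7860")
    else:
        print("Nothing is listening on port 7860 yet.")
```

A successful connection only shows that some process holds the port. It does not prove that the process is the HelloMeme app.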

Using the ComfyUI Interface

- Install ComfyUI: Please refer to the official ComfyUI website for installation.

- Run HelloMeme:

python comfyui_hellomeme.py

- Operate via the ComfyUI interface: Select the image or video generation function as required, upload the reference and driving files, and click the Generate button.

Notes

- Dependency version: Note the diffusers version requirement. The currently supported version is diffusers==0.31.0.

- VRAM Usage: When generating a video, the longer the driving video, the more VRAM is required, so adjust the video length to fit your hardware.

- Model Download: On the first run, all models will be downloaded automatically, which may take a long time.
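For the VRAM note above, one way to adjust a long driving video is to trim it with FFmpeg before inference. The sketch below is an illustration rather than a HelloMeme feature: `build_trim_command` and `trim_video` are hypothetical helpers, and the right duration depends on your GPU, so treat any value as something to find by experiment.

```python
import shutil
import subprocess


def build_trim_command(src, dst, seconds):
    """Build an ffmpeg command that keeps only the first `seconds` of src."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    # -y overwrites dst if it already exists; -t limits the output duration.
    return ["ffmpeg", "-y", "-i", str(src), "-t", str(seconds), str(dst)]


def trim_video(src, dst, seconds):
    """Run the trim; requires ffmpeg to be available on PATH."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg not found on PATH")
    subprocess.run(build_trim_command(src, dst, seconds), check=True)
```

For example, `trim_video("path/to/drive.mp4", "path/to/drive_short.mp4", 5)` writes a five-second clip that can then be passed to the video generation script in place of the original.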

© Copyright Notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce without permission.
