OpenAI Function calling

OpenAI Function calling V2 Features

The core goal of Function calling V2 is to give OpenAI models the ability to interact with the outside world. This shows up in two core capabilities:

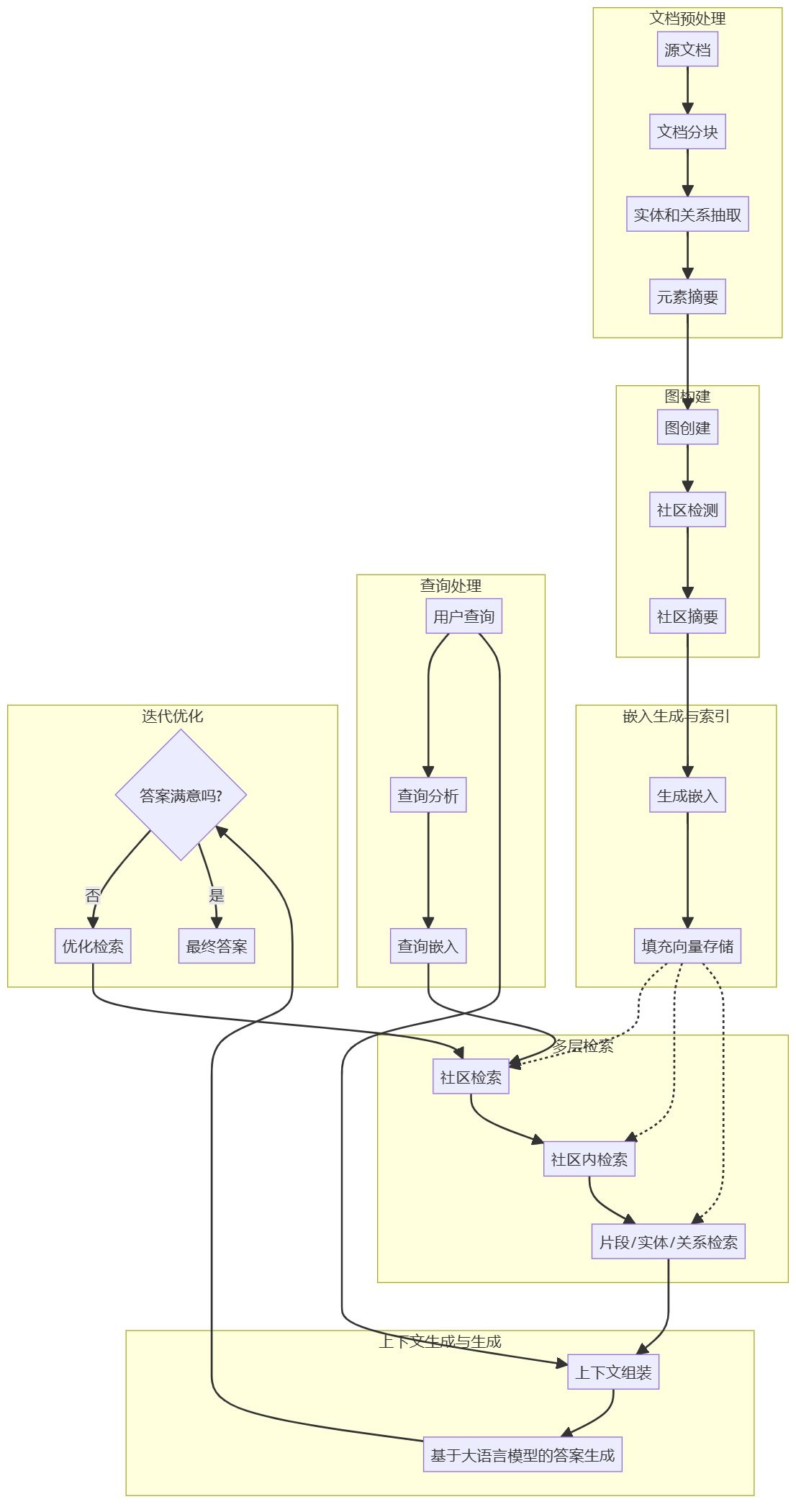

- Fetching Data: implementing RAG with function calls.

- Essentially RAG (Retrieval-Augmented Generation). Function calling provides a powerful mechanism for implementing retrieval-augmented generation. By calling predefined functions, the model can retrieve up-to-date, relevant information from external data sources (e.g., knowledge bases, APIs) and incorporate it into the responses it generates.

- Addressing information lag and knowledge limits. A traditional large language model's knowledge is static, fixed at training time. Function calling bridges this gap by letting the model access real-time information or domain-specific knowledge, so it can generate more accurate and comprehensive responses.

- Example use cases:

- Real-time information queries. For example, getting current weather data, the latest news, or stock prices.

- Knowledge base search. For example, querying an internal knowledge base, product documentation, or FAQs to give users more expert answers.

- API data integration. For example, fetching product information, flight status, or geolocation data from external APIs to enrich the conversation.

- Implementation. The developer defines data-retrieval functions (e.g., get_weather, search_knowledge_base) and passes them to the model in the tools parameter. When the model determines that it needs external information, it requests a call to one of these functions; your code runs the function and returns the result, which the model then integrates into its final response.

- Taking Action: model-driven automation.

- Going beyond information to drive real operations. Function calling is not limited to retrieving information; it also lets the model drive external systems to perform actual operations, enabling deeper automation and new application scenarios.

- Increasing the usefulness of models. Models become more than conversation partners: they can act as intelligent assistants that help users complete a variety of tasks.

- Example use cases:

- Automated workflows. For example, submitting forms, creating calendar events, sending emails, or booking flights and hotels.

- Application control. For example, modifying an application's state (UI/front end or back end) or controlling smart home devices.

- Agentic workflows. For example, handing the conversation off to a more specialized customer service system, or triggering a more complex automated process, depending on what the conversation is about.

- Implementation. The developer defines functions that perform specific operations (e.g., send_email, create_calendar_event) and passes them to the model in the tools parameter. Based on the user's intent, the model can request a call to these functions with the appropriate arguments, and your code then carries out the action.

Other key features of Function calling V2 (supporting both data fetching and taking actions):

- tools parameter and function schema. Provides a structured way to define and manage the functions a model can call, including function names, descriptions, and parameter definitions, so that the model understands accurately how to call each function.

- Strict mode. Improves the reliability and accuracy of function calls by ensuring that the model's function calls strictly conform to the predefined schema.

- Tool choice and parallel function call control. Provides finer-grained control, letting developers tailor how the model calls functions to the application, for example forcing a call to a specific function or limiting parallel calls.

- Streaming. Improves the user experience: you can show function arguments being filled in as they are generated, so users can follow the model's progress more intuitively.

Summary

The core value of Function calling V2 lies in its two capabilities, data fetching (an implementation of RAG) and taking actions, which greatly expand the application boundaries of large language models. Function calling not only lets the model access and use external information to generate smarter, more practical responses, but also lets it drive external systems to perform operations and achieve a higher level of automation, laying the foundation for more powerful AI applications. As an implementation of RAG, data fetching is a key capability of Function calling V2 in knowledge-intensive applications.

Below is OpenAI's updated official description of function calling, which lets models fetch data and take actions.

Function calling provides a powerful and flexible way for OpenAI models to interact with your code or external services. It has two main use cases:

| Use case | Description |
|---|---|
| Fetching data | Retrieve up-to-date information to incorporate into the model's response (RAG). Useful for searching knowledge bases and retrieving specific data from APIs (e.g., current weather data). |
| Taking action | Perform actions such as submitting a form, calling an API, modifying application state (UI/front end or back end), or taking agentic workflow actions such as handing off a conversation. |

If you only want the model to produce JSON, see OpenAI's documentation on Structured Outputs, which ensures the model always generates responses that match the JSON Schema you supply.

Get Weather

Example of a function call using the get_weather function

```python
from openai import OpenAI

client = OpenAI()

tools = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current temperature for a given location.",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {
                    "type": "string",
                    "description": "City and country, e.g. Bogotá, Colombia"
                }
            },
            "required": [
                "location"
            ],
            "additionalProperties": False
        },
        "strict": True
    }
}]

completion = client.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": "What is the weather like in Paris today?"}],
    tools=tools
)

print(completion.choices[0].message.tool_calls)
```

Output

```json
[{
    "id": "call_12345xyz",
    "type": "function",
    "function": {
        "name": "get_weather",
        "arguments": "{\"location\":\"Paris, France\"}"
    }
}]
```

Send Email

Example of a function call using the send_email function

```python
from openai import OpenAI

client = OpenAI()

tools = [{
    "type": "function",
    "function": {
        "name": "send_email",
        "description": "Send an email to a given recipient with a subject and message.",
        "parameters": {
            "type": "object",
            "properties": {
                "to": {
                    "type": "string",
                    "description": "The recipient email address."
                },
                "subject": {
                    "type": "string",
                    "description": "Email subject line."
                },
                "body": {
                    "type": "string",
                    "description": "Body of the email message."
                }
            },
            "required": [
                "to",
                "subject",
                "body"
            ],
            "additionalProperties": False
        },
        "strict": True
    }
}]

completion = client.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": "Can you send an email to ilan@example.com and katia@example.com saying hi?"}],
    tools=tools
)

print(completion.choices[0].message.tool_calls)
```

Output

```json
[
    {
        "id": "call_9876abc",
        "type": "function",
        "function": {
            "name": "send_email",
            "arguments": "{\"to\":\"ilan@example.com\",\"subject\":\"Hello!\",\"body\":\"Just wanted to say hi\"}"
        }
    },
    {
        "id": "call_9876abc",
        "type": "function",
        "function": {
            "name": "send_email",
            "arguments": "{\"to\":\"katia@example.com\",\"subject\":\"Hello!\",\"body\":\"Just wanted to say hi\"}"
        }
    }
]
```

Search the Knowledge Base

Example of a function call using the search_knowledge_base function

```python
from openai import OpenAI

client = OpenAI()

tools = [{
    "type": "function",
    "function": {
        "name": "search_knowledge_base",
        "description": "Query a knowledge base to retrieve relevant info on a topic.",
        "parameters": {
            "type": "object",
            "properties": {
                "query": {
                    "type": "string",
                    "description": "The user question or search query."
                },
                "options": {
                    "type": "object",
                    "properties": {
                        "num_results": {
                            "type": "number",
                            "description": "Number of top-ranking results to return."
                        },
                        "domain_filter": {
                            "type": [
                                "string",
                                "null"
                            ],
                            "description": "Optional domain to narrow the search (e.g. 'finance', 'medical'). Pass null if not needed."
                        },
                        "sort_by": {
                            "type": [
                                "string",
                                "null"
                            ],
                            "enum": [
                                "relevance",
                                "date",
                                "popularity",
                                "alphabetical"
                            ],
                            "description": "How to sort results. Pass null if not needed."
                        }
                    },
                    "required": [
                        "num_results",
                        "domain_filter",
                        "sort_by"
                    ],
                    "additionalProperties": False
                }
            },
            "required": [
                "query",
                "options"
            ],
            "additionalProperties": False
        },
        "strict": True
    }
}]

completion = client.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": "Can you find information about ChatGPT in the AI knowledge base?"}],
    tools=tools
)

print(completion.choices[0].message.tool_calls)
```

Output

```json
[{
    "id": "call_4567xyz",
    "type": "function",
    "function": {
        "name": "search_knowledge_base",
        "arguments": "{\"query\":\"What is ChatGPT?\",\"options\":{\"num_results\":3,\"domain_filter\":null,\"sort_by\":\"relevance\"}}"
    }
}]
```

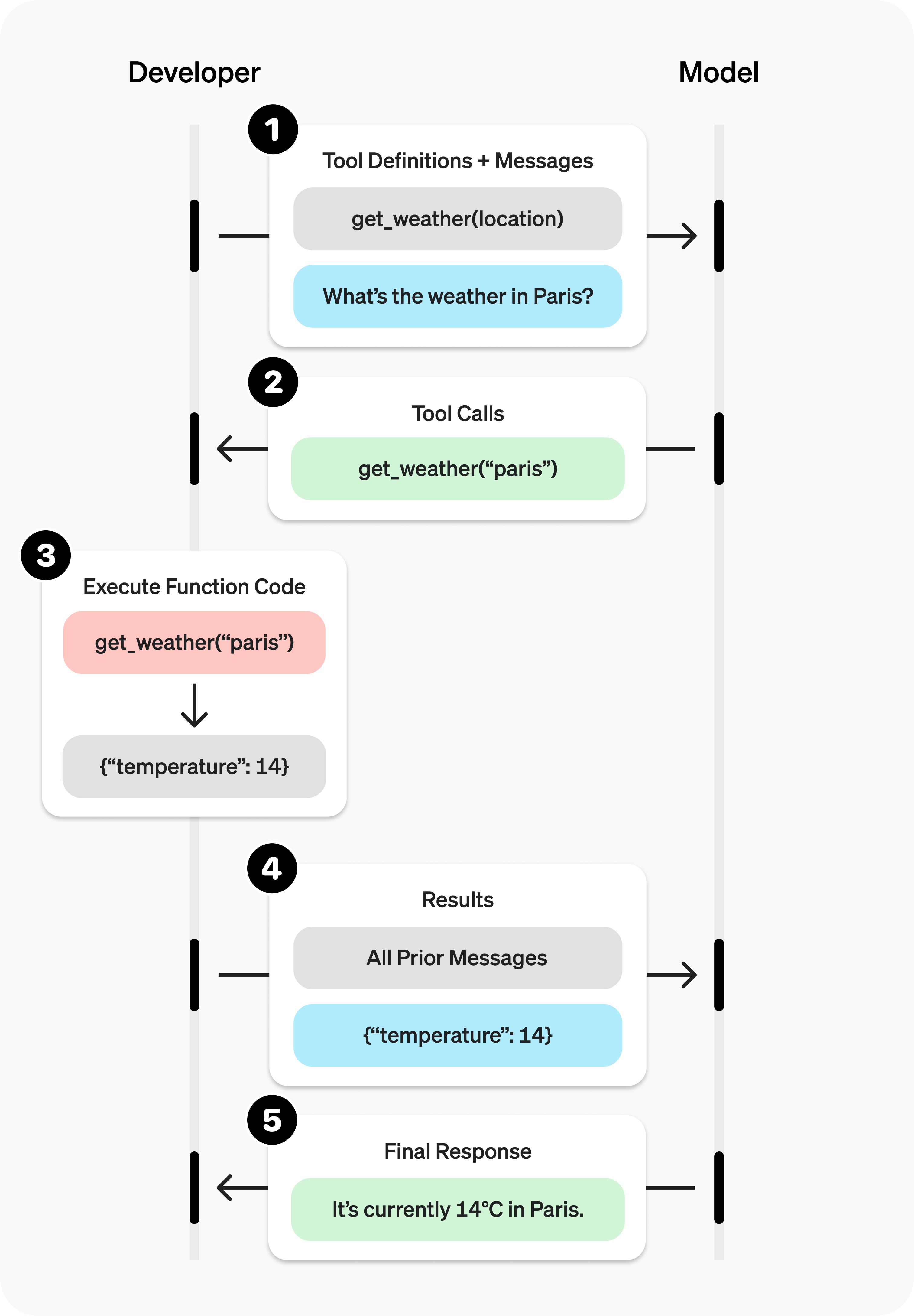

Overview

You can extend the capabilities of OpenAI models by giving them access to tools, which can take two forms:

| Tool type | Description |
|---|---|
| Function calling | Developer-defined code. |
| Hosted tools | Tools built by OpenAI (e.g., file search, code interpreter); only available in the Assistants API. |

This guide explains how to give the model access to your own functions through function calling. Based on the system prompt and messages, the model may decide to call these functions instead of (or in addition to) generating text or audio.

You then execute the function code and send back the results, and the model incorporates them into its final response.

Sample function

Let's look at the steps for letting the model use the following real get_weather function:

Sample get_weather function implemented in your codebase

```python
import requests

def get_weather(latitude, longitude):
    # Query the Open-Meteo forecast API for conditions at the given coordinates
    response = requests.get(f"https://api.open-meteo.com/v1/forecast?latitude={latitude}&longitude={longitude}&current=temperature_2m,wind_speed_10m&hourly=temperature_2m,relative_humidity_2m,wind_speed_10m")
    data = response.json()
    # Return only the current temperature (Open-Meteo reports Celsius by default)
    return data['current']['temperature_2m']
```

Unlike the earlier flowchart, this function expects precise latitude and longitude rather than a general location parameter. (However, our models can automatically determine the coordinates of many locations!)

Function calling steps

Call the model with the function defined, along with your system and user messages.

Step 1: Call the model with the get_weather tool defined

```python
from openai import OpenAI
import json

client = OpenAI()

tools = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current temperature for the provided coordinates, in Celsius.",
        "parameters": {
            "type": "object",
            "properties": {
                "latitude": {"type": "number"},
                "longitude": {"type": "number"}
            },
            "required": ["latitude", "longitude"],
            "additionalProperties": False
        },
        "strict": True
    }
}]

messages = [{"role": "user", "content": "What is the weather like in Paris today?"}]

completion = client.chat.completions.create(
    model="gpt-4o",
    messages=messages,
    tools=tools,
)
```

Step 2: The model decides which function to call and returns its name and input arguments.

```python
completion.choices[0].message.tool_calls
```

```json
[{
    "id": "call_12345xyz",
    "type": "function",
    "function": {
        "name": "get_weather",
        "arguments": "{\"latitude\":48.8566,\"longitude\":2.3522}"
    }
}]
```

Step 3: Execute the function code: parse the model's response and handle the function call.

Execute the get_weather function

```python
tool_call = completion.choices[0].message.tool_calls[0]
args = json.loads(tool_call.function.arguments)

result = get_weather(args["latitude"], args["longitude"])
```

Step 4: Send the results back to the model so it can incorporate them into its final response.

Provide results and call the model again

```python
messages.append(completion.choices[0].message)  # append model's function call message
messages.append({                               # append result message
    "role": "tool",
    "tool_call_id": tool_call.id,
    "content": str(result)
})

completion_2 = client.chat.completions.create(
    model="gpt-4o",
    messages=messages,
    tools=tools,
)
```

Step 5: The model responds, incorporating the result into its output.

```python
completion_2.choices[0].message.content
```

```json
"The current temperature in Paris is 14°C (57.2°F)."
```

Defining functions

Functions are set in the tools parameter of each API request, in the form of function objects.

A function is defined by its schema, which tells the model what the function does and what input arguments it expects. It contains the following fields:

| Field | Description |
|---|---|
| name | The function's name (e.g., get_weather) |
| description | Details on when and how to use the function |
| parameters | JSON schema defining the function's input arguments |

Sample function schema

```json
{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Retrieves current weather for the given location.",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {
                    "type": "string",
                    "description": "City and country, e.g. Bogotá, Colombia"
                },
                "units": {
                    "type": "string",
                    "enum": [
                        "celsius",
                        "fahrenheit"
                    ],
                    "description": "Units the temperature will be returned in."
                }
            },
            "required": [
                "location",
                "units"
            ],
            "additionalProperties": false
        },
        "strict": true
    }
}
```

Because parameters is defined by a JSON schema, you can take advantage of its many rich features, such as property types, enums, descriptions, nested objects, and recursive objects.

(Optional) Function calling with pydantic and zod

While we encourage you to define your function schemas directly, our SDKs have helpers to convert pydantic and zod objects into schemas. Not all pydantic and zod features are supported.

Define objects to represent the function schema

```python
from openai import OpenAI, pydantic_function_tool
from pydantic import BaseModel, Field

client = OpenAI()

class GetWeather(BaseModel):
    location: str = Field(
        ...,
        description="City and country, e.g. Bogotá, Colombia"
    )

tools = [pydantic_function_tool(GetWeather)]

completion = client.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": "What is the weather like in Paris today?"}],
    tools=tools
)

print(completion.choices[0].message.tool_calls)
```

Best Practices for Defining Functions

Write clear and detailed function names, parameter descriptions and instructions.

- Explicitly describe the purpose of the function and of each parameter (and its format), and what the output represents.

- Use the system prompt to describe when (and when not) to use each function. Generally, tell the model exactly what to do.

- Include examples and edge cases, especially to correct any recurring failures. (Note: adding examples may hurt performance for reasoning models.)

Apply software engineering best practices.

- Make the functions obvious and intuitive. (Principle of least surprise.)

- Use enums and object structure to make invalid states unrepresentable. (For example, toggle_light(on: bool, off: bool) allows invalid calls.)

- Pass the intern test. Could an intern or human correctly use the function given nothing but what you gave the model? (If not, what questions would they ask you? Add the answers to the prompt.)
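To make the enum advice concrete, here is a sketch contrasting the toggle_light(on: bool, off: bool) signature with a schema that uses a single enum field, so a contradictory call such as on=True, off=True cannot be expressed. The toggle_light tool and its state field are hypothetical, and the small is_valid_state checker only inspects the enum; it is not a full JSON Schema validator.

```python
# Hypothetical tool definitions for the toggle_light example (not a real API).

# Problematic: two booleans allow the invalid state on=True, off=True.
toggle_light_bad = {
    "type": "function",
    "function": {
        "name": "toggle_light",
        "description": "Turn the light on or off.",
        "parameters": {
            "type": "object",
            "properties": {
                "on": {"type": "boolean"},
                "off": {"type": "boolean"},
            },
            "required": ["on", "off"],
            "additionalProperties": False,
        },
        "strict": True,
    },
}

# Better: one enum field, so only valid states can be expressed.
toggle_light_good = {
    "type": "function",
    "function": {
        "name": "toggle_light",
        "description": "Set the light to the requested state.",
        "parameters": {
            "type": "object",
            "properties": {
                "state": {"type": "string", "enum": ["on", "off"]},
            },
            "required": ["state"],
            "additionalProperties": False,
        },
        "strict": True,
    },
}


def is_valid_state(tool: dict, args: dict) -> bool:
    """Check the 'state' argument against the enum declared in the schema."""
    allowed = tool["function"]["parameters"]["properties"]["state"]["enum"]
    return args.get("state") in allowed


print(is_valid_state(toggle_light_good, {"state": "on"}))    # True
print(is_valid_state(toggle_light_good, {"state": "both"}))  # False
```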

Offload the burden from the model and use code where possible.

- Don't make the model fill in arguments you already know. For example, if you already have an order_id based on a previous menu, don't give the function an order_id parameter; instead, define submit_refund() with no parameters and pass the order_id in code.

- Combine functions that are always called in sequence. For example, if you always call mark_location() after query_location(), just move the marking logic into the query function call.

Keep the number of functions small for higher accuracy.

- Evaluate your performance with different numbers of functions.

- Aim for fewer than 20 functions at any one time, though this is only a soft suggestion.

Leverage OpenAI resources.

- Generate and iterate on function schemas in the Playground.

- Consider fine-tuning to increase function calling accuracy for large numbers of functions or difficult tasks.
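The advice about not making the model fill in arguments you already know can be sketched as follows: the submit_refund tool shown to the model has no parameters, and the router injects the order_id your application already holds. The submit_refund body, the context dictionary, and the route_call helper are hypothetical placeholders, not part of the OpenAI SDK.

```python
import json

# Tool definition shown to the model: deliberately no order_id parameter.
submit_refund_tool = {
    "type": "function",
    "function": {
        "name": "submit_refund",
        "description": "Submit a refund for the current order.",
        "parameters": {
            "type": "object",
            "properties": {},
            "additionalProperties": False,
        },
    },
}


def submit_refund(order_id: str) -> str:
    # Hypothetical implementation; a real one would call your billing system.
    return f"refund submitted for {order_id}"


def route_call(name: str, arguments: str, context: dict) -> str:
    """Dispatch a tool call, filling in values the application already knows."""
    args = json.loads(arguments)  # "{}" for submit_refund
    if name == "submit_refund":
        # order_id comes from application state, never from the model.
        return submit_refund(order_id=context["order_id"], **args)
    raise ValueError(f"Unknown function: {name}")


print(route_call("submit_refund", "{}", {"order_id": "order_123"}))
# refund submitted for order_123
```

Keeping the identifier out of the schema also removes a way for the model to refund the wrong order.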

Token usage

Under the hood, functions are injected into the system message in a syntax the model has been trained on. This means functions count against the model's context limit and are billed as input tokens. If you run into token limits, we suggest limiting the number of functions or the length of the descriptions you provide for function parameters.

If you have many functions defined in your tools specification, you can also use fine-tuning to reduce the number of tokens used.

Handling function calls

When the model calls a function, you must execute it and return the result. Since model responses can include zero, one, or multiple calls, it is best practice to assume there are several.

The response has a tool_calls array, each element of which has an id (used later to submit the function result) and a function object containing the name and JSON-encoded arguments.

Sample response with multiple function calls

```json
[
    {
        "id": "call_12345xyz",
        "type": "function",
        "function": {
            "name": "get_weather",
            "arguments": "{\"location\":\"Paris, France\"}"
        }
    },
    {
        "id": "call_67890abc",
        "type": "function",
        "function": {
            "name": "get_weather",
            "arguments": "{\"location\":\"Bogotá, Colombia\"}"
        }
    },
    {
        "id": "call_99999def",
        "type": "function",
        "function": {
            "name": "send_email",
            "arguments": "{\"to\":\"bob@email.com\",\"body\":\"Hi bob\"}"
        }
    }
]
```

Execute the function call and append the result

```python
for tool_call in completion.choices[0].message.tool_calls:
    name = tool_call.function.name
    args = json.loads(tool_call.function.arguments)

    result = call_function(name, args)
    messages.append({
        "role": "tool",
        "tool_call_id": tool_call.id,
        "content": str(result)  # tool results must be strings
    })
```

In the example above, a hypothetical call_function routes each call. Here is one possible implementation:

Routing function calls

```python
def call_function(name, args):
    if name == "get_weather":
        return get_weather(**args)
    if name == "send_email":
        return send_email(**args)
    # Fail loudly instead of silently returning None for an unexpected name
    raise ValueError(f"Unknown function: {name}")
```
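As the number of tools grows, a dictionary-based registry is one way to extend call_function: it avoids a chain of if statements, rejects unknown names, and turns failures into strings the model can read, since results must be strings. This is a sketch under assumptions: unlike the version above it takes the raw arguments string, the get_weather and send_email bodies are placeholders for your real implementations, and reporting errors as text rather than raising is a design choice, not an SDK requirement.

```python
import json


def get_weather(latitude: float, longitude: float) -> float:
    # Placeholder: a real implementation would call a weather API.
    return 14.0


def send_email(to: str, subject: str, body: str) -> str:
    # Placeholder: a real implementation would send the message.
    return "success"


# Map each tool name the model may emit to the Python callable that handles it.
FUNCTIONS = {
    "get_weather": get_weather,
    "send_email": send_email,
}


def call_function(name: str, arguments: str) -> str:
    """Run one tool call and always return a string for the tool message."""
    func = FUNCTIONS.get(name)
    if func is None:
        return json.dumps({"error": f"Unknown function: {name}"})
    try:
        args = json.loads(arguments)
        result = func(**args)
    except (json.JSONDecodeError, TypeError) as exc:
        # Malformed JSON, or missing/unexpected arguments for the function.
        return json.dumps({"error": str(exc)})
    # Pass strings through unchanged; serialize anything else as JSON.
    return result if isinstance(result, str) else json.dumps(result)
```

With this version, the loop above would pass tool_call.function.arguments directly instead of the decoded args. For example, the send_email call in the sample response omits subject, so it comes back as an error string the model can react to rather than an exception in your code.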

Formatting results

The result must be a string, but the format is up to you (JSON, error codes, plain text, etc.). The model will interpret that string as needed.

If your function has no return value (e.g., send_email), simply return a string that indicates success or failure (e.g., "success").

Integrate results into responses

After appending the results to your messages, you can send them back to the model to get a final response.

Send results back to the model

```python
completion = client.chat.completions.create(
    model="gpt-4o",
    messages=messages,
    tools=tools,
)
```

Final response

```json
"It's about 15°C in Paris, 18°C in Bogotá, and I've sent that email to Bob."
```
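The round trip described in this section can be wrapped in a loop. Because the model may request further tool calls after it sees the results, this sketch repeats until a response contains no tool_calls, with a max_rounds cap as a safeguard. run_with_tools and max_rounds are hypothetical names; the only SDK call assumed is client.chat.completions.create, used the same way as elsewhere in this article, and call_function is any router like the one above.

```python
import json
from typing import Any, Callable


def run_with_tools(client: Any, messages: list, tools: list,
                   call_function: Callable[[str, dict], Any],
                   model: str = "gpt-4o", max_rounds: int = 5) -> str:
    """Call the model, execute requested tools, and repeat until it answers."""
    for _ in range(max_rounds):
        completion = client.chat.completions.create(
            model=model, messages=messages, tools=tools,
        )
        message = completion.choices[0].message
        if not message.tool_calls:
            return message.content  # no more tool calls: this is the final answer
        messages.append(message)  # keep the assistant's tool-call turn in history
        for tool_call in message.tool_calls:
            args = json.loads(tool_call.function.arguments)
            messages.append({
                "role": "tool",
                "tool_call_id": tool_call.id,
                "content": str(call_function(tool_call.function.name, args)),
            })
    raise RuntimeError("Model kept requesting tools; giving up after max_rounds.")
```

Capping the number of rounds keeps a misbehaving tool or prompt from looping indefinitely.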

Additional Configurations

Tool Selection

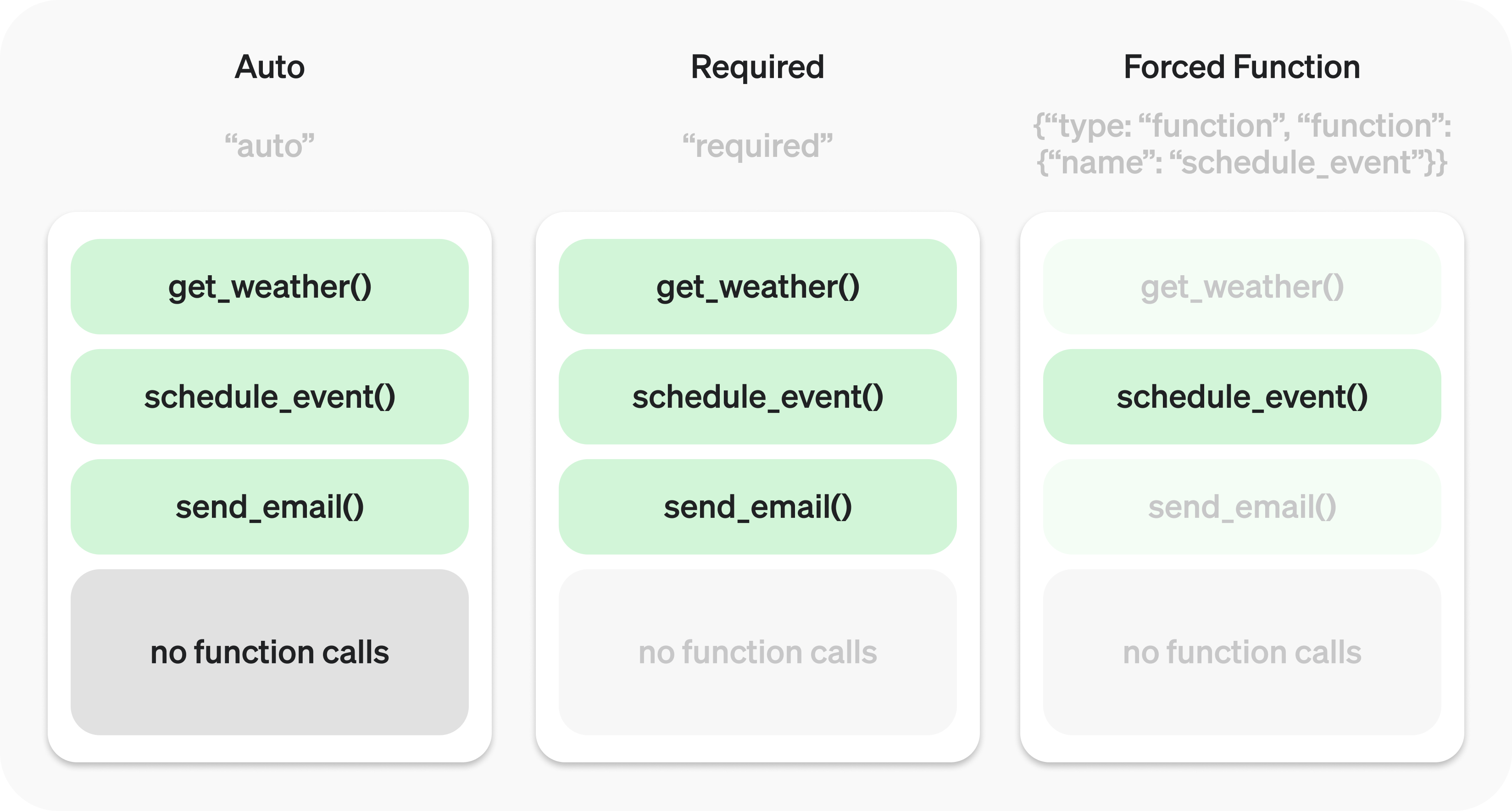

By default, the model decides when and how many tools to use. You can force specific behavior with the tool_choice parameter.

- Auto (default): call zero, one, or multiple functions. tool_choice: "auto"

- Required: call one or more functions. tool_choice: "required"

- Forced function: call exactly one specific function. tool_choice: {"type": "function", "function": {"name": "get_weather"}}

You can also set tool_choice to "none" to imitate the behavior of passing no functions.
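The four tool_choice options above can be produced by a small helper, which keeps call sites readable when the mode is decided at runtime. build_tool_choice and its mode names are hypothetical; only the returned values come from the list above.

```python
from typing import Optional, Union


def build_tool_choice(mode: str, function_name: Optional[str] = None) -> Union[str, dict]:
    """Return a tool_choice value for one of the modes described above."""
    if mode in ("auto", "required", "none"):
        return mode
    if mode == "function":
        if not function_name:
            raise ValueError("mode 'function' needs a function_name")
        # Forces the model to call exactly this function.
        return {"type": "function", "function": {"name": function_name}}
    raise ValueError(f"Unknown tool_choice mode: {mode}")


print(build_tool_choice("required"))               # required
print(build_tool_choice("function", "get_weather"))
# {'type': 'function', 'function': {'name': 'get_weather'}}
```

The result is passed as tool_choice=... to client.chat.completions.create alongside tools.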

Parallel function calling

The model may choose to call multiple functions in a single turn. You can prevent this by setting parallel_tool_calls to false, which ensures exactly zero or one tool is called.

Note: Currently, if the model calls multiple functions in one turn, strict mode is disabled for those calls.
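When parallel calls are left enabled, the calls in one turn are issued together, before the model has seen any of their results, so your code can generally execute them concurrently. The sketch below uses a thread pool (suited to I/O-bound functions such as HTTP requests) and returns the tool messages in the same order as the calls. execute_parallel and the stub handler are hypothetical, and the tool calls are plain dictionaries shaped like the sample response earlier; with SDK objects you would read tool_call.function.name instead.

```python
import json
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable


def execute_parallel(tool_calls: list, handler: Callable[[str, dict], Any],
                     max_workers: int = 4) -> list:
    """Run each tool call in a thread pool and build the tool messages in order."""

    def run_one(tool_call: dict) -> dict:
        fn = tool_call["function"]
        result = handler(fn["name"], json.loads(fn["arguments"]))
        return {"role": "tool", "tool_call_id": tool_call["id"], "content": str(result)}

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in input order, so each message lines up with its call.
        return list(pool.map(run_one, tool_calls))


calls = [
    {"id": "call_12345xyz", "type": "function",
     "function": {"name": "get_weather", "arguments": "{\"location\":\"Paris, France\"}"}},
    {"id": "call_67890abc", "type": "function",
     "function": {"name": "get_weather", "arguments": "{\"location\":\"Bogotá, Colombia\"}"}},
]

for message in execute_parallel(calls, lambda name, args: f"{name}: {args['location']}"):
    print(message)
```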

Strict mode

Setting strict to true ensures that function calls reliably adhere to the function schema, instead of being best effort. We recommend always enabling strict mode.

Under the hood, strict mode works by leveraging our Structured Outputs feature and therefore introduces a couple of requirements:

- additionalProperties must be set to false for each object in parameters.

- All fields in properties must be marked as required.

You can denote optional fields by adding null as a type option (see the example below).

Enable Strict Mode

```json
{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Retrieves current weather for the given location.",
        "strict": true,
        "parameters": {
            "type": "object",
            "properties": {
                "location": {
                    "type": "string",
                    "description": "City and country, e.g. Bogotá, Colombia"
                },
                "units": {
                    "type": ["string", "null"],
                    "enum": ["celsius", "fahrenheit"],
                    "description": "Units the temperature will be returned in."
                }
            },
            "required": ["location", "units"],
            "additionalProperties": false
        }
    }
}
```

Disable strict mode

```json
{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Retrieves current weather for the given location.",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {
                    "type": "string",
                    "description": "City and country, e.g. Bogotá, Colombia"
                },
                "units": {
                    "type": "string",
                    "enum": ["celsius", "fahrenheit"],
                    "description": "Units the temperature will be returned in."
                }
            },
            "required": ["location"]
        }
    }
}
```
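The two strict-mode requirements are mechanical, so a non-strict parameters schema can be converted automatically. The sketch below is a hypothetical helper (to_strict is not part of the OpenAI SDK): it sets additionalProperties to false on every object, marks every property as required, and adds "null" to the type of any property that was previously optional, mirroring how the two examples above differ. It only walks object properties and array items, not every JSON Schema keyword, so check its output against the supported-schema rules.

```python
import copy


def _strictify(node: dict) -> None:
    """Apply the strict-mode requirements to one schema node, recursively, in place."""
    types = node.get("type")
    types = types if isinstance(types, list) else [types]
    if "object" in types:
        props = node.get("properties", {})
        required = set(node.get("required", []))
        for name, prop in props.items():
            if name not in required:
                # Optional field: allow null instead of letting the key be omitted.
                t = prop.get("type")
                if isinstance(t, str):
                    prop["type"] = [t, "null"]
                elif isinstance(t, list) and "null" not in t:
                    prop["type"] = t + ["null"]
            _strictify(prop)
        node["required"] = list(props)
        node["additionalProperties"] = False
    if "array" in types and isinstance(node.get("items"), dict):
        _strictify(node["items"])


def to_strict(parameters: dict) -> dict:
    """Return a strict-mode-compatible copy; the input schema is left unchanged."""
    schema = copy.deepcopy(parameters)
    _strictify(schema)
    return schema


non_strict = {
    "type": "object",
    "properties": {
        "location": {"type": "string", "description": "City and country, e.g. Bogotá, Colombia"},
        "units": {"type": "string", "enum": ["celsius", "fahrenheit"],
                  "description": "Units the temperature will be returned in."},
    },
    "required": ["location"],
}
strict = to_strict(non_strict)
print(strict["required"])                      # ['location', 'units']
print(strict["properties"]["units"]["type"])   # ['string', 'null']
```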

All schemas generated in the Playground have strict mode enabled.

While we recommend that you enable strict mode, it has some limitations:

- Some features of the JSON schema are not supported. (See supported schemas.)

- Schemas undergo additional processing on the first request (and are then cached). If your schemas vary from request to request, this may result in higher latencies.

- Schemas are cached for performance and are not eligible for zero data retention.

Streaming

Streaming can be used to surface progress, by showing which function is being called as the model fills in its arguments, or even displaying the arguments in real time.

Streaming function calls is very similar to streaming regular responses: you set stream to true and receive chunks containing delta objects.

Streaming function calls

```python
from openai import OpenAI

client = OpenAI()

tools = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current temperature for a given location.",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {
                    "type": "string",
                    "description": "City and country, e.g. Bogotá, Colombia"
                }
            },
            "required": ["location"],
            "additionalProperties": False
        },
        "strict": True
    }
}]

stream = client.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": "What is the weather like in Paris today?"}],
    tools=tools,
    stream=True
)

for chunk in stream:
    delta = chunk.choices[0].delta
    print(delta.tool_calls)
```

Output of delta.tool_calls

```text
[{"index": 0, "id": "call_DdmO9pD3xa9XTPNJ32zg2hcA", "function": {"arguments": "", "name": "get_weather"}, "type": "function"}]
[{"index": 0, "id": null, "function": {"arguments": "{\"", "name": null}, "type": null}]
[{"index": 0, "id": null, "function": {"arguments": "location", "name": null}, "type": null}]
[{"index": 0, "id": null, "function": {"arguments": "\":\"", "name": null}, "type": null}]
[{"index": 0, "id": null, "function": {"arguments": "Paris", "name": null}, "type": null}]
[{"index": 0, "id": null, "function": {"arguments": ",", "name": null}, "type": null}]
[{"index": 0, "id": null, "function": {"arguments": " France", "name": null}, "type": null}]
[{"index": 0, "id": null, "function": {"arguments": "\"}", "name": null}, "type": null}]
null
```

Instead of aggregating the chunks into a single content string, however, you aggregate them into the encoded arguments JSON object.

When the model calls one or more functions, the tool_calls field of each delta will be populated. Each tool_call contains the following fields:

| Field | Description |
|---|---|
| index | Identifies which function call the delta is for |
| id | Tool call ID |
| function | Function call delta (name and arguments) |
| type | Type of the tool_call (always function for function calls) |

Many of these fields are only set in the first delta of each tool call, such as id, function.name, and type.

Here is a code snippet showing how to aggregate the deltas into a final tool_calls object.

Accumulating tool_call deltas

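```python
final_tool_calls = {}

for chunk in stream:
    for tool_call in chunk.choices[0].delta.tool_calls or []:
        index = tool_call.index

        if index not in final_tool_calls:
            # The first delta of each call carries id, type, and function.name
            final_tool_calls[index] = tool_call
        else:
            # Later deltas only carry fragments of the arguments string
            final_tool_calls[index].function.arguments += tool_call.function.arguments
```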

Accumulated final_tool_calls[0]

```json
{
    "index": 0,
    "id": "call_RzfkBpJgzeR0S242qfvjadNe",
    "function": {
        "name": "get_weather",
        "arguments": "{\"location\":\"Paris, France\"}"
    }
}
```
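To see how the accumulation works without calling the API, the sketch below replays the sample deltas shown earlier as plain dictionaries (the SDK yields objects with the same fields as attributes) and merges them. It also prints the function name as soon as it arrives, the kind of progress display mentioned at the start of this section. The accumulate helper is hypothetical; unlike the snippet above, it builds a fresh dictionary for each call instead of mutating the first delta.

```python
import json

# Deltas copied from the sample output above, as plain dictionaries.
deltas = [
    [{"index": 0, "id": "call_DdmO9pD3xa9XTPNJ32zg2hcA",
      "function": {"arguments": "", "name": "get_weather"}, "type": "function"}],
    [{"index": 0, "id": None, "function": {"arguments": "{\"", "name": None}, "type": None}],
    [{"index": 0, "id": None, "function": {"arguments": "location", "name": None}, "type": None}],
    [{"index": 0, "id": None, "function": {"arguments": "\":\"", "name": None}, "type": None}],
    [{"index": 0, "id": None, "function": {"arguments": "Paris", "name": None}, "type": None}],
    [{"index": 0, "id": None, "function": {"arguments": ",", "name": None}, "type": None}],
    [{"index": 0, "id": None, "function": {"arguments": " France", "name": None}, "type": None}],
    [{"index": 0, "id": None, "function": {"arguments": "\"}", "name": None}, "type": None}],
    None,  # the final chunk carries no tool_calls
]


def accumulate(delta_stream):
    """Merge streamed tool-call deltas into complete calls, keyed by index."""
    calls = {}
    for tool_calls in delta_stream:
        for delta in tool_calls or []:
            call = calls.setdefault(delta["index"], {
                "id": None, "type": None, "function": {"name": None, "arguments": ""},
            })
            # id, type and name arrive only once; keep the first non-null value.
            call["id"] = call["id"] or delta["id"]
            call["type"] = call["type"] or delta["type"]
            fn = delta["function"]
            if fn["name"]:
                call["function"]["name"] = fn["name"]
                print(f"calling {fn['name']}...")  # progress as soon as the name is known
            # Arguments arrive as string fragments that must be concatenated.
            call["function"]["arguments"] += fn["arguments"] or ""
    return calls


final = accumulate(deltas)
print(json.loads(final[0]["function"]["arguments"]))  # {'location': 'Paris, France'}
```

Parse the arguments with json.loads only after the stream ends; the intermediate fragments are not valid JSON on their own.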

© Copyright notes: this article is copyright AI Sharing Circle, all rights reserved. Please do not reproduce it without permission.
